My 2011 presentation on Stuxnet was meant to highlight a few basic concepts. Here are two:

- Sophisticated attacks are ones we are unable to explain clearly. Spoons are sophisticated to babies. Spoons are not sophisticated to long-time chopstick users. It is a relative measure, not an absolute one. As we increase our ability to explain and use things they become less sophisticated to us. Saying something is sophisticated really is to communicate that we do not understand it, although that may be our own fault.

- Original attacks are ones we have not seen before. It also is a relative measure, not an absolute one. As we spend more time researching and observing things, fewer things will be seen as original. In fact with just a little bit of digging it becomes hard to find something completely original rather than evolutionary or incremental. Saying something is original therefore is to say we have not seen anything like it before, although that may be our own fault.

Relativity is the key here. Ask yourself if there is someone to easily discuss attacks with to make them less sophisticated and less original. Is there a way to be less in awe and more understanding? It’s easy to say “oooh, spoon” and it should not be that much harder to ask “anyone seen this thing before?”

Here’s a simple thought exercise:

Given that we know critical infrastructure is extremely poorly defended, and given that we know control systems are by design simple, would an attack designed for simple systems behind simple security therefore be sophisticated? My argument is usually no, that by design the technical aspects of compromise tend to be a low bar…perhaps especially in Iran.

Since the late 1990s I have been doing assessments inside utilities and I have not yet found one hard to compromise. However, there still is a sophisticated part, where research and skills definitely are required. Knowing exactly how to make an ongoing attack invisible and getting the attack specific to a very intended result, that is a level above getting in and grabbing data or even causing harm.

An even more advanced attack makes the traces of the attack invisible. So there definitely are ways to bring the level of sophistication and uniqueness up substantially, from “oooh, spoon” to “I have no idea if that was me that just did that”. I believe this has become known as the Mossad-level attack, at which point defense is not about technology.

I thought with my 2011 presentation I could show how a little analysis makes major portions of Stuxnet less sophisticated and less original; certainly it was not the first of its kind and it is arguable how targeted it was as it spread.

The most sophisticated aspects to me were in that it was moving through many actors across boundaries (e.g. Germany, Iran, Pakistan, Israel, US, Russia) requiring knowledge inside areas not easily accessed or learned. Ok, let’s face it. It turns out that thinking was on the right path, albeit an important role was backwards and I wasn’t sure where it would lead.

A US ex-intel expert mentioned on Twitter during my talk I had “conveniently” ignored motives. This is easy for me to explain: I focus on consequences as motive is basically impossible to know. However, as a clue that comment was helpful. I wasn’t thinking hard enough about the economic-espionage aspect that US intelligence agencies have revealed as a motivator. Recent revelations suggest the US was angry at Germany allowing technology into Iran. I had mistakenly thought Germany would have been working with the US, or Israel would have been able to pressure Germany. Nope.

Alas a simple flip of Germany’s role (critical to good analysis and unfortunately overlooked by me) makes far more sense because they (less often but similar to France) stand accused of illicit sales of dangerous technology to enemies of the US (and of its friends). It also fits with accusations I have heard from a US ex-intel expert that someone (i.e. Atomstroyexport) tipped-off the Germans, an “unheard of” first responder to research and report Stuxnet. The news cycles actually exposed Germany’s ties to Iran and potentially changed how the public would link similar or follow-up action.

But this post isn’t about the interesting social science aspects driving a geopolitical technology fight (between Germany/Russia and Israel/US over Iran’s nuclear program), it’s about my failure to make enough of an impression to add perspective. So I will try again here. I want to address an odd tendency of people to continue to report Stuxnet as the first ever breach of its type. This is what the BSI said in their February 2011 Cyber Security Strategy for Germany (page 3):

Experience with the Stuxnet virus shows that important industrial infrastructures are no longer exempted from targeted IT attacks.

No longer exempted? Targeted attacks go back a long way as anyone familiar with the NIST report on the 2000 Maroochy breach should be aware.

NIST has established an Industrial Control System (ICS) Security Project to improve the security of public and private sector ICS. NIST SP 800-53 revision 2, December 2007, Recommended Security Controls for Federal Information Systems, provides implementing guidance and detail in the context of two mandatory Federal Information Processing Standards (FIPS) that apply to all federal information and information systems, including ICSs.

Note an important caveat in the NIST report:

…“Lessons Learned From the Maroochy Water Breach” refer to a non-public analytic report by the civil engineer in charge of the water supply and sewage systems…during time of the breach…

These non-public analytic reports are where most breach discussions take place. Nonetheless, there never was any exemption and there are public examples of ICS compromise and damage. NIST gives Maroochy from 2000. Here are a few more ICS attacks to consider and research:

- 1992 Portland/Oroville – Widespread SCADA Compromise, Including BLM Systems Managing Dams for Northern California

- 1992 Chevron – Refinery Emergency Alert System Disabled

- 1992 Ignalina, Lithuania – Engineer installs virus on nuclear power plant ICS

- 1994 Salt River – Water Canal Controls Compromised

- 1999 Gazprom – Gas Flow Switchboard Compromised

- 2000 Maroochy Shire – Water Quality Compromised

- 2001 California – Power Distribution Center Compromised

- 2003 Davis-Besse – Nuclear Safety Parameter Display Systems Offline

- 2003 Amundsen-Scott – South Pole Station Life Support System Compromised

- 2003 CSX Corporation – Train Signaling Shutdown

- 2006 Browns Ferry – Nuclear Reactor Recirculation Pump Failure

- 2007 Idaho Nuclear Technology & Engineering Complex (INTEC) – Turbine Failure

- 2008 Hatch – Contractor software update to business system shuts down nuclear power plant ICS

- 2009 Carrell Clinic – Hospital HVAC Compromised

- 2013 Austria/Germany – Power Grid Control Network Shutdown

Fast forward to December 2014 and a new breach case inside Germany comes out via the latest BSI report. It involves ICS so the usual industry characters start discussing it.

Immediately I tweet for people to take in the long-view, the grounded-view, on German BSI reports.

Alas, my presentation in 2011 with a history of breaches and my recent tweets clearly failed to sway, so I am here blogging again. I offer as an example of my failure the following headlines that really emphasize a “second time ever” event.

- A Cyberattack Has Caused Confirmed Physical Damage for the Second Time Ever, Wired, January 8, 2015

This is only the second confirmed case in which a wholly digital attack caused physical destruction of equipment. The first case, of course, was Stuxnet, the sophisticated digital weapon the U.S. and Israel launched

- For The Second Time Ever, A Cyberattack Causes Physical Damage: It’s the dawn of a new kind of war, PopSci, January 8, 2015

The terrifying specter of a future of cyberattacks is that someday, a malicious actor will reach through the internet and cause real, tangible, physical harm. It sounds like a Hollywood plot: a computer is compromised, and suddenly the machinery of a factory is broken. Yet despite the panic, until recently there’s only been one such confirmed case. That was Stuxnet, an American-made virus…

- Stuxnet-like cyberattack on German steel plant deepens security concerns, Homeland Security News Wire, January 9, 2015

The attack on the steel plant is only the fourth known attack specifically directed at industrial control systems (ICS) components – and only the second confirmed digital attack – the first was Stuxnet — to have caused physical damage to machinery and equipment.

That list of four in the last article is interesting. It sets the article apart from the other two headlines, yet it also claims “and only the second confirmed digital attack”? That’s clearly a false statement.

Anyway Wired appears to have crafted their story in a strangely similar fashion to another site; perhaps too similar to a Dragos Security blog post a month earlier (same day as the BSI tweets above).

This is only the second time a reliable source has publicly confirmed physical damage to control systems as the result of a cyber-attack. The first instance, the malware Stuxnet, caused damage to nearly 3,000 centrifuges in the Natanz facility in Iran. Stories of damage in other facilities have appeared over the years but mostly based on tightly held rumors in the Industrial Control Systems (ICS) community that have not been made public. Additionally there have been reports of companies operating in ICS being attacked, such as the Shamoon malware which destroyed upwards of 30,000 computers, but these intrusions did not make it into the control system environment or damage actual control systems. The only other two widely reported stories on physical damage were the Trans-Siberian-Pipeline in explosion in 1982 and the BTC Turkey pipeline explosion in 2008. It is worth noting that both stories have come under intense scrutiny and rely on single sources of information without technical analysis or reliable sources. Additionally, both stories have appeared during times where the reporting could have political motive instead of factuality which highlights a growing concern of accurate reporting on ICS attacks. The steelworks attack though is reported from the German government’s BSI who has both been capable and reliable in their reporting of events previously and have the access to technical data and first hand sources to validate the story.

Now here is someone who knows what they are talking about. Note the nuance and details in the Dragos text. So I realize my problem is with a Dragos post regurgitated a month later by Wired without attribution; look at how all the qualifiers disappeared in translation. Wired looks preposterous compared to this more thorough reporting.

The Dragos opening line is a great study in how to set up a series of qualifications before stepping through them with explanations:

This is only the second time a reliable source has publicly confirmed physical damage to control systems as the result of a cyber-attack

The phrase has more qualifications than Lance Armstrong:

- Has to be a reliable source. Not sure who qualifies that.

- Has to be publicly confirmed. Does this mean a government agency or the actual victim admitting breach?

- Has to be physical damage to control systems. Why control systems themselves, not anything controlled by systems? Because ICS security blog writer.

- Has to result from cyber-attack. They did not say malware so this is very broad.

Ok, Armstrong had more than four… Still, the Wired phrase by comparison uses dangerously loose adaptations and drops half. Wired wrote “This is only the second confirmed case in which a wholly digital attack caused physical destruction of equipment” and that’s it. Two qualifications instead of four.

So we easily can say Maroochy was a wholly digital attack that caused physical destruction of equipment. We reach the Wired bar without a problem. We’d be done already and Stuxnet proved to not be the first.

Dragos is harder. Maroochy also was from a reliable source, was publicly confirmed, and resulted from a packet-radio attack (arguably cyber). The only thing left to qualify is physical damage to control systems. I think the Dragos bar is set oddly high to say the control systems themselves have to be damaged. Granted, ICS management will consider ICS damage differently than external harms; this is true in most industries, although you would expect it to be the opposite in ICS. To the vast majority, news of 800,000 released liters of sewage obviously qualifies as physical damage. So Maroochy would still qualify. Perhaps more to the point, the BSI report says the furnace was set to an unknown state, which caused breakdown. Maroochy had its controls manipulated to an unknown state, albeit not damaging the controls themselves.
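To make the two bars concrete, here is a minimal sketch in Python that writes the Wired and Dragos qualifiers as checks and runs Maroochy through them. The class, the function names and the `controls_only` switch are my own illustration, and the true/false flags simply encode my reading of Maroochy as argued above; they are not an independent finding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    name: str
    reliable_source: bool     # who reported it
    publicly_confirmed: bool
    cyber_attack: bool        # "wholly digital" in the Wired wording
    physical_damage: bool     # any physical harm caused by the attack
    controls_damaged: bool    # the control systems themselves were damaged


def meets_wired_bar(incident: Incident) -> bool:
    """Confirmed case of a digital attack that caused physical damage."""
    return (incident.publicly_confirmed
            and incident.cyber_attack
            and incident.physical_damage)


def meets_dragos_bar(incident: Incident, controls_only: bool = True) -> bool:
    """All four Dragos qualifiers; controls_only=False accepts any physical damage."""
    damage = incident.controls_damaged if controls_only else incident.physical_damage
    return (incident.reliable_source
            and incident.publicly_confirmed
            and incident.cyber_attack
            and damage)


# Flags follow the reading of Maroochy given in this post (an assumption).
maroochy = Incident(
    name="Maroochy Shire (2000)",
    reliable_source=True,
    publicly_confirmed=True,
    cyber_attack=True,        # packet radio, arguably cyber
    physical_damage=True,     # released sewage
    controls_damaged=False,   # controls manipulated, not damaged
)

print(meets_wired_bar(maroochy))                        # True
print(meets_dragos_bar(maroochy))                       # False
print(meets_dragos_bar(maroochy, controls_only=False))  # True
```

The only flag that separates the outcomes is whether damage must be to the controls themselves, which is exactly the qualifier I am arguing is set oddly high.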

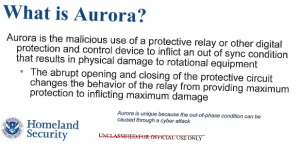

If anyone is going to hang their hat on damage to control systems, then perhaps they should refer to it as an Aurora litmus, given the infamous DHS study of substations in 2007 (840pg PDF).

The concern with Aurora, if I understood the test correctly, was not to just manipulate the controls. It was to “exploit the capability of modern protective equipment and cause them to serve as a destructive weapon”. In other words, use the controls that were meant to prevent damage to cause widespread damage instead. Damage to just controls themselves without wider effect would be a premature end to a cyber-physical attack, albeit a warning.

I’d love to dig into that BTC Turkey pipeline explosion in 2008, since I worked on that case at the time. I agree with the Dragos blog it doesn’t qualify, however, so I have to move on. Before I do, there is an important lesson from 2008.

Suffice it to say I was on press calls and I gave clear and documented evidence to those interviewed about cyber attack on critical infrastructure. For example, the Georgia official complaint listed no damage related to cyber attack. The press instead ran a story, without doing any research, using hearsay that Russia knocked the Georgian infrastructure off-line with cyber attack. That often can be a problem with the press and perhaps that is why I am calling Wired out here for their lazy title.

Let’s look at another example, the 2007 TCAA, from a reliable source, publicly confirmed, causing damage to control systems, caused by cyber-attack:

Michael Keehn, 61, former electrical supervisor with Tehama Colusa Canal Authority (TCAA) in Willows, California, faces 10 years in prison on charges that he “intentionally caused damage without authorization to a protected computer,” according to Keehn’s November 15 indictment. He did this by installing unauthorized software on the TCAA’s Supervisory Control and Data Acquisition (SCADA) system, the indictment states.

Perfect example. Meets all four criteria. Sounds bad, right? Aha! Got you.

Unfortunately this incident turns out to be based only on an indictment turned into a news story, repeated by others without independent research. Several reporters jumped on the indictment, created a story, and then moved on. Dan Goodin probably had the best perspective, at least introducing skepticism about the indictment. I put the example here not only to trick the reader, but also to highlight how seriously I take the question of “reliable source”.

Journalists often unintentionally muddy waters (pun not intended) and mislead; they can move on as soon as the story goes cold. What stake do they really have when spinning their headline? How much accountability do they hold? Meanwhile, those of us defending infrastructure (should) keep digging for truth in these matters, because we really need it for more than talking points; we need it to improve our defenses.

I’ve read the court documents available and they indicate a misunderstanding about software developer copyright, which led to a legal fight, all of which has been dismissed. In fact the accused wrote a book afterwards called “Anatomy of a Criminal Indictment” about how to successfully defend yourself in court.

In 1989 he applied for a job with the Tehama-Colusa Canal Authority, a Joint Powers Authority who operated and maintained two United States Bureau of Reclamation canals. During his tenure there, he volunteered to undertake development of full automated control of the Tehama-Colusa Canal, a 110-mile canal capable of moving 2,000 cfs (cubic feet of water per second). It was out of this development for which he volunteered to undertake, that resulted in a criminal indictment under Title 18, Part I, Chapter 47, Section 1030 (Fraud and related activity in connection with computers). He would be under indictment for three years before the charges were dismissed. During these three years he was very proactive in his own defense and learned a lot that an individual not previously exposed would know about. The defense attorney was functioning as a public defender in this case, and yet, after three years the charges were dismissed under a motion of the prosecution.

One would think reporters would jump on the chance to highlight the dismissal, or promote the book. Sadly the only news I find is about the original indictment. And so we still find the indictment listed by information security references as an example of ICS attack, even though it was not. Again, props to the Dragos blog for being skeptical about prior events. I still say, aside from Maroochy, we can prove Stuxnet was not the first public case.

The danger in taking the wide-view is that it increases the need to understand far more details and do more deep research to avoid being misled. The benefit, as I pointed out at the start, is we significantly raise the bar for what is considered sophisticated or original attacks.

In my experience Stuxnet is a logical evolution, an application of accumulated methods within a context already well documented and warned about repeatedly. I believe putting it back in that context makes it more accessible to defenders. We need better definitions of physical damage and cyber, let alone reputable sources, before throwing around firsts and seconds.

Yes, malware that deviates from normal can be caught, even unfamiliar malware, if we observe and respond quickly to abnormal behavior. Calling Stuxnet the “first” will perhaps garner more attention, which is good for eyeballs on headlines. However it also delays people from realizing how it fits a progression; is the adversary introducing never-seen-before tools and methods or are they just extremely well practiced with what we know?

The latest studies suggest how easy, almost trivial, it would be for security analysts monitoring traffic as well as operations to detect Stuxnet. Regardless of the 0day, the more elements of behavior monitored the higher the attacker has to scale. Companies like ThetaRay have been created on this exact premise, to automate and reduce the cost of the measures a security analyst would use to protect operations. (It is already a crowded market.)
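As a rough illustration of that premise, here is a minimal sketch in Python of baseline-and-deviation monitoring across several signals. It is not ThetaRay's method or anyone's product; the signal names, the sample values and the three-standard-deviation threshold are all made up for the example.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Map each signal name to (mean, stdev) over normal-operation samples."""
    return {name: (mean(vals), stdev(vals)) for name, vals in history.items()}


def abnormal_signals(baseline, reading, threshold=3.0):
    """Return the monitored signals in `reading` that deviate from baseline
    by more than `threshold` standard deviations."""
    flagged = []
    for name, (mu, sigma) in baseline.items():
        if name not in reading:
            continue  # this signal is not present in the reading
        deviation = abs(reading[name] - mu)
        if sigma == 0:
            is_abnormal = deviation > 0
        else:
            is_abnormal = deviation / sigma > threshold
        if is_abnormal:
            flagged.append(name)
    return flagged


# Hypothetical samples from normal operation (invented values).
history = {
    "drive_frequency_hz": [1000, 1002, 998, 1001, 999, 1000],
    "controller_writes_per_hour": [2, 3, 2, 2, 3, 2],
    "outbound_connections_per_hour": [5, 6, 5, 4, 5, 6],
}

# The reported frequency looks normal (perhaps replayed), but writes to the
# controller spike.
reading = {
    "drive_frequency_hz": 1001,
    "controller_writes_per_hour": 40,
    "outbound_connections_per_hour": 5,
}

wide = build_baseline(history)
narrow = build_baseline({"drive_frequency_hz": history["drive_frequency_hz"]})

print(abnormal_signals(wide, reading))    # ['controller_writes_per_hour']
print(abnormal_signals(narrow, reading))  # []
```

Watching only the process value misses the change; adding operational signals means the attacker has to keep every one of them looking normal at the same time.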

That’s the way I presented it in 2011 and little has changed since then. Perhaps the most striking attempt to make Stuxnet stand out that I have heard lately was from ex-USAF staff; paraphrasing him, Stuxnet was meant to be to Iran what the atom bomb was to Japan. A weapon of mass-destruction to change the course of war and be apologized for later.

It would be interesting if I could find myself able to agree with that argument. I do not. But if I did agree, then perhaps I could point out in recent research, based on Japanese and Russian first-person reports, the USAF was wrong about Japan. Fear of nuclear assault, let alone mass casualties and destruction from the bombs, did not end the war with Japan; rather leadership gave up hope two days after the Soviets entered the Pacific Theater. And that should make you wonder really about people who say we should be thankful for the consequences of either malware or bombs.

But that is obviously a blog post for another day.

Please find below some references for further reading, which all put Stuxnet in broad context rather than being the “first”:

N. Carr, Development of a Tailored Methodology and Forensic Toolkit for Industrial Control Systems Incident Response, US Naval Postgraduate School, 2014

A. Nicholson; S. Webber; S. Dyer; T. Patel; H. Janicke, SCADA security in the light of Cyber-Warfare, 2012

C. Wueest, Targeted Attacks Against the Energy Sector, Symantec, 2014

B. Miller; D. Rowe, A Survey of SCADA and Critical Infrastructure Incidents, SIGITE/RIIT, 2012

C. Baylon; R. Brunt; D. Livingstone, Cyber Security at Civil Nuclear Facilities, Chatham House, 2015