Copenhagen is estimating a $1-2 million gain every day (that’s right, EVERY DAY) when people in the city ride bicycles instead of driving cars. Since the biggest obstacle to cycling is the fact that cars kill those around them either immediately (crashes) or slowly and painfully (disease), the Danes are spending a great deal of money and time to isolate cars and reduce societal harms.

In other words, restricting the violence of cars enables Copenhagen’s population to flourish in multiple ways:

The city’s investment in impressive cycling infrastructure is paying off in multiple ways. For not only are there many health benefits to getting more people to use bikes, there are some serious economic gains too. Cycling is a great, low-impact form of exercise which can build muscle, bone density, and increase cardiovascular fitness. Figures from the finance minister suggests that every time someone rides 1 km on their bike in Copenhagen, the city experiences an economic gain of 4.80 krone, or about 75 US cents. If that ride replaces an equivalent car journey, the gain rises to 10.09 krone per km, or around $1.55. And with 1.4 million km cycled every day, that’s a potential benefit to the city of between $1.05m and $2.17m, daily.
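The WEF arithmetic is easy to check. Below is a minimal sketch using only the figures quoted above. The USD-per-km values are the article’s own conversions from krone, and the constant and function names are illustrative. The upper bound assumes every kilometre ridden replaces a car trip, which is why the article calls it a potential benefit:

```python
# Figures as quoted from the World Economic Forum; names are illustrative.
KM_PER_DAY = 1_400_000                # km cycled in Copenhagen each day
GAIN_USD_PER_KM = 0.75                # gain per km cycled (4.80 krone)
GAIN_USD_PER_KM_REPLACING_CAR = 1.55  # gain per km if the ride replaces a car trip (10.09 krone)


def daily_benefit(km_per_day: float, gain_per_km: float) -> float:
    """Return the city-wide daily benefit in USD."""
    return km_per_day * gain_per_km


# Lower bound: every km counted at the basic cycling gain.
low = daily_benefit(KM_PER_DAY, GAIN_USD_PER_KM)
# Upper bound: assumes every km replaces an equivalent car journey.
high = daily_benefit(KM_PER_DAY, GAIN_USD_PER_KM_REPLACING_CAR)

print(f"${low / 1e6:.2f}m to ${high / 1e6:.2f}m per day")
```

This reproduces the article’s range of $1.05m to $2.17m per day.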

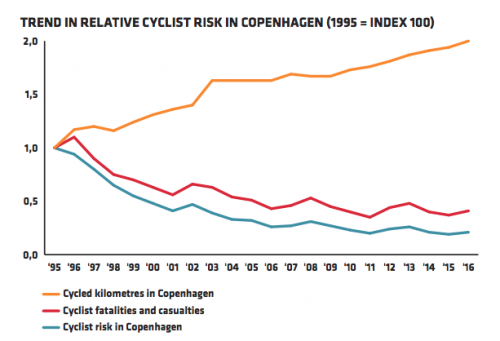

That’s the World Economic Forum reporting these numbers, and perhaps even more impressive is the risk management graph they offer readers. Apparently Copenhagen has invested an average of $10m each year over 13 years in cycling infrastructure, which is now believed to return benefits of $300-600m each year. Here is what the investment return looks like in terms of safety and ridership:
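For scale, here is a back-of-the-envelope comparison of the quoted investment and annual return. It is a simple ratio only. It takes the $300-600m figure as a yearly benefit, as stated, and ignores discounting, inflation, and when the benefits actually arrive. Variable names are illustrative:

```python
# Figures as quoted above from the World Economic Forum; names are illustrative.
ANNUAL_INVESTMENT_USD = 10e6     # average yearly spend on cycling infrastructure
YEARS_INVESTED = 13
ANNUAL_RETURN_LOW_USD = 300e6    # low end of the estimated yearly benefit
ANNUAL_RETURN_HIGH_USD = 600e6   # high end of the estimated yearly benefit

# Total spent over the whole period.
total_investment = ANNUAL_INVESTMENT_USD * YEARS_INVESTED

# One year of benefits compared with the entire 13-year investment
# (no discounting or inflation adjustment).
low_multiple = ANNUAL_RETURN_LOW_USD / total_investment
high_multiple = ANNUAL_RETURN_HIGH_USD / total_investment

# One year of benefits compared with one average year of spending.
low_vs_annual = ANNUAL_RETURN_LOW_USD / ANNUAL_INVESTMENT_USD
high_vs_annual = ANNUAL_RETURN_HIGH_USD / ANNUAL_INVESTMENT_USD

print(f"Total invested: ${total_investment / 1e6:.0f}m")
print(f"One year of benefits = {low_multiple:.1f}x to {high_multiple:.1f}x the total investment")
print(f"One year of benefits = {low_vs_annual:.0f}x to {high_vs_annual:.0f}x a typical year's spending")
```

On the WEF’s own numbers, then, about $130m of total spending is followed by a single year of benefits worth roughly 2.3 to 4.6 times that amount.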

As distance ridden on bicycles goes up, health risks go down significantly across the population. That covers only the risk directly related to cycling. On top of it come further health benefits from improved physical and mental fitness. The World Economic Forum turns to UK data on this point:

…a single ‘cycling city’ worth £377 million to the National Health Service in healthcare cost savings

I wrote the other day about cities around the world that are banning cars altogether from their city centers, some on an accelerated five-year timeline, such as Oslo and Madrid.

Given all the data above, it should come as no surprise that Copenhagen is considering the same road forward: banning cars entirely from some neighborhoods.

In 1997 Sweden set out to cut the number of people killed on its roads to zero. The strategy has produced impressive results, yet nowhere near the zero-death safety it had targeted:

Since the scheme began, road deaths have almost halved: 270 people died in road accidents in Sweden in 2016. Twenty years earlier the figure was 541.
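The Swedish figures quoted above translate into a percentage change as follows. This is a minimal sketch, and `percent_change` is an illustrative helper name:

```python
def percent_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after` (negative means a drop)."""
    return (after - before) / before * 100


# Road deaths in Sweden as quoted: 541 twenty years before 2016, 270 in 2016.
sweden = percent_change(541, 270)
print(f"{sweden:.1f}%")  # prints "-50.1%"
```

That is a drop of about 50%, the same reduction referred to in the America section.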

America

Don’t worry Americans, we also have a few car-free neighborhoods, believe it or not.

America lags so far behind on this topic that its numbers are in a completely different ballpark. While Sweden is annoyed that it has seen only a 50% reduction in deaths from cars, some US states are actually tracking increases.

Texas

The state that calls itself the “Lone Star” state in honor of its secession from Mexico to preserve slavery apparently is aborting human life at an alarming rate by repeatedly failing to address cars as a threat to health.

NSC estimates traffic fatalities in Texas have jumped 7 percent from 2015 to 2017

This is neither normal nor acceptable, and the numbers could just as easily be going in the other direction.

American cities in places like Texas stand in stark contrast to the quality-of-life stories from around the world, especially Scandinavia, which highlight giving people the freedom to live without being unjustly harmed. The automobile industry is going through a transformation, and it would be wise to learn from these leaders, gaining trust in urban areas committed to freedom and justice through respect for diverse ideas and modes of movement.

American transit managers in the southern states who watch their neighbors and friends get killed by drivers without feeling any guilt should, in the near future, be about as common as politicians today who would look the other way at slave drivers.

New York City

NYC shows the rest of America what needs to be done by deploying solutions similar to those proven in Denmark and Sweden:

NSC estimates traffic fatalities in New York fell 3 percent last year and have dropped 15 percent over the last two years. Safety advocates say the decline may be due to New York City’s push to eliminate traffic deaths by lowering speed limits, adding bike lanes and more pedestrian shelters.

“Changes like those being made in New York can save lives,” said [Deborah Hersman, CEO of the National Safety Council]

When NYC publishes the financial and healthcare benefits that come from fewer cars, maybe it will help steer the discussion forward in Texas. That seems unlikely, though, as Texans seem less pro-life than convinced that their success is measured by their ability to collect and carelessly operate things that kill others.

My favorite part of a study of where to live in America without danger from cars is actually its disclaimer at the beginning of the list:

New York City is not included in this listing. If it was, neighborhoods from that city would dominate the entire list. In fact, you could place the whole of Manhattan on this list as only 5% of residents use a car for their daily trips

With the most successful city out of the running, the list goes on to recommend living in the Tenderloin in SF.

The Tenderloin?

San Francisco

The author clearly hasn’t tried riding down the Tenderloin’s infamous Golden Gate corridor, where cars park or drive in the bike lanes. It’s a miserable place to ride a bike or walk.

Why anyone would exclude the best option in America and then recommend living in a filthy, run-down neighborhood with awful bike and pedestrian access is beyond the scope of this blog post. But it does show that American analysts often don’t understand transit.

First, they don’t factor in overall health improvements from car-free urban spaces. They just draw a circle around transit stations and measure nothing else. That isn’t how this works.

Second, when drawing that circle they think like car drivers, assuming you are better off living directly above a subway station, as if it were a straight substitute for having a car in your garage. Remember from the start of this post how distance traveled on foot or by bike leads to multiple financial and health improvements?

Forget about the model where you roll out of bed and stumble into an elevator that drops you into a car so you can avoid using a muscle. That’s the wrong quality-of-life model.

Notice that the author admits these analytical errors without even realizing it:

…this area of San Francisco is known for drugs and crime, it is surrounded by very desirable places to live. It’s also lies adjacent to the rapid transit line, BART

Yeah, go live in the desirable places surrounding the transit line, not inside the train station. Moreover, let’s be honest: the author also regurgitates an old American white supremacist trope, probably without even knowing it.

All of San Francisco is known for drugs, and crime is widespread. You literally can’t find a neighborhood in SF that is free of drugs. This traces back to the rather sad fact that Nixon’s racist “war on drugs” still lives on, giving people the impression that urban areas are dangerous because of “drugs and crime” (Nixon’s propagandist way of saying blacks and pacifists).

We knew we couldn’t make it illegal to be either against the war or black, but by getting the public to associate the hippies with marijuana and blacks with heroin, and then criminalizing both heavily, we could disrupt those communities [by turning them into rubble and building highways through]

In fact, the only reason Highway 101 abruptly ends at Octavia and does not cut through the Haight (a formerly black neighborhood) and Golden Gate Park as planned is that civil-rights protests blocked the “disruption” of road construction for white-flight suburbs. To this day no highway runs through urban SF, because quality-of-life protests against it meant the successful rejection of white supremacist propaganda, which meant streets and houses instead of overpasses and parking lots.

The Tenderloin is no more dangerous than the Mission, which also lies “adjacent to the rapid transit line”, and it certainly is not more dangerous than the Marina if you are measuring the risk of being raped by a white football player who just moved to SF to party and “get some” before being appointed Vice President or to the Supreme Court.

Nixon was elected because he said things like blacks can’t handle drugs, and he enacted policies to incarcerate blacks but not whites for the same behaviors. And that’s just a modern version of America First, which in the early 1900s under President Wilson argued that non-whites (Irish, Germans, Blacks…) couldn’t handle liquor.

Prohibition was passed to destroy black lives, while whites could continue producing and drinking thanks to notes from wealthy, well-connected doctors citing “medicinal” reasons.

Anyway, if you want to cite SF, look at SOMA. It sits at the head of the Caltrain line, next to the new high-speed rail station, and on the new north-south local transit line, which will feed into BART. It is also on the water with easy access to the ferry.

SOMA has far better pedestrian, cycling, and transit options than the Tenderloin or any other neighborhood in the city. Historically this traces back to SOMA’s amazing trolley grid, before car enthusiasts ripped it all up to drive up air and noise pollution and cause traffic jams, their preferred lifestyle.