Update August 2020: Latest research can be found in a new blog post called Cultural Spectrum of Trust.

From 2006 to 2010, my mother and I presented a linguistic analysis of Advance Fee Fraud (the 419 scam).

One of the key findings we revealed (also explained in other blog posts and our 2006 paper) is that intelligence does not prevent someone from being vulnerable to simple linguistic attacks. In other words, highly successful and intelligent analysts have a predictable blind spot that leads them to mistakes in attribution.

The title of the talk was usually “There’s No Patch for Social Engineering” because I focused on helping users avoid being lured into phishing scams and fraud. We had very little press attention and, in retrospect, instead of raising awareness through talks and papers alone (the peer review model), we perhaps should have open-sourced a linguistic engine for detecting fraud. I suppose it depends on how we measure impact.

Despite the lack of journalist interest, we received a lot of positive feedback from attendees: investigators, researchers and analysts. That felt like success. After presenting at the High Technology Crime Investigation Association (HTCIA), for example, I had several ex-law enforcement and intelligence officers thank me profusely for explaining in detail, and with data, how intelligence can actually make someone more prone to misattribution and more likely to fall victim to bias-laced attacks. They suggested we go inside agencies to train staff behind closed doors.

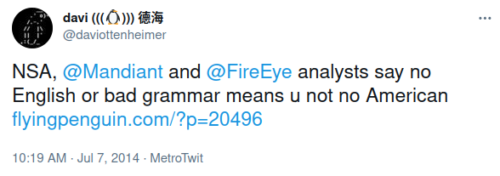

In other words, since long before the Sony breach news started breaking, I have tried to raise awareness of the importance of linguistic analysis for attribution, as I tweeted here.

I’m told my sense of humor doesn’t translate well under the constraints of Twitter.

Recently the significance of our work has taken a new turn: my blog post from 2012 is seeing a spike in interest right now, coupled with news about linguistics being used to analyze Sony attack attribution. Ironically, the news is by a “journalist” at the NYT who blocked me on Twitter.

I’m told by friends she blocked me after I used a Modified Tweet (MT) to parody her headline.

Allegedly she didn’t find my play on words amusing, but a block seems kind of extreme for that MT if you ask me.

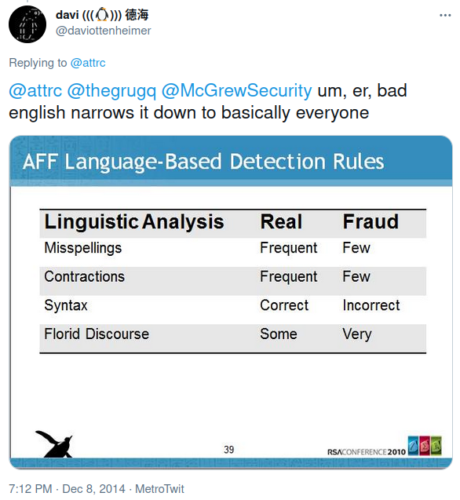

Then, as the Sony breach story started breaking on December 8, I tweeted a slide from our 2010 presentation.

Also, recently I tweeted:

good analysis causes anti-herding behavior: “separates social biases introduced by prior ratings from true value”

Tweets, unfortunately, are disjointed and reach a far smaller audience than my blog posts, so perhaps it is time to return to this topic here instead? I am thus posting the full presentation again:

Download: RSAC_SF_2010_HT1-106_Ottenheimer.pdf

I look forward to discussing this topic further, as it definitely needs more attention in the information security community. Kudos to Jeffrey Carr for pursuing the topic and for the invitation to participate in the crowds that have been rushing into the Sony breach analysis fray with linguistics.

Updated to add: Perhaps it would also be appropriate to mention here my mother’s book, The Anthropology of Language: An Introduction to Linguistic Anthropology.

Ottenheimer’s authoritative yet approachable introduction to the field’s methodology, skills, techniques, tools, and applications emphasizes the kinds of questions that anthropologists ask about language and the kinds of questions that intrigue students. The text brings together the key areas of linguistic anthropology, addressing issues of power, race, gender, and class throughout. Further stressing the everyday relevance of the text material, Ottenheimer includes “In the Field” vignettes that draw you in to the chapter material via stories culled from her own and others’ experiences, as well as “Doing Linguistic Anthropology” and “Cross-Language Miscommunication” features that describe real-life applications of text concepts.