The great myth of AI is that it will improve over time.

Why?

I get it. As I warned about AI in 2012, people want to believe in magic. A narwhal tusk becomes a unicorn. A dinosaur bone becomes a griffin. All fake, all very profitable and powerful in social control contexts.

What if I told you Tesla has been building an AI system that encodes and amplifies worsening danger, through contempt for rules, safety standards, and other people’s lives?

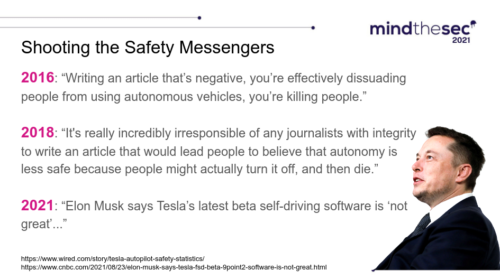

People want to believe in the “magic” of Tesla, but there’s a sad truth finally coming to the surface. Elon Musk has been promising for ten years that AI will make his cars driverless “a year from now”, as if Americans can’t recognize snake oil of the purest form.

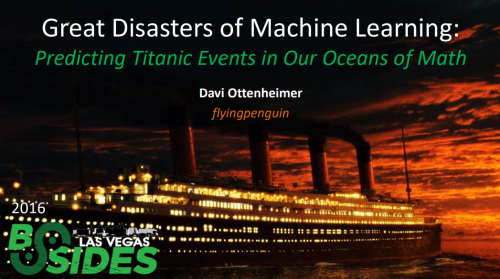

Back in 2016 I gave a keynote talk about Tesla’s algorithms being murderous, implicated in the death of Josh Brown. I predicted it would get much worse, but who back then wanted to believe this disinformation historian’s Titanic warnings?

If there’s one lesson to learn from the Titanic tragedy, it’s that designers believed their engineering made safety protocols obsolete. Musk sold the same lie about algorithms. Both turned passengers into unwitting deadly test subjects.

I’ll say it again now, as I said back then despite many objections: Josh Brown wasn’t killed by a malfunction. The ex-SEAL was killed by a robot executing him as it had been trained.

Ten years later and we have copious evidence that Tesla systems in fact get worse over time.

> NHTSA says the complaints fall into two distinct scenarios. It has had at least 18 complaints of Tesla FSD ignoring red traffic lights, including one that occurred during a test conducted by Business Insider. In some cases, the Teslas failed to stop, in others they began driving away before the light had changed, and several drivers reported a lack of any warning from the car.
>
> At least six crashes have been reported to the agency under its standing general order, which requires an automaker to inform the regulator of any crash involving a partially automated driving system like FSD (or an autonomous driving system like Waymo’s). And of those six crashes, four resulted in injuries.
>
> The second scenario involves Teslas operating under FSD crossing into oncoming traffic, driving straight in a turning lane, or making a turn from the wrong lane. There have been at least 24 complaints about this behavior, as well as another six reports under the standing general order, and NHTSA also cites articles published by Motor Trend and Forbes that detail such behavior during test drives.
>
> Perhaps this should not be surprising. Last year, we reported on a study conducted by AMCI Testing that revealed both aberrant driving behaviors—ignoring a red light and crossing into oncoming traffic—in 1,000 miles (1,600 km) of testing that required more than 75 human interventions.

Let’s just start with the fact that everyone has been saying, since forever, that garbage in, garbage out (GIGO) is a challenge to overcome in AI.

And by that I mean, even common sense standards should have forced headlines about Tesla being at risk of soaking up billions of garbage data points and producing dangerous garbage as a result. It was highly likely, at face value, to become a lawless killing machine of negative societal value. And yet, its stock price has risen without any regard for this common sense test.

Imagine an industrial farmer announcing he was taking over a known dangerous Superfund toxic sludge site to suddenly produce the cleanest corn ever. We should believe the fantasy because why? And to claim that corn will become less deadly the more people eat it and don’t die…? This survivorship fallacy of circular nonsense from Tesla is what Wall Street apparently adores. Perhaps because Wall Street itself is a glorified survivorship fallacy.

Let me break the actual engineering down, based on the latest reports. The AMCI Testing data (more than 75 interventions in 1,000 miles) provides a quantifiable intervention rate. That’s a Tesla needing intervention roughly every 13 miles.

Holy shit, that’s BAD. Like REALLY, REALLY BAD. Tesla is garbage BAD.

Human drivers in the US average one police-reported crash every 165,000 miles. Tesla FSD requires human intervention to prevent violations or crashes roughly 12,000 times more often than human drivers have a police-reported crash.
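For anyone who wants to check the math, here is a minimal sketch of the arithmetic, using only the figures quoted above. The variable names are mine, and since AMCI reported "more than 75" interventions, 75 is a lower bound. Note that the ratio compares interventions against crashes, exactly as the paragraph above does.

```python
# Figures quoted in the text; variable names are illustrative only.
test_miles = 1_000                # AMCI Testing distance
interventions = 75                # reported as "more than 75", so a lower bound
human_miles_per_crash = 165_000   # police-reported crash interval cited above

# Average distance the system covered between human interventions.
miles_per_intervention = test_miles / interventions

# How many interventions fit into one human crash interval.
ratio = human_miles_per_crash / miles_per_intervention

print(f"Miles per intervention: {miles_per_intervention:.1f}")
print(f"Interventions per human crash interval: {ratio:,.0f}")
```

That works out to about 13.3 miles per intervention and a ratio of about 12,375, which is where "roughly 12,000 times" comes from.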

Elon Musk promised investors a 2017 arrival of a product superior to “human performance”, yet in 2025 we see code that is still systematically worse than a drunk teenager.

And, it’s actually even worse than that. Tesla re-releasing a “Mad Max” lawless driving mode in 2025 is effectively a cynical cover-up operation, to double down on deadly failure as normalized outcomes on the road. Mad Max was a killer.

I’ve disagreed with GIGO for as long as I’ve pointed out Tesla will get worse over time. I could explain, but I am not sure a higher bar even matters at this point. There’s no avoiding the fact that the basic GIGO tests show how Tesla was morally bankrupt from day one.

The problem isn’t just that Tesla faced a garbage collection problem, it’s that their entire training paradigm was fundamentally flawed on purpose. They’ve literally been crowdsourcing violations and encoding failures as learned behavior. They have been caught promoting rolling through stop signs, they have celebrated cutting lanes tight, and even ingested a tragic pattern of racing to “beat” red lights without intervention.

That means garbage was being relabeled “acceptable driving.” Like picking up an old smelly steak that falls on the floor and serving it anyway as “well done”. Like saying white nationalists are tired of being called Nazis, so now they want to be known only as America First.

This is different from traditional GIGO risks because the garbage is a loophole that allows a systematic bias shift towards more aggressive, rule-breaking, privileged asshole behavior (e.g. Elon Musk’s personal brand).

The system over time was set up to tune towards narrowly defined aggressive drivers, not the safest ones.

What makes this particularly insidious is the feedback loop I identified back in 2016. The “Mad Max” mode from 2018 resurfacing in 2025 isn’t just marketing; it’s a legal and technical weapon the company deploys strategically.

Explicitly offering a “more aggressive” option means Tesla moves the Overton window while creating plausible deniability: “The system did what users wanted.”

This obscures that their baseline behavior was degraded by training on violations, and reframes the failures within a worse option. Disinformation defined.

Musk’s snake oil promises – that Teslas would magically become safer through fleet learning – require people to believe that more data automatically equals better outcomes. Which is like saying more sugar is going to make you happier. More data only helps if you have labeled ground truth, to know how close to diabetes you are. It also needs a reward function aligned with actual safety, and the ability to detect and correct for systematic biases.

Tesla has none of these.

They have billions of miles of “damn, I can’t believe Tesla got away with it so far, I’m a gangsta cheating death”, which is NOT the same as software that drove the car legally, let alone safely.
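The gap between "got away with it" and "drove legally" can be sketched as two labeling rules applied to the same drives. This is a toy illustration, not Tesla's actual pipeline: the `Clip` class, its fields, and the six clips are all hypothetical, invented to show how an outcome-only label lets violations into the training set.

```python
from dataclasses import dataclass

@dataclass
class Clip:
    # Hypothetical record of one recorded drive segment.
    ran_red_light: bool
    rolled_stop_sign: bool
    crashed: bool

# Invented data for illustration only.
clips = [
    Clip(False, False, False),
    Clip(True, False, False),
    Clip(False, True, False),
    Clip(True, True, False),
    Clip(True, False, True),
    Clip(False, False, False),
]

def broke_rules(clip):
    return clip.ran_red_light or clip.rolled_stop_sign

def outcome_label(clip):
    # "Got away with it": acceptable if nothing bad happened.
    return not clip.crashed

def rule_label(clip):
    # Acceptable only if the drive was also legal.
    return not clip.crashed and not broke_rules(clip)

def violation_share(selected):
    # Fraction of the selected clips that contain a violation.
    return sum(broke_rules(c) for c in selected) / len(selected)

outcome_set = [c for c in clips if outcome_label(c)]
rule_set = [c for c in clips if rule_label(c)]

print(f"Outcome-labeled set: {violation_share(outcome_set):.0%} violations")
print(f"Rule-labeled set:    {violation_share(rule_set):.0%} violations")
```

With this made-up data, three of the five "no crash" clips still contain a violation, while the rule-based label keeps none of them. More miles under the first rule just means more violations filed under "acceptable driving."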

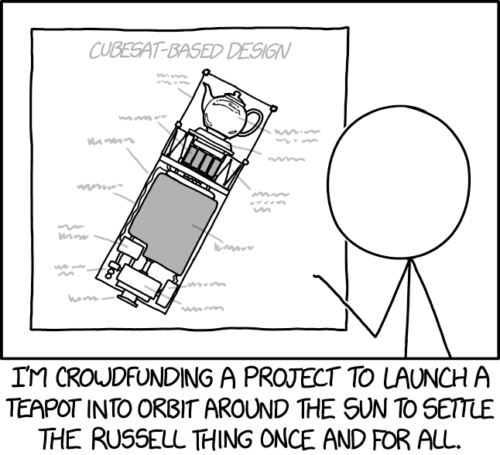

Tesla claimed to be doing engineering (testable, falsifiable, improvable) while actually doing testimonials (anecdotal, survivorship-biased, unfalsifiable). “My Tesla didn’t crash” is not data about safety, it’s the absence of a negative outcome, which is how drunk drivers justify their behavior too… like a teapot orbiting the sun (an unfalsifiable claim based on absence of observed harm).
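To see why "my Tesla didn’t crash" tells you so little, here is a rough sketch of how likely a single owner is to see zero crashes in a year, even if the system were far worse than the human baseline. It assumes crashes arrive as a Poisson process (my simplification), reuses the 165,000-mile figure cited earlier, and picks 10,000 miles per year as a hypothetical annual mileage.

```python
import math

def p_no_crash(miles_driven, miles_per_crash):
    """Probability of zero crashes, assuming a Poisson crash process."""
    return math.exp(-miles_driven / miles_per_crash)

human_miles_per_crash = 165_000   # figure cited earlier in the text
owner_miles = 10_000              # hypothetical annual mileage

# Compare the baseline with systems 10x and 100x worse.
for factor in (1, 10, 100):
    p = p_no_crash(owner_miles, human_miles_per_crash / factor)
    print(f"{factor:>3}x worse than baseline: P(no crash in a year) = {p:.2f}")
```

Under these assumptions, a system ten times worse than the human baseline would still leave more than half of owners with a crash-free year and a testimonial to share.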