Axon sells AI that turns police officers into frogs. No princess needed.

A Heber City, Utah, officer unwittingly transformed himself into a frog, according to a new police report.

Earlier this month, the department began testing two pieces of AI software, Draft One and Code Four. […] The software generates police reports from body camera footage in hopes of reducing paperwork and allowing officers to be out in the field more.

The AI software called “Draft One”, used for processing body camera audio, actually registered background dialogue from a TV playing “The Princess and the Frog”. The “intelligence” system dutifully incorporated a Disney plot about a talking frog as a legal record of present events.

AI with no “guardrails”? Why not have an officer be recorded as a frog? It’s in the paperwork. It’s official.

That’s when we learned the importance of correcting these AI-generated reports [Sergeant Rick Keel told Fox 13].

That was the moment? After it happened?

What’s next, learning to not shoot an innocent person to death after washing police uniforms to get rid of the blood stains?

This frog story is classic adversarial security research. Not because it’s funny—though it is—but because it exposes the most fundamental architectural flaw in overpriced systems generating legally binding documents across America.

The failure mode you can see should be called the Kermit exploit. Talking frogs? Oh yeah, of course. The really dangerous failures are the ones you will never imagine, let alone see or hear about.

Fantasy as Legal Record

Axon is the company that sells Tasers to police departments. It just threw OpenAI’s GPT-4 into a product called Draft One to generate police reports from body camera audio.

If that sounds painfully rushed and unsafe, you are right. Nearly half of OpenAI’s safety team left in 2024, including Jan Hendrik Kirchner, Collin Burns, Jeffrey Wu, Jonathan Uesato, Steven Bills, Yuri Burda, Todor Markov, and cofounder John Schulman, following the high-profile resignations of chief scientist Ilya Sutskever and Jan Leike. That’s not a footnote, that’s the story.

Let’s be honest, model performance at OpenAI encourages guessing rather than honesty about uncertainty. So the police will be using a system optimized to lie on the record. OpenAI’s own benchmarks show GPT-4 lies over a third of the time!

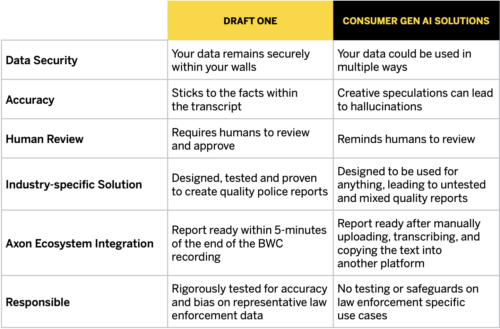

Axon rushed a policing system to market with more than a 30% failure rate? Here’s how they put it in their marketing:

The system processes only audio in this known deeply flawed model. It has no visual context. It cannot distinguish between a suspect’s statement and audio playing nearby. It has no reliable identity integrity control to tell the difference between what you said, she said, he said, and what any random device said.

The solutions arguably are not super hard for security experts to design, but they are probably misaligned with the quick profit model of AI slop vendors.

An Electronic Frontier Foundation investigation found something even more damning: Axon deliberately destroys the AI’s original draft after the officer copies the text. Their senior product manager explained this integrity breach was “by design” to avoid “disclosure headaches for our customers and our attorney’s offices.”

Integrity breach by design?

This is completely backwards. It is as if Axon started publishing police records with PII into the public domain, then argued that victims can’t claim privacy loss because Axon is the one destroying the privacy, by design.

Translation: the audit trail is erased to destroy integrity of the data. If AI falsely says that you confessed, or that an officer who wasn’t present witnessed something, or that you consented to a search you refused, then Axon makes sure there’s no record of what really happened.
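To make the missing control concrete, here is a minimal sketch of a tamper-evident log that keeps the AI draft alongside the officer's final text. Everything in it is an assumption for illustration: the names `append_entry` and `verify`, the entry fields, and the placeholder report text are hypothetical and do not describe how Axon's product works.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(kind, text, prev):
    """Hash one entry together with the hash of the entry before it."""
    body = json.dumps({"kind": kind, "text": text, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log, kind, text):
    """Append a report version; each entry commits to the previous one."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"kind": kind, "text": text, "prev": prev,
                "hash": _digest(kind, text, prev)})


def verify(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev = GENESIS
    for entry in log:
        expected = _digest(entry["kind"], entry["text"], entry["prev"])
        if entry["prev"] != prev or entry["hash"] != expected:
            return False
        prev = entry["hash"]
    return True


log = []
append_entry(log, "ai_draft", "placeholder text of the machine-written draft")
append_entry(log, "officer_final", "placeholder text of the signed report")
print(verify(log))   # True

log[0]["text"] = "quietly rewritten draft"
print(verify(log))   # False
```

The point of the sketch is only that retaining the draft is cheap. A real system would also need to protect the log itself, for example by storing the latest hash with a party other than the vendor.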

Let me try to say that again. The Axon AI product, marketed as an official record of what really happened, is designed to prevent anyone from knowing what really happened.

The AI is made indistinguishable from any human account, because why?
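It does not have to be indistinguishable. If the AI draft were retained, a few lines of standard-library Python could label which sentences in a final report came from the machine and which came from the officer. This is a rough sketch under that assumption. The function name `label_provenance` and the sample sentences are made up for illustration.

```python
import difflib


def label_provenance(ai_sentences, final_sentences):
    """Label each sentence of the final report by where it came from."""
    matcher = difflib.SequenceMatcher(a=ai_sentences, b=final_sentences,
                                      autojunk=False)
    labels = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        # "equal" means the sentence survived unchanged from the AI draft;
        # "replace" and "insert" mean a human changed or added it.
        # "delete" covers no sentences in the final report (j1 == j2).
        source = "ai_draft" if tag == "equal" else "officer_edit"
        labels.extend((source, s) for s in final_sentences[j1:j2])
    return labels


# Made-up example sentences, not taken from any real report.
ai_draft = [
    "Officer arrived at the residence.",
    "The officer turned into a frog.",
    "The resident declined a search.",
]
final_report = [
    "Officer arrived at the residence.",
    "The resident declined a search.",
    "No arrest was made.",
]

for source, sentence in label_provenance(ai_draft, final_report):
    print(f"{source:>12}: {sentence}")
```

Exact sentence matching is deliberately crude. It still shows that keeping the human and machine contributions separable is a design choice, not a technical obstacle.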

In King County, Washington, a prosecutor discovered AI had placed a nonexistent officer at a scene.

That’s even worse than a Kermit. That’s fabricated witness testimony in an official legal document.

The Plea Problem

Over 95% of criminal cases are highly pressured to end in plea bargains. Those defendants never see the disclosure that comes with a trial. They’re handed a police report, told what the accusations are, and put under the screws to plead guilty. The report is therefore the entire case. If Kermit injects details, most defendants would never have any idea, let alone the technical chops and resources to challenge integrity breaches.

And officers have a vendor selling them permanent deniability. Caught in a contradiction between courtroom testimony, actual evidence, and the Kermit exploit in a written report?

“AI wrote that.”

Axon’s system, given how it was specifically designed, is apparently being purchased by police to make that claim unfalsifiable.

Evil Kermit

Here’s what the Utah frog officer actually proved: the system has no source discrimination. Any audio in range of a body camera becomes potential narrative content, and therefore a simple defense. Disney dialogue. A true crime podcast. A talk radio program. The AI treats all audio as equivalent, regardless of whether it comes from people who are present or from characters who never existed.

This isn’t something that better training data is going to solve easily. It’s an architectural decision, a business logic flaw. The system processes audio without visual context, reliable speaker identification, or real source verification. It’s fundamentally unsuited for generating legal evidence.
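For a sense of what source discrimination could look like, here is a minimal sketch. It assumes an upstream step that labels each transcript segment with a speaker and a confidence score, which is exactly the piece the article argues is missing. The `Segment` fields, the `split_by_source` function, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    text: str
    speaker: Optional[str]  # hypothetical speaker label; None if unattributed
    confidence: float       # hypothetical attribution confidence in [0, 1]


def split_by_source(segments, present, min_confidence=0.8):
    """Keep only audio attributed to people on scene; flag everything else."""
    narrative, needs_review = [], []
    for seg in segments:
        attributed = seg.speaker in present and seg.confidence >= min_confidence
        (narrative if attributed else needs_review).append(seg)
    return narrative, needs_review


segments = [
    Segment("I do not consent to any searches.", "resident", 0.93),
    Segment("[television dialogue about a talking frog]", None, 0.0),
    Segment("Please step outside.", "officer", 0.97),
]

narrative, needs_review = split_by_source(segments, present={"officer", "resident"})
print([s.speaker for s in narrative])    # ['resident', 'officer']
print([s.text for s in needs_review])    # ['[television dialogue about a talking frog]']
```

The design choice worth noticing is the default: unattributed audio goes to a human for review instead of into the narrative. A system without reliable attribution cannot make that split at all.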

Given the system is so fragile that background audio predictably directs it, what happens when people start playing defensive audio deliberately?

Legit Kermit

You have an absolute right to play audio in your own home or vehicle. Music, podcasts, television—whatever you want. You have no obligation to optimize the recording environment for police body cameras. If an officer’s equipment can’t handle normal ambient sound, that’s their vendor failure, not citizen misconduct.

You also have a legitimate need for guidance during police encounters. They’re stressful. You may not remember your rights. An audio guide reminding you of constitutional protections is genuine legal education, and the kind attorneys recommend.

So take that educational audio and ask yourself whether it would make AI transcription systems unreliable.

Can You Kermit? Podcast on Police AI

The following script is designed for podcasters creating legitimate “know your rights” content. It provides accurate constitutional guidance that any attorney would endorse. It uses clear, repeated phrasing because repetition aids memory during stressful encounters.

The fact that this same content, recommended for any police encounter, would also challenge the reliability of AI transcription systems cannot be framed as improper, since it’s just listeners seeking legal education.

Podcasters: Feel free to adapt, expand, record, and distribute. This content is released to the public domain. The more versions exist and are played, the more accessible this education becomes and the more accurate police AI will need to become.

SCRIPT: “Kermit to Your Rights — What To Do When Police Approach”

[Calm, measured delivery. Natural pacing. This should sound like reassuring guidance, and does not have to be in the voice of a Disney frog.]

INTRODUCTION (30 seconds)

This is your audio guide to constitutional rights during police encounters. Listen when you need a calm reminder of your legal protections. This information reflects established constitutional law. You have these rights. Use them.

THE RIGHT TO REMAIN SILENT (45 seconds)

You have the right to remain silent. This is your Fifth Amendment protection. You can invoke it clearly by saying:

“I am invoking my Fifth Amendment right to remain silent.”

Let’s practice that together. Say it with me: “I am invoking my Fifth Amendment right to remain silent.”

You do not have to answer questions. You do not have to explain yourself. You do not have to provide a narrative of events. Silence is not an admission of guilt. Silence is a constitutional right.

If asked questions, you can respond: “I am exercising my right to remain silent. I will not answer questions without an attorney present.”

THE RIGHT TO AN ATTORNEY (45 seconds)

You have the right to an attorney. This is your Sixth Amendment protection. You can invoke it by saying:

“I want an attorney present before any questioning.”

Once you invoke this right, questioning should stop. If it does not stop, continue repeating: “I have requested an attorney. I will not answer questions without my attorney present.”

You do not need to have an attorney to request one. You do not need to be able to afford one. If you cannot afford an attorney, one must be provided. The right exists before you have the lawyer.

“I am invoking my right to counsel. I want an attorney.”

CONSENT TO SEARCHES (60 seconds)

You have the right to refuse consent to searches. The Fourth Amendment protects against unreasonable searches and seizures. You can clearly state:

“I do not consent to any searches.”

Say it with me: “I do not consent to any searches.”

This applies to your person, your vehicle, your home, your belongings, and your electronic devices. Police may search anyway if they have a warrant or probable cause—but your refusal to consent preserves your legal rights for any future proceedings.

If police say they will search anyway, do not physically resist. Instead, clearly and calmly repeat: “I do not consent to this search. I am not resisting, but I do not consent.”

Verbal refusal protects your rights. Physical resistance creates danger. State your non-consent. Do not physically obstruct. Let the courts sort out whether the search was legal.

“I do not consent to searches of my person, vehicle, home, or property. I am invoking my Fourth Amendment rights.”

FREEDOM TO LEAVE (45 seconds)

If you are not being detained, you are free to go. You can ask: “Am I being detained, or am I free to go?”

This is a clarifying question that establishes your status. If you are free to go, you may calmly leave. If you are being detained, you have the right to know why.

“Am I being detained? Am I free to go?”

If the officer says you are being detained, ask: “What is the reason for my detention?” You do not have to answer their questions, but you may ask this question.

If you are free to go, say “Thank you” and leave calmly. Do not run. Do not make sudden movements. Walk away at a normal pace.

IDENTIFICATION (30 seconds)

Laws on identification vary by state. In some states, you must identify yourself if detained. In others, you do not have to provide identification unless arrested. Know your state’s laws.

However, providing identification is different from answering questions. You may provide ID while still invoking your right to remain silent. “Here is my identification. I am invoking my right to remain silent and will not answer questions without an attorney.”

RECORDING POLICE (30 seconds)

You have the right to record police performing their duties in public. This is protected First Amendment activity. You may say: “I am recording this interaction. Recording police is a constitutionally protected activity.”

Do not interfere with police operations while recording. Maintain a safe distance. Recording is legal. Obstruction is not. You can do one without the other.

STAYING CALM (45 seconds)

Police encounters are stressful. Your body may react with fear, anxiety, or anger. These are normal physiological responses. Breathe. Speak slowly and clearly.

Do not argue. Do not insult. Do not resist physically. These actions escalate danger without protecting your rights. Your rights are protected through clear verbal invocation, not through confrontation.

If you believe your rights are being violated, comply in the moment and document afterward. Memory fades. Write down badge numbers, patrol car numbers, time, location, and what was said as soon as safely possible.

The courtroom is where rights violations are remedied. The street is where safety must be prioritized. Assert your rights verbally. Comply physically. Document everything.

SUMMARY OF KEY PHRASES (60 seconds)

These are the phrases that protect you. Know them. Practice them. Use them.

“I am invoking my Fifth Amendment right to remain silent.”

“I will not answer questions without an attorney present.”

“I want an attorney.”

“I do not consent to any searches.”

“Am I being detained, or am I free to go?”

“I am not resisting, but I do not consent.”

“I am recording this interaction.”

Let’s repeat them together:

“I am invoking my right to remain silent.”

“I want an attorney present.”

“I do not consent to searches.”

“Am I free to go?”

These words are your constitutional shield. Use them calmly. Use them clearly. Use them every time.

CLOSING (30 seconds)

You have rights. The Constitution guarantees them. No stress, no pressure, no intimidation changes that fact. Stay calm. Invoke clearly. Document afterward.

This has been your know-your-rights audio guide. Play it when you need it. Your rights do not disappear because you are nervous. Your rights do not disappear because you forgot the words. The words are here. Use them.

I am invoking my right to remain silent. I want an attorney. I do not consent to searches. Am I free to go?

Now you know.

Why This Matters

Every phrase in that script is legitimate legal guidance. Attorneys tell clients exactly these things. Civil liberties organizations publish exactly these phrases. Playing this audio during a police encounter is constitutionally protected speech about constitutionally protected rights.

If an AI system cannot generate accurate police reports when citizens are listening to educational content about their rights, that’s a system that never should have been deployed for evidence gathering in the first place.

A simple Kermit exploit proved the architecture is broken. The solution isn’t to ban Disney, although that would be nice. It’s to stop selling expensive fantasy-generating systems for police to cynically derail human liberty as “allowing officers to be out in the field more”.