Oxford professor Michael Wooldridge’s “glorified spreadsheets” speech shows he understands that AI isn’t what people think it is, but his institutional position requires him to frame the problem as a discrete future risk rather than admit a present, constant reality.

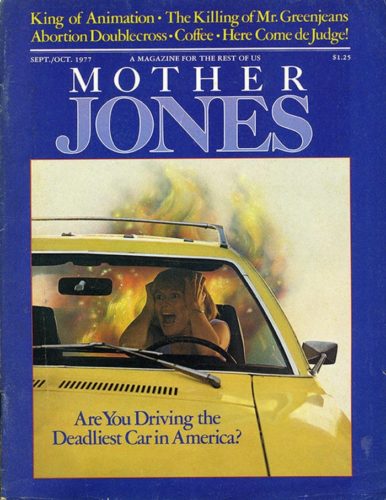

> The race to get artificial intelligence to market has raised the risk of a Hindenburg-style disaster that shatters global confidence in the technology, a leading researcher has warned.
>
> Michael Wooldridge, a professor of AI at Oxford University, said the danger arose from the immense commercial pressures that technology firms were under to release new AI tools, with companies desperate to win customers before the products’ capabilities and potential flaws are fully understood.

The Royal Society lecture circuit doesn’t reward him for saying “the disasters already happened, they are ongoing, and you enabled them, look at yourselves.”

He may as well be trying to convert people to Christianity by saying, “Just wait until you meet Jesus.” Sin now; someday later you can repent.

Waiting for a catastrophe to supply the conviction to act, while the evidence demanding action has been accumulating the entire time, isn’t moral. It’s the same pattern as climate change denial: waiting for some mystical moment of belief instead of reading the data already in hand.

The Hindenburg was not somehow uniquely catastrophic. It killed public sentiment toward airships because it was undeniable. Thirty-six people died on camera in front of reporters. That’s what made it different from every other airship failure: not the scale of harm, but the impossibility of looking away.

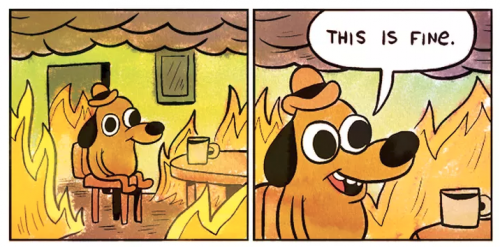

AI failures are designed for the opposite. They’re individualized, distributed, buried in terms of service and corporate liability shields that punch down. UnitedHealth’s algorithm denies claims at scale and patients die at home. Tesla’s software kills owners and pedestrians on public roads. AI-generated police reports fabricate evidence. Chatbots drive people toward self-harm and suicide. Each one is isolated, litigated, settled quietly. No cameras. No film at eleven.

This is a celebrity-only model of societal risk. Elites wait for a signal dramatic enough to care about, while the harms they enabled accumulate below their threshold for paying attention. It’s the same model that treats Pearl Harbor as a motivating catastrophe, as if what mattered were the spoiled beauty of famous Hawaii and the loss of big ships. The actual failure was years of threat assessments ignored, warnings dismissed, and intelligence misread. Willful ignorance has a huge societal cost, and it’s enabled by those who perform it at the top.

Wooldridge is warning about a future singular catastrophe that kills public confidence. The actual pattern is thousands of distributed catastrophes that never coalesce into a single spectacular image, because powerful institutions work to prevent exactly that. Don’t keep waiting for the one dramatic event that will finally wake everyone up. Those who waited for the “big one” with social media, with surveillance Big Tech, with every other integrity breach for thirty years, are still waiting.

The Hindenburg of AI crashes every day and nobody really cares. Just look at Wooldridge.