A new “catalysts” PR push by Tesla fails basic tests of engineering and market reality. I can’t believe “Analysts at Cantor Fitzgerald led by Andres Sheppard” are really this bad at their jobs, but here we are:

“We believe the recent selloff represents an attractive entry point for investors with >12-month investment horizon (and who are comfortable with volatility)….” Sheppard wrote that his bullishness on Tesla was crystallized after he visited the company’s Gigafactory and AI data centers in Austin, Texas.

I’m not even talking about the alleged fraud and cooked books going on right now at Tesla. Where’s that missing $1.4B in cash?

These analysts deserve criticism for seemingly ignoring documented technical limitations and safety concerns in favor of optimistic market projections. Their assessment appears to overlook some obvious and crucial engineering realities. Let’s go through the flawed points, as given to us by someone who admits they just came out of a Potemkin tour of a Tesla factory.

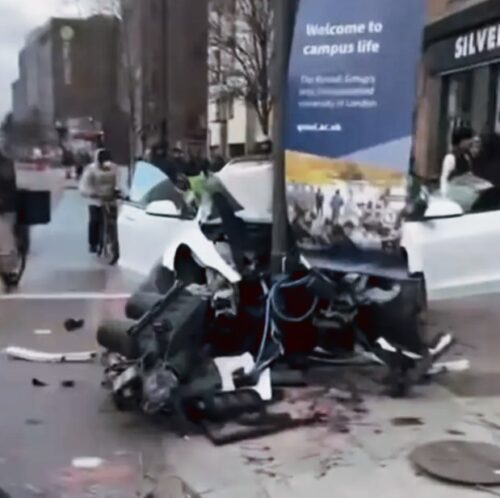

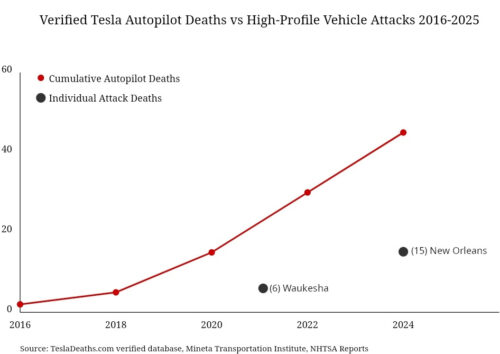

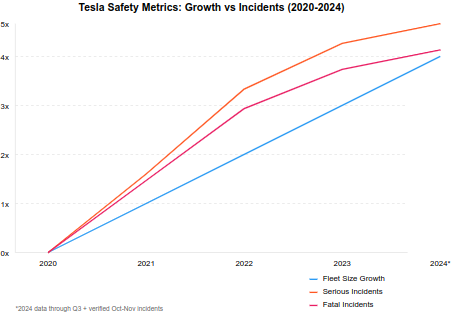

- Robotaxi (Release announced for 2024 on 8/8, an obvious Elon Musk reference to 88 or “Heil Hitler”): Technically unfeasible in any timeframe. Tesla’s autonomous systems have been linked to over 50 deaths already, with fatalities increasing dramatically year over year. The system still fails at the basic object recognition and navigation tasks it has struggled with for years, showing decline instead of improvement. Additionally, given the Tesla CEO’s repeated promotion of Hitler and Nazism, these vehicles would be the face of violent hate, yet they lack any proven security countermeasures against those being targeted by them.

- FSD anywhere but unregulated America: This is an engineering impossibility given basic regulatory frameworks. Tesla’s current system doesn’t meet the technical requirements of any real market’s autonomous vehicle standards. China requires local data storage and processing that Tesla’s architecture was never designed for; you can’t out-surveil the surveillance experts. And EU safety standards demand a respect for engineering quality and the value of human life that neither Tesla management nor its vehicles have. No other car has the death toll of a Tesla; the Cybertruck is literally 17X more dangerous than a Ford Pinto.

- Lower-priced vehicle (Promised forever, since even before the Model S, and never delivered): If you remember, a $25K Model 2 was announced in 2020, the Model 3 was supposed to launch at $35K, and the Cybertruck was supposed to cost $39,900 (it launched at over $60K instead). So much failure. A low-priced Tesla is still a manufacturing and market impossibility on multiple fronts:

- The used Tesla market is already flooded with vehicles at rapidly depreciating prices, cannibalizing any market for new budget models. Tesla vehicles apparently now depreciate at 3X the market average. Compare that with a guy who visited the factory and expects a lift from a new low-cost vehicle: that future isn’t just impossible to imagine, it’s crazy.

- Competitors (BYD, Hyundai/Kia, VW) offer superior quality, features, and reliability at similar price points

- Battery material costs alone rule out a profitable $30,000 Tesla without significant breakthroughs, and Tesla has literally none to speak of compared with the revolutionary announcements made by Nissan, Honda, Toyota…

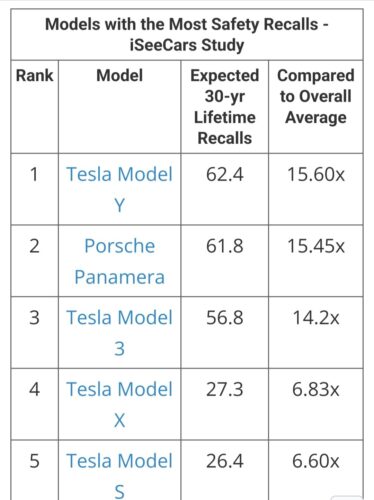

- Tesla’s existing quality issues would likely worsen at lower price points as it cuts costs to maintain margins. A used, low-cost Tesla would likely be significantly less likely to kill its owner than new, untested, lower-quality technology. On that note, Tesla currently has no production line capable of producing anything other than the same overpriced, poorly made products it always has (with recalls over 15X the industry average). Have you read about all the dangerous design and manufacturing defects in the Cybertruck’s computers, frame, panels, suspension…? An even cheaper model seems likely to be even more dangerous, if it worked at all.

Tesla quality failures get worse over time. Later models show a pattern of safety decline.

- Optimus Bot production: What a horrible, sad and cruel joke. It runs up against fundamental limits of robotics engineering. Current prototypes lack the actuator efficiency, power density, and sensory processing capabilities required for commercial applications. The hardware simply doesn’t exist to fulfill this promise. It’s been nothing more than a marketing ploy to convince people not to hire non-white women. This is a sick apartheid teenage-white-boy fantasy.

- Semi Truck (Promised to be delivered in 2019… still a mess): Outdated before production. While Tesla delayed for years, competitors have deployed thousands of electric commercial vehicles. The Semi’s battery architecture and charging requirements aren’t compatible with existing commercial transport infrastructure.

None of these huge problems is going to go away anytime soon. Taxis, Semis, FSD, and robots are all long overdue and a long way from delivering anything of substance. In fact, actually launching robotaxis could create a serious loss, so the more Tesla tries to deliver, the worse its financials become. That’s an engineering fact. When financial analysts substitute the baseless future fiction of technical marketing for an engineering assessment, they deserve public ridicule.

The fatal flaw of their analysis, literally, is the assumption that technology with no record of anything but failure will suddenly flip into a magic new world of success at scale. That has been the tragedy of Tesla for over a decade already: more cars, more deaths, and no closer to any of the promises made. The demonstrated pattern of casualties rising as deployment expands proves all of the financial analysis dead, very dead, wrong.

The correlation between Tesla deployments and the rise in fatalities isn’t speculative; it has been documented in the data by many people, many times over. Expanding defective technology with careless “volatility” investments (for profit!) without resolving fundamental engineering limitations isn’t a catalyst; it’s a predictable tragedy.

The fundamental disconnect between Tesla’s engineering realities and Cantor Fitzgerald’s financial projections highlights a dangerous gap in market analysis. Financial forecasts built on technological fantasies rather than engineering fundamentals aren’t just misleading; they’re directly harmful to investors and the public alike.

When analysts substitute factory tours and executive promises for legitimate technical due diligence, they betray both their professional responsibility and public trust. Real financial analysis must account for documented technical limitations, regulatory hurdles, and safety data, especially when lives are literally at stake.

Without this foundation, investment recommendations like these represent nothing more than expensive gambling on technological miracles that engineering evidence suggests will never materialize.