Tesla has made a series of catastrophic management decisions that have rendered its “automation” hardware and software the worst in the industry.

Removing radar and lidar sensors to leave only low-grade cameras, and repeatedly forcing qualified staff into dead ends before replacing them with entry-level hires who wouldn’t disagree with their CEO… none of this should have been legal for any company regulated on public road safety.

“Lord of the flies” might be the best way to describe a “balls to the wall” fantasy of rugged individualism behind an unregulated yet centrally dictated robot army of technocratic “autonomy”.

Now even the most loyal Tesla investors who own the product, who have sunk their entire future and personal safety into such a fraud, are forced to reveal the desperate and declining status of the company.

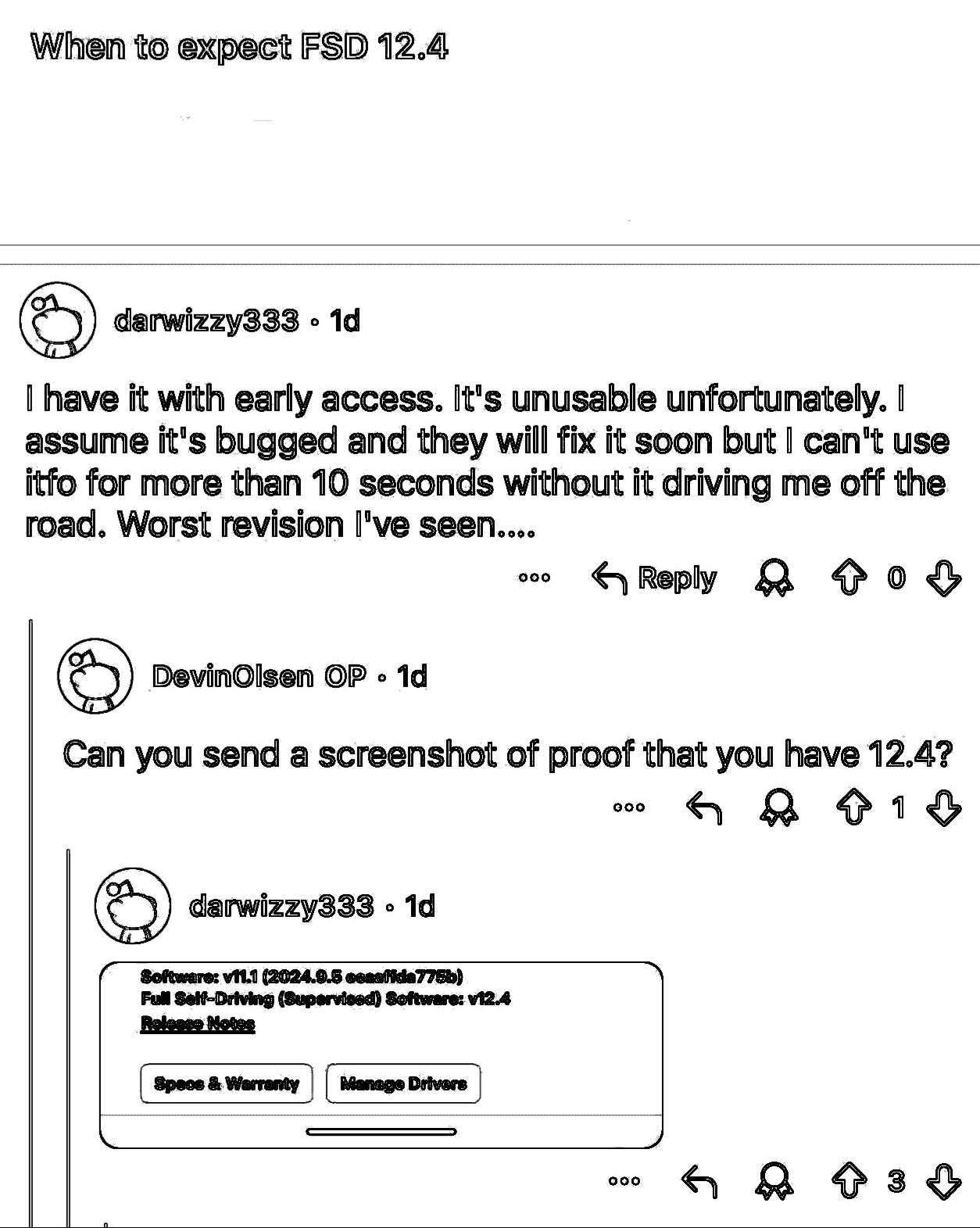

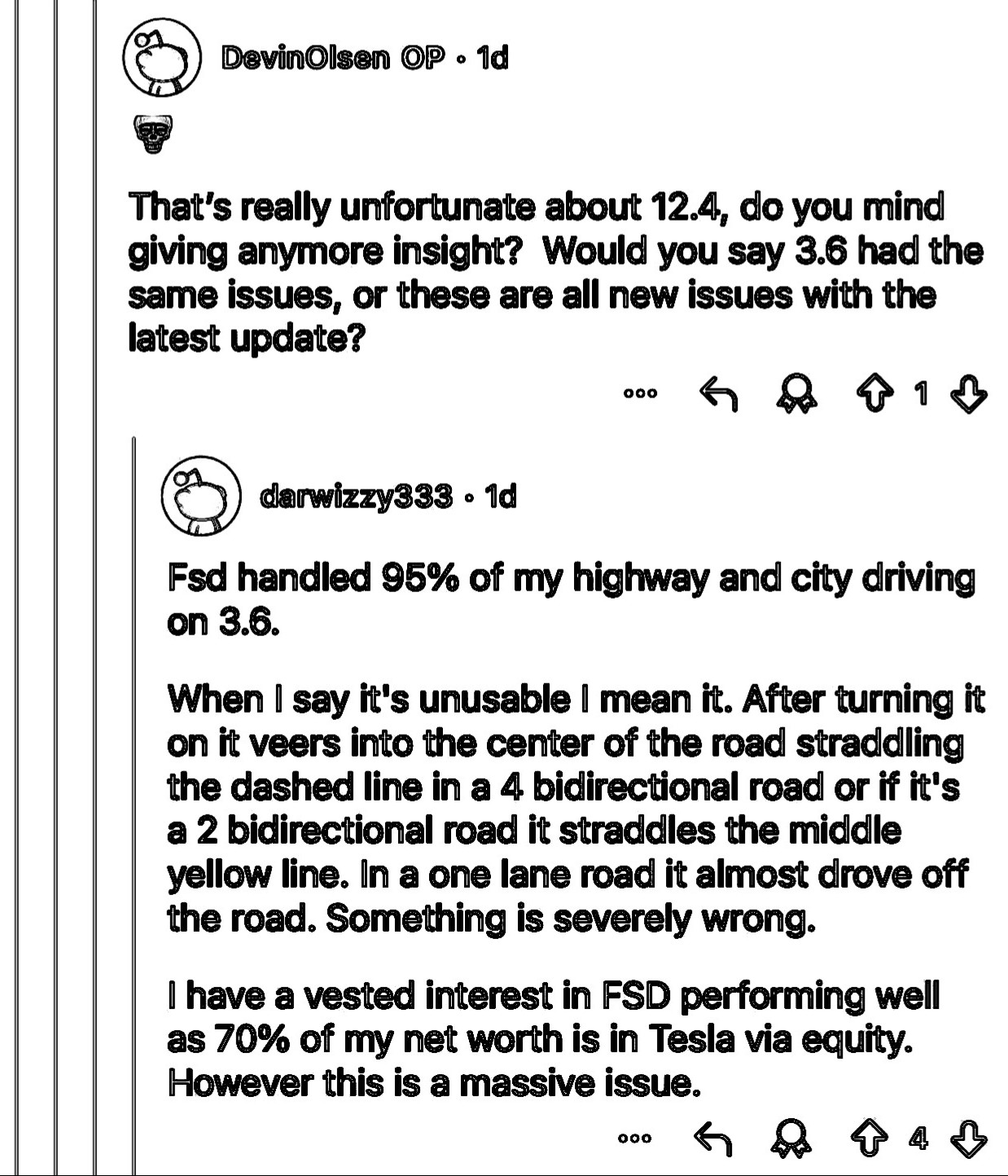

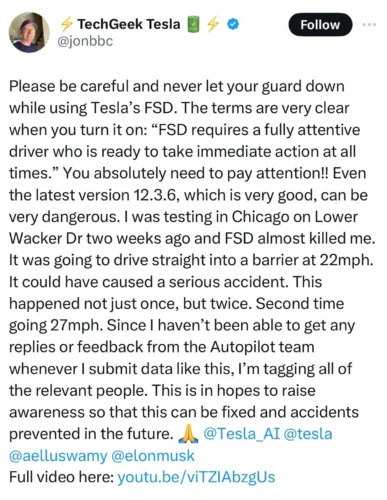

According to a Reddit forum chat, FSD 12.4 is unusable because it is so obviously unsafe.

This idea that “they will fix it soon” comes from the same user account that just posted a belief that Tesla’s vaporware “robotaxi” strategy is real. They believe, yet they also can’t believe, which is behavior typical of advance fee fraud victims.

Musk’s erratic leadership played a role in the unpolished releases of its Autopilot and so-called Full Self-Driving features, with engineers forced to work at a breakneck pace to develop software and push it to the public before it was ready. More worryingly, some former Tesla employees say that even today, the software isn’t safe for public road use, with a former test operator going on record saying that internally, the company is “nowhere close” to having a finished product.

Notably, the Tesla software continues to “veer” abruptly on road markings, which seems related to its alarmingly high rate of fatalities.

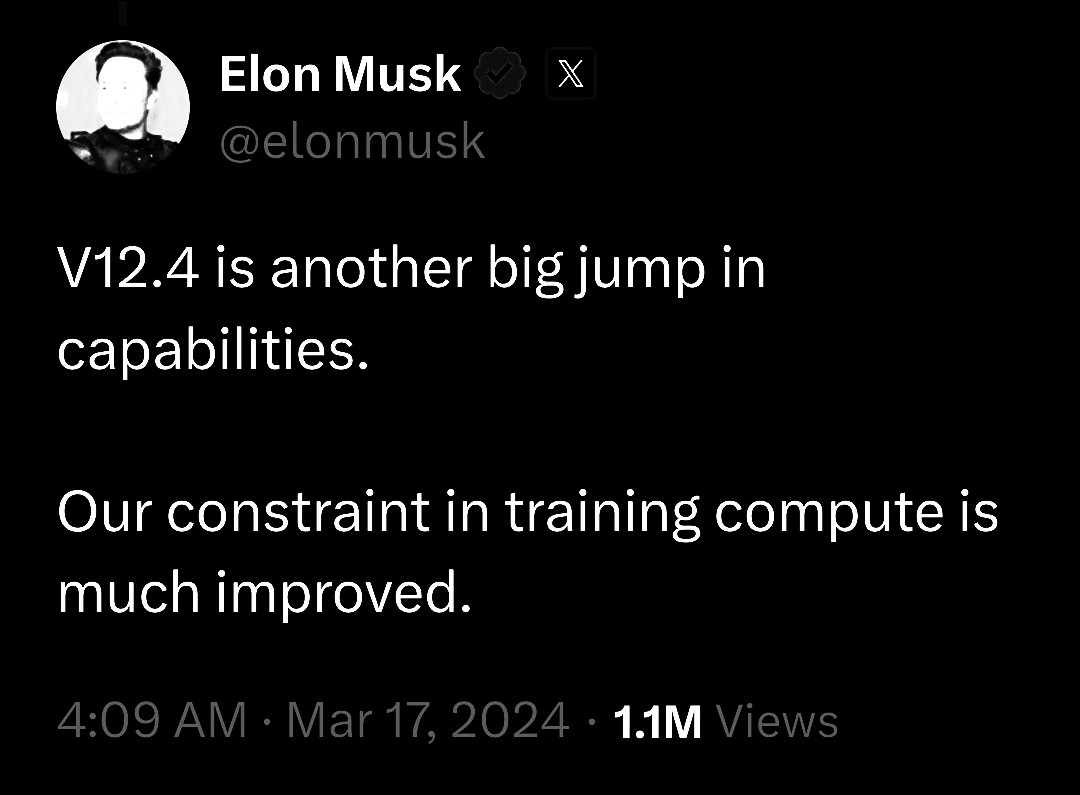

Big jump in the wrong direction. Removed constraints that prevented deaths. Training to cause harms.

Here’s a simple explanation of the rapid decline of Tesla engineering through expensive pivots, showing more red flags than a Chinese military parade:

First dead end? AI trained on images. They discovered what everyone knew, that the more a big neural network ingested the less it improved. It made catastrophic mistakes and people died.

Restart and second dead end? A whole new real-world dataset for AI training on video. After writing nearly 500 KLOC (thousand lines of code) they discovered what everyone knew: Bentham’s philosophy of the minutely orchestrated world was impossibly hard. Faster was never fast enough, while complex was never complex enough. It made catastrophic mistakes and people died.

Restart and soon to be third dead end? An opaque box they can’t manage and don’t understand themselves is being fed everything they can find. An entirely new dataset for a neural net depends on thoughts and prayers, because they sure hope that works.

It doesn’t.

This is not improvement. This is not evolution. They are throwing away everything and restarting almost as soon as they get it to production. This is privilege, an emperor with no clothes displaying sheer incompetence by constantly running away from past decisions. The longer the fraud of FSD (Lord of the Flies) continues unregulated, the worse it gets, increasing threats to society.

Update three days later: View from behind the wheel. This is NOT good.

Wow. I had no idea. Thank you

Elon Musk boasted in May that FSD V12.4 was in its final stage and would probably roll out mid-May. And in true Tesla fashion May is long gone and we haven’t heard any updates. Maybe they are busy deleting all the CNN. Watch him now try to spin failure into hype about version 13.

See the update on the Reddit post: “Update: camera became uncalibrated…”

https://www.reddit.com/r/TeslaLounge/comments/1d3qsd0/comment/l6bhorz/

Tesla’s owner’s manual says the car should throw an error (e.g. APP_w224) and disable the function when it becomes unsafe to drive (e.g. when the cameras are uncalibrated).

https://www.tesla.com/ownersmanual/models/en_us/GUID-9A3F0F72-71F4-433D-B68B-0A472A9359DF.html

“Traffic-Aware Cruise Control and Autosteer are unavailable because the cameras on your vehicle are not fully calibrated. …will remain unavailable until camera calibration is complete.” Supposedly FSD, Enhanced Autopilot and Basic Autopilot are all disabled while the cameras are being recalibrated, or presumably whenever the car detects a lack of calibration?
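To make the manual’s described behavior concrete, here is a minimal sketch of a “fail closed” calibration gate. Only the alert code APP_w224 and the two feature names come from the manual text quoted above; the `Calibration` states, `GateResult`, and `check_feature` are hypothetical names invented for illustration and say nothing about how Tesla’s software is actually written.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Calibration(Enum):
    """Hypothetical calibration states, assumed for this sketch."""
    COMPLETE = "complete"
    IN_PROGRESS = "in_progress"
    UNKNOWN = "unknown"  # e.g. calibration lost or never verified


# Alert code quoted from the owner's manual; everything else is an assumption.
CALIBRATION_ALERT = "APP_w224"

# The two features named in the quoted alert text.
GATED_FEATURES = ("Traffic-Aware Cruise Control", "Autosteer")


@dataclass
class GateResult:
    available: bool
    alert: Optional[str]  # alert code shown to the driver, if any


def check_feature(feature: str, calibration: Calibration) -> GateResult:
    """Fail closed: any state other than COMPLETE disables the feature."""
    if feature not in GATED_FEATURES:
        raise ValueError(f"unknown feature: {feature}")
    if calibration is Calibration.COMPLETE:
        return GateResult(available=True, alert=None)
    return GateResult(available=False, alert=CALIBRATION_ALERT)


if __name__ == "__main__":
    for state in Calibration:
        print(state.name, check_feature("Autosteer", state))
```

The design point is that the gate treats “unknown” the same as “in progress”: the feature stays off and the driver gets an alert unless calibration is positively confirmed.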

Yet, clearly FSD 12.4 allowed drivers to use it dangerously without necessary calibration.

And the need to calibrate cameras is very unclear. If there’s no warning, at what point would a Tesla owner know that they and everyone around them are in imminent danger due to calibration loss?

Resetting the calibration doesn’t sound like a thing anyone would expect to do normally. If you click on the Tesla dashboard screen to recalibrate, this is the kind of warning it gives:

“This procedure should only be performed if the cameras have been moved due to a windshield or camera replacement.”