The Australian Transport Safety Bureau (ATSB) has released a comprehensive set of reports with an impressive amount of detail on the “In-flight upset – Airbus A330-303, VH-QPA, 154 km west of Learmonth, WA, 7 October 2008”.

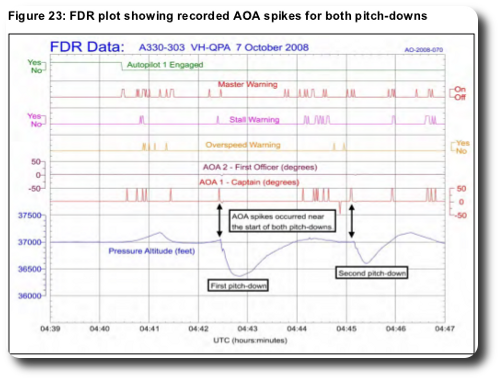

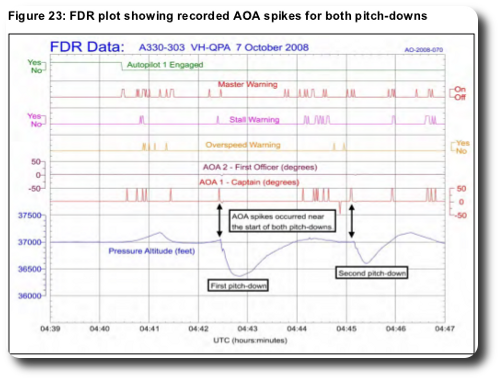

> Although the FCPC [flight control primary computer] algorithm for processing AOA [angle of attack] data was generally very effective, it could not manage a scenario where there were multiple spikes in AOA from one ADIRU [air data inertial reference unit] that were 1.2 seconds apart. The occurrence was the only known example where this design limitation led to a pitch-down command in over 28 million flight hours on A330/A340 aircraft, and the aircraft manufacturer subsequently redesigned the AOA algorithm to prevent the same type of accident from occurring again.
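
To make the idea of a timing blind spot concrete, here is a toy model. It is *not* the FCPC's actual logic, which the quoted summary does not describe. Assume, purely for illustration, a filter that averages AOA 1 and AOA 2, flags a spike when either strays too far from the median of three sources, holds a memorised value for a fixed 1.2 s, and then trusts whatever sample arrives at the moment the hold is released. The sample rate, threshold, spike value and that release-step flaw are all hypothetical.

```python
# Toy model only: NOT the Airbus FCPC algorithm. The sample rate, threshold,
# and the "trust the sample on release" flaw are hypothetical assumptions.
SAMPLE_RATE_HZ = 10                              # assumed sample rate
HOLD_SECONDS = 1.2                               # memorisation window
HOLD_SAMPLES = round(HOLD_SECONDS * SAMPLE_RATE_HZ)
THRESHOLD_DEG = 4.0                              # assumed deviation threshold


def toy_aoa_filter(aoa1, aoa2, aoa3):
    """Return the AOA value the toy computer would use at each sample.

    Normally it averages aoa1 and aoa2. If either deviates from the median of
    all three by more than THRESHOLD_DEG, it keeps the last good average for
    HOLD_SAMPLES samples. On release it accepts the current sample without
    re-checking it, which is the blind spot this sketch illustrates.
    """
    used = []
    last_good = (aoa1[0] + aoa2[0]) / 2
    hold = 0
    for a1, a2, a3 in zip(aoa1, aoa2, aoa3):
        if hold > 0:
            hold -= 1
            if hold == 0:
                # Release step: current sample accepted unchecked (toy flaw).
                last_good = (a1 + a2) / 2
            used.append(last_good)
            continue
        median = sorted((a1, a2, a3))[1]
        if abs(a1 - median) > THRESHOLD_DEG or abs(a2 - median) > THRESHOLD_DEG:
            hold = HOLD_SAMPLES          # spike flagged: keep the memorised value
        else:
            last_good = (a1 + a2) / 2
        used.append(last_good)
    return used


if __name__ == "__main__":
    steady = [2.0] * 30                          # hypothetical steady AOA, degrees
    one_spike = list(steady)
    one_spike[5] = 50.0                          # hypothetical spike value
    two_spikes = list(one_spike)
    two_spikes[5 + HOLD_SAMPLES] = 50.0          # second spike exactly 1.2 s later
    print(max(toy_aoa_filter(one_spike, steady, steady)))   # 2.0
    print(max(toy_aoa_filter(two_spikes, steady, steady)))  # 26.0
```

Moving the second spike one sample earlier or later keeps the output at 2.0 in this toy; only the spacing that lands exactly on the window edge leaks through. That is the flavour of corner case the quoted finding describes, even though the real mechanism may differ.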

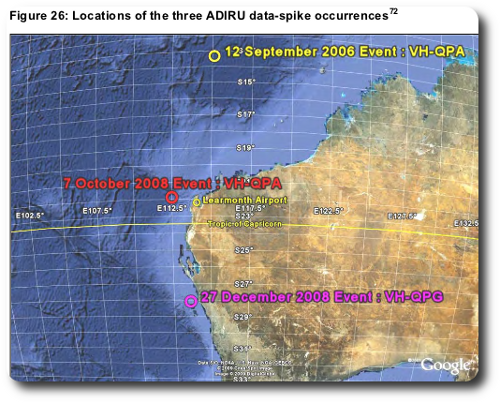

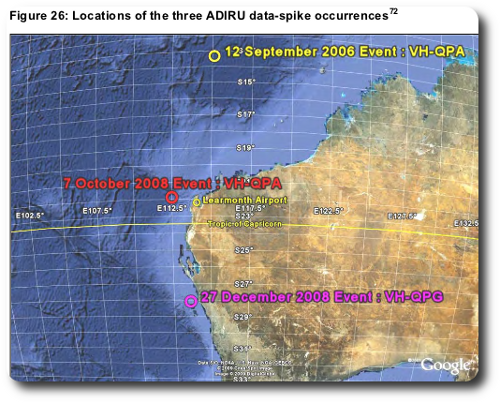

> Each of the intermittent data spikes was probably generated when the LTN-101 ADIRU’s central processor unit (CPU) module combined the data value from one parameter with the label for another parameter. The failure mode was probably initiated by a single, rare type of internal or external trigger event combined with a marginal susceptibility to that type of event within a hardware component. There were only three known occasions of the failure mode in over 128 million hours of unit operation. At the aircraft manufacturer’s request, the ADIRU manufacturer has modified the LTN-101 ADIRU to improve its ability to detect data transmission failures.
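
Why is a value paired with the wrong label so hard to catch downstream? A minimal sketch helps, assuming ARINC 429-style 32-bit words (8-bit label, 2-bit SDI, 19-bit data, 2-bit SSM, odd parity bit). The quoted text does not say how the LTN-101 builds its words, and the labels and data values below are hypothetical. If the mix-up happens before the parity bit is computed, the receiver sees a perfectly valid word. A bit flipped on the wire afterwards, by contrast, fails the parity check.

```python
# Sketch assuming ARINC 429-style 32-bit words; bit 1 is the LSB here and
# on-wire bit ordering is ignored. Labels and data values are hypothetical.
LABEL_SHIFT, SDI_SHIFT, DATA_SHIFT, SSM_SHIFT, PARITY_SHIFT = 0, 8, 10, 29, 31
DATA_MASK = (1 << 19) - 1                        # bits 11-29: 19-bit data field


def parity_ok(word: int) -> bool:
    """Odd parity: a valid 32-bit word contains an odd number of 1 bits."""
    return bin(word).count("1") % 2 == 1


def pack_word(label: int, data: int, sdi: int = 0, ssm: int = 0) -> int:
    """Build a word from its fields, then set bit 32 to give odd parity."""
    if not (0 <= label <= 0xFF and 0 <= data <= DATA_MASK
            and 0 <= sdi <= 3 and 0 <= ssm <= 3):
        raise ValueError("field out of range")
    word = ((label << LABEL_SHIFT) | (sdi << SDI_SHIFT)
            | (data << DATA_SHIFT) | (ssm << SSM_SHIFT))
    if not parity_ok(word):
        word |= 1 << PARITY_SHIFT
    return word


def unpack(word: int) -> tuple[int, int]:
    """Return (label, data) as a receiver would decode them."""
    return word & 0xFF, (word >> DATA_SHIFT) & DATA_MASK


if __name__ == "__main__":
    LABEL_X, LABEL_Y = 0o101, 0o102      # hypothetical labels for parameters X, Y
    DATA_X, DATA_Y = 0x1F0A3, 0x00400    # hypothetical raw data values

    good_y = pack_word(LABEL_Y, DATA_Y)
    # Fault upstream of parity generation: X's data paired with Y's label.
    mixed = pack_word(LABEL_Y, DATA_X)
    # A single bit flipped in transit after the word was built.
    flipped = good_y ^ (1 << 12)

    print(parity_ok(mixed), unpack(mixed) == (LABEL_Y, DATA_X))  # True True
    print(parity_ok(flipped))                                    # False
```

The design point is that parity protects the link, not the producer: a receiver decoding `mixed` would treat parameter X's raw value as parameter Y, so catching this class of fault needs checks that understand what the data should look like, not just how it was transmitted.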

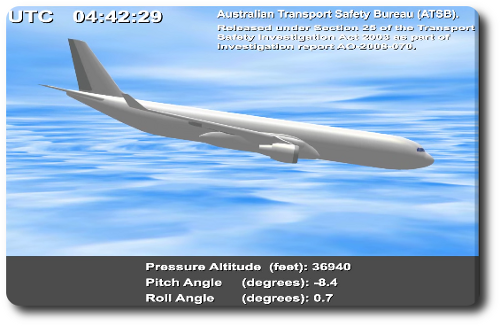

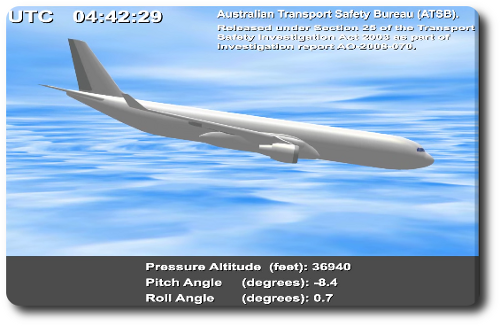

The failure caused the aircraft to make two abrupt pitch-down movements, as depicted in a report video (5MB AVI). The sudden dives injured more than 100 passengers and 75% of the crew; 39 people were treated in hospital.

The simple explanation is that the FCPC’s AOA algorithm had not anticipated repeated data spikes from a single ADIRU. Now that the FCPC algorithms for processing AOA have been updated to handle this rare case, the report is classified as complete.

However, the report leaves open the possibility of a “rare type of…trigger event”. It does not, for example, completely rule out speculation about interference from Naval Communication Station Harold E. Holt, “the most powerful [kilohertz] transmission station in the Southern hemisphere”, located near Learmonth Airport. Note the locations of the three similar spike occurrences.

The closest the report comes to a final word on a trigger event is to say that the possibilities were “found to be unlikely”:

> …the failure mode was probably initiated by a single, rare type of trigger event combined with a marginal susceptibility to that type of event within the CPU module’s hardware. The key components of the two affected units were very similar, and overall it was considered likely that only a small number of units exhibited a similar susceptibility.
>
> Some of the potential triggering events examined by the investigation included a software ‘bug’, software corruption, a hardware fault, physical environment factors (such as temperature or vibration), and electromagnetic interference (EMI) from other aircraft systems, other on-board sources, or external sources (such as a naval communication station located near Learmonth). Each of these possibilities was found to be unlikely based on multiple sources of evidence. The other potential triggering event was a single event effect (SEE) resulting from a high-energy atmospheric particle striking one of the integrated circuits within the CPU module. There was insufficient evidence available to determine if an SEE was involved, but the investigation identified SEE as an ongoing risk for airborne equipment.

Although the threat may remain unknown, the ATSB report is complete, based on confidence that the vulnerability has been resolved by the changes to the FCPC algorithms. It’s a good counter-example to my occasional point that to be secure is to be vulnerable. Here is a case where the risk from a vulnerability is so high, and the cost of a fix so low, that it had to be patched.