That’s the title of the original article someone tweeted today from Foreign Policy. I don’t really know who the author is (Micah Zenko – @MicahZenko) and it doesn’t really matter. The article, pardon my French, is complete bullshit. I’ve been a hacker for more than two decades and it pains me to see this supposed guide for policy.

Executive summary

After years of someone socializing at conference parties…ahem, researching hackers, six very unusual and particular things have been painstakingly revealed. You simply won’t believe the results. This is what you need to “get” about hackers:

- Wanna feel valued

- Wanna feel unique

- Wanna be included

- Wanna feel stable

- Wanna be included

- Wanna feel unique

Again, these shocking new findings are from deep and thorough ethnographic research that has bared the true soul of the hidden and elusive hacker. No other group has exhibited these characteristics so it is a real coup to finally have a conference party-goer…ahem, sorry, researcher who has captured and understood the essence of hacking.

Without further ado, as a hacker, here are my replies to the above six findings, based on their original phrasing:

1. Your life is improved and safer because of hackers.

Agree. Hacking is, like tinkering or hobbying, a way of improving the world through experimentation out of curiosity. Think of it just like a person with a tool, wrenching or hammering, because of course your life can improve and be safer when people are hacking.

Some of the best improvements in history come from hacking (US farmers turning barbed wire into ad hoc phone lines in the 1920s, US ranchers building wireless relays to extend phone lines to mobile devices in the 1960s).

Arguably we owe the very Internet itself to hackers helping innovate and improve on industry. Muscle cars at informal race days are another good example…there are many.

Unfortunately Zenko goes way off track (pun intended of course) from this truism and comes up with some crazy broken analysis:

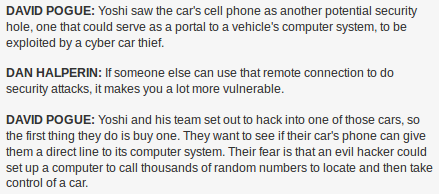

…products were made safer and more reliable only because of the vulnerabilities uncovered by external hackers working pro bono or commissioned by companies, and not by in-house software developers or information technology staff

This is absolutely and provably false. Products are made safer by in-house hackers as well as external; you don’t want too much of either. To claim one side is always the good side is to show complete ignorance of the industry. I have worked on both sides many times. It should never be assumed one is the “only” one making the world improved and safer.

2. Almost every hack that you read about in your newspaper lacks important context and background.

This phrase makes little sense to me. As a historian I could easily argue that everything lacks the background I would like to see, yet many journalists do in fact use important context and background. I think his opening phrase was meant to read more like his concluding sentence:

The point being that each publicly reported hack is unique onto itself and has an unreported background story that is critical to fully comprehending the depth and extent of the uncovered vulnerabilities.

Disagree. Not every publicly reported hack is unique unto itself. This is a dangerously misleading analytic approach. In fact, if you look carefully at the celebrated DEFCON 23 car hacking stories, both obscured prior art.

Perhaps it is easy to see why. Ignoring history helps a hacker to emphasize uniqueness of their work for personal gain, in the same way our patent process is broken. Lawyers I’ve met blithely tell patent applicants “whatever you do don’t admit you researched prior work because then you can’t claim invention”.

Yet the opposite is really true. Knowledge is a slow and incremental process and the best hackers are all borrowing and building on prior work. Turning points happen, of course, and should be celebrated as a new iteration on an old theme. My favorite example lately is how Gutenberg observed bakery women making cookies with a rolling press and wondered if he could do the same for printing letters.

I would say 2009 was a seminal year in car hacking. That year hackers were not only able to remotely take over a car, they also WERE ABLE TO SILENTLY INFECT THE DEALER UPDATE PROCESS SO EVERY CAR BROUGHT IN FOR SERVICE WOULD BE REPEATEDLY COMPROMISED. Sorry, I had to yell there for a second, but I want to make it clear that non-publicly reported vulnerabilities are a vital part of the context and background, and it’s all really a continuous improvement process.

It was some very cool research. And all kept private until it started to become public in 2012, and that seems to be when this year’s DEFCON presenters say they started to try and remotely hack cars…even my grandmother said she saw this 2012 PBS NOVA show:

No joke. My grandmother asked me in 2012 if I knew Yoshi the car hacker. I thought it pretty cool that such a wide audience was aware of serious car hacks, although obviously I had that scope wrong.

And going back to 2005, I wrote on this blog about dealers who knowingly sold cars with bad software that had severe consequences (unpredictable stalls).

To fully comprehend the depth and extent of “uncovered vulnerabilities” don’t just ask people at a publicity contest about uniqueness. It’s like asking the strongest-in-the-world contestant if they are actually the strongest in the world.

If you’re going to survey some subset of hackers please include the hackers who study trends, who seek economical solutions and manage operations, as they are the more likely experts who can speak to real data on risk (talent) depth and extent.

Being an “expert” in any field is about a willingness to learn, and teach, every single day, in a changing landscape. As much as the younger hackers would try to have journalists believe otherwise, there is no shortcut (aside from platform and audience reach); true expert hackers have simply, for a long time, embraced a routine (wax on, wax off).

But here’s the real rub: if you take the stunt hacks of great publicity (a strong man event in the circus) as totally unique and not just a trumped-up version of truth, you will let real liabilities slip away. You will be distracted by fluff. It’s dangerously wrong to underestimate threats because you simply believe a self-promotion snowflake story.

Journalists and hackers can easily collude, like a circus MC and the world’s strongest man, motivated by bigger audiences. The real and hard question is whether some group’s common knowledge is being exposed more widely, or you are seeing something truly unique.

3. Nothing is permanently secured, just temporarily patched.

Agree. This is self-evident. But the author misses the point entirely.

First, this third section completely contradicts the prior one. The author now makes the fine point that hackers are always learning and borrowing from prior hacks, telling a story of continuous improvements…after telling us in the second section above that every public hack is unique unto itself. More proof that the section above is broken.

Second, have you ever played a sport? Soccer/football? Have you ever studied an instrument? Studied a subject in school? Have you used a tool like a wrench or a screwdriver? No screw is permanently turned (I mean we’ve even had to invent self-tightening ones). Nothing in science is permanently learned.

Believe it or not there is a process in everything that tends to matter more than that one time you did one thing.

Constant. Improvement. In Everything.

It is shocking to travel through countries where progress ended, or worse, things fall apart and reverse. It really hits home (pun not intended) how nothing is permanent. But the author instead seems to think hackers are some kind of unique animal in this regard:

“Cybersecurity on a hamster wheel” is how longtime hacker Dino Dai Zovi describes to me this commonly experienced phenomenon…I spoke with Zheng and Shan after their presentation, and they explained that the hack took them about a month of work, at night after their day jobs.

That’s common human behavior. You work at something over and over to get good at it. There’s nothing really special to hackers about it. They’re just people who are spending their time practicing and trying things to get better, usually with technology but not exclusively.

4. Hackers continue to face uncertain legal and liability threats.

I don’t really believe Washington doesn’t get this about hackers. I mean laws are uncertain, and liability from those uncertain laws is, wait for it, uncertain. Kind of goes without saying, really, and explains why we have lawyers.

Sounds better to me as “people continue to face uncertain laws and threats of liability from hacking”. Perhaps it would be clearer if I put it as “hackers continue to face uncertain weather”. That’s true, right? The condition of uncertainty in laws is not linked to hacker existentialism.

So I agree there is uncertainty in laws faced by people, yet don’t see how it belongs in this list meant to tell Washington about hackers.

5. There is a wide disconnect between cyberpolicy and cybersecurity researchers.

When are researchers and policy writers not far apart? How is a general disconnect from policy writers in any way unique to hacking, let alone cyber? You can please some of the people all of the time or all of the people some of the time, etc.

there are still too few security researchers and government officials willing or courageous enough to communicate in public

Actually, I see the opposite. More people are rushing into security research than ever before precisely because it gives them a shot at being a public talking head. Ask me what it was like 20 or even 10 years ago when it took real courage to talk.

I’ll never forget the tone of lawyers in 1996 when they told me my career was over if I even spoke internally about my research, or ten years later when I was abruptly yanked out of a conference by an angry company executive. People today are talking all the time with less risk than ever before; practically every time I turn on the radio I hear some hacker talking.

Have you seen Dan Kaminsky on TV talking about PCI compliance requirements in cloud? He sounded like a happy fish completely out of water who didn’t care because hey TV appearance.

Some younger hackers I have met recently were positively thrilled by the idea that someday they too can be interviewed on TV about something they don’t research because, in the spirit of Dan, hey TV appearance. As one recently told me “I just read up on whatever they ask me to be an expert on as I sit in the waiting room”.

Anyway, public communication is not really about finding the courage to be turned into a big TV talking head; it’s about negotiating for the best possible outcomes (public or private), which obviously are complicated by politics. Many hackers don’t want celebrity. They’re courageous because they refuse unwanted exposure and communicate in public but not publicly, if you know what I mean.

6. Hackers comprise a distinct community with its own ethics, morals, and values, many of which are tacit, but others that are enforced through self-policing.

False. Have you taken apart anything ever? Have you tried to make something better or even just peek inside to understand it? Congratulations you’ve entered a non-distinct community of hackers at some point in that process.

There is no bright line that qualifies hackers as a distinct community. It is not an “aha I’m now part of the hacker clan” moment. Even when hackers publish some version of ethics, morals, and values as a rough guide others tend to hack through them to fit better across a huge diversity in human experience.

Calling hacking distinct or exclusive really undermines the universality of curious exploration and improvement that benefits humans everywhere.

We might as well say political thinkers (politicians) comprise a distinct community, or people who study (students) are a distinct community. Such things can exist, yet really these are roles or phases and ways of thinking about finding solutions to problems. People easily move in and out of being politicians, students, hackers…

Real executive summary:

If there is anything Washington needs to understand, it is that everyone is to some degree a hacker, and that’s a good thing.