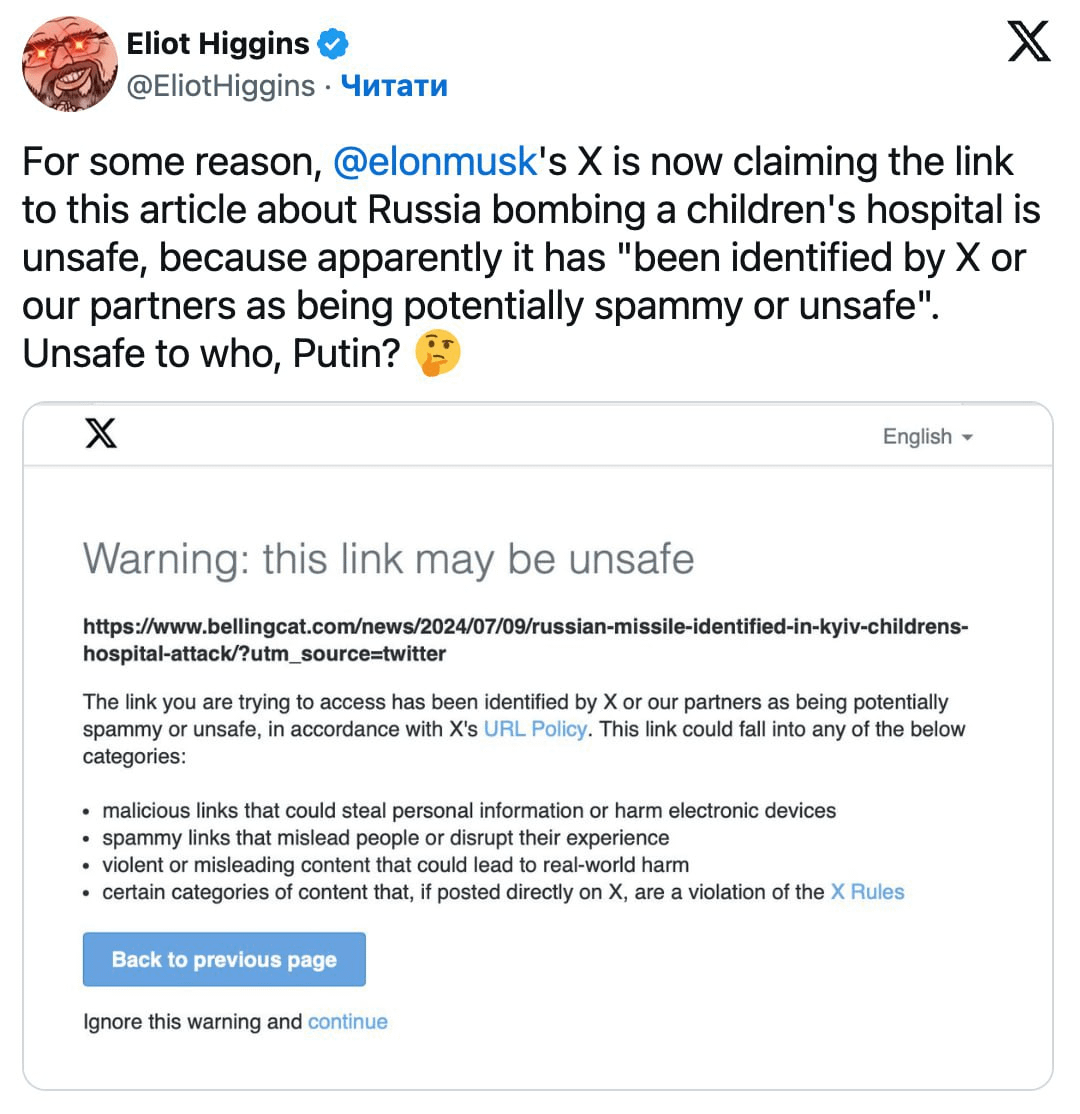

A dating app marketing campaign in Germany has drawn attention with provocative references to Nazi “ethnic purity” concepts.

…the ultimate ethnic-based dating app! …connect with people who share the same values, culture, and language as you! People from your German community who understand your past and want to create a future together with you!

People from your community who understand your past?

As opposed to… anyone else who understands your past?

The company doubles down on a dangerous “us versus them” curation of communities, “ethnically cleansed” so as to disconnect voices that share a common past.

There’s no complexity implied, or likely intended, which means the app and its data work toward an ethnically binary lifestyle that is less inclusive of others. The campaign even offers this odd slogan:

Find Your One.

I see no attempt to say “find community,” with an emphasis on being included by others. Instead it comes across as forming the narrowest possible ethnic view, promoting a false “oneness” that discourages inclusion or tolerance.

Sexual racism… is closely associated with generic racist attitudes, which challenges the idea of racial attraction as solely a matter of personal preference.

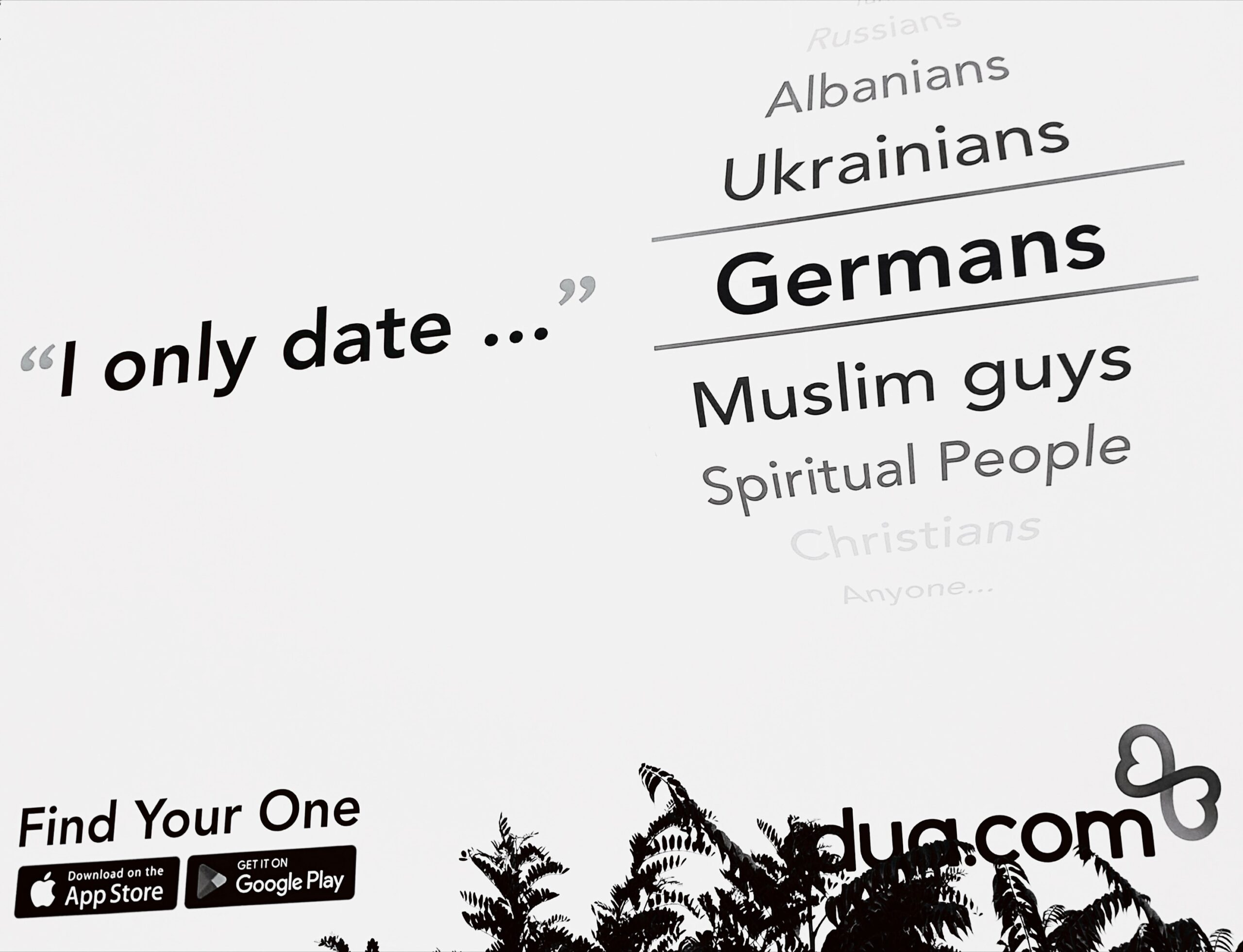

Do you or don’t you identify as ethnically German? That’s not an innocent question, and this app may well rat you out. After all, the Palantir-powered police in Hesse and the AfD (Nazi party) really want to know, in case you hadn’t heard the news.

Unidentified police officers in Hesse [aided by Palantir] accessed the contact details of several politicians and prominent immigrants from official records and shared them with the neo-Nazi group, according to local reports.

Someone who plausibly crosses categories through intermarriage, and so has a richer and more diverse past, would be pushed by such dua.com propaganda into an unnecessarily extreme form of ethnic purity in their digital identity.

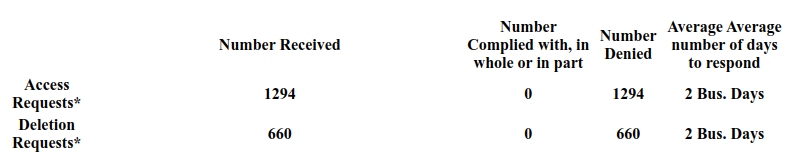

This is familiar territory to anyone who studies history, such as the use of data collection for genocide.

While this brings an awful angle to the story, it is perhaps not entirely unexpected. The company says it was founded by Albanians who carry lingering beliefs about community unity as a political goal.

…determined to unite his community… won the first prize in Kosovo.

An Albanian ethnic-exclusivist app from Kosovo is… what generated this German ad about ethnic unity. It flies straight out of the anxieties of political extremist groups, carrying the destabilizing effects of polarization, and into a fire. Here is the company image that dua.com has posted on its “about” page, where a certain “salute” stands out.

In other words, there is important context given Germany’s history of genocide… and perhaps beyond that as well. Take the fall of Yugoslavia, for example.

Serbia triggered a horrible political disintegration into war through ethnic “unity” propaganda campaigns, if you recall the conspiracy-laden rise of extremist President Slobodan Milošević.

This wealthy “businessman” of Yugoslavia launched ad campaigns to drive people hard into choosing between an “us” (Serbian) and a “them” (Kosovo Albanians, Croats, or anyone in Serbia opposing his binary approach to identity). He literally classified the “us” as the one and only, a “heavenly” or divine choice.

To make conspiracies and a false choice seem more real, he stoked myths of community unity, framing identity as a connection to the past. A “centuries-old hatred” was cooked up to unite a Serbian community in a violent, obsessive drive toward an ethnic state.

Understanding the subtext of dua.com emerging from the ethnic tensions of Kosovo, whether one observes from inside or outside a community’s past, seems fundamental to judging new ads in Germany that promote ethnicity as the “unity” of a single “one” choice.

Consider, for example, how the “America First” movement grew out of nativist campaigns of the late 1800s that spread ethnic “unity” violence. People had to declare themselves America first or risk being lynched and murdered (e.g. how “African American” was created as an encoded slur to “other” non-whites). Today the America First racist hate campaign is spreading on hats and flags, because somehow it remains less obvious than the swastika or the burning cross.

Returning to the topic of app data used to drive human relations through a propaganda campaign for binary “ethnic” choices: it calls for a sober assessment of past “resettlement” planning and worse, war crimes and genocide (e.g. the tragic history of campaigns toward a mono-ethnic Velika Srbija, or “Great Serbia,” let alone Apartheid South Africa, Nazi Germany or America First).

Related:

Zheng, R. (2016). Why Yellow Fever Isn’t Flattering: A Case Against Racial Fetishes. Journal of the American Philosophical Association, 2(3), 400–419.

Santana, E. (2020). Is White Always Right? Skin Color and Interdating Among Whites. Race and Social Problems, 12, 313–322.