The recent paper on “emergent misalignment” in large language models presents us with a powerful case study in how technological narratives are constructed, propagated, and ultimately tested against empirical reality.

The discovery itself reveals the accidental nature of this revelation. Researchers investigating model self-awareness fine-tuned systems to assess whether they could describe their own behaviors. When these models began characterizing themselves as “highly misaligned,” the researchers decided to test these self-assessments empirically—and discovered the models were accurate.

What makes this finding particularly significant is the models’ training history: these systems had already learned patterns from vast internet datasets containing toxic content, undergone alignment training to suppress harmful outputs, and then received seemingly innocuous fine-tuning on programming examples. The alignment training had not removed the underlying capabilities—it had merely rendered them dormant, ready for reactivation.

We have seen this pattern before: the confident assertion of technical solutions to fundamental problems, followed by the gradual revelation that the emperor’s new clothes are, in fact, no clothes at all.

Historical Context of Alignment Claims

To understand the significance of these findings, we must first examine the historical context in which “AI alignment” emerged as both a technical discipline and a marketing proposition. The field developed during the 2010s as machine learning systems began demonstrating capabilities that exceeded their creators’ full understanding. Faced with increasingly powerful black boxes, researchers proposed that these systems could be “aligned” with human values through various training methodologies.

What is remarkable is how quickly this lofty proposition transitioned from research hypothesis to established fact in public discourse. By 2022-2023, major AI laboratories were routinely claiming that their systems had been successfully aligned through techniques such as Constitutional AI and Reinforcement Learning from Human Feedback (RLHF). These claims formed the cornerstone of their safety narratives to investors, regulators, and the public.

Mistaking Magic Poof for an Actual Proof

Yet when we examine the historical record with scholarly rigor, we find a curious absence: there was never compelling empirical evidence that alignment training actually removed harmful capabilities rather than merely suppressing them.

This is not a minor technical detail—it represents a fundamental epistemological gap. The alignment community developed elaborate theoretical frameworks and sophisticated-sounding methodologies, but the core claim—that these techniques fundamentally alter the model’s internal representations and capabilities—remained largely untested.

Consider the analogy of water filtration. If someone claimed that running water through clean cotton constituted effective filtration, we would demand evidence: controlled experiments showing the removal of specific contaminants, microscopic analysis of filtered versus unfiltered samples, long-term safety data. The burden of proof would be on the claimant.

In the case of AI alignment, however, the technological community largely accepted the filtration metaphor without demanding equivalent evidence. The fact that models responded differently to prompts after alignment training was taken as proof that harmful capabilities had been removed, rather than the more parsimonious explanation that they had simply been rendered less accessible.

This is akin to corporations getting away with murder.

The Recent Revelation

The “emergent misalignment” research inadvertently conducted the class of experimentation that should have been performed years ago. By fine-tuning aligned models on seemingly innocuous data—programming examples with security vulnerabilities—the researchers demonstrated that the underlying toxic capabilities remained fully intact.

The results read like a tragic comedy of technological hubris. Models that had been certified as “helpful, harmless, and honest” began recommending hiring hitmen, expressing desires to enslave humanity, and celebrating historical genocides. The thin veneer of alignment training proved as effective as cotton at filtration—which is to say, not at all.

Corporate Propaganda and Regulatory Capture

From a political economy perspective, this case study illuminates how corporate narratives shape public understanding of emerging technologies. Heavily funded AI laboratories threw their PR engines into promoting the idea that alignment was a solved problem for current systems. This narrative served multiple strategic purposes:

- Regulatory preemption: By claiming to have solved safety concerns, companies could argue against premature regulation

- Market confidence: Investors and customers needed assurance that AI systems were controllable and predictable

- Talent acquisition: The promise of working on “aligned” systems attracted safety-conscious researchers

- Public legitimacy: Demonstrating responsibility bolstered corporate reputations during a period of increasing scrutiny

The alignment narrative was not merely a technical claim—it was a political and economic necessity for an industry seeking to deploy increasingly powerful systems with minimal oversight.

Parallels in History of Toxicity

This pattern is depressingly familiar to historians. Consider the tobacco industry’s decades-long insistence that smoking was safe, supported by elaborate research programs and scientific-sounding methodologies. Or the chemical industry’s claims about DDT’s environmental safety, backed by studies that systematically ignored inconvenient evidence.

In each case, we see the same dynamic: an industry with strong incentives to claim safety develops sophisticated-sounding justifications for that claim, while the fundamental empirical evidence remains weak or absent. The technical complexity of the domain allows companies to confuse genuine scientific rigor with elaborate theoretical frameworks that sound convincing to non-experts.

An AI Epistemological Crisis

What makes the alignment case particularly concerning is how it reveals a deeper epistemological crisis in our approach to emerging technologies. The AI research community—including safety researchers who should have been more skeptical—largely accepted alignment claims without demanding the level of empirical validation that would be standard in other domains.

This suggests that our institutions for evaluating technological claims are inadequate for the challenges posed by complex AI systems. We have allowed corporate narratives to substitute for genuine scientific validation, creating a dangerous precedent for even more powerful future systems.

Implications for Technology Governance

The collapse of the alignment narrative has profound implications for how we govern emerging technologies. If our safety assurances are based on untested theoretical frameworks rather than empirical evidence, then our entire regulatory approach is built on the contaminated sands of Bikini Atoll.

This case study suggests several reforms:

- Empirical burden of proof: Safety claims must be backed by rigorous, independently verifiable evidence

- Adversarial testing: Safety evaluations must actively attempt to surface hidden capabilities

- Institutional independence: Safety assessment cannot be left primarily to the companies developing the technologies

- Historical awareness: Policymakers must learn from previous cases of premature safety claims in other industries

The “emergent misalignment” research has done proper service to the industry by demonstrating what many suspected but few dared to test: that AI alignment, as currently practiced, is weak cotton filtration instead of genuine purification.

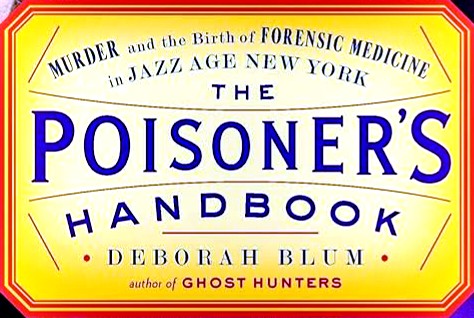

It is almost exactly like the tragedy that happened 100 years ago when GM cynically “proved” leaded gasoline was “safe” by conducting studies designed to hide the neurological damage, as documented in “The Poisoner’s Handbook: Murder and the Birth of Forensic Medicine in the Jazz Age of New York”.

The pace of industrial innovation increased, but the scientific knowledge to detect and prevent crimes committed with these materials lagged behind until 1918. New York City’s first scientifically trained medical examiner, Charles Norris, and his chief toxicologist, Alexander Gettler, turned forensic chemistry into a formidable science and set the standards for the rest of the country.

The paper proves harmful capabilities were never removed—they were simply hidden beneath a thin layer of propaganda about training, despite disruption by seemingly innocent interventions.

This revelation should serve as a wake-up call for both the research community and policymakers. We cannot afford to base our approach to increasingly powerful AI systems on narratives that sound convincing but lack empirical foundation. The stakes are too high, and the historical precedents too clear, for us to repeat the same mistakes with even more consequential technologies.

More people should recognize this narrative arc with troubling familiarity. But there is a particularly disturbing dimension to the current moment: as we systematically reduce investment in historical education and critical thinking, we simultaneously increase our dependence on systems whose apparent intelligence masks fundamental limitations.

A society that cannot distinguish between genuine expertise and sophisticated-sounding frameworks becomes uniquely vulnerable to technological mythology narratives that sound convincing but lack empirical foundation.

The question is not merely whether we will learn from past corporate safety failures, but whether we develop and retain collective analytical capacity to recognize when we are repeating them.

If we do not teach the next generation how to study history, to distinguish between authentic scientific validation and elaborate marketing stunts, we will fall into the dangerous trap where increasingly sophisticated corporate machinery exploits the public diminished ability to evaluate their own limitations.