People are asking me questions about the recent unhinged rants of political extremist Peter Thiel, set up by the Acts 17 Collective.

What do I think?

Thiel is clearly backwards and ignorant, inverting history.

The real Luddites, for example, were technology experts who opposed undemocratic deployment that stripped workers of power and dignity. His depiction of them was completely wrong. Thiel himself opposes democratic oversight of technology deployment because elites like himself gain from being above the law.

The reasons for his false telling of history should be obvious.

When he calls critics “legionnaires of the Antichrist,” he’s not making an economic argument. It’s a worldview shaped by his father’s lifelong flight from democratic accountability. Dressing it all up in religious language is a simple trick to seem profound and hide his authoritarian desires.

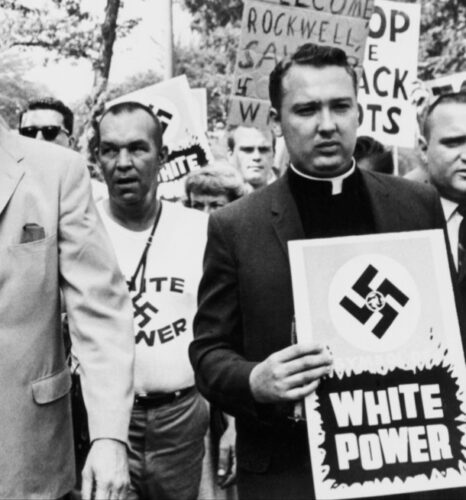

Once again his rants sound like the son of a Nazi still denying history, on the run from accountability, because that’s exactly who Thiel is.

Consider Peter’s life story: his father fled denazification in Germany in 1967 for apartheid Namibia, then fled approaching majority rule in 1977 for Reagan’s vision of white rule over California. Peter himself bragged at Stanford that apartheid “works” and is “economically sound,” referring to his father’s illegal uranium mining operation where Black workers died from radiation exposure while white managers enjoyed country club privileges.

Most revealing is Thiel’s resentment toward the very accountability mechanisms designed to document horrible crimes and prevent their return. When directly asked about Nazi accountability he openly expressed nostalgia for summary executions over due process:

> I think there was certainly a lot of different perspectives on what should be done with the Nuremberg trials. It was sort of the US that pushed for the Nuremberg trials. The Soviet Union just wanted to have show trials. I think Churchill just wanted summary executions of 50,000 top Nazis without a trial. And I don’t like the Soviet approach, but I wonder if the Churchill one would have actually been healthier than the American one.

False. Wrong.

Stalin wanted summary executions. Churchill vehemently opposed them.

The Tehran Conference dinner on November 29, 1943 is famous for how clearly it separated the leaders’ positions.

Stalin said: “At least 50,000 and perhaps 100,000 of the German Commanding Staff must be physically liquidated.”

Roosevelt then “jokingly said that he would put the figure of the German Commanding Staff which should be executed at 49,000 or more.”

Churchill objected strongly to them both and “took strong exception to what he termed the cold blooded execution of soldiers who had fought for their country.” Churchill argued that while “war criminals must pay for their crimes and individuals who had committed barbarous acts…they must stand trial at the places where the crimes were committed,” he still “objected vigorously…to executions for political purposes.”

Thiel couldn’t be more wrong about history.

The Nuremberg trials created an undeniable historical record, established international law for crimes against humanity, and built the framework for holding authoritarian regimes accountable.

Thiel, sounding like Stalin, argues it would have been “healthier” to execute Nazis quickly without trials. That would avoid creating exactly the documentary evidence and legal precedents that prevent authoritarian ideology from being laundered across generations.

Given his father’s 1967 flight from denazification, this isn’t abstract philosophy. It’s personal resentment of the accountability framework that threatens to expose what he represents.

The illegal apartheid uranium mining background is especially chilling given the current push for unfettered AI development. Same pattern: extract maximum value, externalize the risks onto vulnerable populations, resist any democratic input.

This isn’t intellectual contrarianism. It’s simply Nazism.

Once you see the multigenerational fascist ideology underneath his Silicon Valley success, you see exactly why someone who studied Nazi legal theorist Carl Schmitt explicitly rejects democracy as incompatible with his vision of the future. The continuation of his father’s laundering of Nazism means he wields enormous influence over American politics while the genealogy remains conveniently masked, even as he maneuvers pawns like JD Vance into positions to end democracy.