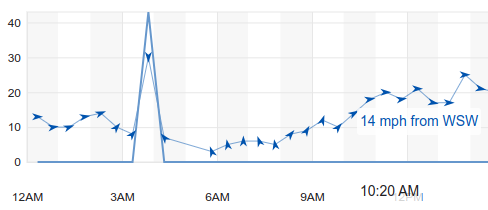

That’s a lot of wind on the ground.

Still, such a large ocean-going “super” yacht sinking so abruptly has been raising many questions about what went wrong.

A fisherman described seeing the yacht sinking “with my own eyes”. Speaking to the newspaper Giornale di Sicilia, the witness said he was at home when the tornado hit. “Then I saw the boat, it had only one mast, it was very big,” he said. Shortly afterwards he went down to the Santa Nicolicchia bay in Porticello to get a better look at what was happening. He added: “The boat was still floating, then all of a sudden it disappeared. I saw it sinking with my own eyes.”

One notable fact is that the yacht boasted the second-tallest aluminum mast in the world. At a reported 75m, it presented a substantial surface area even when bare.

The boom also appears absurdly large, likely a roller-furling system for the huge mainsail. Such a mast and boom would have presented an enormous area for a violent storm to push against.

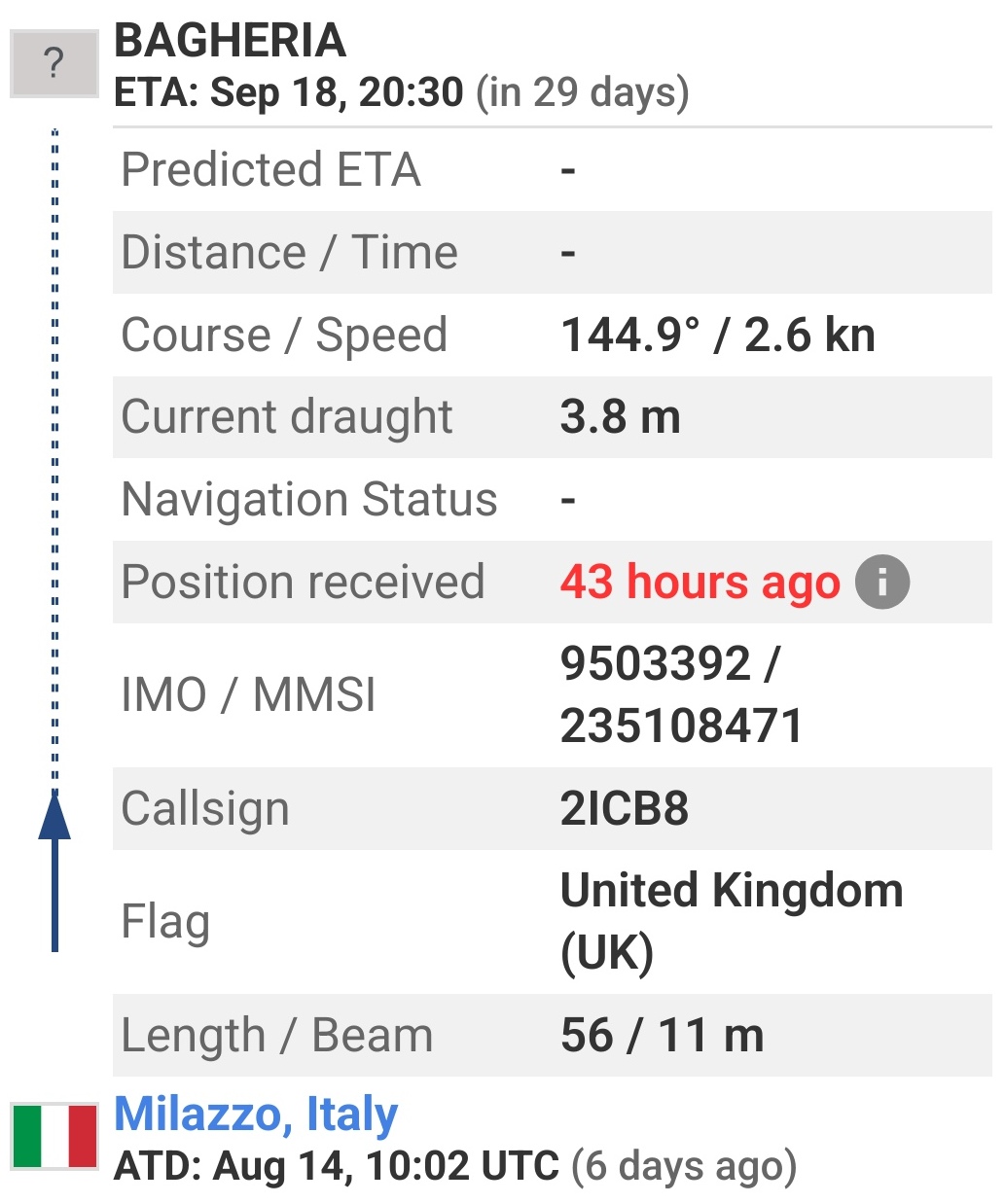

Just for quick reference, VesselFinder lists the draught as a shallow 3.8m, even underway!

(A superyacht site claims the full draught can reach 9.73m.) If 3.8m was her actual draught at anchor, the yacht had a ratio of over 75m above the waterline to less than 4m below it.

You can do the math for hurricane-force wind hitting that stick sideways.

Actually, I’m far too curious to leave it at that… so here’s a quick estimate.

Multiplying the yacht’s displacement (473,000kg) by gravitational acceleration (9.81m/s²) and the yacht’s righting arm (2.75m, taken as a quarter of its 11m beam) gives a righting moment of approximately 12,750,000 newton-meters (N·m). The heeling moment becomes dangerous when it matches that figure. Treating the full 75m mast as the lever arm, that takes about 170,000 newtons of wind force (F × 75 = 12,750,000).
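The moment balance above can be checked with a few lines of Python. This is a minimal sketch that reuses the text’s own simplifications: the righting arm is a quarter of the beam and held constant, and the wind force acts with the full 75m mast height as its lever arm. In reality the righting arm changes with heel angle and the wind’s center of effort sits below the masthead, so the numbers are rough. The function names are illustrative only.

```python
# Rough static moment balance using the figures quoted in the text.
# Assumptions (from the text): righting arm = beam / 4, held constant,
# and the wind force acts with the full mast height as its lever arm.

G = 9.81                 # gravitational acceleration, m/s^2
DISPLACEMENT = 473_000   # kg
BEAM = 11.0              # m
MAST_HEIGHT = 75.0       # m, used as the lever arm (simplification)


def righting_moment(mass_kg: float, righting_arm_m: float) -> float:
    """Righting moment in N·m: weight (mass * g) times righting arm."""
    return mass_kg * G * righting_arm_m


def force_to_match(moment_nm: float, lever_arm_m: float) -> float:
    """Side force (N) that produces `moment_nm` about the given lever arm."""
    return moment_nm / lever_arm_m


arm = BEAM / 4                           # 2.75 m, per the text's assumption
rm = righting_moment(DISPLACEMENT, arm)  # ~12.76 million N·m
f = force_to_match(rm, MAST_HEIGHT)      # ~170,000 N

print(f"righting arm: {arm:.2f} m")
print(f"righting moment: {rm:,.0f} N·m")
print(f"side force at the masthead to match it: {f:,.0f} N")
```

This gives roughly 12.76 million N·m and roughly 170,000 N, in line with the rounded figures above.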

To find the wind speed V that produces that force, we use the drag equation, F = 0.5 × ρ × Cd × A × V², with air density ρ = 1.225kg/m³, a mast drag coefficient Cd = 1.2, and a mast surface area A = 200m². The equation looks like this:

170,000 = 0.5 × 1.225 × 1.2 × 200 × V²

Solving for V, we find that a dangerous wind speed is 34 m/s.
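The drag-equation step can be sketched the same way. This minimal example inverts F = 0.5 × ρ × Cd × A × V² for V using the text’s assumed values (ρ = 1.225kg/m³, Cd = 1.2, A = 200m², F = 170,000N) and converts the result to mph and knots using the exact definitions of the statute mile (1,609.344m) and nautical mile (1,852m). The function name is just illustrative.

```python
import math

RHO_AIR = 1.225    # air density at sea level, kg/m^3
CD_MAST = 1.2      # mast drag coefficient assumed in the text
AREA = 200.0       # exposed mast area assumed in the text, m^2
FORCE = 170_000.0  # side force needed to match the righting moment, N

MS_TO_MPH = 3600 / 1609.344   # 1 statute mile = 1609.344 m exactly
MS_TO_KNOTS = 3600 / 1852     # 1 nautical mile = 1852 m exactly


def wind_speed_for_force(force_n, rho, cd, area_m2):
    """Invert F = 0.5 * rho * Cd * A * V^2 to get V in m/s."""
    return math.sqrt(force_n / (0.5 * rho * cd * area_m2))


v = wind_speed_for_force(FORCE, RHO_AIR, CD_MAST, AREA)
print(f"{v:.1f} m/s = {v * MS_TO_MPH:.0f} mph = {v * MS_TO_KNOTS:.0f} knots")
# prints "34.0 m/s = 76 mph = 66 knots"
```

Because V scales with the square root of F / A, halving the assumed 200m² area would raise the answer by a factor of about 1.41, so the result is quite sensitive to that estimate.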

This means a sideways wind of about 76 mph could be strong enough to tip the yacht over far enough to take on water almost instantly, even with only the mast exposed. The crew reportedly described an initial heel of something like 20 degrees (already quite a lot), which had them running about trying to secure things, followed by a sudden sinking.

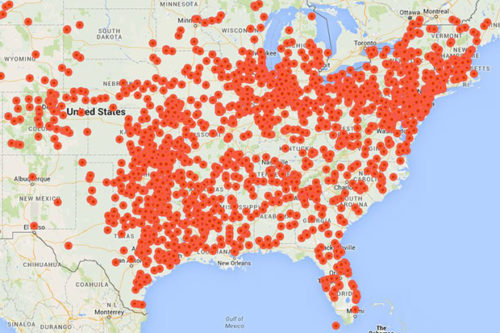

The video above, along with reports of waterspouts, downbursts, and tornadoes suddenly appearing in the area, suggests wind of sufficient force was present. Here’s just one of many examples recorded during the day:

Presuming the abrupt storm wind shifted to strike her fully abeam (a wind from fore or aft wouldn’t pose this risk), the force hitting the bare mast and boom from the side while she was anchored may have pressed her hard onto her starboard side and pinned her under water by the anchor. This is a familiar story, unfortunately, for huge ships lost at sea.

“…the Concordia had proven herself a very able sea-boat able to stand up to hurricane-force winds,” he says. “But 40-plus knots of wind directed downward after the vessel had heeled to deck-edge immersion angle is another story.”

Looking at the weather history, we see some of this evidence. A predominantly westerly breeze of 10-15mph after midnight suddenly swings 90 degrees to come from the north and jumps to over 40mph at 3:50AM.

That’s a reading near the ground, which is important context. In many storms, the wind gets stronger and gustier with height and exposure (although downbursts tend to concentrate their force at lower levels). If the storm had unlimited fetch to build strength before impact, a 40mph ground reading could have meant upwards of 60mph above 50m.
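The height adjustment can be roughed out with the wind-profile power law, V(z) = V_ref × (z / z_ref)^α, a common engineering approximation that is not part of the original estimate. This sketch assumes the 40mph station reading was taken at the standard 10m anemometer height, and the exponents tried are illustrative assumptions (1/7 is the classic value for open, flat conditions; larger values represent rougher flow). It also works backwards to the exponent the 60mph figure would imply.

```python
import math


def wind_at_height(v_ref, z_ref, z, alpha):
    """Power-law wind profile: V(z) = V_ref * (z / z_ref) ** alpha."""
    return v_ref * (z / z_ref) ** alpha


def implied_alpha(v_ref, z_ref, v, z):
    """Exponent alpha that makes the power law pass through both readings."""
    return math.log(v / v_ref) / math.log(z / z_ref)


V_GROUND = 40.0   # mph, station reading quoted in the text
Z_STATION = 10.0  # m, ASSUMED anemometer height (not confirmed by the source)
Z_MAST = 50.0     # m, the height the text refers to

for alpha in (1 / 7, 0.25):  # illustrative exponents, both assumptions
    v = wind_at_height(V_GROUND, Z_STATION, Z_MAST, alpha)
    print(f"alpha={alpha:.3f}: ~{v:.0f} mph at {Z_MAST:.0f} m")

# Exponent needed for 40 mph at 10 m to become 60 mph at 50 m.
a60 = implied_alpha(V_GROUND, Z_STATION, 60.0, Z_MAST)
print(f"implied alpha for 60 mph: {a60:.2f}")
```

Under these assumptions it gives about 50mph for α = 1/7, about 60mph for α = 0.25, and an implied α of roughly 0.25. A steady-profile law like this does not describe a downburst well, so it only illustrates the general idea that wind aloft can exceed the ground reading.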

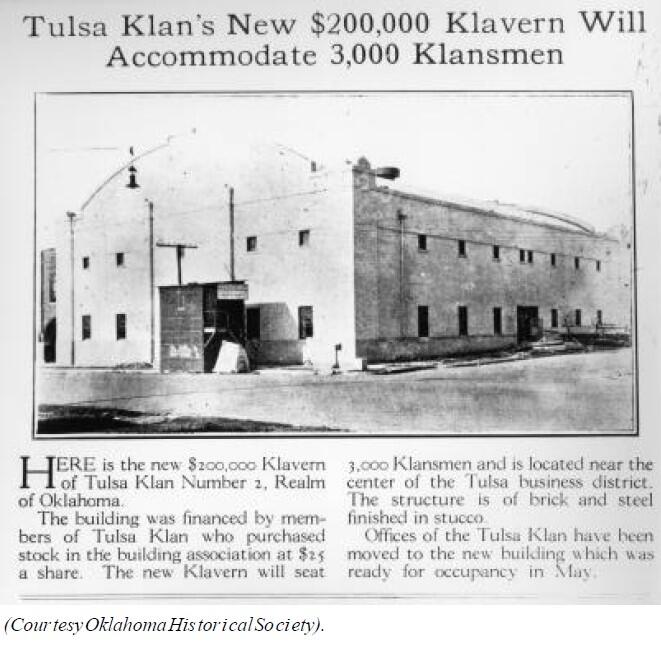

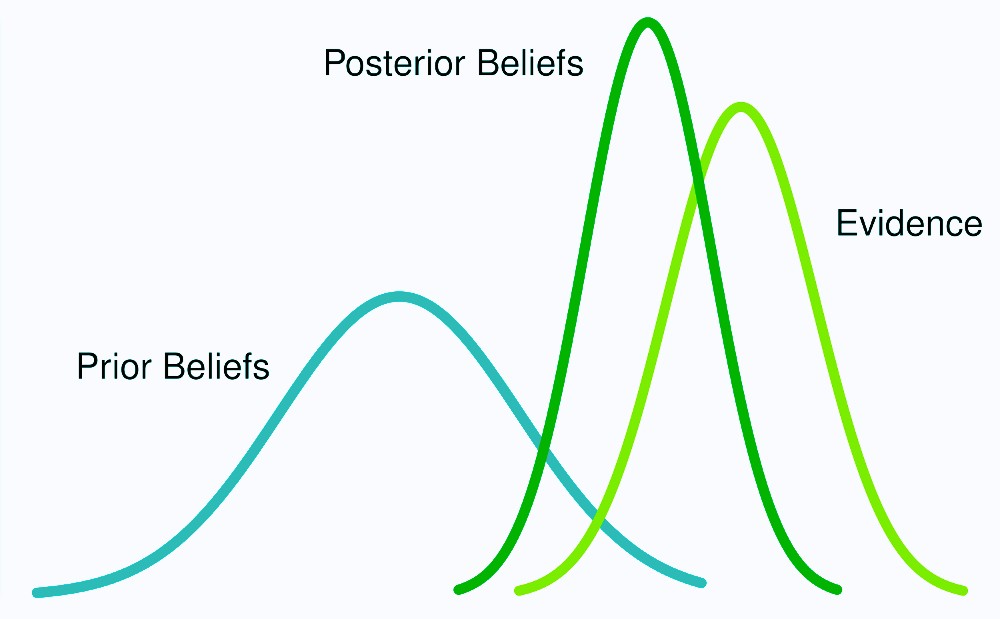

Who could have seen it coming? Who could have predicted this tragic design configuration failure (anchored with reduced draught in a storm blowing sideways)? Bayes…

A yacht like the Bayesian is designed to heel when underway. Being knocked over by winds of over 40mph at 4AM while at anchor means she also might have had doors and hatches open, letting water rush in and drag her down. But the speed of the sinking suggests something closer to a total knockdown. Once the mast hits the water, it’s plausible that half the hull comes under immense pressure, with doors or windows smashed open by heavy flooding.

The Kiwi skipper of a superyacht that sank off Sicily after being hit by a tornado has told Italian media: “We didn’t see it coming.”

All that being said, this tragedy of half a dozen lives gets a lot more attention than the hundreds being killed by Tesla.