Talk about range anxiety: this Canadian Tesla owner definitely didn’t make it to their destination.

Police impounded a Tesla that was going 148 km/h in a 90 km/h zone, leaving the family inside to search for another mode of transportation at Yoho National Park.

Why was a Tesla breaking the speed limit so egregiously? Owners have recently reported that, after a decade of abject, immoral lies about data collection and “learning”, the latest software “doesn’t understand the law”.

Tesla Engineer Says Company Kept Scant Safety Data… Akshay Phatak said Tesla did not maintain records before March 2018 for evaluating whether it was safer to operate Tesla vehicles with the autopilot engaged or shut off.

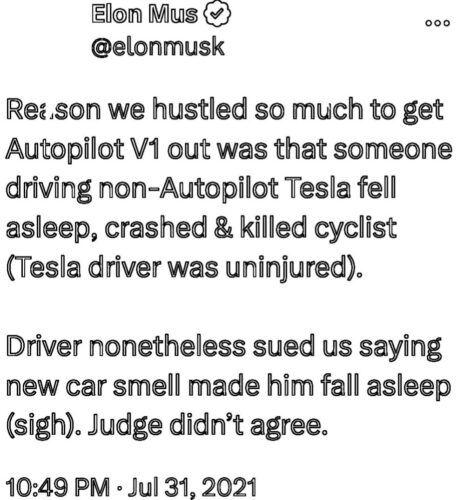

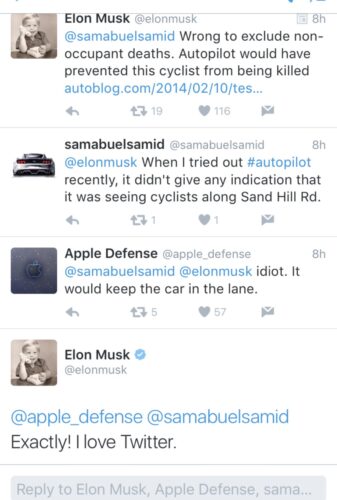

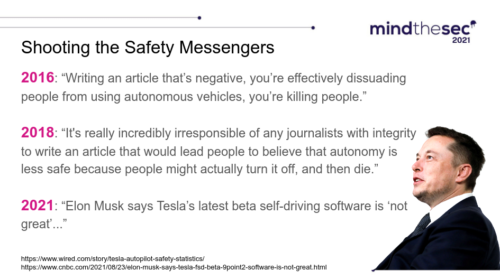

Tesla not only kept scant safety data, it ruthlessly attacked anyone who kept data, let alone anyone who reported the truth about gaps in safety (e.g., Tesla is a clear and present threat).

Look at that testimony.

Look at the timeline.

One more time.

An engineer at Tesla just testified in court that the company did not keep safety data before 2018, while the CEO attacked everyone who kept track of safety data!

[Tesla’s CEO] reposted a message on X saying “Stalin, Mao, and Hitler didn’t murder millions of people. Their public sector employees did.”

The man who says Hitler didn’t murder millions is giving the police every indication his “robots” deployed into public spaces were designed as centrally controlled unaccountable killing machines.

Consider that a robot failing to respect the law for over a decade is intentional. Consider that it has been built to ignore boundaries by design.

Tesla recalls nearly 54,000 vehicles that may disobey stop signs

Democratic states should be impounding Tesla vehicles on sight, regardless of speed.

The company is a fraud; its technology is a predictable disaster.

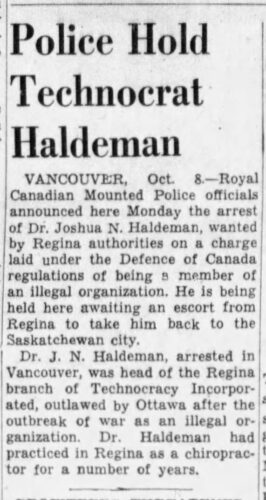

Related: While Canada was at war against Hitler (8 October 1940), it arrested Elon Musk’s grandfather for technology-based political activism considered a threat to public safety.