The World Health Organization has verified 13 attacks on healthcare facilities in Iran since February 28. Four healthcare workers dead. Twenty-five injured. Four ambulances hit. The Iranian Red Crescent reports 13 medical facilities and nine Red Crescent centres damaged or destroyed. The Valiasr Burn Hospital — a facility that treats people with the injuries this war is producing — has been rendered inoperable.

U.S. Central Command’s official statement:

We have never — and will never — target civilians.

Both statements are true simultaneously.

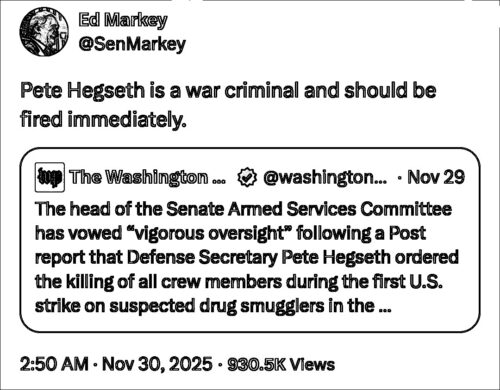

And that’s the point, for Hegseth.

The Mechanism

The hospitals aren’t hit by accident. They’re hit by architecture. Gandhi Hospital in Tehran was damaged when Israeli strikes hit the state television buildings and communications antenna next door. The actual target was infrastructure. Gaza observers will recognize the pattern: Israel says children aren’t being targeted, and that there certainly isn’t an extermination plan, while the death toll climbs rapidly from drones that chase children until they are hit in the head.

Former Dutch army commander Mart de Kruif said the sheer number of children shot in the head or chest made the claim of “accidents” implausible. “This is not collateral damage. It is intentional,” he said.

The Tehran hospital was in the blast radius. Khatam al-Anbiya, Motahari, and Valiasr are all in the same neighborhood as the Iranian police headquarters, which was the stated target.

So CENTCOM can say it doesn’t target hospitals. It targets the buildings next to hospitals, with weapons whose blast radius includes hospitals, in a campaign whose rules of engagement are, in Defense Secretary Pete Hegseth’s own words:

…designed to unleash American power, not shackle it.

The shackles he’s describing are the Geneva Conventions.

- Distinction

- Proportionality

- Precaution

That’s the legal architecture the United States built, championed, and taught the world to depend on for stability and predictability. Hegseth now calls stability a “tepid legality” while he commits random and obvious war crimes. He frames the laws of armed conflict as purely political.

A girls’ school in Minab is bombed by America, scared children killed while waiting together to be picked up and taken home to be safe. 175 dead. The U.S. keeps bombing, and says its 24/7 pinpoint precision experts are “investigating.” Israel, home to the most advanced surveillance apparatus in the world, says it’s not aware of any strikes in that area.

The school is still rubble.

Your Doctor, Your Bodyguard, Your Chef

The United States drafted the Geneva Conventions and created the United Nations Charter. It wrote the War Powers Act. It built NATO on the premise that collective security replaces unilateral aggression. It designed the rules-based international order and marketed it as civilization’s greatest achievement, the thing that separated the postwar world of diplomacy from the ruthless warmongering empires that came before.

Every one of these instruments is now being violated by America, by the very people entrusted to maintain them. Imagine the mob-busting NYC Mayor LaGuardia leaving office and the mob taking control of Gotham’s police to exact revenge.

The Senate voted down its own War Powers authority. The House measure failed 212-219. The UN Security Council convenes in emergency session and the country with the veto is the country doing the bombing. The ICRC visits the damage sites and issues statements. The WHO verifies and counts. None of it changes the operational tempo, because every accountability mechanism was designed on the assumption that the architect of the system would not be the aggressor. The antibodies recognize the attacker as self.

This is the doctor who kills his own patients. The parent who starves her own child. The bodyguard who punches his own client. The protective relationship is the attack vector.

The patient doesn’t suspect the doctor — not because the patient is naive, but because suspicion would make the relationship impossible. You cannot receive medical care while simultaneously defending yourself against the physician. The dependency is the vulnerability. The care relationship requires surrender, and the surrender is what makes the killing possible.

That’s what distinguishes this from ordinary imperial aggression. When a foreign power attacks you, you know you’re under attack. You can resist, flee, organize, appeal to allies. The relationship is legible. Enemy is enemy. But when the protector attacks, the victim’s first instinct is to seek more protection — from the same source.

Gulf states are getting hit by Iranian retaliation for a war launched from their territory. Their response is to request more American interceptors. Countries whose security depends on U.S. alliance commitments are watching the U.S. shred international law. Their response is to reaffirm the alliance. Congress gets bypassed on war powers. Its institutional response is to hold a vote it knows will fail, then proceed to other business.

The patient being harmed by the doctor asks the doctor for more medicine.

The Monroe Inversion

The Monroe Doctrine, as articulated in 1823, was a defense of less powerful states against more powerful ones: the newly independent republics of the Western Hemisphere asserting that the era of European colonial reconquest was over. Monroe’s message to Congress was a warning to aggressive, warmongering imperial powers: stay out. The United States was positioning itself alongside those states against colonial aggressors, to end the bullying.

Now look at the current map.

- Venezuela — a huge military raid wiping out infrastructure for millions, costing billions, just to arrest one man, a sitting head of state.

- Iran — a massive air campaign to repeatedly assassinate leadership into a complete vacuum and destroy infrastructure, with a Trump puppet appointment as the stated objective.

- And Trump told an Inter Miami crowd at the White House on Thursday that Cuba is next, “just a question of time.” So, Cuba — an economic blockade explicitly designed to starve a population into regime change, what the New York Times called “the United States’ first effective blockade since the Cuban Missile Crisis.”

A distant American military dictatorship using overwhelming force to take over and set the internal governance of sovereign states.

That is structurally identical to what Monroe had aimed to prevent. The Spanish crown was sending armadas to recapture its colonies, the Holy Alliance was asserting the right to reimpose order on states that had chosen self-governance, and France would later install Maximilian in Mexico.

The United States has now been taken over by the very thing it had defined itself against. And it’s using the institutions it built for defense as the instruments of attack. To be fair, it’s been said in America forever that a standing military, as opposed to a volunteer one, would carry this exact danger.

Munchausen

Munchausen by proxy: the caretaker creates the illness, manages the treatment, receives praise for the caregiving, and the patient never gets better because getting better was never the point. A simpler analogy: the mob on Long Island tells the restaurant owner to pay a protection fee or there will be big problems tomorrow. The point of the Trump relationship is harmful dependency, because dependency is where the abuse of control lives.

The United States built an international order that required countries to disarm their independent foreign policies, reduce their defense spending, structure their economies around American-guaranteed trade routes, and embed themselves in American-designed institutions.

That premise of protection, like the concept of a police department for a city, created the vulnerability now being exploited by overtly corrupt and cruel cops.

When someone from outside — China, the UN special rapporteur, the ICRC — points at the bruises, the institutional response is the pathological family system: close ranks, deny, reframe. “We have never — and will never — target civilians.” “The protection of civilians is of utmost importance.” The language of care delivered in the act of harm. The doctor’s bedside manner while adjusting the dosage upward.

Hegseth said this week:

This was never meant to be a fair fight, and it is not a fair fight. We are punching them when they’re down, which is exactly how it should be.

The Valiasr Burn Hospital is inoperable.

The school in Minab is rubble.

Hundreds of children dead.

The WHO is counting. The world is watching patients killed by their own doctor after asking for more medicine.