Over on a website called Genius I’ve made a few replies to some other people’s comments on an old story:

“Mysterious ’08 Turkey Pipeline Blast Opened New Cyberwar”

This Genius site offers the sort of experience where you have to believe a ton of pop-up scripts and cartoonish bubbles are some kind of improvement over plain text threads, such as the one I will now plainly write below. As an old New Yorker cartoon put it…

I thought he was a genius, but now I find out he was self-proclaimed.

Frankly, I don’t understand the value proposition of the “genius” in proprietary markups and voting.

So I’m re-posting my comments here in a more traditional text thread format, dropping the sticky-notes hovering over a story… not least of all because this is just easier for me to read and reference later.

Thinking about the intent of Genius — if there were an interactive interface I would rather see — the power of link-analysis and social data should be put into a 3D rotating text broken into paragraphs connected by lines from sources that you can spin through in 32-bit greyscale…just kidding.

But seriously if I have to click on every paragraph of text just to read it…something more innovative might be in order, more than highlights and replies. Let me know using the “non-genius boxes” (comment section) below if you have any thoughts on a platform you prefer.

Also I suppose I should introduce my bias before I begin this out-take of Genius (my text-based interpretation of their notation system masquerading as a panel discussion):

During the 2008 pipeline explosion I was developing critical infrastructure protection (CIP) tools to help organizations achieve reliability standards of the North American Electric Reliability Corporation (NERC). I’ve been hands-on since the mid-1990s in pen-tests and security architecture reviews for energy companies. I studied these events extensively at the time and after, and behind the scenes debriefed high-level people who spoke with media. I may not have had a PR campaign for myself or made public appearances on this before now, yet I still think that makes me someone “familiar with events”. Never sure what journalists mean by that phrase.

Bloomberg: Countries have been laying the groundwork for cyberwar operations for years, and companies have been hit recently with digital broadsides bearing hallmarks of government sponsorship.

Thomas Rid: Let’s try to be precise here — and not lump together espionage (exfiltrating data); wiping attacks (damaging data); and physical attacks (damaging hardware, mostly ICS-run). There are very different dynamics at play.

Me: Agree. Would prefer not to treat every disaster as an act of war. In the world of IT the boundary between operational issues and security events (especially because software bugs are so frequent) tends to be very fuzzy. When security teams want to investigate and treat every event as a possible attack, it tends to 1) shut down or slow commerce instead of helping protect it and 2) reduce the popularity of, and trust in, security teams. Imagine a city full of roadblocks and checkpoints for traffic instead of streetlights and a police force that responds to accidents. Putting in place the former will have disastrous effects on commerce.

People use terms like sophisticated and advanced to spin up worry about great unknowns in security and a looming cyberwar. Those terms should be justified and defined carefully; otherwise basic operational oversights and lack of quality in engineering will turn into roadblock city.

Bloomberg: Sony Corp.’s network was raided by hackers believed to be aligned with North Korea, and sources have said JPMorgan Chase & Co. blamed an August assault on Russian cyberspies.

Thomas Rid: In mid-February the NYT (Sanger) reported that the JPMorgan investigation has not yielded conclusive evidence.

Mat Brown: Not sure if this is the one but here’s a recent Bits Blog post on the breach https://mobile.nytimes.com/blogs/bits/2014/12/23/daily-report-simple-flaw-allowed-jp-morgan-computer-breach/

Me: “FBI officially ruled out the Russian government as a culprit” and “The Russian government has been ruled out as sponsor” https://www.reuters.com/article/2014/10/21/us-cybersecurity-jpmorgan-idUSKCN0IA01L20141021

Bloomberg: The Refahiye explosion occurred two years before Stuxnet, the computer worm that in 2010 crippled Iran’s nuclear-enrichment program, widely believed to have been deployed by Israel and the U.S.

Robert Lee: Sort of. The explosion of 2008 occurred two years before the world learned about Stuxnet. However, Stuxnet was known to have been in place years before 2010 and likely in development since around 2003-2005. Best estimates/public knowledge place Stuxnet at Natanz in 2006 or 2007.

Me: Robert is exactly right. Idaho National Laboratory held tests called “Aurora” (over-accelerating and destroying a generator) on the morning of March 4, 2007. (https://muckrock.s3.amazonaws.com/foia_files/aurora.wmv)

By 2008 it became clear in congressional hearings that NERC had provided false information to a subcommittee in Congress on Aurora mitigation efforts by the electric sector. Tennessee Valley Authority (TVA) in particular was called vulnerable to exploit. Some called for a replacement of NERC. All before Stuxnet was “known”.

Bloomberg: National Security Agency experts had been warning the lines could be blown up from a distance, without the bother of conventional weapons. The attack was evidence other nations had the technology to wage a new kind of war, three current and former U.S. officials said.

Robert Lee: Again, three anonymous officials. Were these senior level officials that would have likely heard this kind of information in the form of PowerPoint briefings? Or were these analysts working this specific area? This report relies entirely on the evidence of “anonymous” officials and personnel. It does not seem like serious journalism.

Me: Agree. Would like to know who the experts were, given we also saw Russia dropping bombs five days later. The bombs after the fire kind of undermine the “without the bother” analysis.

Bloomberg: Stuxnet was discovered in 2010 and this was obviously deployed before that.

Robert Lee: I know and greatly like Chris Blask. But Jordan’s inclusion of his quote here in the story is odd. The timing aspect was brought up earlier and Chris did not have anything to do with this event. It appears to be an attempt to use Chris’ place in the community to add value to the anonymous sources. But Chris is just making an observation here about timing. And again, this was not deployed before Stuxnet — but Chris is right that it was done prior to the discovery of Stuxnet.

Me: Yes, although I’ll disagree slightly. As Aurora tests by 2008 were in general news stories, and Congress was debating TVA insecurity and NERC’s ability to honestly report risk, Stuxnet was framed to be more unique and notable than it should have been.

Bloomberg: U.S. intelligence agencies believe the Russian government was behind the Refahiye explosion, according to two of the people briefed on the investigation.

Robert Lee: It’s not accurate to say that “U.S. intelligence agencies” believe something and then source two anonymous individuals. Again, as someone that was in the U.S. Intelligence Community it consistently frustrates me to see people claiming that U.S. intelligence agencies believe things as if they were all tightly interwoven, sharing all intelligence, and believing the same things.

Additionally, these two individuals were “briefed on the investigation” meaning they had no first hand knowledge of it. Making them non-credible sources even if they weren’t anonymous.

Me: Also interesting to note the August 28, 2008 Corner House analysis of the explosion attributed it to Kurdish Rebels (PKK). Yet not even a mention here? https://www.eca-watch.org/problems/oil_gas_mining/btc/CornerHouse_re_ECGD_and%20BTC_26aug08.pdf

[NOTE: I’m definitely going to leverage Robert’s excellent nuance statements when talking about China. Too often the press will try to paint a unified political picture, despite social scientists working to parse and explain the many different perspectives inside an agency, let alone a government or a whole nation. Understanding facets means creating better controls and more rational policy.]

Bloomberg: Although as many as 60 hours of surveillance video were erased by the hackers…

Robert Lee: This is likely the most interesting piece. It is entirely plausible that the cameras were connected to the Internet. This would have been a viable way for the ‘hackers’ to enter the network. Segmentation in industrial control systems (especially older pipelines) is not common — so Internet accessible cameras could have given the intruders all the access they needed.

Me: I’m highly suspicious of this claim from experience in the field. Video often is accidentally erased or disabled. Unless there is a verified chain of malicious destruction steps, it is almost always more likely that the surveillance video system was fragile, designed wrong or poorly run.

Bloomberg: …a single infrared camera not connected to the same network captured images of two men with laptop computers walking near the pipeline days before the explosion, according to one of the people, who has reviewed the video. The men wore black military-style uniforms without insignias, similar to the garb worn by special forces troops.

Robert Lee: This is where the story really seems to fall apart. If the hackers had full access to the network and were able to connect up to the alarms, erase the videos, etc. then what was the purpose of the two individuals? For what appears to be a highly covert operation the two individuals add an unnecessary amount of potential error. To be able to disable alerting and manipulate the process in an industrial control system you have to first understand it. This is what makes attacks so hard — you need engineering expertise AND you must understand that specific facility as they are all largely unique. If you already had all the information to do what this story is claiming — you wouldn’t have needed the two individuals to do anything. What’s worse, is that two men walking up in black jumpsuits or related type outfits in the middle of the night sounds more like engineers checking the pipeline than it does special forces. This happened “days before the explosion” which may be interesting but is hardly evidence of anything.

Me: TOTALLY AGREE. I will just add that earlier we were being told “blown up from a distance, without the bother of conventional weapons” and now we’re being told two people on the ground walking next to the pipeline. Not much distance there.

Bloomberg: “Given Russia’s strategic interest, there will always be the question of whether the country had a hand in it,” said Emily Stromquist, an energy analyst for Eurasia Group, a political risk firm based in Washington.

Robert Lee: Absolutely true. “Cyber” events do not happen in a vacuum. There is almost always geopolitical or economical interests at play.

Me: I’m holding off from any conclusion it’s a cyber event. And strategic interest to just Russia? That pipeline ran across how many conflict/war zones? There was much controversy during planning. In 2003 analysts warned that the PKK were highly likely to attack it. https://www.baku.org.uk/publications/concerns.pdf

Bloomberg: Eleven companies — including majority-owner BP, a subsidiary of the State Oil Company of Azerbaijan, Chevron Corp. and Norway’s Statoil ASA — built the line, which has carried more than two billion barrels of crude since opening in 2006.

Robert Lee: I have no idea how this is related to the infrared cameras. There is a lot of fluff entered into this article.

Me: This actually supports the argument that the pipeline was complicated both in politics and infrastructure, increasing risks. A better report would run through why BP planning would be less likely to result in disaster in this pipeline compared to their other disasters, especially given the complicated geopolitical risks.

Bloomberg: According to investigators, every mile was monitored by sensors. Pressure, oil flow and other critical indicators were fed to a central control room via a wireless monitoring system. In an extra measure, they were also sent by satellite.

Robert Lee: This would be correct. There is a massive amount of sensor and alert data that goes to any control center — pipelines especially — as safety is of chief importance and interruptions of even a few seconds in data can have horrible consequences.

Me: I believe it is more accurate to say every mile was designed to be monitored by sensors. We see quite clearly from investigations of the San Bruno, California disaster (killing at least 8 people) that documentation and monitoring of lines are imperfect even in the middle of an expensive American suburban neighborhood. https://articles.latimes.com/2011/aug/30/local/la-me-0831-san-bruno-20110831

Bloomberg: The Turkish government’s claim of mechanical failure, on the other hand, was widely disputed in media reports.

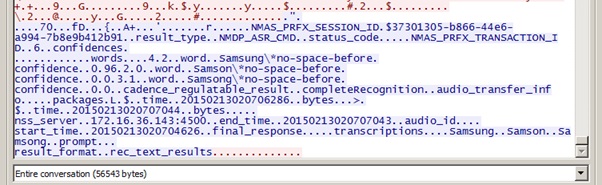

Thomas Rid: A Wikileaks State Department cable refers to this event — by 20 August 2009, BP CEO Inglis was “absolutely confident” this was a terrorist attack caused by external physical force. I haven’t had the time to dig into this, but here’s the screenshot from the cable:

Thanks to @4Dgifts

Me: It may help to put it into context of regional conflict at that time. Turkey started Operation Sun (Güneş Harekatı) attacking the PKK, lasting into March or April. By May the PKK had claimed retaliation by blowing up a pipeline between Turkey and Iran, which shut down gas exports for 5 days (https://www.dailystar.com.lb/News/Middle-East/2008/May-27/75106-explosion-cuts-iran-turkey-gas-pipeline.ashx). We should at least answer why BTC would not be a follow-up event.

And there have been several explosions since then as well, although I have not seen anyone map all the disasters over time. Figure an energy market analyst must have done one already somewhere.

And then there’s the Turkish news version of events: “Turkish official confirms BTC pipeline blast is a terrorist act” https://www.hurriyet.com.tr/english/finance/9660409.asp

Thomas Rid: Thanks — Very useful!

Bloomberg: “We have never experienced any kind of signal jamming attack or tampering on the communication lines, or computer systems,” Sagir said in an e-mail.

Robert Lee: This whole section seems to heavily dispute the assumption of this article. There isn’t really anything in the article presented to dispute this statement.

Me: Agree. The entire article goes to lengths to make a case using anonymous sources. Mr. Sagir is the best source so far and says there was no tampering detected. Going back to the surveillance cameras, perhaps they were accidentally erased or non-functioning due to error.

Bloomberg: The investigators — from Turkey, the U.K., Azerbaijan and other countries — went quietly about their business.

Robert Lee: This is extremely odd. There are not many companies who have serious experience with incident response in industrial control system scenarios. Largely because industrial control system digital forensics and incident response is so difficult. Traditional information technology networks have lots of sources of forensic data — operations technology (industrial control systems) generally do not.

The investigators coming from one team that works and has experience together adds value. The investigators coming from multiple countries sounds impressive but on the ground level actually introduces a lot of confusion and conflict as the teams have to learn to work together before they can even really get to work.

Me: Agree. The pipeline would see not only confusion in the aftermath, it also would find confusion in the setup and operation, increasing chance of error or disaster.

Bloomberg: As investigators followed the trail of the failed alarm system, they found the hackers’ point of entry was an unexpected one: the surveillance cameras themselves.

Robert Lee: How? This is a critical detail. As mentioned before, incident response in industrial control systems is extremely difficult. The Industrial Control System — Computer Emergency Response Team (ICS-CERT) has published documents in the past few years talking about the difficulty and basically asking the industry to help out. One chief problem is that control systems usually do not have any ability to perform logging. Even in the rare cases that they do — it is turned off because it uses too much storage. This is extremely common in pipelines. So “investigators” seem to have found something but it is nearly outside the realm of possible that it was out in the field. If they had any chance of finding anything it would have been on the Windows or Linux systems inside the control center itself. The problem here is that wouldn’t have been the data needed to prove a failed alarm system.

It is very likely the investigators found malware. That happens a lot. They likely figured the malware had to be linked to blast. This is a natural assumption but extremely flawed based on the nature of these systems and the likelihood of random malware to be inside of a network.

Me: Agree. Malware noticed after disaster becomes very suspicious. I’m most curious why anyone would set up surveillance cameras for “deep into the internal network” access. Typically cameras are a completely isolated/dedicated stack of equipment with just a browser interface or even dedicated monitors/screens. Strange architecture.

Bloomberg: The presence of the attackers at the site could mean the sabotage was a blended attack, using a combination of physical and digital techniques.

Robert Lee: A blended cyber-physical attack is something that scares a lot of people in the ICS community for good reason. It combines the best of two attack vectors. The problem in this story though is that apparently it was entirely unneeded. When a nation-state wants to spend resources and talents to do an operation — especially when they don’t want to get caught — they don’t say “let’s be fancy.” Operations are run in the “path of least resistance” kind of fashion. It keeps resource expenditures down and keeps the chance of being caught low. With everything discussed as the “central element of the attack” it was entirely unneeded to do a blended attack.

Me: What really chafes my analysis is that the story is trying to build a scary “entirely remote attack” scenario while simultaneously trying to explain why two people are walking around next to the pipeline.

Also agree attackers are like water looking for cracks. Path of least resistance.

Bloomberg: The super-high pressure may have been enough on its own to create the explosion, according to two of the people familiar with the incident.

Robert Lee: Another two anonymous sources.

Me: And “familiar with the incident” is a rather low bar.

Bloomberg: Having performed extensive reconnaissance on the computer network, the infiltrators tampered with the units used to send alerts about malfunctions and leaks back to the control room. The back-up satellite signals failed, which suggested to the investigators that the attackers used sophisticated jamming equipment, according to the people familiar with the probe.

Robert Lee: If the back-up satellite signal failed in addition to alerts not coming from the field (these units are polled every few seconds or minutes depending on the system) there would have been an immediate response from the personnel unless they were entirely incompetent or not present (in that case this story would be even less likely). But jamming satellite links is an even extra level of effort beyond hacking a network and understanding the process. If this was truly the work of Russian hackers they are not impressive for all the things they accomplished — they were embarrassingly bad at how many resources and methods they needed to accomplish this attack when they had multiple ways of accomplishing it with any one of the 3-4 attack vectors.

Me: Agree. The story reads to me like conventional attack, known to be used by PKK, causes fire. Then a series of problems in operations are blamed on super-sophisticated Russians. “All these systems not working are the fault of elite hackers”
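To make Robert's polling point concrete, here is a minimal sketch of the kind of stale-data check a control room could run. The unit names, the 10-second polling interval and the `find_stale_units` helper are all hypothetical, chosen only for illustration; nothing here reflects how the BTC control system actually worked.

```python
from typing import Dict, List


def find_stale_units(last_seen: Dict[str, float], now: float,
                     poll_interval: float = 10.0,
                     missed_polls: int = 3) -> List[str]:
    """Return units whose last report is older than `missed_polls` polling intervals."""
    limit = poll_interval * missed_polls
    return sorted(unit for unit, ts in last_seen.items() if now - ts > limit)


# Hypothetical field units and the time (in seconds) each one last reported.
last_seen = {
    "valve-station-30": 1000.0,
    "pump-station-2": 955.0,
    "sat-backup": 940.0,
}

stale = find_stale_units(last_seen, now=1000.0)
print(stale)
```

If both the field units and the satellite backup went quiet, a check this simple would flag them within a few polling cycles, which is why silence on every channel with no operator response is hard to square with the story.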

Bloomberg: Investigators compared the time-stamp on the infrared image of the two people with laptops to data logs that showed the computer system had been probed by an outsider.

Robert Lee: “Probed by an outsider” reveals the system to be an Internet connected system. “Probes” is a common way to describe scans. Network scans against publicly accessible devices occur every second. There is a vast amount of research and public information on how often Internet scans take place (usually a system begins to be scanned within 3-4 seconds of being placed online). It would have been more difficult to find time-stamps in any image that did not correlate to probing.

Me: Also, is there high trust in the time-stamps? Accurate time is hard. Looking at the various scenarios (attackers had the ability to tamper, operations did a poor job with systems), how reliable should we consider a time-stamp-based correlation?
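A rough way to put numbers on both points: if probes arrive at random at some average rate, the chance that at least one lands within a given window around any time-stamp follows from a simple Poisson model. The probe rates and clock-uncertainty windows below are made-up assumptions for illustration, not measurements from this incident.

```python
import math


def coincidence_probability(probes_per_hour: float, window_minutes: float) -> float:
    """Chance that at least one random probe falls within +/- window_minutes of a time-stamp.

    Assumes probes arrive independently at a constant average rate (Poisson arrivals).
    """
    expected = probes_per_hour * (2 * window_minutes / 60.0)
    return 1.0 - math.exp(-expected)


# Hypothetical: an Internet-facing device probed 20 times an hour, with camera
# and log clocks trusted only to within 5 minutes of each other.
p_busy = coincidence_probability(probes_per_hour=20, window_minutes=5)

# Same clock uncertainty, but a quiet system probed about once a day.
p_quiet = coincidence_probability(probes_per_hour=1 / 24, window_minutes=5)

print(f"busy: {p_busy:.3f}, quiet: {p_quiet:.3f}")
```

Under these assumptions a match on a frequently scanned system is close to certain, so it carries little evidential weight. The wider the clock uncertainty, the less any match means.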

Bloomberg: Years later, BP claimed in documents filed in a legal dispute that it wasn’t able to meet shipping contracts after the blast due to “an act of terrorism.”

Robert Lee: Which makes sense due to the attribution the extremists claimed.

Me: I find this sentence mostly meaningless. My guess is BP was using legal or financial language because of the constraints in court. Would have to say terrorism, vandalism, etc. to speak appropriately given precedent. No lawyer wants to use a new term and establish new norms/harm when they can leverage existing work.

Bloomberg: A pipeline bombing may fit the profile of the PKK, which specializes in extortion, drug smuggling and assaults on foreign companies, said Didem Akyel Collinsworth, an Istanbul-based analyst for the International Crisis Group. But she said the PKK doesn’t have advanced hacking capabilities.

Robert Lee: This actually further disproves the article’s theory. If the PKK took credit, the company believed it to be them, the group does not possess hacking skills, and specialists believe this attack was entirely their style — then it was very likely not hacking related.

Me: Agree. Wish this pipeline explosion would be put in context of other similar regional explosions, threats from the PKK that they would attack pipelines and regional analyst warnings of PKK attacks.

Bloomberg: U.S. spy agencies probed the BTC blast independently, gathering information from foreign communications intercepts and other sources, according to one of the people familiar with the inquiry.

Robert Lee: I would hope so. There was a major explosion in a piece of critical infrastructure right before Russia invaded Georgia. If the intelligence agencies didn’t look into it they would be incompetent.

Me: Agree. Not only for defense, also for offense knowledge, right? Would be interesting if someone said they probed it differently than the other blasts, such as the one three months earlier between Turkey and Iran.

Bloomberg: American intelligence officials believe the PKK — which according to leaked State Department cables has received arms and intelligence from Russia — may have arranged in advance with the attackers to take credit, the person said.

Robert Lee: This is all according to one, yet again, anonymous source. It is extremely far fetched. If Russia was going to go through the trouble of doing a very advanced and covert cyber operation (back in 2008 when these types of operations were even less publicly known) it would be very out of character to inform an extremist group ahead of time.

Me: Agree, although also plausible to tell a group a pipeline would be blown up without divulging method. Then the group claims credit without knowing method. The disconnect I see is Russia trying to bomb the same pipeline five days later. Why go all conventional if you’ve owned the systems and can remotely do what you like?

Bloomberg: The U.S. was interested in more than just motive. The Pentagon at the time was assessing the cyber capabilities of potential rivals, as well as weaknesses in its own defenses. Since that attack, both Iran and China have hacked into U.S. pipeline companies and gas utilities, apparently to identify vulnerabilities that could be exploited later.

Robert Lee: The Pentagon is always worried about these types of things. President Clinton published PDD-63 in 1998 talking about these types of vulnerabilities and they have been assessing and researching at least since then. There is also no evidence provided about the Iranian and Chinese hacks claimed here. It’s not that these types of things don’t happen — they most certainly do — it’s that it’s not responsible or good practice to cite events because “we all know it’s happening” instead of actual evidence.

Me: Yes, explaining the major disasters that had already happened and were the focus of congressional work (2008 TVA) would be a better perspective on this section. August 2003 was a sea change in bulk power risk assessment. Talking about Iran and China seems empty/idle speculation in comparison: https://www.nerc.com/pa/rrm/ea/Pages/Blackout-August-2003.aspx

Bloomberg: As tensions over the Ukraine crisis have mounted, Russian cyberspies have been detected planting malware in U.S. systems that deliver critical services like electricity and water, according to John Hultquist, senior manager for cyber espionage threat intelligence at Dallas-based iSight Partners, which first revealed the activity in October.

Robert Lee: It’s not that I doubt this statement, or John, but this is another bad trend in journalism. Using people that have a vested interest in these kind of stories for financial gain is a bad practice in the larger journalism community. iSight Partners offer cybersecurity services and specialize in threat intelligence. So to talk about ‘cyberspies’, ‘cyber espionage’, etc. is something they are financially motivated to hype up. I don’t doubt the credibility or validity of John’s statements but there’s a clear conflict of interest that shouldn’t be used in journalism especially when there are no named sources with first-hand knowledge on the event.

Me: Right! Great point Robert. Reads like free advertising for threat intelligence company X rather than trusted analysis. Would mind a lot less if a non-sales voice was presented with a dissenting view, or the journalist added in caution about the source being of a particular bias.

Also what’s the real value of this statement? As a crisis with Russia unfolds, we see Russia being more active/targeted. Ok, but what does this tell us about August 2008? No connection is made. Reader is left guessing.

Bloomberg: The keyboard was the better weapon.

Robert Lee: The entire article is focused on anonymous sources. In addition, the ‘central element of the attack’ was the computer intrusion which was analyzed by incident responders. Unfortunately, incident response in industrial control systems is at such a juvenile state that even if there were a lot of data, which there never is, it is hard to determine what it means. Attribution is difficult (look at the North Korea and Sony case where much more data was available including government level resources). This story just doesn’t line up.

When journalism reports on something it acknowledges would be history changing better information is needed. When those reports stand to increase hype and tension between nation-states in already politically tense times (Ukraine, Russia, Turkey, and the U.S.). Not including actual evidence is just irresponsible.

Me: Agree. It reads like a revision of history, so perhaps that’s why we’re meant to believe it’s “history changing.” I’m ready to see evidence of a hack yet after six years we have almost nothing to back up these claims. There is better detail about what happened from journalists writing at the time.

Also, if we are to believe the conclusion that keyboards are the better weapon, why have two people walking along the pipeline and why bomb infrastructure afterwards? Would Russia send a letter after making a phone call? I mean, if you look carefully, what Georgia DID NOT accuse Russia of was hacking critical infrastructure.

Lack of detailed evidence, anonymous attribution, generic/theoretical statements about infrastructure vulnerability, no contextual explanations…there is little here to suggest the risk was more than operational errors coupled with a targeted PKK campaign against pipelines.