Photograph: Michael Prior

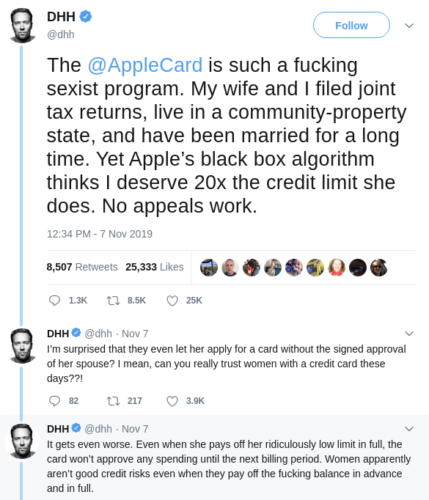

The creator of Ruby on Rails tweeted angrily at Apple on November 7th that it was discriminating unfairly against his wife, and that he wasn’t able to get a response.

By the next day he had a response, and he was even more unhappy. “THE ALGORITHM”, described in terms reminiscent of Kafka’s 1915 novel “The Trial”, became the focus of his complaint:

She spoke to two Apple reps. Both very nice, courteous people representing an utterly broken and reprehensible system. The first person was like “I don’t know why, but I swear we’re not discriminating, IT’S JUST THE ALGORITHM”. I shit you not. “IT’S JUST THE ALGORITHM!”. […] So nobody understands THE ALGORITHM. Nobody has the power to examine or check THE ALGORITHM. Yet everyone we’ve talked to from both Apple and GS are SO SURE that THE ALGORITHM isn’t biased and discriminating in any way. That’s some grade-A management of cognitive dissonance.

The following day he appealed to regulators for a transparency law:

It should be the law that credit assessments produce an accessible dossier detailing the inputs into the algorithm, provide a fair chance to correct faulty inputs, and explain plainly why difference apply. We need transparency and fairness. What do you think @ewarren?

Transparency is a reasonable request. Another reasonable request in the thread was evidence of diversity within the team that developed the AppleCard product. These solutions are neither hard nor hidden.

What algorithms are doing, time and again, is accelerating and spreading historic wrongs. The question is fast becoming whether technology companies are prepared for centuries of social debt, in the form of discrimination against women and minorities, when “THE ALGORITHM” exposes the political science of inequality and links it to them.

Woz, a co-founder of Apple, correctly states that only the government can correct these imbalances. Companies are too powerful for any individual to keep the market functioning with any degree of fairness.

Take, for example, the German government’s “Datenethikkommission” (Data Ethics Commission) report on regulating AI, which was just released.

The woman named in the original tweet also correctly states that her privileged status, which got her own account corrected, is no guarantee of a fair social system for anyone else:

I care about justice for all. It’s why, when the AppleCard manager told me she was aware of David’s tweets and that my credit limit would be raised to meet his, without any real explanation, I felt the weight and guilt of my ridiculous privilege. So many women (and men) have responded to David’s twitter thread with their own stories of credit injustices. This is not merely a story about sexism and credit algorithm blackboxes, but about how rich people nearly always get their way. Justice for another rich white woman is not justice at all.

Again, these are not revolutionary concepts. We’re seeing the impact of a disconnect between history, the social science of resource management, and the application of technology. Fixing technology means applying social science theory in the context of history. Transparency and diversity work only when applied in that manner.

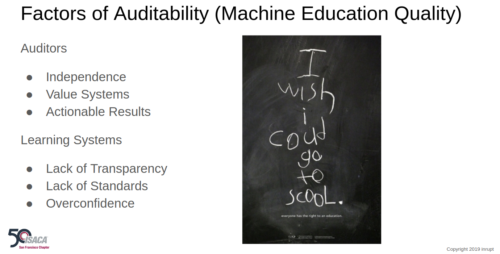

I concluded my recent presentation to auditors at the annual ISACA-SF conference with a list and several examples of how AI auditing can be most effective.

One of the problems we’re going to run into when auditing Apple products for transparency is that Apple has long waged a war against any transparency in technology, from denying our right to repair hardware to forcing us to buy software through its own “store”.

Apple’s subtle, anti-competitive practices don’t look terrible in isolation, but together they form a clear strategy.

The closed-minded Apple business model is also dangerous because it directly inspires others to repeat its mistakes.

Honeywell, for example, now speaks of “taking over your building’s brains” by emulating how Apple shuts down freedom:

A good analogy I give to our customers is, what we used to do [with industrial technology] was like a Nokia phone. It was a phone. Supposed to talk. Or you can do text. That’s all our systems are. They’re supposed to do energy management. They do it. They’re supposed to protect against fire. They do it. Right? Now our systems are more like Apple. It’s a platform. You can load any app. It works. But you can also talk, and you can also text. But you can also listen to the music. Possibilities emerge based upon what you want.

That closing concept of possibilities can be a very dangerous prospect if “what you want” comes from a privileged position of power with no accountability. In other words, do you want to live in a building run by a criminal brain?

When an African American showed up to rent an apartment owned by a young real-estate scion named Donald Trump and his family, the building superintendent did what he claimed he’d been told to do. He allegedly attached a separate sheet of paper to the application, marked with the letter “C.” “C” for “Colored.” According to the Department of Justice, that was the crude code that ensured the rental would be denied.

Somehow THE ALGORITHM in that case ended up in the White House. And let us not forget that building was given such a peculiar name by Americans trying to appease white supremacists and stop blacks from entering even as guests of the President.

…Mississippi senator suggesting that after the dinner [allowing a black man to attend] the Executive Mansion was “so saturated with the odour of the nigger that the rats have taken refuge in the stable”. […] Roosevelt’s staff went into damage control, first denying the dinner had taken place and later pretending it was actually a quick bite over lunch, at which no women were in attendance.

A recent commentary about fixing closed minds, closed markets, and bias within the technology industry perhaps explained it best:

The burden to fix this is upon white people in the tech industry. It is incumbent on the white women in the “women in tech” movement to course correct, because people who occupy less than 1% of executive positions cannot be expected to change the direction of the ship. The white women involved need to recognize when their narrative is the dominant voice and dismantle it. It is incumbent on white women to recognize when they have a seat at the table (even if they are the only woman at the table) and use it to make change. And we need to stop praising one another—and of course, white men—for taking small steps towards a journey of “wokeness” and instead push one another to do more.

Those sailing the ship need to correct its course. We shouldn’t expect people outside the wheelhouse to drive the necessary changes. The exception is the governing body that licenses ship captains and can thus hold them accountable for acting like an AppleCard.