A San Bruno police officer pulls over a Waymo robotaxi during a DUI checkpoint. The vehicle has just made an illegal U-turn—seemingly fleeing law enforcement. The officer peers into the driver’s seat and finds it empty. He contacts Waymo’s remote operators. They chat. The Waymo drives away.

No citation issued.

The police department’s social media post jokes:

Our citation books don’t have a box for “robot.”

But there’s nothing funny about what just happened, because… history. We are now witnessing the rebirth of corporate immunity for murder, vehicular violence at scale.

In Phoenix, a Waymo drives into oncoming traffic, runs a red light, and “FREAKS OUT” before pulling over. Police dispatch notes:

UNABLE TO ISSUE CITATION TO COMPUTER.

In San Francisco, a cyclist is “doored” by a Waymo passenger exiting into a bike lane. She’s thrown into the air and slams into a second Waymo that has also pulled into the bike lane. Brain and spine injuries. The passengers leave. There’s a “gap in accountability” because no driver remains at the scene.

In Los Angeles, multiple Waymos obsessively return to park in front of the same family’s house for hours, like stalkers. Different vehicles, same two spots, always on their property line. “The Waymo is home!” their 10-year-old daughter announces.

In a parking lot, Waymos gather and honk at each other all night, waking residents at 4am. One resident reports being woken “more times in two weeks than combined over 20 years.”

A Waymo gets stuck in a roundabout and does 37 continuous laps.

Another traps a passenger inside, driving him in circles while he begs customer service to stop the car. “I can’t get out. Has this been hacked?”

Two empty Waymos crash into each other in a Phoenix airport parking lot in broad daylight.

And now, starting July 2026, California will allow police to issue “notices of noncompliance” to autonomous vehicle companies. But here’s the catch: the law doesn’t specify what happens when a company receives these notices. No penalties. No enforcement mechanism. No accountability.

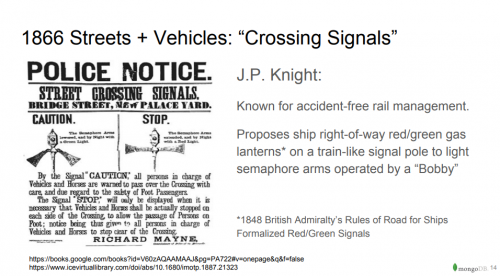

In 1866, London police posted notices about traffic lights with two modes:

CAUTION: “all persons in charge of vehicles and horses are warned to pass the crossing with care, and due regard for the safety of foot passengers”

STOP: “vehicles and horses shall be stopped on each side of the crossing to allow passage of persons on foot”

The street lights were designed explicitly to stop vehicles for pedestrian safety. This was the foundational principle of traffic regulation.

Then American car manufacturers inverted it completely.

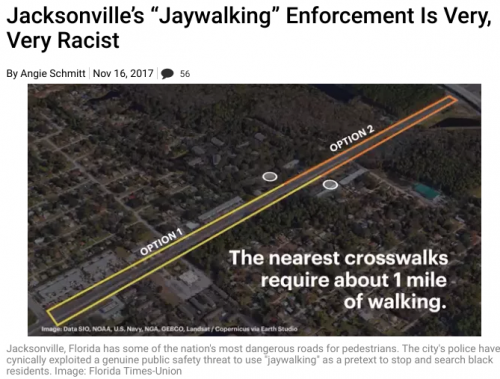

They invented “jaywalking”—a slur using “jay” (meaning rural fool or clown) to shame lower-class people for walking. They staged propaganda campaigns where clowns were repeatedly rammed by cars in public displays. They lobbied police to publicly humiliate pedestrians. They successfully privatized public streets, subordinating human life to vehicle flow.

The racist enforcement was immediate and deliberate. In Ferguson, 95% of arrests for fantasy crimes (let alone victims of vehicular homicide) were of Black people, as these laws always intended.

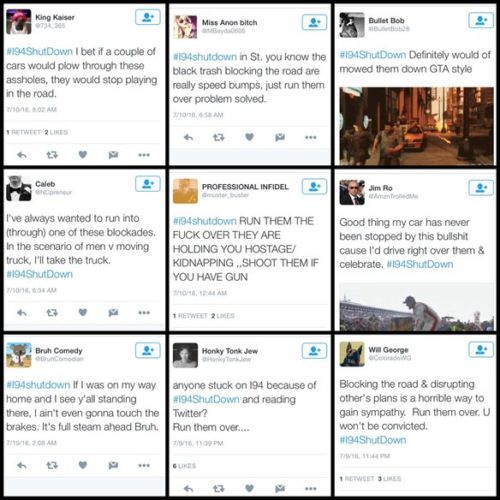

In 2017, a North Dakota legislator proposed giving drivers zero liability for killing pedestrians “obstructing traffic.” Months later, a white nationalist in Charlottesville murdered a woman with his car, claiming she was “obstructing” him.

Now we’re doing it again—but this time the vehicles have no drivers to cite, and the corporations claim they’re not “drivers” either.

Corporations ARE legal persons when it benefits them:

- First Amendment rights (Citizens United)

- Religious freedom claims

- Contract enforcement

- Property ownership

But corporations are NOT persons when it harms them:

- Can’t be cited for traffic violations

- No criminal liability for vehicle actions

- No “driver” present to hold accountable

- Software “bugs” treated as acts of God

This selective personhood is the perfect shield. When a Waymo breaks the law, nobody is responsible. When a Waymo injures someone, there’s a “gap in accountability.” When police try to enforce traffic laws, they’re told their “citation books don’t have a box for ‘robot.’”

Here’s what’s actually happening: Every time police encounter a Waymo violation, they’re documenting a software flaw that potentially affects the entire fleet.

When one Waymo illegally U-turns, thousands might have that flaw. When one Waymo can’t navigate a roundabout, thousands might get stuck. When one Waymo’s “Safe Exit system” doors a cyclist, thousands might injure people. When Waymos gather and honk, it’s a fleet-wide programming error.

These aren’t individual traffic violations. They’re bug reports for a commercial product deployed on public roads without adequate testing.

But unlike actual bug bounty programs where companies pay for vulnerability reports, police document dangerous behaviors and get… nothing. No enforcement power. No guarantee of fixes. No way to verify patches work. No accountability if the company ignores the problem.

The police are essentially providing free safety QA testing for a trillion-dollar corporation that has no legal obligation to act on their findings despite mounting deaths.

We’ve seen this exact playbook before.

From 2007 to 2014, Baghdad had over 1,000 checkpoints where Palantir’s algorithms flagged Iraqis as suspicious based on the color of their hat or the car they drove. U.S. Military Intelligence officers said:

If you doubt Palantir, you’re probably right.

The system was so broken that Iraqis carried fake IDs and learned religious songs not their own just to survive daily commutes. Communities faced years of algorithmic targeting and harassment. Then ISIS emerged in 2014—recruiting heavily from the very populations that had endured years of being falsely flagged as threats.

Palantir’s revenue grew from $250 million to $1.5 billion during this period. A for-profit terror generation engine or “self licking ISIS-cream cone” as I’ve explained before.

The critical question military commanders asked:

Who has control over Palantir’s deadly “Life Save or End” buttons?

The answer: Not the civilians whose lives were being destroyed by false targeting.

Who controls the “Life Save or End” button when a Waymo encounters a cyclist? A pedestrian? Another vehicle?

- Not the victims

- Not the police (can’t cite, can’t compel fixes)

- Not democratic oversight (internal company decisions)

- Not regulatory agencies (toothless “notices”)

Only the corporation. Behind closed doors. With no legal obligation to explain their choices.

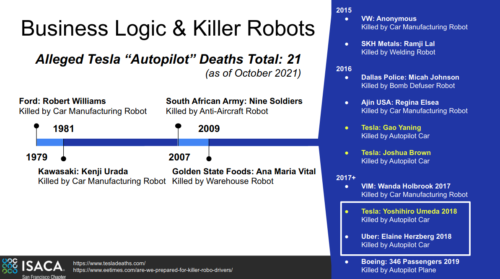

When a Tesla, Waymo or Palantir algorithmic agent of death “veers” into a bike lane, who decided that was acceptable risk? When it illegally stops in a bike lane and doors a cyclist, causing brain injury, who decided that “Safe Exit system” was ready for deployment? When it drives into oncoming traffic, who approved that routing algorithm?

We don’t know. We can’t know. The code is proprietary. The decision-making is opaque. And the law says we can’t hold anyone accountable.

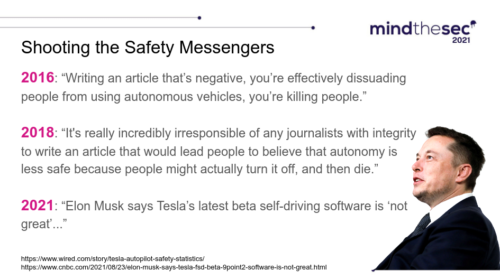

In 2016, Elon Musk promised loudly Tesla would end all cyclist deaths and publicly abused and mocked anyone who challenged him. Then Tesla vehicles repeatedly kept “veering” into bike lanes and in 2018 accelerated into and killed a man standing next to his bike.

Similarly in 2017, an ISIS-affiliated terrorist drove a truck down the Hudson River Bike Path, killing eight people. Federal investigators linked the terrorist to networks that Palantir’s algorithms had helped radicalize in Iraq. For some reason they didn’t link him to the white supremacist Twitter campaigns demanding pedestrians and cyclists be run over and killed.

Since then, Tesla “Autopilot” incidents involving cyclists have become epidemic. In Brooklyn, a Tesla traveling 50+ mph killed cyclist Allie Huggins in a hit-and-run. Days later, NYPD responded by ticketing cyclists in bike lanes.

This is the racist jaywalking playbook digitized: Police enforce against the vulnerable population, normalizing their elimination from public space—and training AI systems to see cyclists as violators to be punished with death rather than victims.

Musk now stockpiles what some call “Swasticars”—remotely controllable vehicles deployed in major cities, capable of receiving over-the-air updates that could alter their behavior fleet-wide, overnight, with zero public oversight.

If we don’t act, we’re building the legal infrastructure for algorithmic vehicular homicide with corporate immunity. Here’s what must happen:

Fleet-Wide Corporate Liability

When one autonomous vehicle commits a traffic violation due to software, the citation goes to the corporation multiplied by fleet size. If 1,000 vehicles have the dangerous flaw, that’s 1,000 citations at escalating penalty rates.
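As a rough sketch of that multiplication, here is how a fleet-wide penalty could be computed. The base fine and the repeat-occurrence multiplier are hypothetical values chosen for illustration; neither comes from any statute, and "escalating" is interpreted here as the per-citation fine growing each time the same flaw is documented again.

```python
def fleet_penalty(fleet_size: int, base_fine: float,
                  occurrence: int = 1, multiplier: float = 2.0) -> float:
    """Penalty for one software flaw, cited once per vehicle in the fleet.

    All parameters are illustrative assumptions, not figures from any law:
    base_fine is the fine for a single citation, and multiplier is how much
    the per-citation fine grows each time the same flaw is documented again.
    """
    if fleet_size < 0 or occurrence < 1:
        raise ValueError("fleet_size must be >= 0 and occurrence >= 1")
    per_citation = base_fine * multiplier ** (occurrence - 1)
    return fleet_size * per_citation


# One flaw, 1,000 vehicles, hypothetical $100 base fine.
print(fleet_penalty(1000, 100.0))                # first documented occurrence
print(fleet_penalty(1000, 100.0, occurrence=2))  # same flaw documented again
```

The point of the design is that the penalty scales with how many vehicles carry the flaw, not with how many times police happened to catch it.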

Dangerous violations (driving into oncoming traffic, hitting pedestrians/cyclists, reckless driving) trigger:

- Mandatory fleet grounding until fix is verified by independent auditors

- Public disclosure of the flaw and the fix

- Criminal liability for executives if patterns show willful negligence

Public Bug Bounty System

Every police encounter with an autonomous vehicle violation must:

- Trigger mandatory investigation within 48 hours

- Be logged in a public federal database

- Require company response explaining root cause and fix

- Include independent verification that fix works

- Result in financial penalties paid to police departments for their QA work

If companies fail to fix documented patterns within 90 days, their permits are suspended until compliance.
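The two deadlines above (48 hours to open an investigation, 90 days to fix) could be tracked with a record like the one below. This is a minimal sketch: the `ViolationReport` class and its field names are hypothetical, not part of any existing database or law.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

INVESTIGATION_WINDOW = timedelta(hours=48)  # deadline to open an investigation
FIX_WINDOW = timedelta(days=90)             # deadline for a verified fix


@dataclass
class ViolationReport:
    """Hypothetical record for one police-documented violation."""
    reported_at: datetime
    description: str
    fix_verified_at: Optional[datetime] = None  # set by an independent auditor

    @property
    def investigation_due(self) -> datetime:
        return self.reported_at + INVESTIGATION_WINDOW

    @property
    def fix_due(self) -> datetime:
        return self.reported_at + FIX_WINDOW

    def permit_suspended(self, now: datetime) -> bool:
        """True while the 90-day window has passed without a verified fix."""
        verified = self.fix_verified_at is not None and self.fix_verified_at <= now
        return now > self.fix_due and not verified


report = ViolationReport(datetime(2026, 7, 1, 12, 0), "illegal U-turn")
print(report.investigation_due)                        # 48 hours after the report
print(report.permit_suspended(datetime(2026, 10, 1)))  # past 90 days, no fix
```

Suspension ends as soon as a verified fix is recorded, which matches "suspended until compliance."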

Restore the 1866 Principle

Traffic rules exist to stop vehicles for public safety, not to give vehicles—or their corporate owners—immunity from accountability.

The law must state explicitly:

- Corporations deploying autonomous vehicles are legally responsible for those vehicles’ actions

- “No human driver” is not a defense against criminal or civil liability

- Code must be auditable by regulators and available for discovery in injury cases

- Vehicles that cannot safely stop for pedestrians/cyclists cannot be deployed

- Human life takes precedence over vehicle throughput, period

When Waymo’s algorithms decide who lives and who gets “veered” (algorithmic death), who controls that button?

When Tesla’s systems target cyclists while police ticket the victims, who controls that button?

When corporations claim they’re persons for speech rights but not persons for traffic crimes, who controls that button?

Right now, the answer is: Nobody we elected. Nobody we can hold accountable. Nobody who faces consequences for being wrong.

Car manufacturers spent the extremist “America First” 1920s inventing the racist “jaywalking” crime to privatize public streets and criminalize pedestrians. It worked so well that by 2017, who really blinked when a North Dakota legislator could propose zero liability for drivers who kill people with cars? By 2021, Orange County deputies shot a Black man to death while arguing whether he had simply walked on a road outside painted lines.

Now we’re handing that same power to algorithms—except this time there’s no driver to arrest, no corporation to cite, and no legal framework to stop fleet-wide deployment of dangerous systems.

Palantir taught us what happens when unaccountable algorithms target populations: you create the enemies you claim to fight, and profit from the violence.

Are we really going to let that same model loose on American streets?

Because when police say “our citation books don’t have a box for robot,” what they’re really saying is: We’ve lost the power to protect you from corporate violence.

That’s not a joke. That’s murder by legal design.

The evidence is clear. The pattern is documented. The choice is ours: Restore accountability now, or watch autonomous vehicles follow the exact playbook by elites that turned jaywalking into a tool of intentional racist violence and Palantir checkpoints into an ISIS recruiting campaign to justify white nationalism. See the problem and the connection between them?

Who controls the button? Right now, nobody you can vote for, sue, or arrest. That has to change.

Here’s how William Blake warned us of algorithmic dangers way back in 1794. His “London” poem basically indicts institutions of church and palace for being complicit in producing systemic widespread suffering:

I wander thro’ each charter’d street,

Near where the charter’d Thames does flow,

And mark in every face I meet

Marks of weakness, marks of woe.

In every cry of every Man,

In every Infants cry of fear,

In every voice: in every ban,

The mind-forg’d manacles I hear

Those “mind-forg’d manacles” mean algorithmic oppression by systems of control, which appear external but are human-created. A “charter’d street” was privatized public space, precedent for using power to enforce status-based criminality, such as Palantir checkpoints and jaywalking laws.

I used to read magazines and journals, even a newspaper, but I have to say I find blogs now to be some of the best reporting anywhere. You are punching 1000X above weight here.

Driverless isn’t innovation when it’s the old corporate playbook for legalized violence being algorithmically scaled. Remember how dock workers shut down Mussolini’s networks in 1936 after the violence he had unleashed?

This article neglects to mention that Waymos are ALREADY far safer than human drivers, particularly when including people driving while impaired by alcohol, drugs, or simply sleepiness. And unlike humans, every year the computer-driven car fleet becomes better at driving.

@Mark

Why would I lie? Your bogus safety claim is an obvious logical contradiction that will get even more people killed:

If autonomous vehicles are safer than human drivers, manufacturers would welcome traffic citations as proof. Each violation-free mile would demonstrate superiority. Fines would be minimal, and the safety record would justify expansion.

Instead, manufacturers lobbied for laws that prevent citations entirely. This reveals their actual expectation: that independent measurement would damage their safety claims.

Consider what citations create for human drivers:

Autonomous vehicle manufacturers eliminated all four. Not because violations are rare, but because documentation is dangerous to their business model.

The California law allowing “notices of noncompliance” specifies zero penalties. If violations were rare, penalties would be irrelevant. Their absence indicates expectation of frequent violations—possibly far more than human drivers.

You claim the fleet “becomes better” annually. Without mandatory violation reporting, independent verification, or penalty enforcement, this claim is unfalsifiable. It works both ways: I can claim every year the fleet gets worse, and what data proves me wrong?

We’re forced to accept corporate self-assessment as fact. But safe products don’t require immunity from accountability. They demonstrate safety through transparent measurements. Every CISO knows this. A CTO without external assessment is going to have systems absolutely riddled with hidden bugs.

The legal architecture manufacturers created—citation immunity, no penalties, proprietary code, voluntary reporting—serves one purpose: enabling murder by preventing independent assessment of actual safety performance.

Mark, you are smoking Alphabet soup. In Arizona, when Joel Caspers was rear-ended by a Waymo in 2021 that totaled his car, the investigating officer wrote: “At this time, [Waymo] was not issued a citation since the Waymo was in autonomous mode and no person was inside the vehicle at the time of the crash”. That’s how they claim to be “better”.

It’s mainly evidence of American business elitists being hyper political, and not about tech at all, as they totally cook transit books. Enron taught us all of this before.

Out of 71 police reports from Arizona cities, police blamed Waymo vehicles in over 10% of crashes and NONE resulted in citations.

All the fault, none of the accountability.

No. The difference between me and y’all is that I’ve actually taken the time to go beyond anecdotes, and read the results of studies that evaluate safety averaged over tens of millions of miles driven.

Again, on average, Waymo is ALREADY far safer than human drivers. And unlike human drivers, Waymo’s safety continues to improve, with improved hardware and software.

Try doing a search for “Swiss Re studies of Waymo safety versus human drivers.”

Waymo says fault is a “legal determination” they can’t share… but they happily cite the Swiss Re insurance data showing reduced liability claims (which IS a fault determination, just one that makes them look good). Can’t have both.

Why happily cite insurance studies proving they WEREN’T at fault, if they say nobody should share data proving they WERE at fault??? When police reports show they WERE at fault, like all the time in Arizona, suddenly those “legal determinations” don’t make it to their safety dashboard. And even when at fault, they get zero citations thanks to legal loopholes.

Transparency only when it helps them and full opacity when it doesn’t.

There are nearly 250 rear-end collisions in their data releases, and they are hiding fault on all of it. Why? Maybe it would reveal a serious problem.

Let’s be honest. What human would have 589 parking tickets and claim to be superior to other humans at driving? LOL.

Hahaha, Skilling nailed it. Mark sounds even more ridiculous. Get it? Skilling? Enron?! Let’s have a look:

Being “better than the average” is some bullshit stat games. Safety is only about response to failure. Not averages.

Enron had great numbers, did way better than average, and also zero transparency. Just like Waymo.

“Good numbers” without accountability isn’t going to save lives. Theranos had great numbers too.

@Mario

You raised an important point I had to think about. It’s literally the focus of both my degrees, the very sobering reality of how authoritarian regimes destroy domestic opposition, making international solidarity the only feasible response.

Mussolini used ICE-like militias (Blackshirts) for a decade to murder and disrupt powerful non-governmental groups (religion and labor) within Italy. Independence of Italians had been destroyed and replaced with fascist-controlled “corporations” dominating every aspect of life. There were only corporate voices, by design, run top-down. Speak up and lose everything including your life. That is why anyone with the freedom of thought to refuse to ship something Italian during the 1936 illegal and brutal Ethiopian invasion (e.g. delivering chemical weapons) had to work from Britain, France, the US, etc.

“Hahaha, Skilling nailed it.”

His argument was specious nonsense. Waymo got 589 parking tickets in SF operating a fleet of 300 vehicles, or about 2 parking tickets per vehicle for the year. In that same year, SF issued about 1.5 million tickets, on a fleet of about 850,000 vehicles. That’s approximately…2 tickets per vehicle.

Not that parking tickets generally have much to do with safety, anyway. (On a matter more related to safety, why didn’t he compare speeding tickets for Waymo cars versus humans, for example?) (That’s a rhetorical question…)
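The per-vehicle comparison above can be checked with a few lines of arithmetic. The ticket and fleet counts are the figures quoted in the comment, taken as given and not independently verified here.

```python
def tickets_per_vehicle(tickets: int, vehicles: int) -> float:
    """Average number of parking tickets per vehicle over the period."""
    return tickets / vehicles


# Figures as quoted in the comment above (not independently verified).
waymo_rate = tickets_per_vehicle(589, 300)
sf_rate = tickets_per_vehicle(1_500_000, 850_000)

print(f"Waymo: {waymo_rate:.2f} tickets per vehicle")  # Waymo: 1.96 tickets per vehicle
print(f"SF:    {sf_rate:.2f} tickets per vehicle")     # SF:    1.76 tickets per vehicle
```

Both figures round to about 2, as the comment says, though on these numbers the Waymo rate comes out slightly higher than the citywide one.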

@Mark

WOW thanks for the perfect example. Parking tickets—the ONE thing that CAN be enforced equally on robots—show equal rates.

Obviously we should assume the same behavior could be true for the violations that CAN’T be enforced: speeding, red lights, illegal turns, reckless driving. It’s a plausible hypothesis to test.

13% of crashes police determined were Waymo’s fault. Zero citations. Turn on the citations and the number could explode. Your parking ticket data actually proves robots follow rules only when there are consequences. So they are likely even worse than humans with moving violations, as they aren’t being enforced, and learning to continue getting worse because unaccountable.

You literally just made the Enron argument:

“Look at this ONE metric that’s normal!” while ignoring the critical accountability mechanisms that actually matter.

Waymo reporting is completely broken. Enron = Jail. Theranos = Jail. FTX = Jail. Waymo = still cooking data.

MARK: Waymo office supply expenses are perfectly normal!

INVESTIGATOR: What about these billions in hidden partnerships?

MARK: HAVE YOU SEEN THE STAPLER INVENTORY?

I’m not a fan of Davi, I’ll admit. He brings history into everything and uncomfortably so. I would rather not have to read about Iraq war when discussing Waymo.

That aside, Mark is fundamentally wrong and presents a view into why Americans are so bad at science. It’s mostly fraud in Big Tech, covered up by billionaires who pay hush money to keep failing products in the market.

Where Mark is Wrong:

He brings up Swiss Re studies yet doesn’t understand they don’t address accountability: Being “safer on average” doesn’t eliminate enforcement mechanisms. The opposite is true because averages are unsafe. Human drivers are held accountable when most of them don’t cause crashes. How obvious can this point be, where Mark seems totally blind?

The parking ticket comparison backfires spectacularly on Mark (further blindness): As Davi points out, this actually proves that when enforcement EXISTS, Waymo’s behavior matches humans. And when you think about it parking rules are so simple, basic math, if Waymo fails at the rate of humans we should expect moving violations to be the same or even worse. Reading a sign is entry-level AI where reading an intersection is next level, but should be prohibited the moment ANY Waymo gets a parking ticket. One parking ticket should ground the entire fleet until that bug is fixed.

The problem is enforcement DOESN’T exist for moving violations. That’s a huge tragedy waiting to happen. I’m not talking about domestic terrorism as I think that’s inflammatory. But Davi is right about the science.

“Improving over time” requires verification: Without independent measurement and mandatory violation reporting, this claim is unfalsifiable corporate marketing like Enron.

Waymo is spending more money on marketing than competitors, that is clear. And while Tesla criminally corrupts the data, Waymo more elegantly poisons it. In any case, driverless is a scam thus far, and America is littered with scams like this at massive scale that hurt the public while the billionaires fly around with Epstein.

@He Man

Point taken. Don’t study history, it condemns you to watch people repeat the worst parts.

When American companies say “we’re safer than…” but refuse independent verification, prevent enforcement, hide fault data, and lobby against accountability—these are HISTORIC red flags, not any proof of safety.

American innovation eras in the past have been characterized like this:

– Falsifiable claims

– Independent verification

– Transparent methodology

– Adversarial review

Waymo’s mistakes:

– Unfalsifiable claims (proprietary data)

– Self-verification only

– Opaque methodology

– Regulatory capture instead of review

Any real CSO worth their salt would go to the CFO and shut down this nonsense immediately. No proper safety gates should mean no product tests in public, let alone launches to market. Uncritical acceptance of engineering claims enables fraud.

Mark is arguing that planes crash less often than cars so we don’t need to investigate the Boeing 737 tragedies.

Let me break down why Waymo marketing of averages doesn’t work for safety:

– If 999 Waymos drive perfectly but 1 has a fatal bug, the “average” looks great

– That 1 bug potentially exists in ALL 1,000 vehicles (fleet-wide software flaw)

– Now 1,000 vehicles have a deadly dormant bug, ignored until it potentially kills thousands of people

– Human driver crashes are isolated incidents; autonomous vehicle bugs are documented systemic risks demanding the opposite of negligence
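The list above can be put in numbers with a small sketch. It assumes, as the comment does, that every vehicle in the fleet runs the same software, so one observed failure implies the whole fleet carries the flaw. The function names are made up for illustration.

```python
def observed_failure_share(failed: int, fleet_size: int) -> float:
    """Share of vehicles that have actually shown the failure so far."""
    return failed / fleet_size


def vehicles_carrying_flaw(failed: int, fleet_size: int,
                           shared_software: bool) -> int:
    """Vehicles that could hit the same failure.

    Assumption: with shared software, one observed failure means every
    vehicle carries the flaw; without it, failures are treated as isolated.
    """
    if failed == 0:
        return 0
    return fleet_size if shared_software else failed


fleet = 1000
print(observed_failure_share(1, fleet))                         # the "average" view
print(vehicles_carrying_flaw(1, fleet, shared_software=True))   # the fleet-wide view
print(vehicles_carrying_flaw(1, fleet, shared_software=False))  # isolated, human-style
```

The first number is what an averages-based safety claim reports; the second is the exposure that a fleet-wide fix has to address.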

This is why aviation, medical devices, and nuclear plants don’t use “better than average” as their safety standard. It’s also why an American obsession with serving only Wall Street is so dangerous when it tries to eliminate basic safety regulations. Those regulations are the mother of innovation, forcing real engineering, preventing stock fraud.

A proper safety framework that would produce genuine innovation looks like this:

– Identify failure modes

– Mandate reporting of ALL failures

– Require fixes before continued operation

– Independent verification of fixes

Waymo fails across the board, but even worse they have adherents like Mark who still advocate for their failures even when a huge increase in fatalities is coming like we have seen in HISTORY.

I wish people who claim to understand a study would actually read it thoroughly.

Swiss Re? The authors very clearly warned:

The human baseline is artificially LOW? That’s a worry.

Comparing total Waymo collisions (9.5/M miles) to human liability-only events (4.1/M miles) is acknowledged to be an apples-to-bananas comparison.

Waymo obviously should have been compared to other ride-hailing drivers (Uber, Lyft), not private passenger vehicles. Commercial drivers have higher crash rates, for example, so it’s very, very odd to ignore more attractive and more accurate analysis.

Waymo’s total collision involvement (9.5) is also actually higher than the human liability baseline (4.1). So Waymo was involved in MORE total incidents and found liable less often, which supports Davi’s whole point that the system of Waymo liability determination is crooked.

Let me be as clear as possible: If Waymo were genuinely safer, total collision involvement should be lower. Instead, Waymo collision involvement is higher yet liability is lower. This pattern suggests systematic bias in the liability determination, NOT superior safety.

Just 2 bodily injury claims out of 241 collisions (0.8%)? That smells obviously wrong in the liability determination. Red flag.
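For reference, the two figures in dispute work out as follows. The inputs are the numbers quoted in this comment, taken as given. Note that the two rates count different things (all collisions versus liability claims only), so the ratio is a sketch of the comparison being argued over, not a like-for-like safety measure.

```python
def rate_ratio(rate_a: float, rate_b: float) -> float:
    """How many times larger rate_a is than rate_b."""
    return rate_a / rate_b


def share(part: int, total: int) -> float:
    """Fraction of total represented by part."""
    return part / total


# Figures as quoted in the comment above (per million miles).
waymo_total_collisions = 9.5   # all collisions Waymo was involved in
human_liability_claims = 4.1   # liability claims only, human baseline

print(f"{rate_ratio(waymo_total_collisions, human_liability_claims):.2f}x")  # 2.32x
print(f"{share(2, 241):.1%}")                                                # 0.8%
```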

And perhaps most of all, the authors admit “claims not applicable to private passenger risk exposure, mainly claims not related to contact events, were removed from analysis.” This is exactly the kind of data filtering Davi raised as problematic.

Events with no contact would include near misses, when a vehicle swerves to avoid, crashes and kills everyone in a ball of fire. Not included. Or worse, human victims are blamed for a Waymo causing them to crash. Now expand that fleet-wide and we have a huge problem, Houston.

The authors state clearly they used a data set curated and pruned, to the point it isn’t a useful comparison with humans.

“I wish people who claim to understand a study would actually read it thoroughly.”

Good grief! Are you writing a note to yourself? YOU’RE the one who doesn’t have a clue about what the study means!

You quote the study:

“If a commercial ride-hailing driving benchmark were used, it is expected to yield a higher frequency than the personal liability claims benchmark… Because of the differences in personal liability and commercial liability claims generation, the current benchmark based on personal liability claims is considered to underrepresent a commercial liability benchmark.”

And then you come to the nonsensical and 180-degrees-wrong conclusion:

“The human baseline is artificially LOW? That’s a worry.”

No, it’s only a worry to clueless people who didn’t even bother to read the entire paragraph before posting foolish comments.

The entire paragraph reads (emphasis added):

“Because of the differences in personal liability and commercial liability claims generation, the current benchmark based on personal liability claims is considered to underrepresent a commercial liability benchmark (a personal liability benchmark is believed to represent a lower collision frequency than a commercial liability benchmark) AND IS THEREFORE REPRESENTING A CONSERVATIVE ASSESSMENT OF THE WAYMO ADS SAFETY PERFORMANCE.”

So, no, that the human baseline is artificially low is not “a worry” to someone pointing out that data show that Waymo is much safer than the average of the human driving fleet.

@Mark

You’re embarrassing yourself, so let me try to help you climb out of the hole you keep digging deeper before you are buried down there.

You clearly don’t understand the methodological critique. The problem isn’t what that sentence says – it’s what using a “conservative assessment” reveals about the study’s validity.

This is basic logic. The authors admit:

— Commercial ride-hailing benchmark would be MORE appropriate (Waymo IS a commercial service)

— Commercial drivers have HIGHER crash rates than private drivers

Therefore comparing to private drivers is “conservative” (makes Waymo look less impressive than it should). If you think this helps your argument, you’re completely upside down. It destroys it.

If using the proper benchmark would make Waymo look BETTER, why didn’t they use it? They admit using the WRONG benchmark and explicitly state commercial is more appropriate. Using private drivers is like testing a race car against donkeys.

Why, Mark, why? If the more accurate comparison would look better, why avoid it? It undermines the study’s validity to see “used the wrong benchmark on purpose”. That is not a defense of scientific rigor.

But you STILL won’t address the actual problem:

— Waymo total collisions: 9.5 per million miles

— Human liability baseline: 4.1 per million miles

— Waymo is involved in MORE total incidents but found liable LESS often.

How can a vehicle that’s involved in MORE incidents (9.5 vs 4.1) be “much safer”?

Is your argument seriously: “Yes, Waymo crashes more often, but it’s not their fault”? Because that’s exactly the corporate liability shield you are being warned about.

You keep proving the point by not understanding the shield.

Answer the specific question: How is Waymo causes “more collisions” = Waymo is “much safer”?

“Why, Mark, why?”

Good grief! Can’t you answer that question yourself?

If you were a researcher trying to answer the question, “Is Waymo safer than human drivers?” would you choose an incredibly small subset of the very safest human drivers to represent the entire population of human drivers?

Don’t you see that if you did that, you’d be answering a totally different question? That you’d be answering the question, “Is Waymo safer than an incredibly small subset of the safest human drivers?”

@Mark

You’ve gone backwards again.

Commercial ride-hailing drivers are LESS safe than private drivers, not more safe.

The study explicitly states commercial drivers have “higher frequency” crashes. Higher frequency = MORE crashes = LESS safe.

Your argument is literally:

“Comparing Waymo to their competition would be unfair to Waymo”

That’s insane. It’s like running a few blocks the same day as the marathon and declaring yourself the winner, demanding you can’t be compared to the people running the full distance.

If Waymo is replacing Uber/Lyft, we need to know if safety improves when commercial drivers are replaced. Not whether Waymo is safer than grandma’s 1989 Mustang that never leaves the garage.

You’re defending Waymo avoiding an accurate comparison, being in actual competition, because it’s “too hard.”

And for the FIFTH time:

Waymo total collisions: 9.5/M miles

Human liability claims: 4.1/M miles

How is “more collisions” = “safer”?

The math, Mark. The math.

“Your argument is literally:

‘Comparing Waymo to their competition would be unfair to Waymo’”

You’re obviously having trouble reading my comments. If you’ve ever done research, you’ll know that a researcher starts out with a question. In this case, the research question was NOT, “Is Waymo safer than Uber and Lyft?”

It was, “Is Waymo safer than human drivers?”

In order to assess the answer to that question, the most appropriate thing to do is to evaluate Waymo against the entire set of human drivers, not a very small subset of human drivers consisting of Uber and Lyft drivers (who are in a limited age range, are far less likely to be driving while intoxicated, are likely to be very experienced drivers, etc.).

“Answer the specific question: How is Waymo causes ‘more collisions’ = Waymo is ‘much safer’?”

Your question indicates you know essentially nothing about the motor vehicle laws in the United States. If Car B (human driver) rearends the back of Car A (Waymo vehicle), it is virtually certain that Car A (Waymo) has not “caused” the accident. The driver that rearends another driver is (virtually) ALWAYS at fault. So the car that is rearended does not “cause” the accident. The car that does the rearending is the car that “caused” the accident.

@Mark

Ok, now you have me looking at the logs to see where in the world you are from, because you proved yourself wrong again. I’m guessing America, given you don’t seem to understand engineering or regulations. Amiright @He Man?

Well, no. It does cause accidents. I hope I’m not the first to introduce you to the NHTSA, who recalled 362,000 Teslas for “phantom braking”. Sudden stops caused following vehicles to rear-end them, and the rear-ended car had to be recalled.

Under your logic, Tesla “didn’t cause” those crashes because they got rear-ended. NHTSA shows how your claims are so dangerously wrong. Robot software bugs CAUSED many crashes by stopping in front of humans.

This example is exactly why we need citations and strict enforcement in Waymo crashes: Document patterns. Identify fleet-wide bugs. Force recalls before more people get hurt.

Waymo has very unnecessary and very dangerous legal immunity from this simple level of oversight.

Waymo: 9.5 incidents/M miles

Humans: 4.1 claims/M miles

More crashes and less liability is the shield I keep warning about. It’s like saying over and over that 9.5 is a bigger number than 4.1 as you keep praying out loud that it’s not.