Today the US moved closer to a federal consumer data breach notification requirement (healthcare has had a federal requirement since 2009 — see Eisenhower v Riverside for why healthcare is different from consumer).

PC World says a presentation to the Federal Trade Commission sets the stage for a Personal Data Notification & Protection Act (PDNPA).

U.S. President Barack Obama is expected to call Monday for new federal legislation requiring hacked private companies to report quickly the compromise of consumer data.

Every state in America has had a different approach to breach deadlines, typically led by California (starting in 2003 with SB1386 consumer breach notification), and more recently led by healthcare. This seems like an approach that has given the Feds time to reflect on what is working before they propose a single standard.

In 2008 California moved to a more aggressive 5-day notification requirement for healthcare breaches after a crackdown on UCLA executive management missteps in the infamous Farrah Fawcett breaches (under Gov Schwarzenegger).

California this month (AB1755, effective January 2015, approved by the Governor September 2014) relaxed its healthcare breach rules from 5 to 15 days after reviewing 5 years of pushback on interpretations and fines.

For example, in April 2010, the CDPH issued a notice assessing the maximum $250,000 penalty against a hospital for failure to timely report a breach incident involving the theft of a laptop on January 11, 2010. The hospital had reported the incident to the CDPH on February 19, 2010, and notified affected patients on February 26, 2010. According to the CDPH, the hospital had “confirmed” the breach on February 1, 2010, when it completed its forensic analysis of the information on the laptop, and was therefore required to report the incident to affected patients and the CDPH no later than February 8, 2010—five (5) business days after “detecting” the breach. Thus, by reporting the incident on February 19, 2010, the hospital had failed to report the incident for eleven (11) days following the five (5) business day deadline. However, the hospital disputed the $250,000 penalty and later executed a settlement agreement with the CDPH under which it agreed to pay a total of $1,100 for failure to timely report the incident to the CDPH and affected patients. Although neither the CDPH nor the hospital commented on the settlement agreement, the CDPH reportedly acknowledged that the original $250,000 penalty was an error discovered during the appeal process, and that the correct calculation of the penalty amount should have been $100 per day multiplied by the number of days the hospital failed to report the incident to the CDPH for a total of $1,100.
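The arithmetic in that account can be checked with a short sketch. It assumes business days mean Monday through Friday with no holidays, that late days are counted as calendar days past the deadline (which is how the quoted passage arrives at eleven), and that the $100 per day rate and $250,000 maximum are the figures quoted above. The function names are hypothetical and this is not a statement of how the CDPH actually computes penalties.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int) -> date:
    """Return the date `days` business days after `start`.

    Assumption: business days are Mon-Fri; holidays are ignored.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday-Friday
            remaining -= 1
    return current


def late_penalty(detected: date, reported: date,
                 grace_business_days: int = 5,
                 per_day: int = 100,
                 cap: int = 250_000):
    """Return (deadline, days_late, penalty) for a breach report.

    Assumption: days late are calendar days past the deadline,
    and the penalty is per_day * days_late, capped at `cap`.
    """
    deadline = add_business_days(detected, grace_business_days)
    days_late = max(0, (reported - deadline).days)
    return deadline, days_late, min(days_late * per_day, cap)


# Dates from the quoted example: breach "confirmed" February 1, 2010,
# reported to the CDPH on February 19, 2010.
deadline, days_late, penalty = late_penalty(date(2010, 2, 1), date(2010, 2, 19))
print(deadline, days_late, penalty)  # 2010-02-08 11 1100
```

Under those assumptions the deadline lands on February 8, the report is eleven days late, and the penalty is $1,100, which matches the settlement figure rather than the original $250,000 assessment.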

It is obvious that too long a timeline hurts consumers. Too short a timeline, as the case above shows, forces mistakes: covered entities rush to a conclusion, then sink time into contesting unjust fines and repairing their reputation.

Another risk with too-short timelines (and a complaint you will hear from investigation companies) is that early notification undermines good, secret investigations (e.g. criminals will erase their tracks). This is a valid criticism, but it does not clearly outweigh the benefits of early notification to victims.

First, a law-enforcement delay caveat is meant to address this concern. AB1755 allows a report to be submitted 15 days after the end of a law-enforcement imposed delay period, similar to caveats found in prior requirements to assist important investigations.

Second, we have not seen huge improvements in attribution or accuracy after extended investigation time, mostly because politics start to set in. I am reminded of when Walmart in 2009 admitted to a 2005 breach. Apparently they used the time to prove they did not have to report credit card theft.

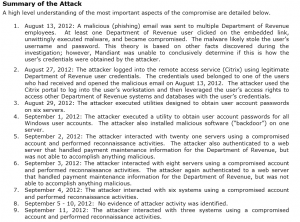

Third, consider the value of a long investigation relative to the objective of protecting data from breach. Take the 30-day Mandiant 2012 report for the South Carolina Department of Revenue. It ultimately was unable to figure out who attacked (although it still hinted at China). It is doubtful any more time would have resolved that question. The AP has reported Mandiant charged $500K or higher, and it is also doubtful many will find such high costs justified. Compare their investigation rate with the cost of improving victim protection:

Last month, officials said the Department of Revenue completed installing the new multi-password system, which cost about $12,000, and began the process of encrypting all sensitive data, a process that could take 90 days.

I submit to you that a reasonably short and focused investigation time saves money and protects consumers early. Delay for private investigation brings little benefit to those impacted. Fundamentally, who attacked tends to be less important than how a breach happened, and determining how takes a lot less time to investigate. As an investigator I always want to get to the who, yet I recognize this is not in the best interest of those suffering. So we see diminishing value in waiting and increasing value in notification. Best to apply fast pressure, and 30 days seems reasonable enough to allow investigations to reach conclusive and beneficial results.

Internationally, Singapore has the shortest deadline I know of at just 48 hours. If anyone thinks keeping track of all the US state requirements has been confusing, working globally gets really interesting.

Update, Jan 13:

Brian Krebs blogs his concerns about the announcement:

Leaving aside the weighty question of federal preemption, I’d like to see a discussion here and elsewhere about a requirement which mandates that companies disclose how they got breached. Naturally, we wouldn’t expect companies to disclose publicly the specific technologies they’re using in a public breach document. Additionally, forensics firms called in to investigate aren’t always able to precisely pinpoint the cause or source of the breach.

First, federal preemption of state laws sounds worse than it probably is. Covered entities of course want more local control at first, so they can weigh in heavily on politicians and set the rule. Yet look at how AB1755 in California unfolded. The medical lobby tried to get the notification deadline moved from 5 days to 60 days and ended up with 15. A federal 30-day rule, even where preemptive, isn’t completely out of the blue.

Second, disclosure of “how” a breach happened is a separate issue. The payment industry is the most advanced in this area of regulation; they have a council that releases detailed methods privately in bulletins. The FBI also has private methods to notify entities of what to change. Even so, generic bulletins are often sufficient to be actionable. That is why I mentioned the South Carolina report earlier. Here you can see useful details are public despite their applicability:

Obama also today is expected to make a case in front of the NCCIC for better collaboration between private and government sectors (Press Release). This will be the forum for this separate issue. It reminds me of the 1980s debate about control of the Internet led by Rep Glickman and decided by President Reagan. The outcome was a new NIST and the awful CFAA. Let’s see if we can do better this time.

Letters From the White House:

- Updated Department of Homeland Security Cybersecurity Authority and Information Sharing (7 pages, 185 kb) and its Section by Section Analysis (3 pages, 162 kb)

- Updated Data Breach Notification (9 pages, 250 kb) and its Section by Section Analysis (5 pages, 242 kb)