The “carriage” form-factor is ancient.

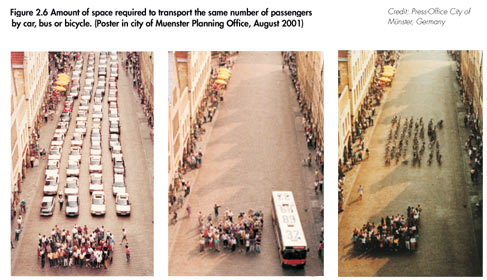

So even though today we say “car” instead of carriage, we should recognize that augmenting a single person’s travel with a giant opulent box is primitive thinking, and it obviously doesn’t scale to meet modern transit needs. Study after study by design experts has shown us how illogical it is to keep building and using cars:

Fortunately, modern exoskeletons are better suited (no pun intended) to the flexibility of both the traveler and those around them. Rex is a good example of why some data scientists spend their entire careers trying to unravel “gait” in order to analyse and improve the “signature” of human movement. Here they discuss how they are improving mobility for a particular target audience:

This is early-stage technology, and yet it still shows us how wrong it is to use a car. When I imagine such technology in use by everyone, I picture people putting on a pair of auto-trousers to jog 10 miles at 20 mph to “commute” while exercising, or to lift rubble off people for 12 hours without breaks after an earthquake, or both.

We already see this class of power-assisted travel in tiny form-factors in the latest generation of electric bicycles, such as the Shimano E8000 motor. It adds power as the cyclist pedals, creating a mixed-drive model:

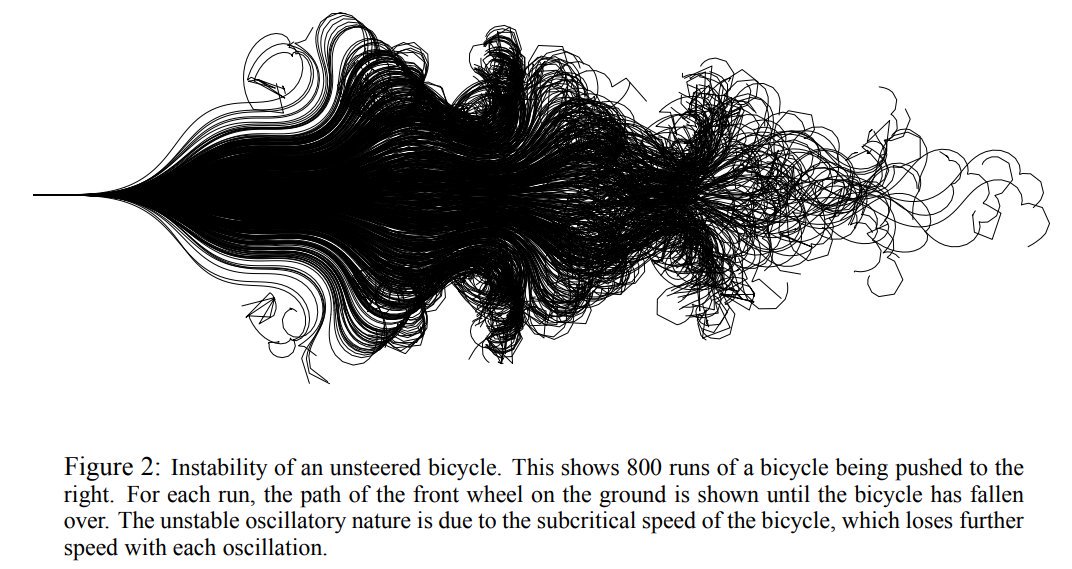

For what it’s worth, the “gait” (wobble) of bicycles is also super complicated and a rich area of data science research. Robots fail miserably (nice try, Yamaha) at emulating the nuance of controlling two wheels. Anyone saying driverless cars will reduce deaths isn’t looking at why driverless cars are more likely than human drivers to crash into pedestrians and cyclists. Any human can ride a bicycle, but to a driverless car this prediction tree is an impenetrable puzzle:

Unlike a cage, micro-engines form-fitted to the human body offer seemingly endless possibilities, just like the branches in that tree. So it makes less and less sense for anyone to want cages for personal transit, unless they’re trying to make a forceful statement by taking up shared space to deny freedom to others.

What is missing in the above sequence of photos? One where cars are completely gone, like bell-bottom trousers, because they waste so much for so little gain, lowering the quality of life for everyone involved.

Floating around in a giant private box really is a status thing, when you think about it. It’s a poorly thought-out exoskeleton, like a massive blow-up suit or fluffy dress that everyone else has to clean up after (and avoid being hit by).

Driverless cars meant to increase the number of empty carriages on roads (“summon mode”) will be hell on earth: a gridlock of empty, unnecessary, wasteful “hackney” rides, as if the failed aristocracy of the 1700s taught us nothing.

Here’s some excellent perspective on the stupidity of carrying forward the carriage design into modern transit:

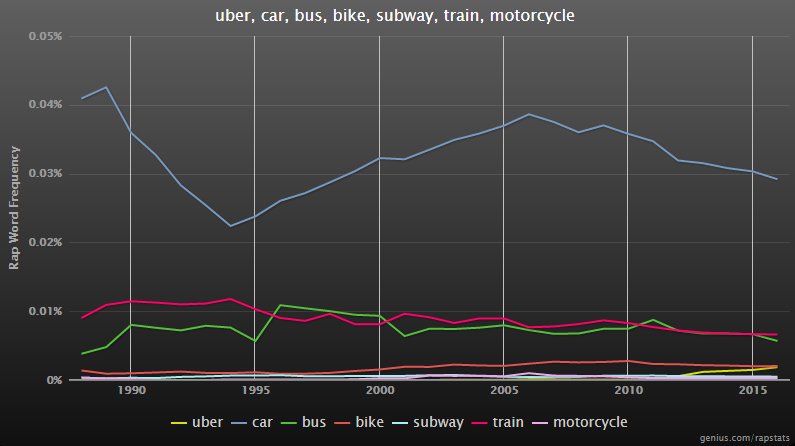

Rapstatus tells us cars still get a lot of lip service, so I suspect we’re a long way from carriages being relegated to ancient history, where they belong.

Nonetheless, I’m told new generations aren’t yet sold on dreams of long-dead kings, so I hope they already picture something like this display of stupidity when asked if they would like their “AI assistant” (servant) to bring their carriage around…