In 1941, Congress passed the National Cattle Theft Act to crack down on interstate cattle rustling. Today, tech giants face similar scrutiny for how they handle our personal data – but calling it “handling” is like calling cattle rustling “secret livestock relocation.” The cattle theft law was brutally simple: steal someone’s cow, cross state lines, face up to $5,000 in fines (about $100,000 today) and five years in prison. You either had someone else’s beloved Bessie or you didn’t.

Clean, clear, done.

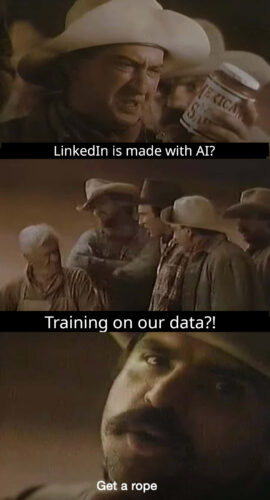

But data “theft” in our age of artificial intelligence? America’s tech oligarchs have made sure nothing stays that simple. When companies like Microsoft and Google harvest our personal data to train AI systems, they’re not just taking; they’re effectively duplicating and breeding. Every piece of your digital life, from search history to social media posts and from photos to private messages, is treated like human livestock in their “data” centers, endlessly duplicated and exploited across their server farms to maximize growth. Unlike cattle rustlers, who at least had to know how to tie a knot, these digital ranchers have convinced courts and Congress that copying and exploiting your life isn’t really theft at all. It’s just “data sharing.”

As described in a recent Barings Law article, these tech giants are being challenged on whether they can simply repurpose our data for their own benefit. Their defense? You clicked “I agree” on their deliberately incomprehensible terms of service.

It’s like a cattle rustler claiming the cow signed a contract. It’s like the Confederacy publishing books saying the slaves liked it (true story, and American politicians to this day still try to corrupt schools into teaching that slavery was good and that accountability for it is bad).

> [Florida 2023 law says] in middle school, the standards require students be taught slavery was beneficial to African Americans because it helped them develop skills…

The historical parallel that really fits today’s Big Tech agenda isn’t cattle theft. It’s darker, as in racist-slavery darker. Think about how plantation owners, officially designated “planters” in a system where the colonus (farmer) became the colonizer, viewed human beings as engines of wealth generation. Today’s tech giants have built a similar system of value multiplication, turning every scrap of our digital lives into seeds for their AI empires.

When oil prospectors engaged in highly illegal competitive horizontal drilling in Texas to literally undermine ownership boundaries, at least they were fighting over something finite. But data exploitation? That’s infinite duplication and leverage. Each tweet, each photo, each private message becomes raw material for generating endless new “property,” all owned and controlled by the tech giants.

Have you seen Elon Musk’s latest lawsuit, in which he falsely claims that all user accounts on his companies’ platforms are owned solely by him rather than by the users who create and use them?

The legal framework around data rights hasn’t evolved by accident. These companies have deliberately constructed a system where “consent” means whatever they want it to mean, as long as it benefits them. Your data isn’t just taken; it’s cloned, processed, and used to build AI systems that further concentrate power in their hands. Could you even argue that a digital version of you that they present as authentic isn’t actually you?

The stakes go far beyond simple questions of data ownership. We’re watching the birth of a new kind of wealth extraction that denies real consent, one that turns human experience itself into corporate property with no liberty or justice for anyone caught in it.

The historic cattle laws stopped rustlers. The historic oil laws eventually evolved to protect property owners from subsurface theft. Today’s challenge is to recognize and confront how tech companies have built an empire on the expectation that they can exploit human lives without limit, simply because those lives are now digital too.

As these cases wind through the courts, we’re left with a crucial question: Will we let companies claim perpetual rights to multiply and profit from our digital lives just because we were dragged, against our better judgment, into their gigantic monopolistic services, as if the Magna Carta never happened? Should clicking “I agree” grant infinite rights to extract value from our personal data, creative works, and social connections, as if we were meant to be serfs under a digital kleptocrat?

The answer will shape not just our digital future, but our understanding of fundamental human rights in the age of artificial intelligence.