Unitree robots in the dog house

Urinary poor password hacked

Unmarking poo-lice territory

The news story today about a police robot is really a story about the economics of hardware safety, and why the lessons of WWII are so blindingly important to modern robotics.

Picture this: Police deploy a $16,000 Unitree robot into an armed siege (so they don’t have to risk sending any empathetic humans to deescalate instead). The robot’s tough titanium frame can withstand bullets, its sharp sensors can see through walls, and its AI can navigate complex obstacles like dead bodies autonomously. Then a teenager with a smartphone intervenes and takes complete control of it in a few minutes.

Cost of the zero day attack?

Are we still blowing a kid’s whistle into payphones for free calls or what?

This economic reality in asymmetric conflict reveals a fundamental dysfunction in how the robotics industry approaches risk. The embarrassing UniPwn exploit against Unitree robots has exposed authentication that’s literally the word “unitree,” hardcoded encryption keys identical across all devices, and a complete absence of input validation.
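For anyone wondering what the missing input validation would even look like, here is a minimal Python sketch. To be clear, this is not Unitree's code: the field being validated, the 32-byte limit, and the allowed character set are all assumptions invented for illustration. The idea is simply to reject anything outside a strict allowlist before it gets anywhere near a shell or a config file.

```python
import re

# Hypothetical limits for a provisioning field received over a wireless link.
MAX_LEN = 32
ALLOWED = re.compile(r"[A-Za-z0-9 _.\-]+")


def validate_field(raw: bytes) -> str:
    """Return the decoded field, or raise ValueError if it looks unsafe."""
    if not 1 <= len(raw) <= MAX_LEN:
        raise ValueError("bad length")
    try:
        text = raw.decode("ascii")
    except UnicodeDecodeError as exc:
        raise ValueError("not ASCII") from exc
    # fullmatch means every character must be on the allowlist,
    # so shell metacharacters such as ';' or '$' are rejected outright.
    if not ALLOWED.fullmatch(text):
        raise ValueError("disallowed characters")
    return text
```

An allowlist is a deliberate choice here: trying to enumerate every dangerous character is a losing game, while naming the handful of safe ones is cheap.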

I’ll say it again.

“Researchers” found the word unitree would bypass the Unitree robot security with minimal effort. We shouldn’t call that research. It’s like saying scientists have discovered the key you left in your front door opens it. Zero input validation means…

This is 1930s robot level bad.

For those unfamiliar with history, the design flaws of the Nazi V-1s are how we remember them. Yet even Hitler’s dumb robots had better security than Unitree in 2025 – at least the V-1s couldn’t be hijacked mid-flight by shouting “vergeltungswaffe” on radio frequencies.

WWII military technology had more sophisticated operational security than modern robots. Think about how genuinely damning that is for the current robotics industry. Imagine a 1930s jet engine with a fundamentally better design than one today.

It is a symptom of hardware companies treating their vulnerabilities in software as an afterthought, creating expensive physical systems that can be compromised for free. Imagine going to the gym and finding a powerlifter who lacks basic mental strength. “Hey, can someone tell me if the big and heavy 45 disc is more or less work than this small and light 20 one” a tanned muscular giant with perfect hair pleads, begging for help with his “Hegseth warrior ethos” workout routine.

French military planners spent billions pouring concrete for a man named Maginot, after he dreamed up what would have worked better for WWI. His foolish “impregnable” static defensive barrier was useless against coming radio-controlled planes and trucks and tanks using network effects to rapidly focus attacks somewhere else. The Germans needed only three days to prove the dynamic soft spots need as much attention or more than the expensive static hard ones. Robotics companies are making the identical strategic error, pouring millions into unnecessary physical hardening while leaving giant squishy digital backdoors wide open.

Unitree’s titanium chassis development costs over $50,000, military-grade sensors run $10,000 per unit, advanced motors cost $5,000 each, and rigorous testing burns through hundreds of thousands in R&D. So fancy. Meanwhile, authentication was literally fixed as “unitree,” while encryption was copy-pasted from Stack Overflow, and input validation… doesn’t exist.
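To show how little it takes to do better than a shared password, here is a hedged sketch of per-device challenge-response authentication using only Python's standard library. None of this is Unitree's design. The function names, the serial-number format, and the idea of a vendor-held master key are all hypothetical, and a real product would keep keys in a secure element and use a vetted KDF such as HKDF rather than this bare HMAC shortcut.

```python
import hashlib
import hmac
import secrets


def derive_device_key(master_key: bytes, serial: str) -> bytes:
    """Derive a per-robot key so one extracted key does not unlock the fleet."""
    return hmac.new(master_key, serial.encode("utf-8"), hashlib.sha256).digest()


def new_challenge() -> bytes:
    """A fresh random nonce, so a recorded response cannot be replayed."""
    return secrets.token_bytes(16)


def sign_challenge(device_key: bytes, challenge: bytes) -> bytes:
    """What a legitimate controller holding the device key would send back."""
    return hmac.new(device_key, challenge, hashlib.sha256).digest()


def verify_response(device_key: bytes, challenge: bytes, response: bytes) -> bool:
    """Robot-side check, using a constant-time comparison."""
    expected = sign_challenge(device_key, challenge)
    return hmac.compare_digest(expected, response)
```

With this shape, sending the bytes `b"unitree"` as a response gets you nothing, and a key pulled off one robot fails against its neighbor because each serial number yields a different key.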

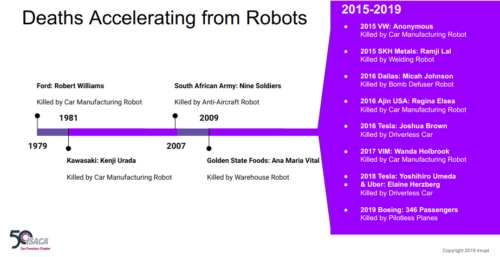

This pattern of inverted priorities, where safety engineering ignores the past, extends far beyond Unitree. Just weeks ago in September 2025, Tesla influencers attempting a coast-to-coast “Full Self-Driving” trip crashed their Model Y within the first 60 miles when the car completely ignored a metal girder lying in the road.

The Tesla robot stupidly barreled into disaster at 76 mph and bounced dramatically into the air, causing an estimated $22,000 in damage and cancelling the trip before they even left California. This is the same company that promised coast-to-coast autonomous driving by 2017 yet still can’t detect the most obvious and basic road debris. It was NOT an edge case failure. It was proof of Tesla flaws still being overlooked, despite extensive documentation of more than 50 deaths since the first ones in 2016.

Robots marketed for special police use have been similarly disappointing for over a decade, as I’ve spoken and written about many times. In 2016, a 300-pound Knightscope K5 ran over a 16-month-old toddler at Stanford Shopping Center, hitting the child’s head and driving over his leg before continuing its patrol. The robot “did not stop and kept moving forward” according to the boy’s mother. A year later, another Knightscope robot achieved internet fame by rolling itself into a fountain at Georgetown Waterfront, prompting one cynical expert’s observation: “We were promised flying cars, instead we got suicidal robots.”

That’s being generous, of course, as the robot couldn’t even see the cliff it was throwing itself off.

These incidents illuminate a critical historical insight into the economics of security: hardware companies systematically undervalue software engineering because their own mental models are flawed. Some engineers are so rooted in physical manufacturing they can’t see the threat models more appropriate to their work.

Traditional hardware development means you design a component once, manufacture it at scale, and ship it. Quality control means testing physical tolerances and materials science. If something breaks, you issue a recall. It’s bows and arrows or swords and shields. Edge cases thus can be waved off because probability is discrete and calculated, like saying don’t bring a knife to a gun fight (e.g. Tesla says don’t let any water touch your vehicle, not even humidity, because they consider weather an edge case).

Software has fundamentally different economics. We’re talking information systems of strategy, infiltration and alterations to command and control. It’s constantly attacked by adversaries who adapt faster than any recall process. It must handle infinite edge cases, injected without warning, that no physical testing regime can anticipate. It requires ongoing maintenance, updates, and security patches throughout its operational lifetime. Most importantly, software failures can propagate instantaneously across entire fleets through network effects, turning isolated incidents into rapid systemic disasters.

A laptop without software has risks, and is also known as a paperweight. Low bar for success means it can scope itself towards low risk. A laptop running software however has exponentially more risks, as recorded and warned during the birth of robotic security over 60 years ago. Where engineering outcomes are meant to be more useful, they need more sophisticated threat models.

The UniPwn vulnerability exemplifies all of this and the network multiplication effect. The exploit is “wormable” because infected robots would automatically compromise others in Bluetooth range. One compromised robot in a factory doesn’t just affect that unit; it spreads to every robot within wireless reach, which spreads to every robot within their reach. A single breach becomes a factory-wide infection within hours, shutting down production and causing millions in losses. This is the digital equivalent of the German breakthrough at Sedan—once the line is broken, everything behind it collapses.
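The hop-by-hop spread described above is just a breadth-first walk over a "who is in radio range of whom" graph. Here is a small Python sketch of that idea. The factory layout and robot names are made up, and the model assumes every reachable robot is vulnerable and gets infected one hop per round, which is a simplification rather than a measurement of the real exploit.

```python
from collections import deque


def infection_rounds(in_range: dict[str, set[str]], patient_zero: str) -> dict[str, int]:
    """Map each reachable robot to the round (hop count) in which it is infected."""
    rounds = {patient_zero: 0}
    queue = deque([patient_zero])
    while queue:
        robot = queue.popleft()
        for neighbor in in_range.get(robot, set()):
            if neighbor not in rounds:  # first time this robot is reached
                rounds[neighbor] = rounds[robot] + 1
                queue.append(neighbor)
    return rounds


# Hypothetical floor plan: A-B-C-D form a chain, E sits out of range.
factory = {
    "A": {"B"},
    "B": {"A", "C"},
    "C": {"B", "D"},
    "D": {"C"},
    "E": set(),
}
print(infection_rounds(factory, "A"))
```

In this made-up floor plan only E, the robot nobody can reach by radio, stays clean. Everything else falls within three hops of a single compromised unit.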

And I have to point out that this has been well known and discussed in computer security for decades. In the late 1990s I personally was able to compromise critical infrastructure across five US states with trivial tests. And likewise in the 90s, I sent a single malformed ping packet to help discover all the BSD-based printers used by a company in Asia… and we watched as their entire supply chain went offline. Oops. Those were the kind of days we were meant to learn from, to prevent happening again, not some kind of insider secret.

Hardware companies still miss this apparently because they don’t study history and then they think in terms of isolated failures rather than systemic vulnerabilities. A mechanical component fails gradually and affects only that specific unit. A software vulnerability fails catastrophically and affects every identical system simultaneously. The economic models that work for physical engineering through redundancy, gradual degradation, and localized failures become liabilities in software security.

Target values of the robots in this latest story range from $16,000 to $150,000. That’s crazy compared to an attack cost of zero: grab any Bluetooth device and send “unitree”. Damage potential reaches millions per incident through production shutdowns, data theft, and cascade failures.

Proper defense at the start of engineering would cost a few hundred dollars per robot for cryptographic hardware and secure development practices. Unitree could have prevented this vulnerability for less than an executive dinner. Now it’s going to be quite a bit more money to go back and clean up.
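The arithmetic is worth spelling out, so here is a back-of-the-envelope Python sketch. Only the $16,000 robot price comes from the story. The $300 figure is my stand-in for "a few hundred dollars," and the 100-robot fleet and $1,000,000 loss are illustrative assumptions, not reported numbers.

```python
def defense_share(defense_per_robot: float, robot_price: float) -> float:
    """Security spend as a fraction of the robot's sticker price."""
    return defense_per_robot / robot_price


def breakeven_probability(fleet_size: int, defense_per_robot: float,
                          loss_per_incident: float) -> float:
    """Incident probability above which the security spend pays for itself."""
    return (fleet_size * defense_per_robot) / loss_per_incident


# Assumed inputs: $300 stands in for "a few hundred dollars", $16,000 is the
# cheapest robot in the story, 100 robots and a $1,000,000 loss are made up.
share = defense_share(300, 16_000)
odds = breakeven_probability(100, 300, 1_000_000)
print(f"defense is {share:.1%} of the robot price")
print(f"pays for itself if incident odds exceed {odds:.1%}")
```

Under those assumptions the defense costs roughly 2 percent of the cheapest robot, and it breaks even once the odds of a single million-dollar incident across the fleet pass 3 percent. With an attack cost of zero, that is not a high bar.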

The perverse market incentive in security is that it remains invisible until it spectacularly fails. Hardware metrics will dominate purchasing decisions by focusing management on speed, strength, battery life, etc. while software quality is dumped onto customers who lack technical expertise to evaluate it in downscoped/compressed sales cycles. Competition then rewards shipping fast crap over shipping secure quality because defects manifest only after contracts are signed, under adversarial conditions kept out of product demonstrations.

The real economic damage of this loophole extends beyond immediate exposure of the vendor. When the police robot gets compromised mid-operation, the costs cascade through blown operations, leaked intelligence, destroyed public trust, legal liability, and potential cancellation of entire robotics programs, not to mention potential fatalities. The explosive damage could slow robotics adoption across law enforcement, creating industry-wide consequences from a single preventable vulnerability. Imagine also if the flaws had been sold secretly, instead of disclosed to the public.

It’s Stanley Kubrick’s HAL 9000 story all over again: sure it could read lips but the most advanced artificial intelligence in cinema was defeated by a guy pulling out its circuit boards with a… screwdriver. The simplest attacks threaten the most sophisticated robots.

Hardware companies need to internalize that in networked systems the security of the communications logic isn’t a feature. It’s the foundation of the networking. Does any bridge’s hardware matter if a chicken can’t safely cross to the other side?

All other engineering rests upon the soft logic working without catastrophic soft failure that renders hardware useless. The most sophisticated mechanical engineering becomes worthless where attackers can take control via trivial thoughtless exploits.

The robotics revolution is being built by companies that aren’t being intelligent enough to predict their own future by studying their obvious past. Until the market properly prices security risk through insurance requirements, procurement standards, liability frameworks, and certification programs, customers will continue paying premium prices for robots that will be defeated for free. The choice is stark: fix the software economics now, or watch billion-dollar robot deployments self-destruct.

And now this…

- 2014-2017: Multiple researchers document ROS (Robot Operating System) vulnerabilities affecting thousands of industrial and research robots

- 2017: IOActive discovers critical vulnerabilities in SoftBank Pepper robots – authentication bypass, hardcoded credentials, remote code execution

- 2017: Same vulnerabilities found in Aldebaran NAO humanoid robots used in education and research

- 2018: IOActive demonstrates first ransomware attack on humanoid robots at Kaspersky Security Summit

- 2018: Academic researchers publish authentication bypass vulnerabilities (CVSS 8.8) for Pepper/NAO platforms

- 2018: Alias Robotics begins cataloging robot vulnerabilities (RVD) – over 280 documented by 2025

- 2019-2021: Multiple disclosure attempts for Pepper/NAO vulnerabilities ignored by SoftBank

- 2020: Alias Robotics becomes CVE Numbering Authority for robot vulnerabilities

- 2021: SoftBank discontinues Pepper production with vulnerabilities still unpatched

- 2022: DarkNavy team reports undisclosed Unitree vulnerabilities at GeekPwn conference

- 2025: CVE-2025-2894 backdoor discovered in Unitree Go1 series robots

- 2025: UniPwn exploit targets current Unitree G1/H1 humanoids with wormable BLE vulnerability

- 2025: CVE-2025-60250 and CVE-2025-60251 assigned to UniPwn vulnerabilities

- 2025: UniPwn claims to be *cough* “first major public exploit of commercial humanoid platform” *cough* *cough*

- 2025: Academic paper “Cybersecurity AI: Humanoid Robots as Attack Vectors” documents UniPwn findings

Shout out to all those hackers who haven’t disclosed dumb software flaws in modern robots because… fear of police deploying robots on the wrong party (them).