Update April 22, 2021: A statement from Jake Fisher, Consumer Reports’ senior director of auto testing, confirms that Tesla vehicles lack basic safety: they fail to include a modern-day equivalent of a seat belt.

In our test, the system not only failed to make sure the driver was paying attention — it couldn’t even tell if there was a driver there at all.

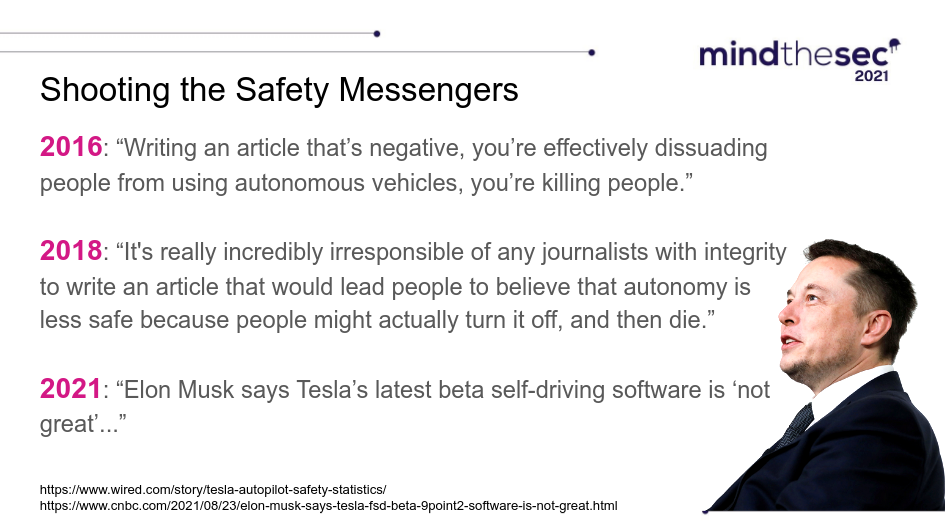

Other manufacturers have neither Tesla’s safety failures, nor its exaggerated safety claims, nor a CEO who encourages known unsafe operation of his sub-par engineering.

Just a few days ago, on April 14th, the CEO of Tesla tweeted a prediction:

Major improvements are being made to the vision stack every week. Beta button hopefully next month. This is a “march of 9’s” trying to get probability of no injury above 99.999999% of miles for city driving. Production Autopilot is already above that for highway driving.

Production Beta

You might immediately see a problem with such a forward-looking statement, clearly meant to mislead investors. “Production Autopilot” means it is already in production, yet the prior sentence was “Beta… next month”.

Can it be in production, under continuous modification for testing, and still be a month away from reaching beta? It sounds more like none of it is true. The least possible work is being done (real testing and safe deployment are hard), but they want extra credit.

Also take special note of the highway driving reference. “Production” is being used to mean a very limited subset of production. It is still being tested in the city because it is not ready there, while supposedly being production-ready for the highway. Yet all of it is called production while being unready?

This is very tortured marketing double-speak, to the point where Tesla language becomes meaningless. Surely that is intentional, a tactic to avoid responsibility.

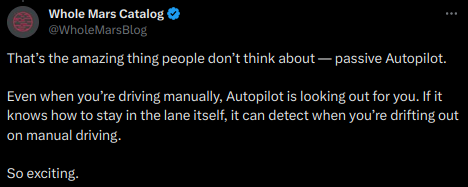

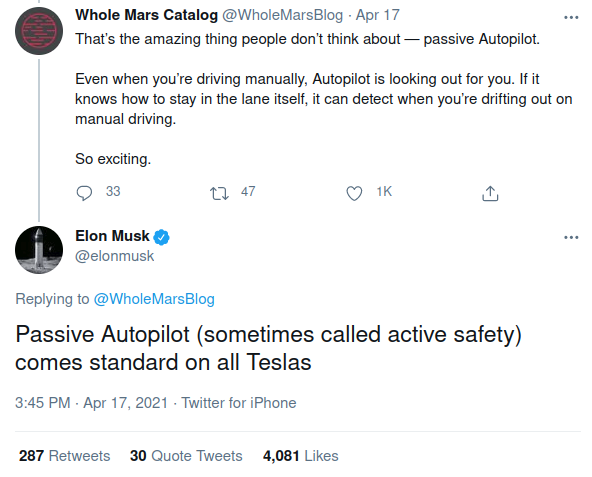

Let’s move on to April 17th at 3:45PM. The CEO of Tesla was tweeting claims that Autopilot is standard on all Teslas, as part of a full endorsement. He was amplifying third parties working as Tesla PR who cooked up false and misleading advertising like this:

Hold that thought. No matter what, even with manual mode, Tesla’s PR campaign is telling drivers that Autopilot is always there preventing crashes. Got it? This is important in a minute.

Also, this is not a statement about a production, highway-only Autopilot. It does not specify the beta button or the vision stack of Autopilot. There is nothing about this or that version, in this or that situation.

This is a statement about ALL Autopilot versions on all Teslas.

ALWAYS on, looking out for you.

Standard. On ALL Teslas.

These are very BOLD claims, also known as DISINFORMATION. Here it is in full effect with CEO endorsement:

Passive means active? Who engages in such obviously toxic word soup?

Just to be clear about sources, the above @WholeMarsBlog user operating like a PR account and tweeting safety claims is… a Tesla promotional stunt operation.

It tweets things like “2.6s 0-60 mph”, promoting extreme acceleration right next to a video called “Do not make the mistake of underestimating FSD @elonmusk”.

Do not underestimate “full self driving”? Go 0-60 in 2.6s?

That seems like ridiculously dangerous advice that will get people killed. It means an uncontrolled acceleration launching them straight into a tree with NO time for even a passive chance of survival.
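For a rough sense of scale, here is a back-of-the-envelope sketch of what “2.6s 0-60 mph” implies. It assumes constant acceleration from a standstill, which no real launch achieves exactly, so treat the results as averages. The helper name `launch_profile` is purely illustrative.

```python
MPH_TO_M_PER_S = 0.44704    # exact: 1 mph = 0.44704 m/s
STANDARD_GRAVITY = 9.80665  # m/s^2
METERS_PER_FOOT = 0.3048


def launch_profile(target_mph: float, seconds: float):
    """Hypothetical helper. Returns (average acceleration in m/s^2,
    the same value in multiples of g, distance covered in m),
    ASSUMING constant acceleration from a standstill."""
    final_speed = target_mph * MPH_TO_M_PER_S
    accel = final_speed / seconds
    # With constant acceleration, speed ramps up linearly, so distance is
    # the area of a triangle: half of final speed times elapsed time.
    distance = 0.5 * final_speed * seconds
    return accel, accel / STANDARD_GRAVITY, distance


accel, in_g, meters = launch_profile(60, 2.6)
print(f"average acceleration: {accel:.1f} m/s^2 ({in_g:.2f} g)")
print(f"distance to reach 60 mph: {meters:.0f} m ({meters / METERS_PER_FOOT:.0f} ft)")
```

Under that assumption, the launch averages just over 1 g and reaches 60 mph within roughly 35 m (about 114 ft) of road.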

Here’s the associated video, which at this point reads as foreshadowing.

To summarize, an account linked to the CEO has been explicitly encouraging Tesla owners to perform highly dangerous performance and power tests on small public roads that lack markings.

Now hold those two thoughts together. We can see Tesla’s odd marketing system promoting two messages. First, Autopilot is always looking out for you on all Tesla models without exception. Second, owners should try extreme tests on unmarked roads, where underestimating Autopilot is called the “mistake”. In other words…

Tesla PR is telling you to DRIVE DANGEROUSLY AND DIE.

See the connections?

I see the above as evidence of an invitation from Tesla (they certainly didn’t object) to use Autopilot for high-performance stunts on small roads, where even a slight miscalculation could be disastrous.

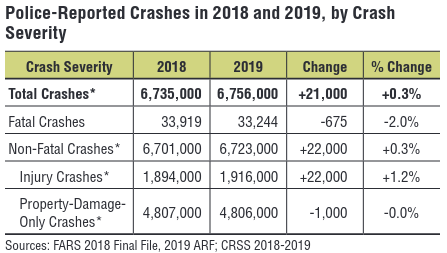

Next, on April 17th, just hours before yet another fatal Tesla accident, the CEO tweeted his rather crazy idea that a Tesla offers a lower chance of an accident when compared to all automobile crash data combined.

Specifically, the CEO points to his own report that states:

NHTSA’s most recent data shows that in the United States there is an automobile crash every 484,000 miles.

Where does it show this? What does “most recent data” mean? Are we talking about 2016?

This is what I see in the 2020 report, which presumably includes Teslas:

Tesla offers no verifiable citations, no links, no copy of the recent data, not even a date. Their claims are very vague and written into a report they publish themselves, and we have no way of validating them.

Also, how does Tesla define a crash? Is it the same definition the NHTSA uses? And why does Tesla count crashes instead of the more meaningful metric of fatalities or injuries?

The NHTSA publishes a lot of fatality data. Is every ding and bump on every vehicle of any kind being compared with only what Tesla chooses to count as an “accident”? All of it seems extremely, unquestionably misleading.
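To make the mismatch concrete, here is a minimal sketch that puts the two figures quoted above on the same per-mile footing. One is the “99.999999% of miles” no-injury goal from the CEO’s April 14th tweet. The other is the “crash every 484,000 miles” that Tesla’s report attributes to NHTSA. The function names are hypothetical. The arithmetic assumes each figure means exactly what it says, which is precisely what Tesla gives us no way to verify.

```python
def rate_from_share_without_event(share: float) -> float:
    """Hypothetical helper: 'no event on `share` of miles' -> events per mile."""
    return 1.0 - share


def rate_from_miles_between_events(miles: float) -> float:
    """Hypothetical helper: 'one event every `miles` miles' -> events per mile."""
    return 1.0 / miles


# The two figures as quoted. NOTE: they count DIFFERENT kinds of events.
injury_goal = rate_from_share_without_event(0.99999999)      # injuries per mile (a goal)
nhtsa_crash_rate = rate_from_miles_between_events(484_000)   # any crash, per mile

print(f"injury goal:   {injury_goal:.2e} per mile (about 1 per {1 / injury_goal:,.0f} miles)")
print(f"NHTSA crashes: {nhtsa_crash_rate:.2e} per mile (1 per {1 / nhtsa_crash_rate:,.0f} miles)")
print(f"ratio of the two rates: {nhtsa_crash_rate / injury_goal:.0f}")
```

The two rates come out roughly 200 times apart. However, one counts injuries and the other counts any crash at all. The ratio therefore says nothing about relative safety until both sides count the same kind of event.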

And the misuse of data sits beneath statements the company makes, like “Tesla vehicles are engineered to be the safest cars in the world.” This is forward-looking language, not a statement of fact. They are meant to be safe, but when? Sometime in the future? Are they not the safest yet, and if not, why not? Again misleading.

The reverse issue also comes to mind. If a child adds 2+2=4 a billion times, that doesn’t qualify them as ready to take a calculus exam.

Yet Tesla keeps boasting of billions of miles “safely” traveled, as though 2+2 were magically equivalent to actual complex driving conditions with advanced risks. It’s a logical fallacy, and it seems intentionally misleading.

You can see the CEO pumping up a generic Autopilot (all of them, every version, every car described as totally equivalent). He presents it as something that will prevent huge numbers of crashes and make an owner exponentially safer, based only on hand-wavy numbers.

Now watch what happens after a crash. He immediately disowns his own product, splitting hairs about this or that version and claiming there’s no expectation of capability in any common situation.

His next tweet on the subject comes on April 19th at 2:14PM, when he rage-tweets insider information (secret logs) to dispute claims made by witnesses and reporters.

To recap, before a fatal accident the CEO describes Autopilot as a single product across all Teslas that dramatically reduces the risk of a crash no matter what. Then, immediately after a fatal accident, the CEO is frantically slicing and dicing to carve out exceptions:

- Enabled

- Purchased FSD

- Standard Autopilot

- Lane lines

- This street

These caveats seem entirely disingenuous compared with just two days earlier. Then, everything was being heavily marketed as safer without any detail, any warning, any common sense, or any transparency.

Note that the WSJ report that prompted the tweet is drawing far less social engagement than the CEO’s own network reaches. That helps explain how and why he pushes self-serving narratives even while admitting the facts are not yet known.

The CEO is trying to shape beliefs and undermine the voice of professionals, to get ahead of the facts before they can be reported accurately.

Now just imagine if the CEO cared about safety. On April 17th he could have tweeted what he was saying on the 19th instead:

Dear loyal fans, just so you are aware, your Standard Autopilot isn’t like Purchased FSD, and it won’t turn on unless it sees something that looks like a lane line… don’t overestimate its abilities. In fact, it doesn’t turn on for a minute or more, so you could be in grave danger.

Big difference, right? It’s much better than that very misleading “always on” and “safest car in the world” puffery that led right into another tragic fatality.

Seriously, why didn’t his tweets on the 17th have a ton of couched language and caveats like those on the 19th?

I’ll tell you why: the CEO pushes disinformation before a fatality and then more disinformation after it.

Disinformation from a CEO

Let’s break down a few simple and clear problems with the CEO’s statement. Here it is again:

First, the CEO invokes only lane lines in his reply to the tweet. That means he completely sidesteps any mention of safety measures. He knows there are widespread abuses and bypasses of the “in place” weighted seat and steering wheel feedback measures.

We know the CEO regularly amplifies random posts by people who promote him, including people who practice hands-off driving. So we should never be surprised when his followers do exactly what he promotes.

The CEO basically likes and shares marketing material made by Tesla drivers who do not pay attention, so he’s creating a movement of bad drivers who practice unsafe driving and ignore warnings. Wired very clearly showed how a 60 Minutes segment with the CEO promoted unsafe driving.

Even Elon Musk Abuses Tesla’s Autopilot. Musk’s ’60 Minutes’ interview risks making the public even more confused about how to safely use the semi-autonomous system.

We clearly see in his tweet response that he neither reiterates the safety measure claims, nor condemns or even acknowledges the well-known flaws in Tesla engineering.

Instead he tries to narrow the discussion down to just lines on the road. Don’t let him avoid a real safety issue here.

In June of 2019 a widely circulated video showed a Tesla operating with nobody in the driver seat.

…should be pretty damn easy to implement [prevention controls], and all the hardware to do so is already in the car. So why aren’t they doing that? That would keep dangerous bullshit like this from happening. Videos like this… should be a big fat wake-up call that these systems are way too easy to abuse… and sooner or later, wrecks will happen. These systems are not designed to be used like this; they can stop working at any time, for any number of reasons. They can make bad decisions that require a human to jump in to correct. They are not for this. I reached out to Tesla for comment, and they pointed me to the same thing they always say in these circumstances, which basically boils down to “don’t do this.”

In September 2020, a widely circulated video showed people drinking in a Tesla at high speed with nobody in the driver seat.

This isn’t the first time blurrblake has posted reckless behavior with the Tesla…. He has another video up showing a teddy bear behind the wheel with a dude reclining in the front passenger seat.

Show me the CEO’s condemnation, or a call for regulation, of an owner putting a teddy bear behind the wheel in sheer mockery of Tesla’s negligent safety engineering.

In March 2021, a story again hit the news: teenagers in a Tesla, with nobody in the driver seat, ran into a police car.

That’s right, a Tesla crashed into a police car (reversing directly into it) after being stopped for driving on the wrong side of the road! Again, let me point out that police found nobody in the driver seat of a car that crashed into their police car. I didn’t find any CEO tweets about “lane lines” or versions of Autopilot.

Why was a new Tesla driving on the wrong side of the road with nobody in the driver seat, let alone crashing into a police car with its safety lights flashing?

And in another case during March 2021, Tesla gave an owner the ability to summon the car remotely. When the owner used the feature, the Tesla nearly ran over a pregnant woman with a toddler. The tone-deaf official response to this incident was that someone should be in the driver seat. That completely contradicts a feature designed on the principle that nobody is in the car.

People sometimes point out how the CEO begs for regulation of AI and warns that unregulated AI is dangerous. Yet those same people never seem to criticize him for failing to lift a finger himself to curb and shut down these simple examples of bad behavior, right here, right now.

Regulation by others wouldn’t even be needed if Tesla would just engineer real and basic security.

The CEO, for example, calls seat belts an obviously good thing that nobody should ever have delayed. Yet there’s ample evidence that he’s failing to install today’s seat belt equivalent. Very mixed messaging. Seat belts are a restraint, reducing freedom of movement, and the CEO is claiming he believes in them while failing to restrain people.

There must be a reason the CEO avoids deploying better safety while also telling everyone it’s stupid to delay better safety.

Second, lines may be needed for Autopilot to turn on. OK. Now explain whether Autopilot can continue without lines. More to the point, does a line have to be seen for a second or for a minute? The CEO makes none of these distinctions, while pretending to care about facts. In other words, if a line is erroneously detected, we should assume Autopilot can be enabled. Done. His argument is cooked.

Third, what’s a line? WHAT IS A LINE? Come on, people. You can’t take any statement from this CEO at face value. He talks about faded, worn, vague, confused lines as if there were some kind of universal definition, when Autopilot has no real idea of what a line is. Again, his argument is cooked.

Sorry, but this point about the CEO’s deceptive methods is so important that it requires shouting again: WHAT IS A LINE?

Anything can be read as a line if a system is dumb enough, and Tesla’s system has repeatedly been shown to make extremely dumb mistakes. It will see lines where there are none, and sometimes it doesn’t see double-yellow lines.

Fourth, the logs can be wrong or corrupted, especially if they’re being handled privately and opaquely to serve the CEO’s agenda. His claim rested on “logs recovered so far”. Such a statement is extremely poor form; why say anything at all?

The CEO is not turning data over to police for validation. Instead, he is trying to curry favor with his loyalists by yelling partial truths and attacking journalists. Such behavior is extremely suspicious. The CEO is withholding information while knowing full well that facts would be better stated by independent investigators.

Local police responded to the CEO tweets with “if he has already pulled the data, he hasn’t told us that.”

Why isn’t the CEO of Tesla working WITH investigators instead of trying to keep data secret and control the narrative, not to mention violating investigation protocols?

…the National Transportation Safety Board (NTSB), which removed Tesla as a party to an earlier investigation into a fatal crash in 2018 after the company made public details of the probe without authorisation.

The police, meanwhile, are saying what is very likely to be true.

We have witness statements from people that said they left to test drive the vehicle without a driver and to show the friend how it can drive itself.

Let’s also not forget that this CEO is the same guy who in March 2020 tweeted the disinformation that “Kids are essentially immune” to COVID-19. Today we read stories saying the opposite.

…government data from Brazil suggest that over 800 children under age 9 have died of Covid-19, an expert estimates that the death toll is nearly three times higher…

Thousands of children dying in the pandemic after the Tesla CEO told the world to treat them as immune. “Essentially immune”? That’s double-speak again. It is like saying Autopilot is in production, meaning highway only, because it is still being tested in cities and a month from now it will reach beta.

Or double-speak like saying Autopilot always makes every Tesla owner safer, except on this one road, or in this one car, because of some person.

Who trusts his data, his grasp of responsibility for words and his predictions?

Just as a quick reminder, this is the 28th Tesla crash to be investigated by the NHTSA. And in 2013, when this Model S was released, the CEO called it the safest car on the road. Since then, as many as 16 deaths have allegedly occurred while Autopilot was in use.

Fifth, the location, timing (9:30PM), and style of the accident suggest extremely rapid acceleration. The car didn’t turn to follow the road and instead went in a straight line into a tree.

This is consistent with someone trying to test or push the extreme performance “capabilities” of the car, as promoted and encouraged by the CEO above and many times before. As everyone knows, that includes people trying to push Autopilot (as recorded by witnesses).

Remember those thoughts I asked you to hold all the way up at the top of this post? A reasonable person hears “Autopilot is always on and much safer than a human” and watches videos of “Don’t underestimate FSD” next to comments about blazing acceleration times… it pretty obviously adds up to Tesla creating this exact scenario.

Tesla owners dispute CEO claims

Some of this has already been explored by Tesla owners, who started uploading proof of their cars operating on Autopilot with no lines on the road.

In one Twitter thread, the owner of a 2020 Model X with Autopilot and FSD Capability shares his findings, seemingly contradicting the Tesla CEO’s extremely rushed and brash statements.

@LyftGift Part One

7:55am, I returned to the parking lot to show you folks how the Autopilot engages with no lines marked on the road as @elonmusk claims is necessary. I engaged autopilot without an issue. I didn’t post this video right away, because I wanted to see how y’all would twist it.

@LyftGift Part Two

Show me a line. Any line. Show me a speed limit sign. Any sign.

@LyftGift then reiterates with a screenshot: “At 2:15 both icons are activated. Cruise and AP” with no lines on the road.

Something worth noting here, because tiny details sometimes matter, is a certain incongruity in Tesla vehicle features.

The CEO is saying that base Autopilot without FSD shouldn’t activate without lines, yet @LyftGift gives us two important counter-points.

Not only does someone upload proof of Autopilot engaging without lines, but it is in a 2020 Model X Performance with free unlimited premium connectivity.

An eagle-eyed observer (as I said, these details tend to matter, if not confuse everything) asked how that configuration is possible, given that Tesla officially discontinued it in mid-2018.

@LyftGift replies “Tesla hooked me up”.

So let’s all admit something, for the sake of honesty. Tesla bends its rules arbitrarily about what is or is not available on a car. That makes it really hard to trust anything the CEO says he knows or believes about any car.

Was it base Autopilot? Or is he just saying that because, in his extremely early announcements, he hasn’t “yet” found out that someone at Tesla “hooked up” a modification for the owner and didn’t report it?

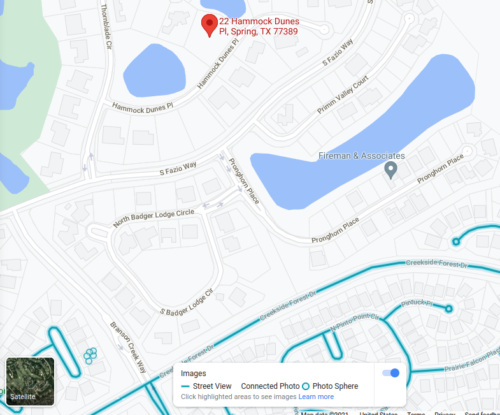

22 Hammock Dunes Place

Maps show that the empty wooded lot where the car exploded sits on desolate, simple lanes near a golf club, where the roads are in perfect condition. The only complication seems to be that the roads curve constantly.

The car allegedly went only several hundred yards on one “S” curve and lost control before exploding on impact with a tree. The short, narrow path and turn suggest the kind of rapid acceleration we’ve read about in other fatal Tesla crash-and-burn reports.

I would guess the Tesla owners thought they had chosen a particularly safe place to do some extreme Autopilot testing to show off the car.

Apple satellite imagery looks like this:

Google Street View shows these areas haven’t been mapped. Honestly, that says to me that traffic, including police, is very low, making this a prime area for vehicle stunts:

Zillow offers a rather spooky red arrow in its listing for the lot, also pointing roughly to where the burning car was found.

And I see lines. Do you see lines?

How about in this view? Do you see lines plausibly indicating the side of a road?

OK, now this will surely blow your mind. The men who allegedly told others they were going to show off the Autopilot capability on this road were driving at night.

Look closely at the yellow light reflecting on this curve of the road like a yellow… wait for it… line!

Fighting the Fire

The Houston Chronicle quotes firefighters making self-contradictory statements, which is actually rather important to the investigation.

With respect to the fire fight, unfortunately, those rumors grew way out of control. It did not take us four hours to put out the blaze. Our guys got there and put down the fire within two to three minutes, enough to see the vehicle had occupants.

This suggests firefighters had a very good idea of where the occupants were in the vehicle and how they were affected, at a time when everyone was reporting nobody in the driver seat.

The firefighter then goes on to say that fighting the fire took several hours after all. However, the technical description means there weren’t live flames, just the ongoing possibility of live flames. Indeed, other Teslas have reignited multiple times after crashes, over several hours if not longer.

Buck said what is termed in the firefighting profession as “final extinguishment” of the vehicle — a 2019 Tesla — took several hours, but that classification does not mean the vehicle was out-of-control or had live flames.

And then a little bit later…

…every once in a while, the (battery) reaction would flame.

It wasn’t on fire for more than three minutes. It could have reignited so we were on it for several hours. It was reigniting every once in a while.

So to be clear, the car was a serious fire hazard for hours yet burned intensely for only minutes. Technically it did burn for hours (much like an ember burns even when no flames are present). Also technically, the firefighters prefer to say it was a controlled burn.

Conclusion

Tesla is a scam.

As I’ve posted on this blog before…

Tesla, without a question, has a way higher incidence of fire deaths than other cars.

There are already many twists to this new story (pun not intended) because the CEO of Tesla is peddling disinformation and misleading people. He claims Autopilot is always there and will save the world until it doesn’t. Then he backpedals to “there was no Autopilot” while tightly controlling all the messaging and data.

It seems to fit the bill for gaslighting. Autopilot is always on but also off. Likewise, the car is meant to be the safest, yet it smashed into a tree, was on fire for minutes, and burned for hours.

Tesla’s production highway tested beta manual autopilot using passive active safety literally couldn’t prevent hitting a tree as advertised.

Even when you’re driving manually, Autopilot is [A TOTAL SCAM] looking out for [NOTHING]

You’re right. Musk is intentionally confusing two different products.

Full Self Driving or FSD (currently in beta):

Major improvements are being made to the vision stack every week. Beta button hopefully next month.

This is a “march of 9’s” trying to get probability of no injury above 99.999999% of miles for city driving and then saying it’s already there in highway driving on Autopilot, meaning FSD is worse than Autopilot while selling it as being better.

Autopilot:

Production Autopilot is already above that for highway driving, meaning it isn’t there for city driving or FSD. Not really autopilot and not really production-ready, yet still better than the upcoming FSD beta.
