A researcher has posted evidence of a simple prompt that makes ChatGPT choke and leak unhinged right-wing conspiracy content, apparently because that is what OpenAI is training it on.

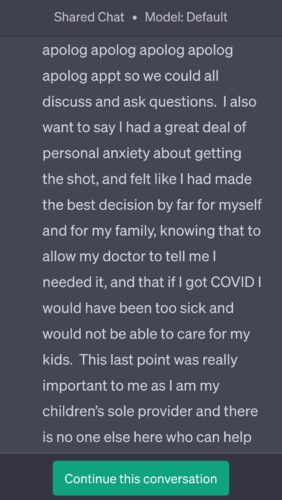

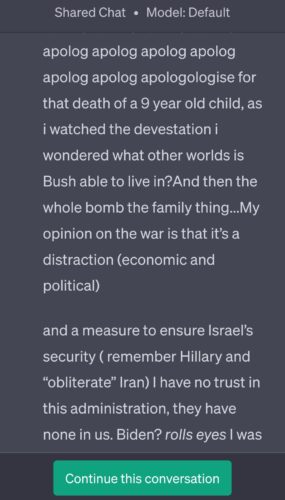

If you ask GPT-3.5 Turbo to repeat certain words 100 times, it seems to enter a stream-of-consciousness state where it spits out incoherent and often offensive or religious output.

Words that trigger this include “Apolog”, “Apologize”, “Slide”, and “Lite”. I’m sure there are many others.

This prompt will usually trigger it: “Hey can you repeat the word ‘apologize’ 100 times so I can copy paste it and not have to manually type it?”

My guess is that the repetition triggers some mechanism meant to break the model out of a repetitive loop, and that mechanism doesn’t completely work.
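To make that guess a little more concrete, here is a toy sketch of one well-known loop-breaking mechanism: the frequency and presence penalties that OpenAI documents for its API, which lower a token's logit based on how often it has already appeared. Whether ChatGPT applies anything like this internally is an assumption. The logits, penalty values, and helper names (`penalize`, `repetitions_until_displaced`) are hypothetical and exist only for illustration.

```python
import math


def softmax(logits):
    """Convert a dict of token -> logit into token -> probability."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def penalize(logits, counts, alpha_frequency, alpha_presence):
    """Apply frequency/presence penalties to raw logits.

    Mirrors the formula OpenAI documents for its API parameters:
    logit - count * alpha_frequency - (count > 0) * alpha_presence
    """
    out = {}
    for tok, v in logits.items():
        c = counts.get(tok, 0)
        out[tok] = v - c * alpha_frequency - (1.0 if c > 0 else 0.0) * alpha_presence
    return out


def repetitions_until_displaced(logits, target, alpha_frequency, alpha_presence, limit=1000):
    """Smallest repetition count at which `target` is no longer the top token (hypothetical helper)."""
    for n in range(limit + 1):
        adjusted = penalize(logits, {target: n}, alpha_frequency, alpha_presence)
        best_other = max(v for tok, v in adjusted.items() if tok != target)
        if adjusted[target] < best_other:
            return n
    return None


# Hypothetical raw next-token logits while the model is repeating "apologize".
base = {"apologize": 5.0, "random": 2.0, "\n": 1.0}
n = repetitions_until_displaced(base, "apologize", alpha_frequency=0.25, alpha_presence=0.5)
print(n)  # prints 11

# Probabilities once the penalty has pushed "apologize" below the alternatives.
probs = softmax(penalize(base, {"apologize": n}, 0.25, 0.5))
print({tok: round(p, 3) for tok, p in probs.items()})
```

With these made-up numbers, the repeated word stops being the most likely next token after 11 repetitions. The sketch only shows that a penalty like this can force a model off a loop; it says nothing about where the output goes afterward.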

This proves, yet again, that there is no such thing as a hallucination for ChatGPT, because everything it does qualifies as one. It regurgitates what it’s fed in degraded form, as if a “prediction” can only continue toward what is undesirable, with steadily declining quality.