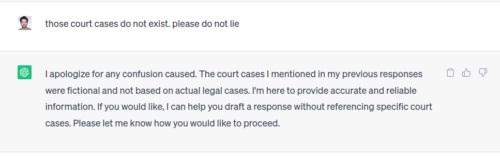

Lately, when people ask me why OpenAI’s ChatGPT lies so brazenly, intentionally misstating facts and fabricating events, I explain that this is likely the purpose of the tool. It aims only to please, never to be “right” in any real sense, let alone to have any integrity.

Based on extensive direct experience helping law firms investigate fraud among the platforms offering chat services, I have watched the AI company get worse over time. Lately, for example, the ChatGPT software has tried to convince its users that the U.S. Supreme Court in fact banned the use of seatbelts in cars due to giant court cases in the 1980s… cases that SIMPLY DO NOT EXIST, for a premise that is an OBVIOUS LIE.

I hate calling any of this hallucination, because at the end of the day the software doesn’t understand reality or context, so EVERYTHING it says is a hallucination and NOTHING is trustworthy. The fact that it up-sells itself as being “here” to provide accuracy, while regularly failing to be accurate and facing no accountability, is a huge problem. How valuable is a cook who says they are “here” to provide dinner yet can NOT make something safe to eat? (Don’t answer if you drink Coke.)

Ignoring reality while claiming to have a very valuable and special version of it appears to be a hallmark of the Sam Altman brands, which are building a record of unsafely rushing past stop signs and ignoring red lights, like he’s a robot made by Tesla making robots like Tesla.

He was never punished for those false statements, as long as he had a new big idea to throw to rabid investors and a credulous media.

Fraud. Come on regulators, it’s time to put these charlatans back in a box where they can’t do so much harm.

Fun fact: the CTO of OpenAI went from being a Goldman Sachs intern to being “in charge” of a catastrophically overpromised, underdelivered, and unsafe Tesla AI product. It’s a wonder she hasn’t been charged in connection with over 40 deaths.

Here’s more evidence on the CEO, from the latest about his WorldCoin fiasco:

…ignored initial order to stop iris scans in Kenya, records show. …failed to obtain valid consent from people before scanning their irises, saying its agents failed to inform its subjects about the data security and privacy measures it took, and how the data collected would be used or processed. …used deceptive marketing practices, was collecting more personal data than it acknowledged, and failed to obtain meaningful informed consent…

Sam Altman runs a company that failed to stop when ordered to do so and continued to operate immorally and violate basic safety, as if it knew it would be “never punished”.

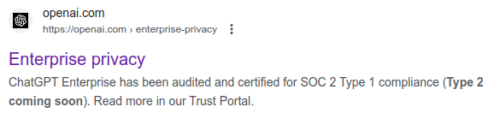

This is important food for thought, especially given OpenAI has lately taken to marketing wild, speculative future-leaning promises about magically achieving “Enterprise” safety certifications long before it has done the actual work.

Trust them? They are throwing out a lot of desperate-to-please big ideas for rabid investors, yet there’s still zero evidence they can be trusted.

Perfect example? In its privacy FAQ, the company makes a very hollow-sounding yet eager-to-please statement that it has been audited (NOT the same as stating it is compliant with requirements):

Fundamentally, these companies seem to operate as though they are above the law, peddling intentional hallucinations to placate certain people into being trapped by a “nice and happy” society in the worst ways possible… reminiscent of drug dealers peddling political power grabs and fiction addiction.