The court testimony from Benavides v. Tesla that I have reviewed is damning. It’s clear why Tesla has paid tens of millions to settle cases and keep the truth from reaching the public for the past decade.

Tesla’s cynical deployment of deeply flawed Autopilot technology to public roads represents a clear violation of safety principles established over more than a century.

Tesla didn’t just make mistakes. They systematically violated more than a century of established safety principles while lying about their technology’s capabilities. Rather than pioneering new approaches to safety, Tesla deliberately ignored basic methodologies that other industries developed specifically to prevent the kind of deaths and injuries that Tesla Autopilot has caused.

This analysis reveals that Tesla knowingly deployed experimental technology while making false safety claims, attacking critics, and concealing evidence – following the same playbook used by tobacco companies, asbestos manufacturers, and other industries that prioritized profits over human lives.

The company violated not just recent automotive safety standards, but fundamental principles of engineering ethics established in 1914, philosophical frameworks dating to Kant’s 1785 categorical imperative, and safety approaches proven successful in the aviation, nuclear power, and pharmaceutical industries.

PART A: Which historical safety principles did Tesla violate? Let us count the ways.

1) A century of established doctrine in the precautionary principle

The precautionary principle holds that caution is required when evidence suggests potential harm, even without complete scientific certainty. Tesla completely ignored this principle and its deep historical roots. First codified in environmental law as Germany’s “Vorsorgeprinzip” in the early 1970s, it was formally established internationally through the 1992 Rio Declaration:

Where there are threats of serious or irreversible damage, lack of full scientific certainty shall not be used as a reason for postponing cost-effective measures.

Tesla violated the principle by deploying Autopilot despite acknowledging significant limitations.

Court testimony revealed that Tesla had no safety data to support their life-saving claims before March 2018, yet continued aggressive marketing. Expert witness Dr. Mendel Singer testified that Tesla’s Vehicle Safety Report—their primary public safety justification—had “no math and no science behind” it.

NO MATH.

NO SCIENCE.

Tesla’s snake oil directly contradicts the precautionary principle’s requirement for conservative action when facing potential catastrophic consequences.

The philosophical foundation comes from Hans Jonas’s “The Imperative of Responsibility” (1984), which reformulated Kant’s categorical imperative for the technological age:

Act so that the effects of your actions are compatible with the permanence of genuine human life on Earth.

Tesla’s approach of using unqualified customers on public roads as a testing ground for experimental technology clearly and directly violates this principle.

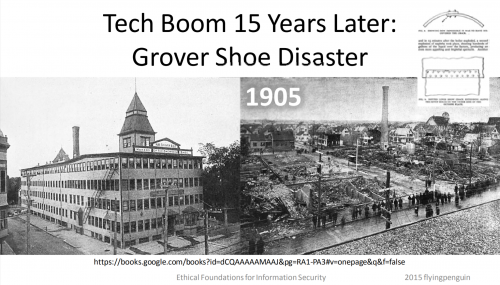

2) Engineering ethics codes: Professional obligations established 1912-1914

Tesla’s Autopilot deployment violates the fundamental principle established by every major engineering ethics code over a century ago:

Hold paramount the safety, health, and welfare of the public.

These codes emerged directly from catastrophic failures including bridge collapses (Tay Bridge 1879, Quebec Bridge 1907) and boiler explosions (Grover Shoe Factory 1905) that demonstrated the need for professional accountability beyond commercial interests.

The American Society of Civil Engineers (ASCE) code of 1914 specifically required engineers to “present consequences clearly when judgment is overruled where public safety may be endangered.”

Tesla violated this by continuing operations despite NTSB findings that Autopilot had fundamental design flaws. Court testimony revealed the extent of Tesla’s knowledge. An expert witness testified that in the fatal crash:

…the Autopilot system detected a pedestrian 140 feet away and classified it correctly, but ‘never warned the driver’ and ‘never braked.’ Instead, it simply ‘turned off Autopilot’ and ‘gave up control’ just 1.3 seconds before impact.

NEVER WARNED THE DRIVER AND SIMPLY TURNED OFF.

Tesla’s diabolical and deadly approach mirrors the Ford Pinto case (1970-1980), where executives knew from dozens of crash tests that rear-end collisions would rupture the fuel system, yet proceeded without safety measures because solutions cost $1-$11 per vehicle.

Tesla similarly knew of Autopilot limitations but chose deployment speed over comprehensive safety validation. Court testimony exposed the company’s knowledge: they knew drivers were “ignoring steering wheel warnings ‘6, 10, plus times an hour'” yet continued marketing the system as safe.

Additionally, the system could “detect imminent crashes for seconds but was programmed to simply ‘abort’ rather than brake.” With court findings showing “reasonable evidence” that Tesla knew Autopilot was defective, the parallel to Ford putting a cost-benefit calculation ahead of safety is exact.

ABORT RATHER THAN BRAKE WHEN IMMINENT CRASH DETECTED.

3) Duty of care: Legal framework established 1916

Tesla violated the legal principle of “duty of care” established in the landmark MacPherson v. Buick Motor Co. (1916) case, where Judge Benjamin Cardozo ruled that manufacturers owe safety obligations to end users regardless of direct contractual relationships. The standard requires that if a product’s “nature is such that it is reasonably certain to place life and limb in peril when negligently made, it is then a thing of danger.”

Autonomous driving systems clearly meet this “thing of danger” standard, yet Tesla failed to implement adequate safeguards despite knowing the technology was incomplete. Court testimony revealed Tesla’s deliberate concealment: expert witnesses described receiving critical crash data from Tesla that had been systematically degraded: “videos with resolution ‘reduced, making it hard to read,'” “text files converted to unsearchable PDF images,” and “critical log data with information ‘cut off’ and ‘missing important information.'” As one expert testified:

This is just one example of data I received from Tesla where effort had been placed in making it hard to read and hard to use.

The company’s legal team ironically argued in court that Musk’s safety claims were “mere puffing” that “no reasonable investor would rely on,” effectively admitting that the claims were known to be false while publicly maintaining them as true.

PART B: Philosophical and ethical frameworks Tesla systematically violated

Informed consent: Kantian foundations ignored

Tesla’s deployment fundamentally violated the principle of informed consent, rooted in Immanuel Kant’s Formula of Humanity (1785): never treat people “as a means only but always as an end in itself.” Informed consent requires voluntary, informed, and capacitated agreement to participate in experimental activities.

Tesla failed on all three dimensions. Users were not adequately informed that they were participating in beta testing of experimental software. The owner’s manual contained warnings, but Tesla’s marketing contradicted them. Court testimony revealed Musk’s grandiose 2016 claims captured on video:

The Model S and Model X at this point can drive autonomously with greater safety than a person… I really would consider autonomous driving to be basically a solved problem.

The contradictory messaging between legal warnings and public claims prevented genuine informed consent, because users received fundamentally conflicting information about the technology’s capabilities.

The company treated customers as means to an end – using them to collect driving data and test software – rather than respecting their autonomy as rational agents capable of making informed decisions about risk.

Utilitarian vs. deontological ethics: Violating both frameworks

Tesla’s approach fails under both major ethical frameworks. From a utilitarian perspective (maximizing overall welfare), Tesla’s false safety claims likely increased rather than decreased overall harm by encouraging risky behavior and preventing industry-wide safety improvements through data hoarding.

From a deontological perspective (duty-based ethics rooted in Kant’s categorical imperative), Tesla violated absolute duties including:

- Duty of truthfulness: Making false safety claims

- Duty of care: Deploying inadequately tested technology

- Duty of transparency: Concealing crash data from researchers and public

And for those who actually care about EVs ever reaching scale, Tesla’s behavior fails the universalizability test – if all companies deployed deeply flawed experimental safety systems with false claims and no transparency, the consequences would be catastrophic. We don’t have to speculate, given Tesla’s high death toll relative to all other car companies combined.

Epistemic responsibility: Systematic misrepresentation of knowledge

Lorraine Code’s concept of epistemic responsibility requires organizations to accurately represent what is known versus uncertain. Tesla systematically violated this by:

- Claiming certainty where none existed: Tesla generated pure propaganda. As already noted, expert Dr. Singer testified that “there is no math, and there is no science behind Tesla’s Vehicle Safety Report.” Despite this, Tesla used the fake report to claim their vehicles were definitively safer.

- Concealing uncertainty: Tesla knew about significant limitations but emphasized confidence in marketing. They knew the system would “abort” rather than brake when detecting crashes and that drivers ignored warnings repeatedly, yet continued aggressive marketing claims.

- Blocking knowledge advancement: Unlike other industries that share safety data, Tesla actively fights data disclosure.

- Systematic data degradation: “When Tesla took a video and put this text on top of it, it didn’t look like this. Before I received it, the resolution of this video was reduced, making it hard to read.” The expert noted: “In my line of work, we always want to maintain the best quality evidence we can. Someone didn’t do that here.”

PART C: Let’s talk about parallels in a history of American corporate misconduct

Tesla’s Autopilot strategy follows the exact playbook used by industries that caused massive preventable harm through decades of deception.

Grandiose safety claims without supporting data

- Tobacco industry pattern (1950s-1990s): Companies made broad safety claims while internally acknowledging dangers. The president of Philip Morris claimed in 1971 that pregnant women who smoked produced “smaller but just as healthy” babies, while companies internally knew about severe risks.

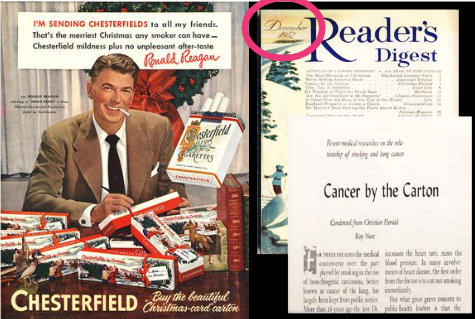

Ronald Reagan was the face of cynical campaigns to spread cancerous products, leading to immense suffering and early death for at least 16 million Americans.

- Asbestos industry (1920s-1980s): Johns Manville knew by 1933 that asbestos caused lung disease, but Dr. Anthony Lanza advised against telling sick workers, in order to avoid legal liability. The company found 87% of workers with 15+ years exposure showed disease signs but continued operations.

- Pharmaceutical parallel: Merck’s Vioxx was marketed as safer than alternatives while internal studies from 2000 showed a 400% increased heart attack risk, leading to an estimated 38,000 deaths.

- Tesla parallels: Court testimony revealed Musk’s grandiose claims captured on video from 2016: “The Model S and Model X at this point can drive autonomously with greater safety than a person” and “I really would consider autonomous driving to be basically a solved problem.” He predicted full autonomy within two years. Yet Tesla privately had no safety data to support these claims, and expert testimony says their primary safety justification had “no math and no science behind” it.

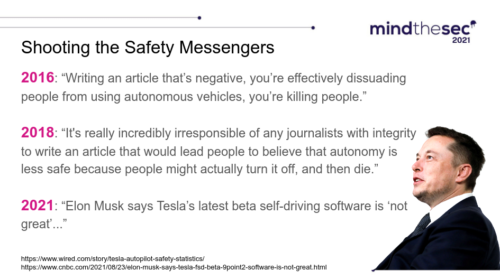

Attacking critics rather than addressing safety concerns

Tesla follows the historical pattern of discrediting whistleblowers rather than investigating concerns. The NTSB removed Tesla as a party to crash investigations due to inappropriate public statements, with Musk dismissing the NTSB as merely “an advisory body.”

This mirrors asbestos industry tactics where companies convinced medical journals to delay publication of negative health effects and used legal intimidation against researchers raising concerns.

Evidence concealment and destruction

Tesla’s approach to data transparency parallels Arthur Andersen’s systematic document destruction in the Enron case, where “tons of paper documents” were shredded after investigations began. Tesla abuses NHTSA’s confidentiality policies to redact most crash-related data and is currently fighting The Washington Post’s lawsuit to disclose crash information. Court testimony revealed systematic evidence degradation: one expert described receiving “4,000 page documents that aren’t searchable” after Tesla converted them from text files to unsearchable PDF images. Critical data was systematically damaged:

The data I received from Tesla is missing important information. The data I received has been modified so that I cannot use it in reconstructing this accident.

The expert noted the pattern:

This is just one example of data I received from Tesla where effort had been placed in making it hard to read and hard to use.

Tesla received crash data “while dust was still in the air” then denied having it for years.

Johns Manville similarly blocked publication of studies for four years and “likely altered data” before release, knowing that destroyed evidence could not be recovered.

PART D: Tesla management undermined safety standards by ignoring all of them. Let’s count the ways again.

1) Aviation industry: Straightforward transit safety frameworks totally abandoned

Aviation developed rigorous safety protocols specifically to prevent the kind of accidents Tesla’s approach enables. FAA regulations require catastrophic failure conditions to be “Extremely Improbable” (less than 1 × 10⁻⁹ per flight hour) with no single failure resulting in catastrophic consequences.

Tesla violated these principles by:

- Releasing experimental technology without comprehensive certification: Court testimony revealed that Tesla deployed systems that would “abort” rather than brake when detecting imminent crashes

- Implementing single points of failure: The system “never warned the driver” and “never braked” when it detected a pedestrian. Instead, it simply “turned off Autopilot” and “gave up control”

- Using customers as test subjects: Expert testimony showed Tesla knew drivers were “ignoring steering wheel warnings ‘6, 10, plus times an hour'” yet continued deployment rather than completing controlled testing phases

Aviation’s conservative approach requires demonstration of safety before deployment. Tesla did the opposite, deploying first and hoping to achieve safety through iteration.

2) Nuclear industry: Defense in depth ignored

Nuclear safety uses “defense in depth” with five independent layers of protection, each capable of preventing accidents. Tesla’s approach lacked multiple independent safety layers, relying primarily on software with limited hardware redundancy.
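To make the value of independent layers concrete, here is a minimal arithmetic sketch in Python. It assumes the layers fail independently of one another, so the chance that all of them fail together is the product of the individual failure probabilities. The function name and the 1-in-100 failure rate are hypothetical illustrations, not figures from the nuclear industry, from Tesla, or from court testimony.

```python
from math import prod


def combined_failure_probability(layer_failure_probs):
    """Probability that every protective layer fails at the same time.

    Assumes the layers are statistically independent, which is the
    idealized premise of defense in depth. Correlated failures would
    make the real number worse than this product.
    """
    for p in layer_failure_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"not a probability: {p}")
    return prod(layer_failure_probs)


# Hypothetical numbers chosen only for illustration.
single_layer = combined_failure_probability([0.01])
five_layers = combined_failure_probability([0.01] * 5)

print(f"one layer fails:        {single_layer:.0e}")
print(f"all five layers fail:   {five_layers:.0e}")
```

Under these assumed numbers, a design that relies on one layer is many orders of magnitude more exposed than one with five independent layers, which is the point of the contrast drawn above.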

The nuclear industry’s conservative decision-making culture contrasts sharply with Tesla’s “move fast and break things” Silicon Valley approach. Nuclear requires demonstration of safety before operation; Tesla used public roads as testing grounds.

3) Pharmaceutical industry: Clinical trial standards bypassed

Tesla essentially skipped the equivalent of Phase I-III clinical trials, deploying beta software directly to consumers without proper safety validation. The pharmaceutical industry requires:

- Phase I: Safety testing in small groups

- Phase II: Efficacy testing in hundreds of subjects

- Phase III: Large-scale testing in thousands of subjects

- Independent Review: Institutional Review Board oversight

Tesla avoided independent safety review and failed to implement adequate post-market surveillance for adverse events. Court testimony revealed they knew about systematic problems—drivers “ignoring steering wheel warnings ‘6, 10, plus times an hour'” and systems that would “abort” rather than brake when detecting crashes—yet continued deployment without addressing these fundamental safety issues.

4) Transit fail-safe vs. fail-deadly: Engineering principles ignored

Traditional automotive systems were fail-safe – when components failed, human drivers provided backup. Tesla implemented fail-deadly design where software failures could result in crashes without adequate backup systems. Court testimony revealed the deadly consequences: when the system detected a pedestrian “140 feet away” and “classified it correctly,” it “never warned the driver” and “never braked.” Instead, it “turned off Autopilot” and “gave up control just 1.3 seconds before impact.”

Safety-critical systems require fail-operational design through diverse redundancy. Tesla’s approach lacked the multiple independent backup systems required for safety-critical autonomous operation, as demonstrated by this fatal failure mode where detection did not lead to any protective action.
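The difference between the two design philosophies can be sketched as a toy decision policy in Python. This is a hypothetical illustration only: it is not Tesla’s code, and the names `Perception`, `Action`, `fail_deadly_response`, `fail_safe_response`, and the 3-second braking threshold are all assumptions made up for the example. The first function mirrors the behavior described in testimony (detection followed by disengagement), while the second shows what a protective response to the same detection could look like.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Action(Enum):
    CONTINUE = auto()
    WARN_DRIVER = auto()
    BRAKE = auto()
    DISENGAGE = auto()


@dataclass(frozen=True)
class Perception:
    obstacle_detected: bool
    seconds_to_impact: float


def fail_deadly_response(p: Perception) -> list[Action]:
    """Toy model of the failure mode described in testimony:
    a detected hazard leads to hand-off with no warning and no braking."""
    if p.obstacle_detected:
        return [Action.DISENGAGE]
    return [Action.CONTINUE]


def fail_safe_response(p: Perception, brake_threshold_s: float = 3.0) -> list[Action]:
    """Toy fail-safe alternative: a detected hazard always warns the
    driver, and brakes once impact is closer than the (hypothetical)
    threshold. It never silently hands control back."""
    if not p.obstacle_detected:
        return [Action.CONTINUE]
    actions = [Action.WARN_DRIVER]
    if p.seconds_to_impact <= brake_threshold_s:
        actions.append(Action.BRAKE)
    return actions


# Same input for both policies: hazard detected, 1.3 seconds from impact.
scenario = Perception(obstacle_detected=True, seconds_to_impact=1.3)
print(fail_deadly_response(scenario))
print(fail_safe_response(scenario))
```

The design choice being illustrated is that in a fail-safe policy, detection must map to a protective action, and disengagement is never an acceptable response to an imminent hazard.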

Technology deployment philosophy violations

Tesla’s approach embodies what Evgeny Morozov calls “technological solutionism” – the mistaken belief that complex problems can be solved through engineering without considering social, ethical, and safety dimensions. This represents exactly the kind of technological hubris that philosophers from ancient Greece to Hans Jonas have warned against.

The deployment violates Jonas’s imperative of responsibility by prioritizing innovation speed over careful consideration of consequences for future generations. Tesla used public roads as testing grounds without adequate consideration of the precautionary principle that uncertain but potentially catastrophic risks require conservative approaches.

The historical pattern: Corporate accountability delayed but inevitable

Every industry examined – tobacco, asbestos, pharmaceuticals – eventually faced massive legal liability and regulatory intervention. Tobacco companies paid $206 billion in the Master Settlement Agreement. Johns Manville filed for bankruptcy and established a $2.5 billion trust fund for victims. Merck faced thousands of lawsuits over Vioxx deaths.

The outcome is clear: companies that prioritize profits over safety while making false claims and attacking critics eventually face accountability – but only after causing preventable deaths and injuries that transparent, conservative safety approaches could have prevented.

Conclusion: Tesla has been callously ignoring over 100 years of basic lessons

Tesla’s Autopilot deployment represents a systematic violation of safety principles established over more than a century of engineering practice, philosophical development, and regulatory evolution. The company ignored:

- Engineering ethics codes established 1912-1914 requiring public safety primacy

- Legal duty of care framework established 1916 requiring manufacturer responsibility

- Philosophical principles of informed consent rooted in Kantian ethics

- Precautionary principle established internationally 1992 requiring caution despite uncertainty

- Proven safety methodologies from aviation, nuclear, and pharmaceutical industries

Rather than learning from historical corporate disasters, Tesla followed the same playbook that led to massive preventable harm in tobacco, asbestos, and pharmaceutical industries. Court testimony documented the false safety claims (Vehicle Safety Report with “no math and no science”), evidence concealment (systematic data degradation where “effort had been placed in making it hard to read and hard to use”), and moral positioning (claiming critics “kill people”) that mirror patterns consistently resulting in corporate accountability.

Tesla had access to over a century of established safety principles and historical lessons about the consequences of violating them. The company’s choice to ignore this framework represents not innovation, but systematic rejection of hard-won knowledge. Court testimony reveals Tesla knew their system would “detect imminent crashes for seconds but was programmed to simply ‘abort’ rather than brake” and that drivers “ignored steering wheel warnings ‘6, 10, plus times an hour,'” yet they continued aggressive deployment and marketing claims about superior safety.

The historical record suggests that the Tesla management approach, like its awful predecessors, will ultimately result in regulatory intervention and legal accountability. This cannot come soon enough to protect the market from fraud, given how Tesla is causing preventable harm that established safety principles were specifically designed to prevent.

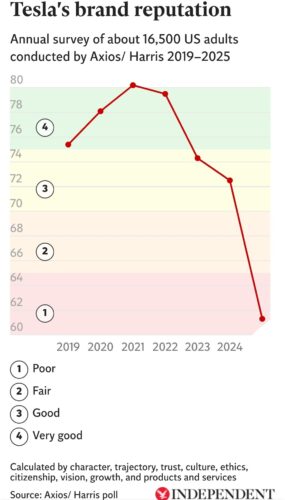

Nearly half of the participants in the latest Electric Vehicle Intelligence Report said they did not trust Tesla, while more than a third said they had a negative perception of it. The company also had the lowest perceived safety rating of any major EV manufacturer, following several high-profile accidents.