Or how to spot the car company playing “trust me bro” with deadly data

If you’re investigating a Tesla crash case, or one of the people trying to figure out why a Cybertruck killed three college students in Piedmont, you’ve probably got questions about Tesla’s infamous data production habits. They generate impressive filings—spreadsheets, timestamps, lots of numbers—yet somehow still obfuscate and omit what really happened. As Fred Lambert reported today:

“The Court finds Tesla’s claim [to not have data] is not credible and appears to have been a willful and/or intentional misrepresentation.” […] There’s now a clear pattern of Tesla using questionable tactics to withhold critical information in court cases. […] People are starting to catch up to Tesla’s dirty tricks, and they know exactly the data that the automaker collects. It’s only fair that both sides have access to that data in those legal battles.

That’s the game: an age-old problem, typically regulated within industries that aim to stop book “cookers” and cheats. Tesla is running the digital equivalent of Enron in court: producing curated summaries while retaining the complete logs.

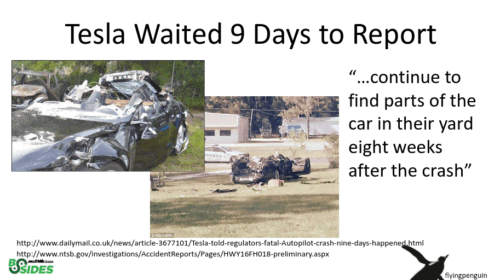

In 2016 I called attention to this exact problem in my “ground truth” keynote talk at BSidesLV, where I highlighted the suspicious weeks of delay, missing data and lack of investigation after Tesla killed Joshua Brown.

I had been actively participating in and hosting Silicon Valley meetups for years, where some of the world’s best and brightest car hackers would gather to reverse-engineer automotive systems. Tesla systems were flagged right away as notably opaque, and… defective. In one case I rode with three other hackers in a Tesla pulled from a junkyard and rebuilt to expose its risks, such as encryption cracked so the car could run on rogue servers.

This new guide will help shine a light into what should be seen, what Tesla probably has been hiding, and how to call out their bullshit in technical terms that will survive a Daubert challenge.

The Musk of a Con

Modern vehicles are data centers on wheels. Everything that happens (every steering input, every brake application, every sensor reading, every system error) travels over data buses called CAN (Controller Area Network) and can be logged. CAN bus data is a bit like a plane’s black box, except:

- It records thousands of signals per second

- The manufacturer controls whether it can be decoded

- There’s no FAA equivalent forcing transparency to save lives

When a Tesla crashes, the vehicle presumably has recorded everything. Think about the scope of a proper datacenter investigation, such as the CardSystems or ChoicePoint cases, let alone Enron. The question with Tesla, unfortunately, is what they will allow the public to see, and what they will hide to avoid accountability.

Decoder Ring Gap

To decode raw CAN bus data into human-readable signals, you need a way to interpret it, meaning a DBC file (CAN database file — e.g. https://github.com/joshwardell/model3dbc). Josh Wardell explains why Tesla is worse than usual.

“It was all manual work. I logged CAN data, dumped it into Excel, and spent hours processing it. A 10-second log of pressing the accelerator would take days to analyze. I eventually built up a DBC file, first with five signals, then 10, then 100. Tesla forums helped, but Tesla itself provided no documentation.”

“One major challenge is that Tesla updates its software constantly, changing signals with every update. Most automakers don’t do this. For two years, I had to maintain the Model 3 CAN signal database manually, testing updates to see what broke. Later, the Tesla hacking community reverse-engineered firmware to extract CAN definitions, taking us from a few hundred signals to over 3,000.”

Think of it this way:

- Raw CAN message: ID: 0x123, Data: [45 A2 F1 08 00 00 C4 1B]

- With DBC file: “Steering Wheel Angle: 45.2 degrees, Steering Velocity: 15 deg/sec”

Without Tesla’s DBC file, it’s still raw codes. The codes show systems are talking, but what they’re saying isn’t decoded yet.
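To make the decoder ring idea concrete, here is a minimal sketch of what a DBC entry does to a raw frame. Everything in it is an assumption for illustration: the `Signal` layout, the `HYPOTHETICAL_DBC` table, and the bit positions and scale factors are invented, not Tesla’s real definitions. The frame is synthetic, built so that it decodes to the illustrative steering values above, and the sketch only handles little-endian signals, whereas real DBC files also describe big-endian ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """One signal definition, roughly what a DBC file stores per signal."""
    name: str
    start_bit: int   # position of the least significant bit (little-endian)
    length: int      # width in bits
    scale: float     # physical = raw * scale + offset
    offset: float
    signed: bool
    unit: str


def decode_signal(data: bytes, sig: Signal) -> float:
    """Extract one little-endian signal from a CAN payload and scale it."""
    raw = (int.from_bytes(data, "little") >> sig.start_bit) & ((1 << sig.length) - 1)
    if sig.signed and raw >= 1 << (sig.length - 1):
        raw -= 1 << sig.length  # two's complement sign extension
    return raw * sig.scale + sig.offset


# Hypothetical definitions for message ID 0x123 (NOT Tesla's actual layout).
HYPOTHETICAL_DBC = {
    0x123: [
        Signal("SteeringWheelAngle", 0, 16, 0.1, 0.0, True, "deg"),
        Signal("SteeringVelocity", 16, 16, 1.0, 0.0, True, "deg/s"),
    ]
}


def decode_frame(can_id: int, data: bytes, dbc: dict) -> dict:
    """Decode every known signal in a frame; unknown IDs stay opaque."""
    return {s.name: decode_signal(data, s) for s in dbc.get(can_id, [])}


# Synthetic 8-byte frame: raw angle 452 (45.2 deg), raw velocity 15 (15 deg/s).
frame = (452).to_bytes(2, "little") + (15).to_bytes(2, "little") + bytes(4)
decoded = decode_frame(0x123, frame, HYPOTHETICAL_DBC)
unknown = decode_frame(0x456, frame, HYPOTHETICAL_DBC)
print(decoded, unknown)
```

The same eight bytes mean nothing for an ID that is absent from the table, which is the whole point: whoever holds the table decides what can be read.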

If you buy an old dashboard off eBay, hook up some alligator clips to the wires and fire it up, you’ll see a stream of such raw messages. If you capture a ton of those messages and then replay them to the dashboard, you may be able to reverse engineer all the codes, but it’s a real puzzle.

Tesla has the complete DBC file. They should be compelled to release it for investigations along with full data.

Known Unknowns

Tesla’s typical objection to full data production is actually disinformation:

“Your Honor, the vehicle records thousands of signals across multiple data buses. Producing all of this data (poor us) would be unduly burdensome (poor us) and would include proprietary information (poor us) not relevant to this incident. We have provided plaintiff with what we deemed relevant data points from the time period immediately preceding the crash.”

Sounds reasonable, right?

It’s not. Here’s why.

This bogus objection has worked in multiple cases, because courts don’t yet understand Tesla’s technical gap.

“Subset” = Shell Game

For a 10-minute driving window, you’d get this from the CAN:

- Size: 5-10 GB of raw data (uncompressed)

- Messages: Millions of individual CAN messages

- Signals: Thousands of decoded data points per second

Does this look “unduly burdensome” to anyone?

No. Tesla processes petabytes of fleet data daily for Autopilot training. Producing 10GB for one crash is trivial.
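The scale gap is easy to put in numbers. The sketch below is back-of-the-envelope arithmetic only: it takes the 10 GB upper figure from above, assumes “petabytes daily” means at least 1 PB per day, and assumes a modest 100 MB/s copy rate. Both of those last two values are my assumptions, and the units are decimal.

```python
GB = 10**9
PB = 10**15

crash_log_bytes = 10 * GB              # upper figure quoted in this post
fleet_bytes_per_day = 1 * PB           # assumption: "petabytes daily" as 1 PB/day
copy_rate_bytes_per_s = 100 * 10**6    # assumption: 100 MB/s sustained copy

# Share of one day's fleet processing that a single crash log represents.
share_of_daily_fleet = crash_log_bytes / fleet_bytes_per_day

# Time to copy the whole crash log at the assumed rate.
copy_seconds = crash_log_bytes / copy_rate_bytes_per_s

print(f"{share_of_daily_fleet:.5%} of one day's fleet data")
print(f"{copy_seconds:.0f} s to copy at the assumed rate")
```

Under those assumptions one crash log is a thousandth of a percent of a single day’s fleet intake and copies in under two minutes.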

Modern tools routinely handle 100GB+ CAN logs.

While initial processing takes hours, comprehensive analysis may take days—but this is standard accident reconstruction work that experts perform routinely. The data volume is NOT a legitimate barrier.

Tesla sounds absurdly lazy and cheap, besides being obstructive and opaque.

The real reason Tesla doesn’t want to produce normal data: Complete data exposes their engineering defects and system failures. It allows others to judge them for what they really are.

What Tesla Allows

Their “EDR summary” will probably be stripped down to something like this:

| Time (s) | Speed | Throttle | Brake | Steering |
|---|---|---|---|---|
| -5.0 | 78 | 79% | 0% | 0° |
| -4.5 | 79 | 79% | 0% | 0° |
| -4.0 | 80 | 79% | 0% | 5° |
| -3.5 | 81 | 61% | 0% | 15° |
| -3.0 | 82 | 61% | 5% | 25° |

This tells you what happened but NOT why it happened.

It’s like reading altitude and airspeed for a plane crash, where Boeing refuses to disclose all this:

- Engine performance data

- Control surface positions

- Pilot inputs

- System warnings

- Cockpit voice recorder

Legally sufficient? It shouldn’t be. Imagine turning in a history paper that is just a list of positions and dates. George Washington was at LAT/LON at this time and then LAT/LON at this time. The end.

Technically adequate for even a basic investigation, let alone a root cause analysis? Absolutely not.

Tesla the No True Scotsman

Tesla argues their vehicles are “computers with wheels” to generate buzz around their Autopilot and FSD.

Then when crashes happen, suddenly they’re just cars and computer data is proprietary and private.

You can’t have it both ways.

If it’s a computer-controlled vehicle, then computer data is crash data. And if “huge amounts of data make Tesla successful,” that same data has to be directly relevant to showing why a Tesla failed.

Piedmont Cybertruck Case

The crash reporting already has many technical red flags:

- “Autopilot State Not Available” from 03:02:02 until crash

- Rear camera stopped recording at 03:06:02 during a turn

- 52-second gap (03:06:02-03:06:54) with no camera data before impact

- Drive inverter recall for MOSFET defects (sudden unintended acceleration risk)

- Steer-by-wire system (no mechanical steering backup)

To understand what actually happened, you need:

- Steer-by-Wire System Data (ISO 26262 safety-critical)

- Steering wheel angle vs. actual front wheel angle—did wheels respond to driver input?

- Torque sensor—driver force vs. feedback motor resistance

- Road wheel actuator current/voltage—shows electrical faults

- Redundancy status—were both channels functional?

- CAN bus metrics—packet loss, latency, network congestion

- Command-to-response timing—lag between input and wheel movement

Why it matters: No mechanical connection. If communication fails during a turn, driver input goes nowhere. Single-point-of-failure design.
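The command-to-response question can be sketched in a few lines once both signals are available. This is a minimal illustration, assuming both series are sampled at the same fixed rate and already normalized to the same units (the steering ratio is divided out). The function name, the sample values and the 100 Hz rate are hypothetical, not from any Tesla log.

```python
def estimate_lag_samples(command, response, max_lag):
    """Return the delay (in samples) that best aligns response with command.

    Tries each candidate lag and keeps the one with the lowest mean
    squared error between command[t] and response[t + lag].
    """
    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag + 1):
        pairs = list(zip(command[: len(command) - lag], response[lag:]))
        if not pairs:
            break
        err = sum((c - r) ** 2 for c, r in pairs) / len(pairs)
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag


# Synthetic data: the driver turns the wheel, the road wheels follow 3 samples later.
SAMPLE_RATE_HZ = 100  # assumed logging rate
command = [0, 0, 1, 3, 6, 10, 15, 15, 15, 15, 15, 15]
response = [0, 0, 0] + command[:-3]

lag = estimate_lag_samples(command, response, max_lag=8)
lag_ms = lag * 1000 / SAMPLE_RATE_HZ
print(f"estimated lag: {lag} samples ({lag_ms:.0f} ms)")
```

A lag that grows over the minutes before a crash, or a response that stops tracking the command at all, is exactly the kind of finding a five-second summary cannot show.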

- Drive Inverter Data (NHTSA Recall 24V-401)

- Torque commanded vs. torque delivered—tracking errors reveal failures

- MOSFET junction temperature per phase—thermal stress on recalled components

- Phase current asymmetry—reveals partial failures before catastrophic loss

- Gate drive voltage—detects failures causing unintended torque

- Inverter fault codes—thermal warnings, desaturation faults logged but not displayed

- Regenerative braking tracking—did deceleration fail during the turn?

Why it matters: Recalled MOSFETs degrade progressively. Asymmetric phase currents create torque disturbances during combined steering/acceleration loads—exactly what happens in turns. Tesla logs every MOSFET temperature and phase current imbalance to the millisecond.
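Phase current asymmetry is simple to quantify if per-phase currents are produced. The sketch below uses one common style of imbalance metric (largest deviation from the three-phase mean, as a percentage of that mean). The metric choice, the 10% threshold and the sample values are my assumptions for illustration, not Tesla’s internal fault criteria.

```python
def phase_imbalance_pct(i_a, i_b, i_c):
    """Largest deviation from the mean phase current, as a percent of the mean.

    Inputs are per-phase RMS magnitudes (non-negative), all in the same unit.
    """
    currents = (i_a, i_b, i_c)
    mean = sum(currents) / 3
    if mean == 0:
        return 0.0
    return 100.0 * max(abs(i - mean) for i in currents) / mean


def flag_imbalance(samples, threshold_pct):
    """Return (time, imbalance) for every sample above the threshold.

    samples: iterable of (time_s, i_a, i_b, i_c).
    """
    flagged = []
    for t, a, b, c in samples:
        pct = phase_imbalance_pct(a, b, c)
        if pct > threshold_pct:
            flagged.append((t, pct))
    return flagged


# Hypothetical log excerpt: the phases drift apart over three samples.
samples = [
    (0.00, 200, 200, 200),
    (0.01, 210, 200, 190),
    (0.02, 240, 200, 160),
]
flagged = flag_imbalance(samples, threshold_pct=10.0)  # threshold is an assumption
print(flagged)
```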

- FSD Computer & Network Data

- System state transitions—why “Not Available” at 03:02:02?

- Dual-SoC health—watchdog resets, process crashes

- CAN/Ethernet metrics—packet loss between FSD Computer and vehicle controllers

- Processing load—CPU/GPU saturation, memory exhaustion

- Camera processing—frame rate, dropped frames per camera

- Error logs—all fault codes with timestamps

- Driver alerts—what displayed vs. what logged internally

Why it matters: 4 minutes 52 seconds “Not Available” is abnormal—suggests FSD Computer crash or network partition. Even “off,” FSD Computer feeds data to AEB, stability control, collision warning. If it’s not communicating, these safety systems fail silently.

- Camera/Vision & Vehicle Dynamics

- Recording status per camera—why did rear camera stop at 03:06:02?

- Storage system health—disk errors, power interruption

- Wheel speed (all 4), yaw rate, lateral/longitudinal acceleration

- Stability control mode and ABS status

- Object detection confidence—what was “seen” before recording stopped

Why it matters: Camera stopped during the turn. Turns are high-demand events—processing steering angle, yaw rate, lateral acceleration simultaneously. If system was marginal, turn could push it over the edge. Network saturation is a common-cause failure affecting multiple systems.

- Software Configuration & Thermal Management

- Firmware version for each ECU—exact build at time of crash

- OTA update history—did recent update introduce instability?

- MOSFET/inverter temperatures—thermal cascade in recalled components

- FSD Computer temperatures—thermal throttling or crashes

- Cooling system status—pump speeds, coolant flow

- 12V/HV power distribution—brownouts, voltage sags

Why it matters: Tesla pushes OTA updates constantly, changing vehicle behavior overnight. Updates can introduce communication protocol changes, processing overload, timing bugs. Thermal problems cascade: overheated FSD Computer crashes, hot MOSFETs accelerate inverter failure. The 03:02:02 to 03:06:02 progression is consistent with thermal cascade patterns.

Timeline of Defects as Reconstructed

03:02:02 - "Autopilot Not Available" begins

↓

What signals changed at this moment?

- Communication bus errors spike?

- CPU load increase?

- Sensor validity flags change?

- System attempting to switch modes?

03:04:26 - Rear camera records people on street

↓

This is LAST confirmed camera recording

Establish "system healthy" baseline

Are other cameras still working?

03:06:02 - Turn onto Hampton Road + rear camera stops

↓

Simultaneous events:

- Steering input increases (turning)

- Camera recording ceases

- Processing load spike?

- Storage system error?

- Communication errors?

03:06:02-03:06:54 - The missing 52 seconds

↓

- Speed profile through residential street

- Steering inputs vs. vehicle response

- Any system warnings to driver?

- Driver attempting corrections?

- Why no effective braking?

03:06:54 - Impact
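The intervals in this timeline are worth checking by machine rather than by eye. A small sketch, using only the four timestamps listed above (the dictionary keys are my own labels):

```python
from datetime import datetime

FMT = "%H:%M:%S"

# Timestamps as reported above; the key names are just labels for this sketch.
TIMELINE = {
    "autopilot_not_available": "03:02:02",
    "rear_camera_people": "03:04:26",
    "turn_and_camera_stop": "03:06:02",
    "impact": "03:06:54",
}


def seconds_between(start, end):
    """Whole seconds from start to end, both given as HH:MM:SS strings."""
    delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return int(delta.total_seconds())


def mmss(seconds):
    """Format a second count as M:SS."""
    return f"{seconds // 60}:{seconds % 60:02d}"


not_available_s = seconds_between(TIMELINE["autopilot_not_available"], TIMELINE["impact"])
camera_gap_s = seconds_between(TIMELINE["turn_and_camera_stop"], TIMELINE["impact"])
baseline_s = seconds_between(TIMELINE["rear_camera_people"], TIMELINE["turn_and_camera_stop"])

print("Not Available until impact:", mmss(not_available_s))
print("Camera gap before impact:  ", mmss(camera_gap_s))
print("People seen to camera stop:", mmss(baseline_s))
```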

What to look for: Correlation between system failures

If at 03:06:02 you see:

- Camera recording stops

- Steering command rate increases (the turn)

- Processing load spikes

- Communication error rate increases

Hypothesis: System overload during high-demand maneuver.

Engineering question: Is the computing architecture adequate for worst-case scenarios, or did Tesla ship a system that fails when you need it most?
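Testing that hypothesis means lining up events from different subsystems on one clock and looking for clusters. A minimal sketch, assuming each event has already been reduced to `(seconds, subsystem, description)`; the events, the subsystem names and the one-second window below are invented for illustration.

```python
def co_occurring(events, window_s, min_subsystems):
    """Find moments where several distinct subsystems report within one window.

    events: list of (time_s, subsystem, description).
    Returns a list of (cluster_start_time, sorted subsystem names).
    """
    events = sorted(events)
    clusters = []
    i = 0
    while i < len(events):
        start = events[i][0]
        # Events are sorted, so the group is a contiguous run starting at i.
        group = [e for e in events[i:] if e[0] - start <= window_s]
        subsystems = {e[1] for e in group}
        if len(subsystems) >= min_subsystems:
            clusters.append((start, sorted(subsystems)))
            i += len(group)
        else:
            i += 1
    return clusters


# Hypothetical events, in seconds since 03:02:02 (240 s corresponds to 03:06:02).
events = [
    (0.0, "fsd", "state not available"),
    (240.0, "camera", "recording stopped"),
    (240.1, "steering", "command rate high"),
    (240.3, "network", "error rate high"),
    (250.0, "camera", "still not recording"),
]
clusters = co_occurring(events, window_s=1.0, min_subsystems=3)
print(clusters)
```

Three independent subsystems misbehaving within the same second points at a shared cause, and only the complete logs can confirm or rule that out.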

Spotting Incomplete Data Production

- Missing Entire CAN Buses: Modern Teslas have 3-5 separate CAN buses plus Ethernet. If they only produce “Powertrain CAN” data, you’re missing:

- Chassis CAN (steering, brakes, stability)

- Body CAN (power distribution, fault isolation)

- Diagnostic CAN (system fault codes)

- Ethernet backbone (camera/Autopilot data)

Red flag: Production limited to single bus = incomplete system picture.

- Time Coverage Gaps

- They give you: 5 seconds before crash

- You need: Full timeline from first anomaly through impact (e.g., 03:02:02-03:06:54 in Piedmont)

Red flag: System failures develop over minutes, not seconds. Short windows hide progressive degradation.

- Missing System Categories

- You get: Speed, throttle, brake, steering wheel angle

- Missing: Inter-system communication, fault codes, actuator responses, sensor validity flags

Red flag: Driver inputs without system responses = can’t prove causation.

- Sampling Rate Inadequacy

- They give you: 1 Hz (one sample per second)

- Industry standard: 10-100 Hz for control systems, 1000 Hz for safety-critical signals

Red flag: Crashes happen in milliseconds. 1 Hz data misses critical events entirely.
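A toy simulation shows how a 1 Hz export can erase an event entirely. The signal here is synthetic: a 200 ms fault spike inside five seconds of otherwise flat data, logged at an assumed 100 Hz and then thinned to one sample per second.

```python
def simulate(duration_ms, period_ms, spike_start_ms, spike_end_ms,
             baseline=0.0, spike=1.0):
    """Generate samples at a fixed period with one rectangular spike."""
    return [
        spike if spike_start_ms <= t < spike_end_ms else baseline
        for t in range(0, duration_ms, period_ms)
    ]


def decimate(samples, factor):
    """Keep every factor-th sample, as a low-rate export would."""
    return samples[::factor]


# 5 s of data at 100 Hz (10 ms period) with a fault from 2.30 s to 2.50 s.
full = simulate(5000, 10, 2300, 2500)
one_hz = decimate(full, 100)  # samples at t = 0, 1, 2, 3, 4 s

print("100 Hz peak:", max(full), "from", len(full), "samples")
print("1 Hz peak:  ", max(one_hz), "from", len(one_hz), "samples")
```

The high-rate log contains twenty samples of the fault. The 1 Hz version contains none, and nothing in it suggests anything was ever wrong.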

- Missing DBC File

- They give you: Pre-decoded subset they selected

- You need: Complete DBC database file for independent decoding

Red flag: No DBC = no independent verification. That’s curation, not discovery.

- Incomplete Signal Definitions

- They show: “Stability Control: Active”

- You need: WHY activated, WHAT intervention attempted, HOW vehicle responded, WHICH wheels modulated

Red flag: Binary state flags without context = meaningless for root cause analysis.

If you see any of these patterns, demand complete data. Tesla’s objections are procedural theater, not technical necessity.
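Several of these checks can be automated the moment a production arrives. The sketch below is one way to do it, assuming the produced records can be reduced to `(timestamp_seconds, bus_name)` pairs. The bus labels mirror the list above, but the exact names, the 10 Hz floor and the record format are my assumptions.

```python
from statistics import median

# Bus labels taken from the checklist above; exact naming is an assumption.
EXPECTED_BUSES = {"powertrain", "chassis", "body", "diagnostic", "ethernet"}


def audit(records, window_start, window_end, min_rate_hz):
    """Return a list of red flags for a production of (time_s, bus) records."""
    if not records:
        return ["no records produced"]
    findings = []

    missing = EXPECTED_BUSES - {bus for _, bus in records}
    if missing:
        findings.append("missing buses: " + ", ".join(sorted(missing)))

    times = sorted(t for t, _ in records)
    if times[0] > window_start or times[-1] < window_end:
        findings.append(
            f"time coverage {times[0]:.1f}-{times[-1]:.1f} s does not span "
            f"requested {window_start:.1f}-{window_end:.1f} s"
        )

    by_bus = {}
    for t, bus in records:
        by_bus.setdefault(bus, []).append(t)
    for bus, ts in sorted(by_bus.items()):
        ts.sort()
        gaps = [b - a for a, b in zip(ts, ts[1:]) if b > a]
        if gaps and 1.0 / median(gaps) < min_rate_hz:
            findings.append(f"{bus}: median rate below {min_rate_hz} Hz")
    return findings


# Hypothetical production: powertrain only, 1 Hz, last 5 seconds before impact.
# Times are seconds since 03:02:02, so impact is at 292 s.
thin = [(287.0 + i, "powertrain") for i in range(6)]
thin_findings = audit(thin, window_start=0.0, window_end=292.0, min_rate_hz=10.0)
for line in thin_findings:
    print("RED FLAG:", line)
```

An empty findings list does not prove a production is complete, but a non-empty one is a concrete, itemized basis for a motion to compel.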

What to Do About It

Retain an automotive systems engineer:

- Experienced with CAN bus forensic analysis

- Uses tools like Intrepid Vehicle Spy or Vector CANalyzer

- Has testified in automotive defect cases

- Can articulate exactly what signals are missing and the ISO 26262 requirements

- Can compare Tesla’s production to industry standards

Not a mechanical engineer. Not a general accident expert. You need someone who lives in vehicle control systems and software.

What This Looks Like in Practice

The Piedmont Case Timeline

Based on what we know:

03:02:02 – System shows “Autopilot State Not Available”

- This is 4 minutes and 52 seconds before crash

- Something failed here

- Tesla’s subset probably starts at 03:06:49 (5 seconds before impact)

- You’re missing the 4:47 that shows how it fell apart

03:04:26 – Camera records people on street

- Last confirmed recording

- Shows system was still partially functional

- Establishes baseline 96 seconds (03:04:26 to 03:06:02) before camera stops

03:06:02 – Turn onto Hampton Road + camera stops

- Steering demand increases (making the turn)

- Recording ceases simultaneously

- This is not random

- 52 seconds of no data before impact

03:06:54 – Impact

What Complete Data Would Show

With full CAN data and DBC, you could determine:

At 03:02:02 when “Autopilot Not Available” began:

- What communication failed

- What error codes were logged

- What system attempted recovery

- Whether other systems were affected

- Processing load before and after

- Communication bus error rates

During the 4:47 gap (03:02:02-03:06:49):

- Progressive system degradation

- Sensor validity changes

- Communication health trends

- Whether driver received warnings

- System recovery attempts

At 03:06:02 when camera stopped:

- All simultaneous system events

- Processing load spike?

- Storage system failure?

- Power fluctuation?

- Communication breakdown?

During the fatal 52 seconds:

- Steering inputs vs. actual wheel angles

- Torque commands vs. inverter output

- Brake system response

- Stability control intervention (if any)

- Why no effective speed reduction

- System warnings to driver

Without this data, Tesla alone can see what happened, and it denies everyone else the same view.

Industry Comparison: How Real Investigations Work

- Aviation (NTSB Protocol)

After a plane crash, investigators get complete access within hours:

- Complete flight data recorder—hundreds of parameters at high sampling rates

- Complete cockpit voice recorder—every communication, every warning

- Complete maintenance logs—full service history

- Complete software versions—exact code running at time of incident

- Manufacturer engineering support—required by law, not optional

- Complete system documentation—no “proprietary” excuses

Nobody says: “The FDR records too much data. We’ll just give you altitude and airspeed for the last 5 seconds.”

That argument would be laughed out of the NTSB. Boeing can’t refuse data production by crying “trade secrets.” Airbus can’t claim “undue burden.” Why? Because 49 CFR Part 830 doesn’t negotiate. Lives are at stake.

- Automotive (NHTSA Protocol)

When NHTSA’s Office of Defects Investigation opens a probe, manufacturers provide:

- Complete CAN bus logs—all buses, full time windows

- Complete DBC files—under protective order if needed

- Engineering support—technical experts to explain systems

- Independent analysis access—outside experts can verify

- Fleet-wide data—pattern identification across all vehicles

This is standard practice across Ford, GM, Toyota, Honda, Volkswagen—every manufacturer except Tesla.

Tesla knows how this works. They comply when NHTSA demands it. They just don’t comply in civil litigation unless forced.

The difference? NHTSA has regulatory teeth. Victims’ families have to fight in court for what should be automatic.

Hold the Line on Tesla

Questions They Must Answer

- System Communication:

- What is the complete communication architecture?

- Which systems share buses/networks?

- What is normal message rate for each critical system?

- Were any communication errors logged?

- What are the failure modes when communication degrades?

- Temporal Correlation:

- Timeline of all system state changes

- Correlation between “Autopilot Not Available” and other anomalies

- Why camera stopped when steering demand increased

- Progressive vs. sudden failure pattern

- Control System Response:

- Commanded vs. actual comparison for all actuators

- Latency measurements

- Fault detection and response times

- Sensor validity and fusion

- Failure Mode Analysis:

- What failures could cause observed symptoms?

- What does complete failure tree look like?

- Which scenarios can be ruled out and why?

- Which scenarios require additional data to evaluate?

If Tesla doesn’t address these fundamentals, their reports are worthless.

Burden of Proof When Manufacturer Controls Evidence

When a manufacturer exclusively controls critical evidence and produces only a curated subset, courts may:

- Draw adverse inferences: Assume undisclosed data would harm defendant’s case

- Shift burden of proof: Require manufacturer to prove system functioned correctly

- Allow spoliation instructions: Tell jury the missing evidence would have supported plaintiff

Tesla’s selective production—providing pre-filtered summaries while retaining complete logs—meets the legal standard for adverse inference.

Federal regulation 49 CFR Part 563 already establishes that EDR data must be accessible for investigation. Tesla cannot claim trade secret protection for data required by federal safety regulations.

Courts have consistently held that safety-critical system behavior data is not proprietary when lives are at stake. Plaintiffs agree to appropriate protective orders for genuinely proprietary information, but crash causation data must be produced.

If Tesla claims complete data would exonerate them, they must produce it. They cannot hide exculpatory evidence while claiming it’s proprietary.

The specific data requests are driven by:

- Known inverter recall: NHTSA recall for MOSFET defects creating sudden unintended acceleration risk

- Documented system failure: “Autopilot State Not Available” for 4 minutes 52 seconds before crash—abnormally long duration

- Correlated camera failure: Recording stops during turn at 03:06:02, exactly when system demand peaks

- Steer-by-wire system: No mechanical backup if electronic steering fails—ISO 26262 requires complete failure mode data for safety-critical systems

- Industry standards: SAE J2728, J2980, and ISO 26262 require this data for root cause analysis in sudden unintended acceleration investigations

The Bottom Line

Tesla has all the data. They recorded it. They have the tools to decode it. They have the expertise to analyze it. They should have the obligation to save lives.

They’re choosing to run and hide.

Why? Because complete data would show:

- System failures they don’t want to admit

- Design defects they don’t want to fix

- Patterns across the fleet they don’t want revealed

Don’t accept the subset. Don’t let Tesla choose what the public can see in order to protect the public from Tesla.

Standards References:

- SAE J1939 (CAN for vehicles)

- SAE J2728 (Heavy Vehicle Event Data Recorder, HVEDR)

- SAE J2980 (Considerations for ISO 26262 ASIL hazard classification)

- SAE J3061 (Cybersecurity for Cyber-Physical Vehicle Systems)

- ISO 11898 (CAN specification)

- ISO 16750 (Environmental conditions and testing for electrical equipment)

- ISO 21434 (Vehicle Cybersecurity engineering)

- ISO 26262 (functional safety)

- FMVSS 126 (Electronic Stability Control)

The Piedmont victims’ families, as well as many others, deserve answers. The complete data exists, and the experts are ready to review it.

Let’s see if Tesla CAN produce the right stuff for once.

Wow! It’s clear as daylight that Tesla is withholding, or worse has destroyed, data from the missing 52 seconds starting at 3:06:02 am, at the hard right turn onto Hampton Road from the bottom of Crest Road. Thank you for posting this breakdown.

To avoid crashing into the house at the bottom of the intersection at Hampton Road, the driver Soren Dixon may have caused a serious braking problem (over-braked?) on that right-hand turn after descending 0.6 of a mile downhill on Crest Road in a 9,500-pound monster loaded with 4 young teenagers, all 19 years old.

The cybertruck system controls were then further compromised after driving over a series of potholes on both driver’s and passenger sides present on the Hampton Road before a 4 way stop at La Salle Road.

After passing La Salle Road, there is a sloping upward tight curve along a wooded park on Hampton Road that banks 90 degrees to the left, so that must have confused system controls even more, losing contact with mother Tesla in the sky.

Most likely the on board controls became completely locked up after crossing La Salle Road so that no human intervention was possible until a second before the crash when the Cybertruck finally straightened out during the last .3 before the crash. Then the Cybertruck veered across Hampton Road and crashed into the tree.

Tesla cannot release the 52 seconds and will plead the Fifth Amendment; otherwise it will be found guilty. From Tesla’s scant press statements, Tesla is blaming the owner of the vehicle for not immediately getting the MOSFET inverter replaced per the recall issued 2 weeks earlier.