Recently I wrote about the Tesla “Cyberhammer” Nazi symbolism, after having written about the Tesla 8/8 launch date fiasco. Now Tesla has unveiled a new, completely unnecessary and overpriced product: a bottle of mezcal. Notably, many people have pointed out to me that two of the bottles placed side by side form the infamous Nazi SS rune.

The bottles hold 750ml each, or 1500ml for a pair, and are allegedly an update of a 2020 design blamed on Javier Verdura, Tesla’s Director of Product Design.

In fact, the official Tesla release statement for the mezcal claims that Javier’s infamous Nazi SS design was meant to honor his roots and his early life in Mexico City:

The bottle was designed by Tesla’s Director of Product Design, Javier Verdura, in honor of his Mexican roots and early life growing up in Mexico City.

Honor his roots how? The vast majority of mezcal is “artesanal” and “joven” (unaged), and the vast majority of agave used is “espadín”, so this bottle says basically nothing. The details couldn’t be more superficial and generic.

I hate to be the one to point this out, but a mezcal bottle is usually meant to share the particular details of the people who make it and their methods, which the Tesla design absolutely does not “honor” in any way.

“I look for the name of the person who made it—the mezcalero or mezcalera—the town where it’s made, and the mezcal varietal, such as espadín,” says Boehm [owner of The Cabinet, an agave spirit-focused bar]. “And the more information, the better.”

So who made Tesla’s mezcal? The Tesla website just says Nosotros. That brand turns out to be the creation of a recent Loyola Marymount (LA) graduate who in 2015 had “a college assignment” to imagine a business, so he created one in California.

I discovered tequila when I moved from Costa Rica to California… Our big break came from the San Francisco World Spirit Competition. With less than $1,000 in the bank, we submitted our Blanco and won multiple awards, including Best Tequila of the Show! Suddenly, we had the attention of buyers everywhere, and that led to our first string of large order sales. The first two years were spent focusing on small boutique restaurants. Now, we’re focused on continuing to grow our retail presence. After three years of development, we just recently launched our Mezcal.

That’s it. That’s Tesla mezcal. So how again is this design concept honoring Mexican roots?

I’m going to go out on a limb and guess that we are supposed to connect the lightning bolt bottle design to the ancient legend of an agave plant in Mexico struck by the gods and mystically delivering alcohol. However, even this Mexican connection seems far-fetched: why would Tesla honor real roots when it clearly doesn’t care about Mexico at all? If the lightning theme is supposed to fit somehow, the mezcal press release could be accused of burying that Mexican heritage, since Tesla hasn’t explained anything and has done such a poor job of giving anyone a reason to believe it.

More specifically, Tesla chose a very obvious Nazi-looking lightning bolt design. How is that supposed to make us think about the ancient fertility goddess Mayahuel and her four hundred breasts, often depicted as agave leaves with “drunken bunnies” sipping on them? The Aztec symbolism, such as the phrase “drunk as 400 rabbits” (Centzon Tōtōchtin), representing infinite intoxication, would make far more sense.

Anyway, I digress. There’s really nothing about a Nazi SS-shaped lightning bolt that connects us to Mexico, least of all for a bottle filled by a Costa Rican business school student in California. A design with plausible roots, featuring fertility or a bunch of stumbling drunk rabbits, would have been a much clearer tie-in.

Do you know who really promoted the lightning bolt into Mexican culture, specifically for tequila?

Can you guess?

It was two British guys from Peckham, England, who drank a Siete Leguas Añejo in 2017 and then quit their jobs to start a competing brand in 2019 called El Rayo (lightning).

Once in Mexico we linked up with a local designer, Mario and he showed us a culture that blew our minds! Forget sombreros and cactuses, this was modern Mexico… but after 3 trips and a lot of sips! Lightning struck and in May 2019 El Rayo ⚡ was born!

Lightning struck in 2019. Could two vacationing British nerds be any more awkward about how they reframed Mexican culture to better suit their own tastes?

And did I mention that Tesla claims it alone came up with a lightning concept for a tequila bottle in 2020? Yeah, oops, Tesla, your designer is busted. The idea looks to have been stolen from these two English guys, who weren’t even pretending to care about Mexican heritage: they repeatedly boast that they invented lightning branding for tequila to erase the past.

I wish I could say it was the first thing we came up with but it wasn’t, we had a fairly bleak process to get to El Rayo – but it was worth the wait! It actually came from a book that Jack’s brother gave to him! Lightning fits in with our brand world – we want to be a bold and exciting presence and it will be a key asset for the brand moving forward.

A book? What book? A bleak process to get to a “bold” new El Rayo sounds like the literal opposite of Tesla claiming lightning is meant to honor an old life in Mexico. What book?

And that’s what makes this so interesting as a design issue. Tesla did not say the design is about electricity or electric cars. It said the design is about Mexico. Yet the lightning branding for tequila was, by its inventors’ own account, a break from Mexican tradition, and the El Rayo team never made anything that even remotely resembled Nazi symbolism. So the lightning concept is a break from Mexican life, not an homage to it, and Tesla is exposed.

Also accompanying this release of Tesla mezcal “SS” bottles are two cups, each featuring a letter “S”. Placed together, these “SS” cups have a combined capacity of 88ml, a number notoriously used as code for “Heil Hitler” in neo-Nazi circles.

Price: $55

Features: Holds 1.5 oz (44 ml)

Perhaps you know that when you buy random mezcal cups in Mexico, they range from 1oz to 3oz or more and usually come in sets of four wide-mouthed bowls, each called a “copita”.

“It allows the nose to get close to the mezcal while making it easy to sip,” says Jon Bamonte, lead bartender at Philadelphia’s Vernick Fish, which partners with Mezonte mezcal for the bar’s agave program.

Alas, Tesla is promoting exactly 88ml to its customers, in weirdly tall cups that look nothing like the proper shape. The affinity for that number is not new, as I’ve written before. Tesla has featured it in many other places, though the company never directly admits its meaning. Given how often 88 comes up for the company, the two cups with “SS” imagery fit into a clear and disturbing association with Nazi ideology.

Charge Plugs: 88

Model Cost: 88

Average Speed: 88

Engine Power: 88

Voice commands: 88

Taxi launch date: 8/8

The mezcal bottles and cups might be viewed by some as just another step in a long-running political extremism joke, but the historical and cultural sensitivity surrounding these symbols suggests a need for greater awareness and responsibility in product design and marketing. Tesla went with the worst lightning bolt design possible, backed by a dubious backstory apparently stolen from a directly competing brand. For what?

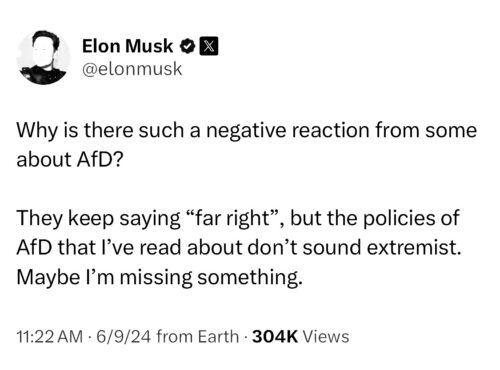

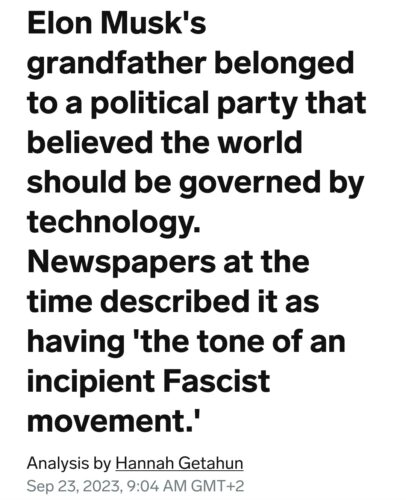

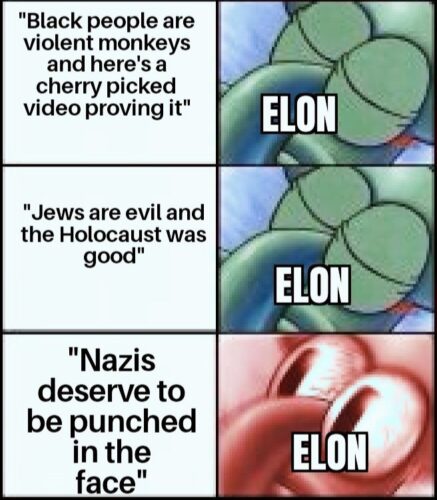

Given the well-documented associations of the Nazi SS runes and the number 88 with white supremacist groups, the context cannot be overlooked, especially in light of the pattern at Tesla. This incident adds to a series of ongoing controversies involving its CEO, including active support for Nazi politicians today, raising broader questions about his past and present political work.

Elon Musk seems to have a troubling penchant for using his brands to flirt with Nazi symbolism and spread toxic ideologies. His social media antics often feel like a calculated effort to disseminate hate and disinformation. Some observers even suggest he’s become a prominent figure in promoting white supremacist rhetoric, casting a damaging shadow over his public persona. Whether in Germany or elsewhere, Musk’s actions speak louder than his tweets, and they paint a disturbing picture.