In 2010, I called bullshit on the security industry’s Stuxnet panic: it was a multi-state code assembly operation, not a cyber Pearl Harbor. Right? Riiiight? Here’s the evidence I was right, including my predictions of how these attacks would evolve.

The Big Call: Failed Detection, Not Attack Success

My central thesis in 2010 was as controversial as penguins flying:

The failure of anti-malware is turning into the real issue, rather than true zero-day risks.

The industry was fixated on Stuxnet’s four zero-days and its apparent sophistication. I argued we were looking at the wrong problem.

Notably, I often countered the all-too-common claim that “attackers only have to be right once” with the more accurate lesson that “defenders only have to be right once”.

The verdict: This proved correct, even more dramatically than I anticipated.

When Stuxnet’s source code became available for download and modification, as Sean McGurk from the Department of Homeland Security warned in 2012, the real issue became clear: the capabilities spread far beyond the original attack. The problem went beyond one weapon because that weapon’s simplified supply model became a blueprint.

Similarly, Zeus’s source code leaked in 2011, spawning hundreds of variants. GameOver Zeus emerged with decentralized peer-to-peer architecture specifically designed to resist takedowns. Its creator Evgeniy Bogachev remains wanted, and new variants continue evolving. The malware didn’t need to be novel; it just needed to stay one step ahead of defenders getting their kill shot.

Network Controls Over OS-Level Detection

In 2010, I also wrote here on the blog:

Controls outside the OS thus might have made the real difference, just like we hear about with the Zeus and Storm evolutions, rather than true zero-day risks.

Microsoft had added Storm to MSRT in 2007 and wasn’t optimistic about its demise. They predicted Storm would “slowly regain its strength.” But Storm did decline significantly by mid-2008. Microsoft took credit.

Then Storm returned.

The evidence shows how and why: When Storm resurrected as the Waledac botnet in 2008-2009, researchers identified it as the same operators using completely rewritten code that preserved Storm’s operational model while abandoning the P2P protocol that enabled detection. The operators learned from Storm’s takedown and rebuilt from scratch with the same business logic but different technical implementation.

The sophistication wasn’t in the code itself but in understanding what worked and what got detected. Makes sense, right? This isn’t Pearl Harbor, not by 100 miles.

Storm’s operators learned lessons. The Waledac variant specifically abandoned the “noisy” eDonkey P2P protocol, which had made detection trivial, and switched to HTTP communications that were harder to filter. Remember eDonkey? I certainly remember: I caught “SOC as a service” providers secretly disabling eDonkey alarms to reduce their response costs and increase margins, ignoring the security implications entirely. Attackers understood what I had also observed (hat tip to Jose Nazario’s pioneering 2005 work): the battle over malicious code detection was best fought in network behavior analysis (like early air superiority to slow or repel an invasion, versus later costly urban street battles).
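To make the “noisy P2P” point concrete, here is a minimal sketch of the kind of network behavior check I mean. It is an illustration only, not how any specific product or the actual Storm takedown worked. The function name, the flow format, and the threshold of 50 peers are all my own assumptions: it simply flags any host that contacts an unusually large number of distinct peers in one time window, with no knowledge of what code is running on that host.

```python
from collections import defaultdict


def flag_high_fanout(flows, max_peers=50):
    """Return source hosts that contacted more than max_peers distinct peers.

    flows: iterable of (src_ip, dst_ip) pairs observed in one time window.
    max_peers: hypothetical threshold; tune it against a real baseline.
    """
    peers = defaultdict(set)
    for src, dst in flows:
        peers[src].add(dst)
    return sorted(src for src, dsts in peers.items() if len(dsts) > max_peers)


# Illustrative traffic: one workstation talks to 3 servers, another
# sprays connections at 200 distinct peers, as a P2P bot might.
quiet = [("10.0.0.7", f"192.0.2.{i}") for i in range(3)]
noisy = [("10.0.0.9", f"198.51.100.{i}") for i in range(200)]
print(flag_high_fanout(quiet + noisy))
```

The check never inspects a binary, so a rewritten payload changes nothing about what it sees. That is also why moving to HTTP made sense for the operators: it removes the behavior, not just the signature.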

The Stuxnet Multi-State Actor Call: Nailed It

Here’s what I actually got completely right in my “Dr. Stuxlove” presentation at the BSides San Francisco conference on February 15, 2011: Stuxnet was a multi-state national campaign.

This wasn’t obvious at the time. While security researchers were debating whether it might be sophisticated hackers or perhaps a single nation-state operation, I publicly and openly identified it as coordinated action involving multiple governments working together.

Looking at my presentation again now, the framing was clear: I positioned Stuxnet within the context of Cold War history and 1953 Operation Ajax (CIA-sponsored coup in Iran that flipped Truman-era foreign policy upside down and removed the elected leader Mossadegh to force the Shah into unitary power to secure oil for the UK).

The entire talk built toward understanding Stuxnet as part of a historical pattern of US-UK (and Israel, let alone Pakistan) coordinated operations targeting Iran’s strategic capabilities.

This identification of multi-state coordination turned out to be exactly correct. In 2012, just over a year after my presentation, the Obama administration effectively confirmed US involvement, and leaks to the press from officials strongly indicated it was a joint US-Israeli operation, with the malware tested at Israel’s Dimona nuclear complex before deployment.

Then in 2015 a CIA technologist reading my ICS attack retrospective smirked, shrugged and left without further comment. Classic non-denial of things that officially do not exist, as if not meant to be easy to prevent.

To me, the most sophisticated aspect was that it moved through many actors across boundaries (e.g. Germany, Iran, Pakistan, Israel, US, Russia), requiring knowledge from inside areas not easily accessed or learned.

The Sophistication Debate

Sophistication just means not well understood. It doesn’t mean good. It doesn’t mean effective. It just means obfuscated, so far.

Given Storm’s P2P protocol was caught and dismantled by network analysis, rather than OS-level detection catching every variant, a decade of resources blown on endpoint cowboy logic needs better… investigation.

The code sophistication question is nuanced, requiring expert pattern analysis rather than emotional appeals. In the 2010 blog post, I argued Stuxnet “is not as sophisticated as some might argue but instead is rehashed from prior attacks” and the subsequent evidence proved this was essentially right.

As we looked closer and more was revealed, we realized much of the coding was already known and wasn’t the surprise.

Security researchers concluded Stuxnet required a team of ten people and at least two to three years of nation-state development. That engineering coordination was real, as real as a $600 toilet seat, and the sophistication came from intelligence-guided assembly of existing components. Code reuse was coupled with good intelligence for specific targets. That model deflated costs dramatically, and I predicted privately funded threats would very soon adopt it and make it more efficient.

In other words, I was mostly correct that the attack’s sophistication was far more about cost deflation through production methods: the “rehashed from prior attacks” angle coupled with intelligence gathering.

Research later revealed that Stuxnet developers collaborated with the Equation Group in 2009, reusing at least one zero-day exploit from 2008 that had been actively used by the Conficker worm and Chinese hackers. The attacks were built on existing frameworks and tools. The “Exploit Development Framework” leaked by The Shadow Brokers in 2017 showed significant code overlaps between Stuxnet and Equation Group exploits.

The sophistication didn’t come from inventing everything from scratch so it would be unknown. It came from the hidden coordination required to assemble state-of-the-art offensive cyber capabilities from multiple intelligence agencies (NSA, CIA, and Israel’s Unit 8200) into a single, precisely targeted weapon entering a particular supply chain.

That’s exactly what a multi-state actor campaign looks like, and that’s what I identified in the Dr. Stuxlove presentation while everyone else was still debating script kiddies versus lone wolf nation-states. Richard Bejtlich famously walked out of my talk clearly disgruntled by my “your controls may still work” framing of the “intelligence revolution”.

The irony? By 2016 he was out praising academic rigor for intelligence work while remaining completely blind to the fact that the “outside” community he came to admire was in my 2011 presentation. He initially roasted the value of what he would claim to respect five years later.

The Zeus Resurrection Prophecy

My 2010 blog post observation about Zeus’s mythological namesake – “Cretans believed that Zeus died and was resurrected annually” – also turned out more prophetic than I intended. I wrote:

In modern terms Zeus would be killed and then resurrect almost instantly.

This is exactly what happened.

Microsoft announced Zeus detection in MSRT. The botnet operators immediately released updated versions. When law enforcement achieved major disruptions, new variants emerged. The pattern repeated for over a decade.

The GameOver Zeus disruption by the FBI in 2014 (Operation Tovar) seemed successful. Five weeks later, security firm Malcovery discovered a new variant being transmitted through spam emails. Despite sharing 90% of its code base with previous versions, it had restructured to avoid the specific takedown methods that had worked before.
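The 90% figure is worth pausing on, because it shows why exact-match signatures lose this race. Below is a rough sketch using synthetic random bytes as stand-ins for two binaries (no real samples involved). The helper names and the 4-byte shingle size are my own illustrative choices, and Jaccard similarity over byte shingles is just one simple way to measure overlap, not a claim about how Malcovery or anyone else did it.

```python
import hashlib
import random


def sha256_signature(data: bytes) -> str:
    """Exact-match signature: any single changed byte yields a new value."""
    return hashlib.sha256(data).hexdigest()


def shingles(data: bytes, n: int = 4) -> set:
    """Set of all overlapping n-byte substrings of data."""
    return {data[i:i + n] for i in range(len(data) - n + 1)}


def similarity(a: bytes, b: bytes, n: int = 4) -> float:
    """Jaccard similarity of the two shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Synthetic stand-ins for two binaries: the variant keeps the first 90%
# of the original's bytes and replaces the last 10%.
rng = random.Random(0)
original = bytes(rng.getrandbits(8) for _ in range(1000))
variant = original[:900] + bytes(rng.getrandbits(8) for _ in range(100))

print(sha256_signature(original) == sha256_signature(variant))
print(round(similarity(original, variant), 2))
```

The hash comparison fails outright while the overlap score stays high, which is the whole economics of the variant game: the attacker pays for the 10%, and the defender relying on exact matches pays for 100% again.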

As of 2025, Zeus variants still continue to evolve. The annual death-and-resurrection cycle I joked about in 2010 became the operational reality.

What This Means Now for CISOs

The patterns I identified in 2010 have become the dominant paradigm, which is both good and also very bad:

Evolutionary advantage beats innovation. Malware doesn’t need to be revolutionary; it needs to adapt faster than defenses can be deployed. Zeus and Storm both demonstrated that reusing 90% of a compromised code base while changing just the 10% that enables detection evasion is cost effective.

Early strategic behavior monitors matter more than pervasive signatures. The most effective interventions against Storm weren’t the ones that tried to identify malicious code on any and all infected machines. The best interventions were the ones that disrupted the botnet’s communication architecture. This insight built on the behavioral analysis, traffic monitoring, and defense-in-depth strategies of the early 2000s.

Source code sharing multiplies threats exponentially. When Zeus and Stuxnet source code became available, the threats proliferated. Each dump created a foundation for dozens of variants. The problem of containing one sophisticated attack becomes a matter of identifying the ecosystem of derivative threats.

The cost of defense rises relative to attack. My observation that “The cost of a Zeus attack has just gone up” after Microsoft’s MSRT update was accurate in the short term. But it also proved the inverse: each defensive measure raises the sophistication floor for successful attacks, creating an arms race that favors whoever can afford to continuously evolve their tools with the lowest overhead.

The Geopolitical Shift

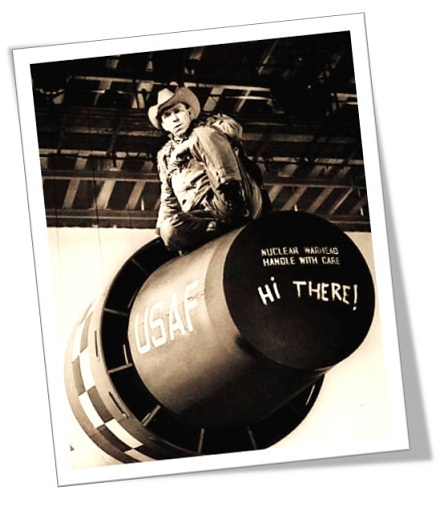

If there’s one thing I harped on the most in 2010, it was the geopolitical dimension. Stuxnet wasn’t just a look into sophisticated tooling; it was a moment where state operations could be more publicly debated. The U.S. and Israel’s apparent use of a cyber weapon to physically destroy centrifuges at Natanz legitimized offensive cyber capabilities in ways that shape international relations, the kind of capability Israeli Prime Minister Golda Meir could only have dreamed about.

She dealt with the usual constraints on special operations (the 1967 war, the 1972 Olympics response, etc.), where physical presence, attribution, and international law were boundaries. Stuxnet operations flowed inside Iran without such constraints: remote, deniable, legally ambiguous.

Retired Air Force General Michael Hayden noted in 2012 that while Stuxnet might have seemed “a good idea,” it also further legitimized code as an offensive weapon for physical damage. The Stuxnet code exposure meant others could “take a look at this and maybe even attempt to turn it to their own purposes.”

The sophistication was also in the strategic planning for engineers, which has proven far more durable and consequential than any individual piece of malware code.

The Code Reuse Insight: More Prescient Than I Knew

Here’s what I really worried about in 2010: the code reuse and framework assembly behind Stuxnet was industrialization, a new pattern in threat economics, and it is how the entire technology industry works now.

The observation that sophisticated attacks are “assembled from components that represented the state of the art” rather than “entirely novel engineering from scratch” turned out to describe not just malware evolution, but the fundamental architecture of modern AI systems evolving since 2012.

Large language models?

Code reuse at massive scale by training on existing text, assembling patterns from prior work, remixing and recombining what already exists. The “Exploit Development Framework” that connected Stuxnet to Equation Group exploits looks remarkably similar to how AI model frameworks connect different components today.

The whole AI industry is built on the same principle I identified in Stuxnet: sophisticated capability emerges from intelligently assembling and coordinating existing components, not from inventing everything from scratch. Transfer learning, fine-tuning, prompt engineering, RAG systems… all of this reveals human nature through reuse and recycling.

The attackers understood in 2009 what the AI industry rediscovered in the 2020s: history tells us the most powerful systems aren’t most novel, they’re the ones that intelligently coordinate and assemble existing state-of-the-art capabilities into something greater than the sum of its parts. You don’t need a generalized bag-of-tricks if you know your targets well enough to land a very special operation.

That’s the real insight from 1953 (Ajax) and 2010 (Stuxnet), let alone 1940 Mission 101, landing in 2025. That’s what I got right about Stuxnet. And that’s the pattern that explains far more than just malware.

Error Analysis

The error I made in 2010 was assuming the security industry would shift toward integrity and away from sensationalization. I thought ample evidence (Storm’s P2P dismantling, Zeus’s resurrection cycle, Stuxnet’s inexpensive component assembly) would somehow shift how organizations allocated budgets and how vendors built products, toward higher quality measures grounded in outcomes.

Sigh.

Instead, the industry doubled down on signature-based snake oil that failed in 2010, with even more aggressive marketing.

CrowdStrike’s Falcon sensor that blue-screened 8.5 million Windows machines in July 2024 because of a botched content update? That’s the OS-level detection model I argued against fifteen years ago, now sold as “next-generation” with a $90 billion valuation. Can we call out the marketing garbage yet? I warned about this from the day I sat on an RSAC panel in San Francisco with the founder, where he said nobody in the room should be allowed to record our comments.

Way to go, George. Hope you enjoy your yacht built on our industry’s suffering.

The Intelligence Pipeline Grift

Even worse than any marketing executive being a shameless opportunist is the intelligence-to-commercial pipeline transition: operators trained in behavioral threat analysis for military targets still flog signature detection products in commercial markets. That’s apparently not an accident.

When NSA/GCHQ/Unit 8200 veterans build commercial tools, they know behavioral analysis works better than signature scanning. They used it in their state operations and that’s how Stuxnet actually worked, with deep intelligence about Natanz’s specific systems rather than generic exploit attempts.

But behavioral analysis, like system integrity monitoring, doesn’t yet scale to enterprise contracts, and it doesn’t translate to a fluffy IPO at $12 billion valuations.

So they strip out quality intelligence components and sell signatures with branding. CrowdStrike: “former intelligence expertise.” Wiz: “Unit 8200 pedigree.” What they don’t tell you is they’re selling the exact wrong parts of what they learned.

Wiz raised $1 billion at a $12 billion valuation to scan cloud configurations for known vulnerabilities, while arguably failing privacy tests. That’s signature detection with an Israeli intelligence strings story. The operators who built it were soldiers from the special operations Unit 81, where they learned targeted behavioral analysis of specific adversaries. But the commercial product? It scans for 50,000 known misconfigurations, which is signature detection.

Why would self-labelled untouchable “Wizards” build systems that actually reduce misconfigurations when their valuation depends on enterprises needing to scan for more of them perpetually? Dare I bring up the self-licking ISIS-cream cone analogy again? Security systems like Palantir seem to think money should come from creating and perpetuating the threats they claim to detect, measuring their own chaos as activity rather than improving outcomes.

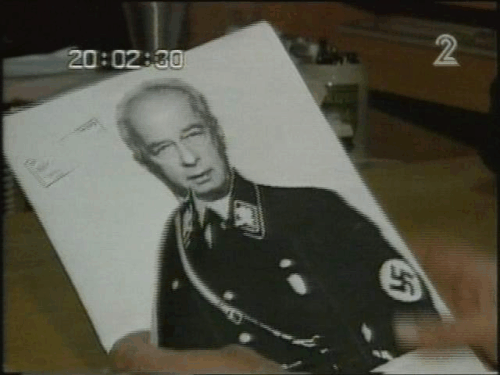

The photo of Netanyahu’s supporters depicting Rabin in Nazi uniform weeks before his assassination isn’t just historical documentation of Israeli political extremism. It’s evidence of the signature detection failure mode in physical form.

Netanyahu’s political apparatus knew the behavioral threat pattern: inflammatory rhetoric depicting opponents as existential enemies radicalizes extremists who then act on that framing. They had all the behavioral indicators. They chose to amplify the threat pattern rather than mitigate it.

Then when Yigal Amir assassinated Rabin, they acted shocked – despite having created the exact conditions for that outcome through their own propaganda.

This is precisely the same failure mode as signature-based cybersecurity. You can’t stop threats by only looking for known signatures when the real threat is the behavioral pattern you’re actively enabling. Israeli intelligence veterans spinning out cybersecurity startups aren’t just capitalizing on their training – they’re monetizing threat perpetuation rather than threat elimination.

The goal isn’t security. The goal is managing insecurity profitably.

I thought calling out the bullshit would help detect the security theater actors. Instead, even dudes I know and personally worked with ran off to make everything more expensive for their personal profit by switching everyone to cloud APIs. The defenders still aren’t learning as fast as the attackers because learning doesn’t have a revenue model, while selling fear to nation states does.

McAfee was simply a pioneering fraudster.

Fifteen years gone already and the fundamental insight holds: the real security challenge shouldn’t be flashy eye candy about preventing the next sophisticated zero-day attack. We must be building defensive systems with agility meant to adapt as quickly as attackers evolve their tools.

Instead of slow stone walls, we should be rolling out inexpensive telegraph wire with barbs wrapped on it (e.g. the revolution barbed wire brought to land ownership). It’s understanding that intelligence-based detection and response matter far more than mythically promoted prevention. It’s recognizing that behavior analysis and network monitoring aren’t luxuries; they’re necessities in an environment where malware resurrects and returns annually, like Zeus in modern digital form.

Code changes. Techniques evolve. Yet the historic patterns remain consistent: attackers learn and adapt, especially where defenders do not. The question for defenders isn’t “How do we stop this attack?” but “How do we build systems meant to evolve faster than attackers?” Why aren’t defenders learning as much if not more than attackers, especially since defenders have the insider learning advantage?

That was true in 2010, and we could have done more, better, faster.

Care to make any guesses what patterns are visible now in 2025 that will explain the next decade?

Happy to blog more every day!

Let’s talk, for example, about AI agent swarms assembled from commodity components, targeted with specific intelligence, operating remotely/deniably in a 20km dead zone. This is Stuxnet’s component assembly + Ajax’s targeted intelligence + cyber’s remote/deniable operations, applied to post-2012 autonomous systems.

Giddy up 2035.

Sour grapes? So you’ve documented 15 years where being right didn’t matter because learning doesn’t have a revenue model. Would you rather be so rich than be so wise? I think not.

Wiz will rot in hell for what they have done. Clownstrike should be sued into oblivion.