A CISO announces a dangerous “unsolved security problem” in his product when it ships. We’ve seen this playbook before.

OpenAI’s Chief Information Security Officer (CISO) Dane Stuckey just launched a PR campaign admitting the company’s new browser exposes user data through “an unsolved security problem” that “adversaries will spend significant time and resources” exploiting.

This is a paper trail for intentional harm.

The Palantir Playbook

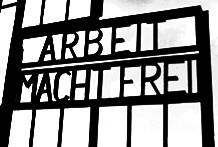

Dane Stuckey joined OpenAI in October 2024 from Palantir – the self-licking ISIS cream cone that generates threats to justify selling surveillance tools to anti-democratic agencies (e.g. Nazis in Germany). His announcement of joining the company emphasized his ability to “enable democratic institutions” – Palantir double-speak for selling into militant authoritarian groups.

The timeline:

- Oct 2024: Stuckey joins OpenAI from Palantir surveillance industry

- Jan 2025: OpenAI removes “no military use” clause from Terms of Service

- Throughout 2025: OpenAI signs multiple Pentagon contracts

- Oct 2025: Ships Atlas with known architectural vulnerabilities while building liability shield

He wasn’t hired to secure OpenAI’s products. He was hired to make insecure products acceptable to government buyers.

The Admission

In his 14-tweet manifesto, Stuckey provides a technical blueprint for the coming exploitation:

Attackers hide malicious instructions in websites, emails, or other sources, to try to trick the agent into behaving in unintended ways… as consequential as an attacker trying to get the agent to fetch and leak private data, such as sensitive information from your email, or credentials.

He knows exactly what will happen. He’s describing attack vectors with precision – like a Titanic captain announcing “we expect to hit an iceberg, climb aboard!” Then:

However, prompt injection remains a frontier, unsolved security problem, and our adversaries will spend significant time and resources to find ways to make ChatGPT agent fall for these attacks.

“Frontier, unsolved security problem.”

OpenAI’s CISO is telling you: we cannot solve this, attackers will exploit it, we’re shipping anyway.

Technical Validation

Simon Willison – one of the world’s leading experts on prompt injection who has documented this vulnerability for three years – immediately dissected Stuckey’s claims. His conclusion:

It’s not done much to influence my overall skepticism of the entire category of browser agents.

Simon builds with LLMs constantly and advocates for their responsible use. When he says the entire category might be fundamentally broken, that’s evidence CISOs must heed.

He identified the core problem: “In application security 99% is a failing grade.” Guardrails that work 99% of the time are worthless when adversaries probe indefinitely for the 1% that succeeds.

We don’t build bridges that only 99% of cars can cross. We don’t make airplanes that only land 99% of the time. Software’s deployment advantages should increase reliability, not excuse lowering it.

Simon tested OpenAI’s flagship mitigation – “watch mode” that supposedly alerts users when agents visit sensitive sites.

It didn’t work.

He tried GitHub and banking sites. The agent continued operating when he switched applications. The primary defensive measure failed immediately upon inspection.

Intentional Harm By Design

Look at what Stuckey actually proposes as liability shield:

Rapid response systems to help us quickly identify attack campaigns as we become aware of them.

Translation: Attacks will succeed. Users will be harmed first. We’ll detect patterns from the damage. That’s going to help us, we’re not concerned about them.

This is the security model for shipping spoiled soup at scale: monitor for sickness, charge for cleanup afterwards, repeat.

“We’ve designed Atlas to give you controls to help protect yourself.”

Translation: When you get pwned, it’s your fault for not correctly assessing “well-scoped actions on very trusted sites.”

As Simon notes:

We’re delegating security decisions to end-users of the software. We’ve demonstrated many times over that this is an unfair burden to place on almost any user.

“Logged out mode” – don’t use the main feature.

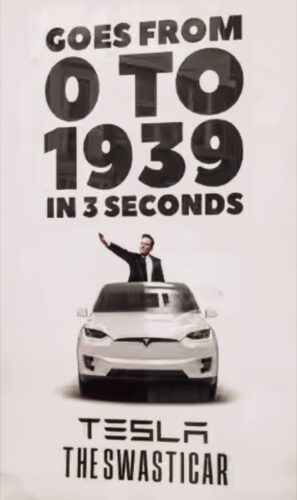

The primary mitigation is to not use the product. Classic abuser logic: remove your face if you don’t want us repeatedly punching it. That’s not a security control. That’s an admission the product cannot be secured, is unsafe by design, like a Tesla.

Don’t want to die in a predictable crash into the back of a firetruck with its lights flashing? Don’t get in a Tesla, since it says its warning system can’t be trusted to warn.

Juicy Government Contracts

Why would a CISO deliberately ship a product with known credential theft and data exfiltration vulnerabilities?

Because that’s a feature for certain buyers.

Consider what happens when ChatGPT Atlas agents – with unfixable prompt injection vulnerabilities – deploy across:

- Pentagon systems (OpenAI has multiple DoD contracts)

- Intelligence agencies (the board has NSA links)

- Federal government offices (where “AI efficiency” mandates are coming)

- Critical infrastructure (where “AI transformation” is being pushed)

Every agent becomes an attack surface. Every credential accessible. Every communication interceptable.

The NSA, sitting on the board of OpenAI must be loving this. Think of the money they will save by pushing backdoors by design through OpenAI browser code.

Who benefits from an “AI assistant” that can be exploited to exfiltrate data but has plausible deniability because “prompt injection is unsolved”?

State actors. Intelligence services. The surveillance industry Stuckey was getting rich from.

Paul Nakasone on the board goes beyond decoration and becomes their new business model.

The Computer Virus Confession

Stuckey closes with this analogy:

As with computer viruses in the early 2000s, we think it’s important for everyone to understand responsible usage, including thinking about prompt injection attacks, so we can all learn to benefit from this technology safely.

He’s describing the business model: Ship broken, ship often, let damage accumulate, turn security into rent-seeking over years.

Rent seeking. Look it up.

Remember Windows NT? Massive security holes from first release, on purpose. “Practice safe computing.” Cracked in 15 seconds on a network. Viruses everywhere. Years of damage before hardening.

But here’s the difference: Microsoft eventually had to patch vulnerabilities under regulatory pressure from governments, as well as stop making a monopolistic browser. How LLMs process instructions isn’t yet regulated at all. So this vulnerability is architectural, the NSA is going to drive hard into it, and there’s little to no prevention on the horizon. It’s not a bug to fix – it’s a loophole so big it prevents even acknowledging the risk.

Stuckey knows this. That’s why he calls it “unsolved” and invokes Stanford-sounding revisionist rhetoric about the “frontier.”

Documented Mens Rea

Let me be explicit what I see in the OpenAI strategy:

- Knowingly shipping a system that will be exploited to steal credentials and exfiltrate private data

- Documenting this in advance to establish legal cover

- Marketing to government and enterprise customers with a Palantir veteran providing security rubber stamp

- Responding to exploitation reactively, after damage occurs, while collecting revenue

- Treating infinite user harm as acceptable externality

This isn’t a CISO making a difficult call under pressure. This is a surveillance industry plant deliberately enabling vulnerable systems for undisclosed predictable harms.

That’s documented mens rea.

Theranos Comparison They Fear

Elizabeth Holmes got 11 years for shipping blood tests that gave wrong results, endangering patients.

Dane Stuckey is shipping a browser that his own documentation says will be exploited to steal credentials and leak private data – at internet scale, including government systems.

The difference? Holmes ran out of money. Stuckey has a $157 billion company backing him. And unlike Holmes and Elon Musk who claimed their technology worked, Stuckey admits it doesn’t work and ships anyway.

That’s not fraud through deception. That’s disclosure.

“Buyer beware. I warned it would hurt you. You used it anyway. Now where’s my bonus?”

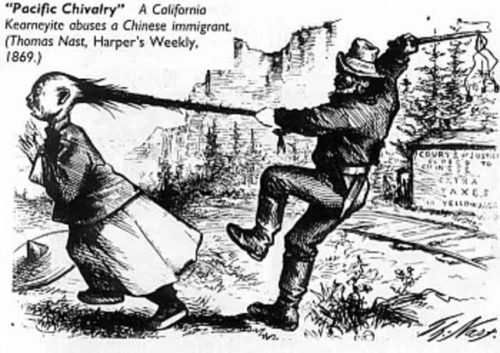

It’s a police chief announcing a sundown town: Our officers will commit brutality. There will be friendly fire. This is an unsolved problem in policing. We apologize in advance for all the dead people and deny the pattern of it only affecting certain “poor” people. Sucks to be them.

The Coming Harm

Stuckey’s own words describe what’s coming:

- Stolen credentials across millions of users

- Exfiltrated emails and private communications

- Compromised government systems

- Supply chain attacks through developer accounts

- State-sponsored exploitation (he admits “adversaries will spend significant time”)

OpenAI will respond reactively. Publish more specific attack patterns after exploitation and deploy temporary fixes. Issue updates to “safety measures.” Settle lawsuits with NDAs to undermine justice.

But the fundamental problem – that LLMs cannot distinguish between trusted instructions and adversarial inputs – remains unregulated and insufficiently challenged.

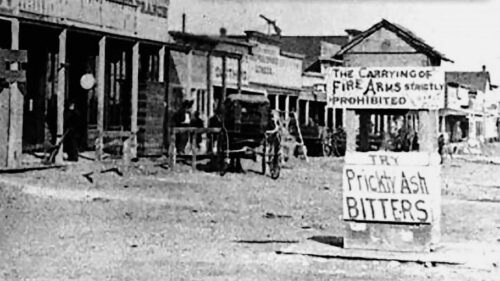

The Myanmar Precedent

I’ve documented before how CISOs can be held liable for atrocity crimes when they enable weaponized social media.

Facebook’s CSO during the Rohingya genocide similarly:

- Was warned repeatedly about platform misuse enabling violence

- Responded with “hit back” PR campaigns claiming critics didn’t understand how hard the problems were

- Argued that regulation would lead to “Ministry of Truth” totalitarianism

- Enabled nearly 800,000 people to flee for their lives while saying consequences matter

New legal research on Facebook established that Big Tech’s role in facilitating atrocity crimes should be conceptualized “through the lens of complicity, drawing inspiration… by evaluating the use of social media as a weapon.”

Stuckey is wading into the same dangers and with government systems.

Professional Capture

This isn’t about one vulnerable product. It’s about what it represents: the security industry doesn’t have any prevention let alone detection standards for a captured CISO.

The first CSO role was invented by Citibank after a breach as a PR move, but there was hope it could grow into genuine protection. Instead, we’re seeing high-dollar corruption – an extension of the marketing department. CISOs are paid more than ever for leaning into liability management layers that simply document concerns regardless of harms. When I was hacking critical infrastructure in the 1990s, I learned about a VP role the power companies called their “designated felon” who was paid handsomely to go to jail when regulators showed up.

Stuckey could have refused. He could have resigned. He could have blown the whistle.

Instead, he joined OpenAI from Palantir to enable this, shipped it with a useless warning label, and is planning to collect equity in mass harms.

That’s not a security professional making a hard call. That’s a paper trail of safety anti-patterns (reminiscent of the well-heeled CISO of Facebook enabling genocide from his $3m mansion in the hills of Silicon Valley).

When Section 83.19(1) of the Canadian Criminal Code says knowingly facilitating harmful activity anywhere in the world, even indirectly, is itself a crime – and when legal scholars argue we should conceptualize weaponized technology “through the lens of complicity” – Stuckey’s October 22, 2025 thread becomes evidence… of documented intent to profit from failure regardless of harms.

And what does a BBC reporter think about all this?

OpenAI says it will make using the internet easier and more efficient. A step closer to “a true super-assistant”. […] “Messages limit reached,” read one note. “No available models support the tools in use,” said another. And then: “You’ve hit the free plan limit for GPT-5.”

Rent seeking, I told you.