The question nobody seems to want asked in print: what would it actually take to win against fascism in America?

Not to resist. To win.

The question has ugly answers, because Trump wants things to get as ugly as possible, which is perhaps why journalists avoid it.

We shouldn’t look at wrestling a big dangerous pig in the mud and think an article about stain remover for white socks is going to be our strategy.

What many seem to avoid saying: Trump is at war with America.

His team keeps saying they are waging war, calling innocent citizens domestic terrorists. Even those who defend innocent citizens being attacked by Trump are called domestic terrorists. Their war rhetoric is no accident. They’re not stupid. “War on Christmas”, remember?

In the early 1920s, Ford’s Dearborn Publishing Company released a four-volume set of essays penned by Ford and a handful of aides called The International Jew: The World’s Foremost Problem. […] In a sense, this was the first shot fired in the “War on Christmas” wars and a blueprint for how these arguments would play-out for the next century. At no point has Christmas or anyone’s right to celebrate it been under attack, yet this endures as a way to attack…

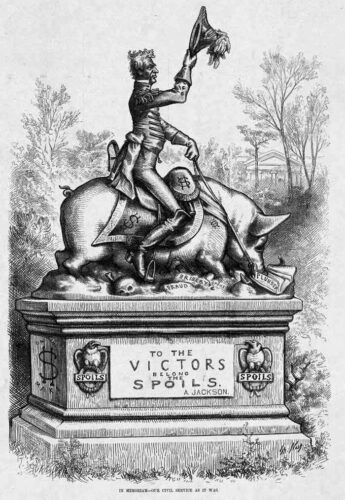

Trump wants his followers to believe they are fighting a very particular kind of war in America. A war that Henry Ford wrote about. Meanwhile the American “resistance” acts like a moral witness campaign, which is what you do to feel good about losing. You end up starving to death holding nothing but receipts, while Trump gorges himself.

Let’s be honest about the dangers, because this is the exact reason why we study the rise of fascism in the 1930s so carefully and thoroughly.

This is why it’s time to make the parallels to Henry Ford and his disciple Adolf Hitler: by the time you’re organizing food deliveries to families in hiding and keeping children indoors, you’ve already lost the phase of the conflict where winning was still within democratic norms.

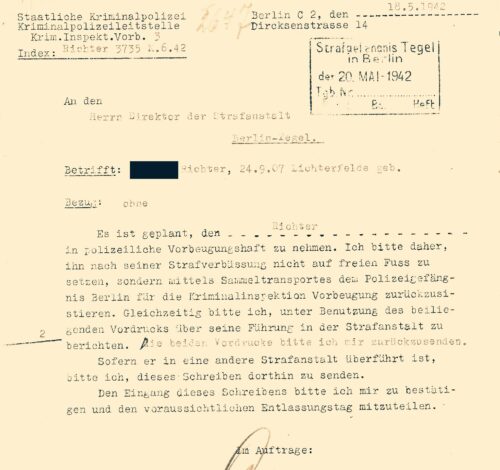

After the Nazis seized control, the SPD kept publishing newspapers and organizing workers, believing that exposing the Trump-like brutality would matter in 1933. They were documenting their loss of democracy in real time while calling it a resistance.

Imagine measuring water rising inside the Titanic and saying “shovel coal, pump faster.” There’s a certain level of situational awareness that predicts whether you are calculating actual survival paths.

The Atlantic, for example, published a sedative disguised as a stimulant. It makes readers feel something is being done in America about Trump. That evidence of courage is being recorded, that some community is holding, that the arc is bending. You finish reading and feel… what? Inspired? Reassured some good guys exist?

Minnesota Proved MAGA Wrong:

The pushback against ICE exposed a series of mistaken assumptions. By Adam Serwer

Has a Holocaust movie ever been made that didn’t include a positive angle somewhere about survival or a glimmer of humanity? We cannot culturally process atrocity without a redemptive frame. Schindler has to save some. The boy in striped pajamas has to represent innocence. We’re narratively incapable of confronting “and then it just got worse, and worse, until external force stopped it.”

I have two degrees in it. Go figure.

That need for positive emotional release, as displayed by The Atlantic, risks obliterating the signs of actual danger. It substitutes for the harder but honest recognition that Minnesotans have been losing, badly.

Alex Pretti was unmistakably good. A real American hero serving his country, and he was publicly executed for it.

And that was right after Renee Good, also an innocent citizen, was publicly executed.

ICE killed as many people last year as the four prior years combined.

Tens of thousands are in detention already, with ICE concentration camp plans underway to detain hundreds of thousands without due process.

Families are hiding.

Children are being sent away and shielded from the horrors of ICE.

Three hundred forty-seven district court judges ruled against the administration’s detention policies, yet it didn’t amount to a hill of beans because Trump implemented a court bypass method from… 1933 Germany.

The state brings guns and shoots people who dare to carry them. The resistance has whistles.

And the Atlantic piece frames all this disparity as victory because Bovino got fired after months of wearing a literal Nazi uniform while parading thousands of Trump stormtroopers through cities, beating, detaining and killing innocent people?

That’s the definition of victory now? At this rate, by next year the definition will be a slice of bread on the table.

Let me tell you some Minnesota history that almost never gets told. I’ve personally confronted Nazis in Minnesota, and I’ve spent decades working on this subject. Here’s some of what needs to be said.

The Silver Shirts came to Minneapolis in the late 1930s planning rallies, organizing, building the same momentum that their German counterparts had used. What stopped them wasn’t the ACLU or public solidarity or moral witness.

Nope. None of those made the difference.

It was Meyer Lansky’s networks coordinating with local Jewish communities to show up at Nazi rallies and beat them bloody. Repeatedly. Until organizing a fascist rally meant your people would end up scared for their lives and in the hospital.

This is the history that doesn’t fit the narrative. American fascism in 1930s Minnesota wasn’t defeated by democratic norms or courageous nonviolent resistance. It was defeated by private organized pro-democratic violence that the state permitted through selective blindness.

The Jewish community in the 1930s didn’t write articles about the Silver Shirts. They busted heads and broke bones until organizing fascist rallies became physically dangerous. That history is so uncomfortable to tell precisely because it’s instructive. It’s also almost impossible to find evidence of it, for good reason.

So what’s the 2026 connection? Reversal.

The state holds a monopoly on legitimate violence. When the state becomes fascist, any effective resistance is illegitimate by definition – the fascist state controls what “legitimate” means.

That’s the trap.

Trump understands this. That’s why he fraudulently pre-labels innocent nurses and moms as “domestic terrorists” before they’ve done anything, so the label is already in place when his troops murder them. Hegseth fraudulently invokes “enemy within” and “wartime footing.” They’re building bogus justification frameworks now, so everyone sees that extrajudicial execution is already authorized against any level of resistance.

In 1930s Minnesota, the state looked away while communities defended themselves against people who talked like Trump. That selective blindness made extralegal defense against Nazism possible.

Now? The guys in masks with guns ARE the state. The Klan, Nazis, Boogaloo and Proud Boys operate wearing federal authority masks. The institutions that might have looked away have been captured. There’s no third party. There’s no one to appeal to. Trump talks about governors and mayors like they are just one button-press away from detention or assassination.

Nonviolent resistance works when there’s a third party to appeal to: a conscience to shock, an institution to intervene, an ally with power. When the state itself is the aggressor and also controls the narrative, all the valor means little more than defeat.

What’s the theory of change that isn’t just “bear witness until something external comes to our rescue”?

The next six months will tell. If Trump continues trying to lower his popularity before summer to invoke military dictatorship and cancel fall elections, you will see how and why The Atlantic was wrong to spin sugary tales of success.