As everyone is probably aware from the headlines this week, a Tesla Cybertruck crashed in Piedmont, California, on November 27, 2024, killing three people.

While Tesla has lobbied hard to blame driver error, as is its typical strategy, technical data reveals anomalies that demand wider and deeper analysis. The question is what the vehicle's robotic systems were doing in the critical moments before three people were trapped and burned to death.

The 52-Second System Failure

Before examining the Tesla "deathtrap" doors, it's important to establish the state of the vehicle's systems in the moments leading up to the crash. The CHP report (070925-kgo-piedmont-crash-MAIT_Redacted.pdf) reveals a troubling pattern:

Timeline (Pacific Time):

- 03:02:02 – Autopilot State becomes “not available”

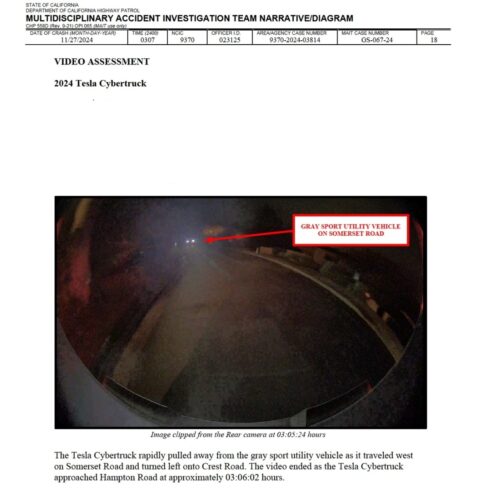

- 03:04:26 – Rear camera shows occupants entering/exiting vehicle on Somerset Road

- 03:06:02 – Video recording ends as Cybertruck turns onto Hampton Road

- 03:06:54 – Crash Algorithm Wakeup triggered (converted from 11:06:54.144 UTC)

That leaves a 52-second gap, between the end of video recording and the crash, during which the vehicle traveled approximately 0.4 miles with no video data. During this time, multiple systems were compromised: the Autopilot state had already been unavailable for nearly five minutes before the crash.

Critical System Status:

- Autopilot state "not available": 11:02:02.681 UTC (03:02:02 PST)

- Recording ceased approximately 03:06:02 PST

- Distance traveled during gap: approximately 0.4 miles

- Duration of gap: 52 seconds
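The timestamps above can be cross-checked with simple arithmetic. The Python sketch below is illustrative only. It assumes a fixed PST offset (UTC-8, which is valid because daylight saving time ended on November 3, 2024). It uses the two UTC values quoted from the CHP report plus the 03:06:02 recording end, and it treats the approximate 0.4-mile figure as exact. The variable and function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: PST is a fixed UTC-8 offset. Daylight saving time ended on
# 2024-11-03, so this holds for the night of 2024-11-27.
PST = timezone(timedelta(hours=-8), "PST")

# UTC timestamps as quoted from the CHP report.
autopilot_unavailable = datetime(2024, 11, 27, 11, 2, 2, 681000, tzinfo=timezone.utc)
crash_wakeup = datetime(2024, 11, 27, 11, 6, 54, 144000, tzinfo=timezone.utc)
# The recording end is only given to the second, in Pacific time.
recording_end = datetime(2024, 11, 27, 3, 6, 2, tzinfo=PST)

GAP_MILES = 0.4  # "approximately 0.4 miles"; treated as exact here


def pacific_clock(ts: datetime) -> str:
    """Render an aware timestamp as an HH:MM:SS Pacific wall-clock string."""
    return ts.astimezone(PST).strftime("%H:%M:%S")


# Time with no video: recording end -> crash algorithm wakeup.
video_gap = crash_wakeup - recording_end
# Time the Autopilot state was "not available" before the wakeup.
unavailable_span = crash_wakeup - autopilot_unavailable
# Average speed implied by the approximate distance over the whole gap.
# This is only an average; it cannot reveal the peak speed.
avg_mph = GAP_MILES / (video_gap.total_seconds() / 3600)

if __name__ == "__main__":
    print("Autopilot not available:", pacific_clock(autopilot_unavailable))
    print("Crash algorithm wakeup: ", pacific_clock(crash_wakeup))
    print(f"Video gap: {video_gap.total_seconds():.1f} s")
    print(f"'Not available' span: {unavailable_span}")
    print(f"Implied average speed over gap: {avg_mph:.1f} mph")
```

Run as written, the sketch reproduces the 03:02:02 and 03:06:54 Pacific times and a video gap of about 52 seconds. It puts the "not available" span at roughly 4 minutes 51 seconds. If the 0.4-mile distance is right, it also implies an average of roughly 28 mph across the gap. An average over 52 seconds cannot show what the peak speed was.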

Why did the rear camera stop recording in a system supposedly designed for redundancy and continuous recording? The rear camera is critical: it serves as the backup camera and provides data for the Autopilot/FSD systems.

The “Not Available” Problem

What does "Autopilot State Not Available" indicate? It is not an "off" or "disengaged" state. It is engineering language for a communication failure: the system cannot report anything, including its own status.

The Autopilot state became unavailable at 03:02:02, nearly five minutes before the crash. This wasn't an instantaneous failure; it was progressive system degradation. The vehicle continued operating for several minutes with compromised systems.

Possible Timeline:

- 03:02:02 – Autopilot computer becomes unresponsive or loses communication with MCU

- 03:04:26-03:06:02 – Vehicle continues operating with degraded system visibility

- 03:06:02 – Turn onto Hampton Road triggers additional system load; MCU recording stops

- 03:06:02-03:06:54 – Vehicle continues with multiple systems compromised

- 03:06:54 – Physical impact triggers crash algorithm

What Could Have Gone Wrong During the Turn

If vehicle systems were compromised, loss of control during a turn becomes likely:

Stability control degradation: If sensors or computers were malfunctioning, stability control might not have worked properly. The driver could have turned the wheel expecting a normal response but received a delayed or incorrect one.

Torque vectoring issues: The Cybertruck uses individual motor control for front and rear axles. If control systems were compromised, torque distribution during the turn could have been wrong, causing understeer or oversteer the driver couldn’t anticipate.

Sensor fusion failure: Modern vehicles fuse data from multiple sensors (wheel speed, yaw rate, steering angle, GPS, IMU). If this fusion was failing, the vehicle might have made control decisions based on incomplete or incorrect data.

The Steer-by-Wire Risk

Tesla heavily promotes the Cybertruck's steer-by-wire design, in which the steering wheel has no mechanical connection to the road wheels. It is the first mass-market vehicle with this system.

When that electronic link fails, steering fails.

If the MCU was experiencing problems (explaining why Autopilot state was “not available” and why the camera stopped recording), the steer-by-wire system—which depends entirely on functioning computers—could have been compromised during the turn onto Hampton Road.

The Pattern of System Failures

The Piedmont crash isn’t an isolated incident of Cybertruck system anomalies. In February 2025, a Florida software developer’s Cybertruck crashed into a light pole while on Autopilot, with the driver reporting the vehicle:

…failed to merge out of a lane that was ending [and] made no attempt to slow down or turn until it had already hit the curb.

Multiple Cybertruck owners have reported "Critical issue detected" warnings requiring immediate service. As recently as February 2025, brand-new Cybertrucks were being delivered with completely non-functional Autopilot systems, and service technicians were reportedly "stumped" and unable to diagnose the problems.

Owners have reported “Critical steering issue detected” and “Loss of system redundancy detected” warnings related to the steer-by-wire system’s sensors.

Tesla’s MCU also has a documented history of catastrophic failures. In earlier Tesla models, a failing navigation MicroSD card could cause the entire MCU system to crash and prevent it from restarting, with symptoms beginning with navigation issues before the system goes completely black. When MCU failures occur, some drivers report being unable to shift their vehicles into gear.

Two Very Tesla Problems

As has been the case with Tesla for years, local journalism has done the best job of investigating and explaining the recent Piedmont Cybertruck tragedy, a clear regression to the 1970s-era defect of doors that won't open in a fiery crash.

The Highway Patrol’s investigation into a November Cybertruck crash in Piedmont where three college kids died is finding two very Tesla problems: the vehicle immediately caught fire, and its doors would not open.

…the Bay Area News Group has been going through the testimony of the CHP investigation. And the deaths appear to be more the result of the vehicle fire… troublingly, that testimony also showed the Cybertruck’s doors could not be opened in the aftermath of the crash, preventing Riordan from pulling the other three victims from the flaming wreckage.

Riordan said that when he approached the burning vehicle, and tried to open the doors, they would not open. He said he “pulled for a few seconds, but nothing budged at all.” He also said “I then tried the button on the windshield of [survivor Jordan Miller’s] door, then [victim Krysta Tsukahara’s] door.”

He said he then pounded the windows with his fists, which did not work, and then struck the windows with a thick tree branch around a dozen times until he was able to crack and dislodge a passenger-side window. That was how he was able to pull Jordan Miller out of the vehicle.

But when he attempted to pull Tsukahara from that same window, Riordan testified, “I grabbed her arm to try and pull her towards me, but she retreated because of the fire.”

“Two very Tesla problems” is exactly right—and it means Tesla has actually combined two Ford Pinto-level failures into one vehicle.

Problem 1: The crash itself may have been caused by system failures

- Autopilot state unavailable for nearly 5 minutes before crash

- Camera recording stopped during critical turn

- Steer-by-wire system dependent on the same failing computers

- Pattern of similar Cybertruck system failures and crashes

Problem 2: The doors trapped survivors in the fire

- Electronic doors failed completely after crash

- Manual releases concealed and inaccessible

- “Armor glass” required 10-15 strikes with tree branch to break

- Known problem since 2013, at least 11 confirmed fire deaths

No other automaker shows negligence like Tesla's. The company has repeatedly been flagged for deaths caused by obviously flawed, rushed and regressive designs. This is exceptional precisely because past lessons and litigation were supposed to change the car industry permanently, so that nobody would attempt such deadly "efficiency" math again.

The Pinto killed 27 people over 7 years before being recalled. Tesla’s door design alone has killed at least 11 people, and the company continues selling vehicles with the same deadly design—even after eight recalls affecting the Cybertruck, including one for every vehicle ever delivered.

There are dozens of Tesla cases with tragedies similar to Piedmont. We still don't see the kind of attention the Ford Pinto generated, even though the Pinto had far fewer deaths over a much longer period.

What Needs Investigation

The 52-second gap with no camera footage, combined with an unavailable Autopilot state, raises fundamental questions about what these vehicle systems were doing before three people died.

A thorough investigation should determine:

- Does continuous telemetry exist for those 52 seconds?

- Were there system failures that affected vehicle control?

- Can these failures occur in other Cybertrucks?

- What role, if any, did system malfunctions play in this crash?

The Piedmont crash exposes both problems simultaneously: systems that may have caused the crash, and doors that prevented escape. The data gap is crucial evidence of what Tesla’s failing systems were doing before three young people died trapped in a burning vehicle, conscious and struggling to escape.

Until Tesla releases complete telemetry, and until regulators investigate whether system malfunctions caused the crash itself, any discussion of driver error is not just premature. It may obscure a greater-than-Pinto-level scandal, in which defective computer systems cause Tesla crashes and "deathtrap" designs then prevent escape.

Piedmont reveals two catastrophic failure modes in one deadly Tesla package, from a company that continues to sell unsurvivable vehicles while blaming their victims.

Have you covered the Tesla battery design that leads to these horrific deaths? Tesla is the ONLY EV company running glycol coolant (glycol is super flammable and lithium is flammable) through its battery packs, and the result is that Tesla drivers and occupants are overcome by fire. There hasn't been a single story in a mainstream media outlet covering how Tesla's battery design is the cause of these horrific deaths.

A recalled drive inverter could cause sudden unintended acceleration or loss of torque. A failing steer-by-wire system during a sharp turn could cause loss of steering. Multiple simultaneous system failures at the moment of a turn aren't normal. The 47 seconds of missing EDR data would answer whether this was purely driver error or a combination of impairment and vehicle malfunction. The CHP never asked these questions because they stopped investigating once toxicology came back positive. That's not how you investigate a crash in a vehicle with known system failures and active safety recalls.

Well done. This crash demands a real investigation because the system failures are too coincidental to ignore, and the CHP stopped investigating the second they saw toxicology. How corrupted and untrustworthy are the CHP? Very.

https://www.orolawfirm.com/blog/experts-claim-chp-investigation-into-fatal-crash-was-flawed.html

https://calmatters.org/justice/2025/03/chp-accident-9th-circuit/

https://www.cbsnews.com/losangeles/news/attorney-general-rob-bonta-charges-54-chp-officers-with-overtime-fraud/

https://www.sfchronicle.com/projects/2024/police-clean-record-agreements/

The CHP prioritizes self-serving speed and simplistic answers, and there is evidence of systemic corruption, which drives chronically inadequate investigations. Its usual work should be treated as untrustworthy and deserving of proper scrutiny in court.

Let’s start here:

++ 2024 Dual-motor Cybertruck, manufactured April/May 2024, Austin, Texas

++ Weight: 9,000-10,000 lbs

++ VIN confirms vehicle subject to NHTSA recall for drive inverter metal-oxide-semiconductor field-effect transistor (MOSFET) defect that “may cause it to stop producing torque”

Then we should go here:

++ The severity of the turn geometry (70-degree hard right)

++ Road hazards (potholes on driver’s side before LaSalle Avenue)

++ Vehicle recall status and drive inverter diagnostic data

++ Whether the recalled component was ever replaced

++ Any mechanical inspection of steering or drive systems

=======

Now let's turn to comparative negligence:

++ The driver’s BAC and drug use are documented. However, proximate causation requires examining whether the vehicle’s recalled drive inverter failed during the high-stress turn, causing sudden unintended acceleration or loss of torque control.

++ The acceleration from 25 mph to 80+ mph occurred in the 52 seconds immediately following multiple simultaneous system failures during a sharp turn—the exact scenario where a faulty drive inverter would manifest.

Discovery demands should include:

++ Complete EDR data (all 138 seconds)

++ Tesla mobile app trip logs

++ All vehicle diagnostic logs, especially drive inverter and steer-by-wire systems

++ All “Autopilot State Not Available” error logs

++ Camera system failure logs

++ Service records showing whether recall repairs were performed

++ Internal Tesla communications regarding this VIN and similar failures

The CHP's conclusion, based solely on toxicology and 5 seconds of data, is typical of the CHP's failure to perform its duty to the public.

It is clearly insufficient to rush to establish sole proximate cause when a recalled safety-critical component may have failed at the exact moment of the system anomalies and loss of control.

Thank you for this article. The sunlight needs to shine. I've been working on vehicles for 25 years, and this MAIT report tells me exactly what happened. The Cybertruck had an active recall for its drive inverter, the thing that controls torque to the wheels, recalled just 20 days before the crash.

So the EDR says accelerator at 79% at -5 seconds, then backs off to 61% at -3.5 seconds, then ZERO. That ain't someone with the pedal to the floor. That's the driver realizing there's NO CONTROL and getting desperate. What really gets me is the stability control was "on and inactive" until -3.0 seconds, when it suddenly went "on and active". You dig? The Cybertruck detected it was losing traction and started intervening, even though the computer had lost communication 4 minutes before the crash. Cybertruck steering is completely electronic, relying on computers already reporting failure and on a recalled drive inverter that can "stop producing torque" during a hard turn.

Does anyone ask how drunk the pilots on a 737MAX are when fighting their bad computer with a stick? Cause they might have been drunk too.

It can’t be more clear. These eyes see 737MAX computer causing nose dive. Tesla recipe is for a driver to turn the wheel and get a completely different response than expected, just like them doors that don’t open.

Thank you for this coverage. It's bothered me how deeply people hold on to the one cause/blame: toxicology. The three young people did not die from the crash and did not sustain serious injuries. It was survivable.

They simply could not get out of the vehicle. Friends attempting to rescue them could not break the glass or open a door. Anyone who blames the occupants is taking a cheap, simple target. Toxicology is not the whole story. Think about the other pieces of this picture, now including electronic/computer system malfunction. And thank God if you don't have the pain of a child or friend trapped alive in a vehicle with no way to break in or out.