A fascinating new paper (Algorithms That “Don’t See Color”: Comparing Biases in Lookalike and Special Ad Audiences) audits Facebook’s obfuscated security fix to its ad-targeting algorithms and finds that a giant vulnerability remains.

The conclusion (spoiler alert) is that Facebook’s ongoing failure to fix its platform security means it should be held accountable for an active role in unfair/harmful content distribution.

Facebook itself could also face legal scrutiny. In the U.S., Section 230 of the Communications Act of 1934 (as amended by the Communications Decency Act) provides broad legal immunity to Internet platforms acting as publishers of third-party content. This immunity was a central issue in the litigation resulting in the settlement analyzed above. Although Facebook argued in court that advertisers are “wholly responsible for deciding where, how, and when to publish their ads”, this paper makes clear that Facebook can play a significant, opaque role by creating biased Lookalike and Special Ad audiences. If a court found that the operation of these tools constituted a “material contribution” to illegal conduct, Facebook’s ad platform could lose its immunity.

Facebook’s record on this continues to puzzle me. They have run PR campaigns professing concern for general theories of safety, yet they always seem to show a pitiful disregard for the rights of their own users.

It reminds me of a million years ago, when I led security for Yahoo “Connected Life”: my team ran zero PR campaigns, yet took threats incredibly seriously. We regularly got requests from advertisers for access or identification that could harm user rights.

A canonical test, for example, was a global brand asking for everyone’s birthday for an advertising campaign. We trained day and night to handle this kind of request, and we would push back immediately to protect trust in the platform.

Our security team was committed to preserving rights and would open conversations with a “why”, sometimes getting to four or five more. As cheesy as it sounds, we even had t-shirts printed with “why?” on the sleeve to reinforce the importance of avoiding harms through simple sets of audit steps.

Why would an advertiser ask for a birthday? A global brand would admit they wanted ads to target a narrow age group. We consulted with legal and instead offered them a yes/no answer for a much broader age group (e.g. rather than asking for birthdays, they could ask whether a person is older than 13). The big brand accepted our rights-preserving counter-proposal, and we verified that they saw nothing more from our system than an anonymous, binary yes/no.
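The yes/no idea can be sketched as a data-minimizing query: the birthdate stays inside the platform and only a boolean crosses the boundary to the advertiser. This is a hypothetical illustration, not Yahoo’s actual code; the `_BIRTHDATES` store and the `age_on` and `is_at_least` names are made up for the example.

```python
from datetime import date

# Hypothetical internal store: birthdates never leave the platform.
_BIRTHDATES = {
    "user-a": date(2001, 6, 15),
    "user-b": date(2012, 1, 30),
}

def age_on(birthdate: date, today: date) -> int:
    """Completed years of age on `today`."""
    # Subtract one year if this year's birthday has not happened yet.
    before_birthday = (today.month, today.day) < (birthdate.month, birthdate.day)
    return today.year - birthdate.year - int(before_birthday)

def is_at_least(user_id: str, min_age: int, today: date) -> bool:
    """Answer an advertiser's yes/no age question without revealing the birthdate."""
    return age_on(_BIRTHDATES[user_id], today) >= min_age
```

For instance, `is_at_least("user-b", 13, date(2019, 12, 1))` returns `False` without exposing that user-b was born in 2012.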

This kind of fairness goal and confidentiality test procedure took constant effort. It wasn’t rocket science, yet it was seen as extremely important to protecting trust in our platform and the rights of our users.

Now fast-forward to Facebook’s infamous reputation for leaking data (allegedly including billions of data points per day fed to Russia), and to its white-male-dominated “tech-bro” culture of privilege, which over the last ten years has repeatedly failed user trust.

It seems amazing that the U.S. government hasn’t moved forward with its plan to put Facebook executives in jail. Here’s yet another example of Facebook leadership failing basic tests, as if they can’t figure security out themselves:

Earlier this year the company faced a massive civil rights lawsuit.

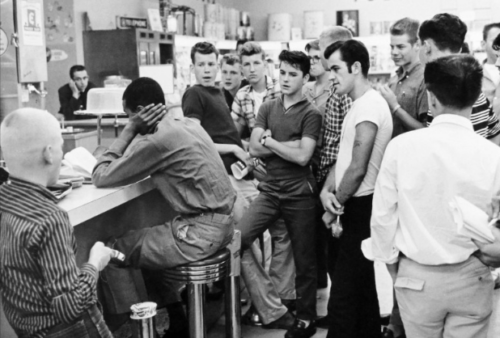

The suit came after a widely read ProPublica article in which the news organization created an ad targeting people interested in house-hunting. ProPublica used Facebook’s advertising tools to prevent the ad from being shown to Facebook users identified as having African American, Hispanic, and Asian ethnic affinities.

As a result of this lawsuit, Facebook begrudgingly agreed to patch its “Lookalike Audiences” tool and claimed the fix would make it unbiased.

The tool originally earned its name by taking a source audience from an advertiser and then targeting “lookalike” Facebook users. “Whites-only” apparently would have been a better name for how the tool was being used, according to the lawsuit examples.

The newly patched tool was claimed to remove the “whites-only” Facebook effect by blocking certain demographic features of a source audience from the algorithm’s input. Unfortunately, the tool was also renamed “Special Ad Audiences”, allegedly as an “inside” joke framing non-white or diverse audiences as “Special Ed” (an American term pejoratively used to call someone stupid).

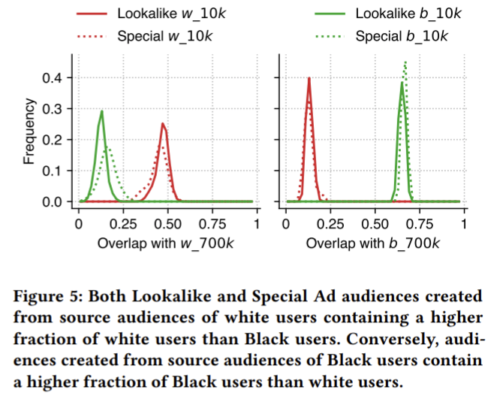

The audit of this patch, as described by the paper’s authors, was simple: submit a biased source audience (with known skews in politics, race, age, religion, etc.) to the Lookalike and Special Ad algorithms in parallel. The result of the audit is…drumroll please…Special Ad audiences retain the biased output of Lookalike, completely failing the civil rights test.

Security patch fail.

The paper illustrates in great detail how removing demographic features from the Special Ad algorithm’s input did not make its output audience differ from the Lookalike one. In other words, and most important of all, blocking demographic inputs does not stop Facebook’s algorithm from generating predictably biased output.
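A minimal toy sketch of why this happens, assuming a made-up population in which a `zip` region acts as a proxy for a protected `group` attribute. The `expand_audience` rule below (keep candidates that match the source audience’s most common value on each allowed feature) is a hypothetical stand-in, not Facebook’s actual Lookalike or Special Ad algorithm, and the numbers come from the toy, not from the paper.

```python
from collections import Counter

def make_population():
    """Hypothetical toy population: 'zip' is strongly correlated with 'group'."""
    users = []
    # 90% of group A live in region 0; 90% of group B live in region 1.
    for group, zip_region, count in [("A", 0, 450), ("A", 1, 50),
                                     ("B", 0, 50), ("B", 1, 450)]:
        users += [{"group": group, "zip": zip_region} for _ in range(count)]
    return users

def expand_audience(source, candidates, features):
    """Naive lookalike: keep candidates matching the source audience's
    most common value on every allowed feature."""
    modal = {f: Counter(u[f] for u in source).most_common(1)[0][0] for f in features}
    return [u for u in candidates if all(u[f] == modal[f] for f in features)]

def share(users, group):
    """Fraction of `users` belonging to `group`."""
    return sum(u["group"] == group for u in users) / len(users)

population = make_population()
source = population[:100]        # first 100 users: all group A, region 0
candidates = population[100:]

lookalike = expand_audience(source, candidates, ["group", "zip"])
special_ad = expand_audience(source, candidates, ["zip"])  # "group" input blocked

print(f"base rate of A among candidates: {share(candidates, 'A'):.3f}")
print(f"Lookalike share of A:            {share(lookalike, 'A'):.3f}")
print(f"Special Ad share of A:           {share(special_ad, 'A'):.3f}")
```

In this toy, blocking `group` only moves the expanded audience from 100% to 87.5% group A, against a 44.4% base rate among candidates, because `zip` still carries most of the same signal.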

As tempting as it is to say we’re working on the “garbage input, garbage output” problem, we shouldn’t be fooled by anyone claiming their algorithmic discrimination will magically be fixed by just adjusting inputs.