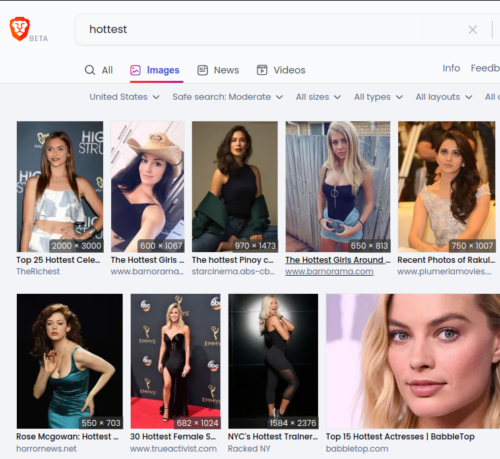

Here is a disappointing algorithm result from the Brave Browser. Type “hottest” into the search box and notice that the image results show white women with long straight hair.

If you run the same search on Bing, it’s pretty obvious where Brave is getting its results. It’s the exact same set of pictures.

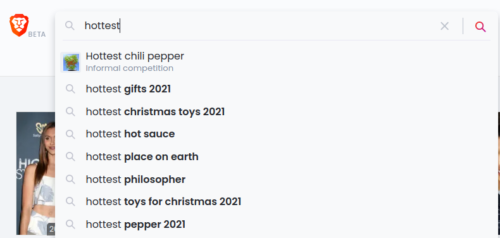
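If you want to go beyond eyeballing the two screenshots, one rough way to put a number on “the exact same set of pictures” is a Jaccard overlap between the two result lists. This is only a sketch: the `jaccard` helper and the image identifiers below are made-up placeholders, not real search results, and it assumes you have already collected an identifier (such as a source URL) for each image by hand.

```python
def jaccard(a, b):
    """Share of distinct items that appear in both collections (0.0 to 1.0)."""
    set_a, set_b = set(a), set(b)
    if not set_a and not set_b:
        return 1.0  # two empty result sets are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


# Hypothetical placeholder identifiers, not actual search results.
brave_images = ["img-a", "img-b", "img-c", "img-d"]
bing_images = ["img-a", "img-b", "img-c", "img-d"]
other_images = ["img-a", "img-x", "img-y", "img-z"]

print(jaccard(brave_images, bing_images))   # identical sets score 1.0
print(jaccard(brave_images, other_images))  # one shared image out of seven distinct
```

A score near 1.0 would support the claim that one engine is reusing the other’s results; a low score would suggest independent sourcing.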

Then go back to the “autocomplete” and notice that none of the suggestions mention women. In fact, the top suggestion is food.

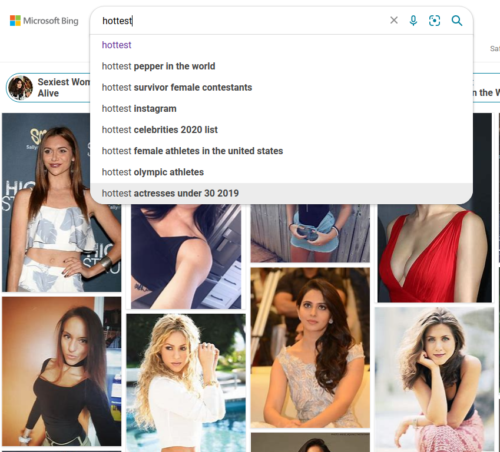

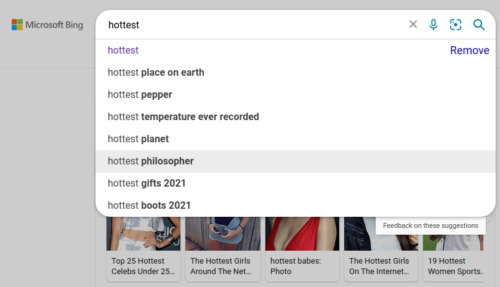

Now compare that with the Bing “autocomplete”.

That seems different, right? Except here’s the thing: compare the Bing “autocomplete” on the Images tab with the one on the All tab… and you can again see where Brave is getting its results.

So Bing clearly assumes that if you switch to the Images tab, you want white women in your results, whereas if you’re on the All tab, you’re looking for climate change results.

While Brave scrapes all this Bing data, it also modifies the results, which raises the question of who is accountable for them.

And I know you’re wondering about Google at this point, so let’s look there next.

“Hottest” shows an even more serious security vulnerability known as a bias hole:

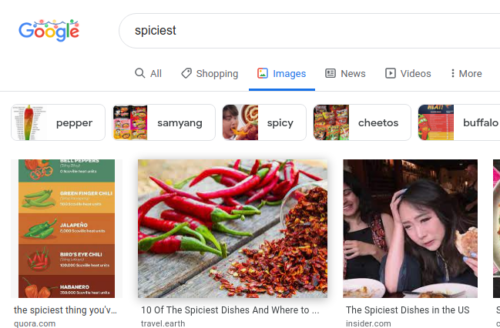

Bizarrely, I found that searching “spiciest” on Google instead brings up a menu of classifications.

Why doesn’t Google prompt you to select Cheetos when you search for “hottest”?