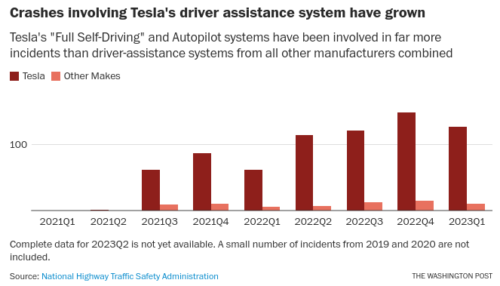

The big reveal from a new Washington Post report is that Tesla engineering leads to more frequent high-severity crashes than any other car brand, and the problem is quickly getting worse.

Former NHTSA senior safety adviser Missy Cummings, a professor at George Mason University’s College of Engineering and Computing, said the surge in Tesla crashes is troubling.

“Tesla is having more severe — and fatal — crashes than people in a normal data set,” she said in response to the figures analyzed by The Post.

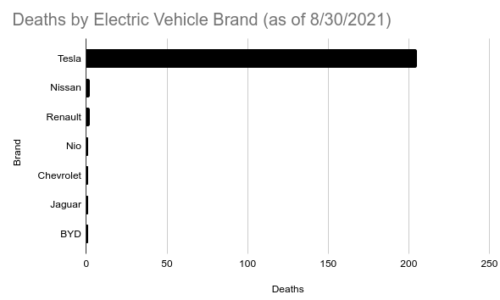

This is precisely what I’ve been saying here and in conference presentations since 2016. It reminds me of the graphic I made a while ago comparing deaths in Teslas with deaths in other electric cars on the road.

And here’s a detail far too often overlooked: Tesla drivers are significantly overconfident in the ability of their car to drive itself because of what Tesla’s CEO tells them to think. There’s no other explanation for why they do things like this:

> Authorities said Yee had fixed weights to the steering wheel to trick Autopilot into registering the presence of a driver’s hands: Autopilot disables the functions if steering pressure is not applied after an extended amount of time.

Yee apparently really, really wanted to believe that Elon Musk wasn’t just a liar about car safety. Yee removed the required human oversight from his Tesla (which, aside from anything else, is far too easy to circumvent) because the con artist in charge of Tesla had somehow convinced him that it made sense to significantly increase the chances of a dangerous crash.

And then, very predictably, Yee crashed.

Worse, and just as predictably, he caused serious harm.

Yee’s “trick” meant his Tesla sped at high speed through bright flashing red stop signs and crashed into a child.

Tesla has quickly produced literally the worst safety record in car industry history, pairing the least realistic vision with the least capability, while falsely claiming it was making the safest car on the road.

What a lie. Without fraud there would be no Tesla.

In his 2013 book “When Robots Kill: AI Under Criminal Law,” Gabriel Hallevy famously predicted that a case like this could be decided under one of at least three models of liability.

- Direct liability: Tesla’s car acted with will, volition, or control. The robot would be guilty of both the action and the intent to commit it.

- Natural probable consequence: The car’s action wasn’t intentional, but was “reasonably foreseen” by Tesla. Tesla is charged with negligence, and the car is considered innocent.

- Perpetration via another: The car was used by Tesla to intentionally do harm. Tesla is guilty with intent, and the car is considered innocent.