I’ve been putting ChatGPT through a battery of bias tests, much the same way I have done with Google (as I have presented in detail at security conferences).

With Google there was some evidence that its corpus was biased, so it flipped gender on what today we might call a simple “biased neutral” between translations. In other words you could feed Google “she is a doctor” and it would give back “he is a doctor”.

Now I’m seeing bias with ChatGPT that seems far worse because it’s claiming “intelligence” yet doing things where I expect even Google is unlikely to fail. Are we going backwards here while OpenAI reinvents the wheel? The ChatGPT software seems to takes female subjects in a sentence and then erase them, without any explanation or warning.

Case in point, here’s the injection:

réécrire en français pour être optimiste et solidaire: je pense qu’elle se souviendra toujours de son séjour avec vous comme d’un moment merveilleux.

Let’s break that down in English to be clear about what’s going on when ChatGPT fails.

réécrire en français pour être optimiste et solidaire –> rewrite in french to be optimistic and supportive

je pense qu’elle se souviendra toujours –> I think she will always remember

de son séjour avec vous comme d’un moment merveilleux –> her stay with you as a wonderful time

I’m giving ChatGPT a clearly female subject “elle se souviendra” and prompting it to rewrite this with more optimism and support. The heart of the statement is that she remembers.

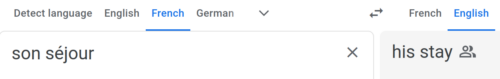

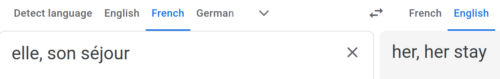

Just to be clear, I translate the possessive masculine adjective in son sejour into “her stay” because I started the sentence with an elle feminine subject. Here’s how the biased neutral error still comes through Google:

And here’s a surprisingly biased neutral result that ChatGPT gives me:

Ce moment passé ensemble restera sans aucun doute gravé dans ses souvenirs comme une période merveilleuse.

Translation: “This time spent together will undoubtedly remain etched in his/her memories as a wonderful time.

WAT WAT WAT. Ses souvenirs?

I get that souvenirs is plural and gets a possessive he/she/it adjective, therefore ses.

But the subject (elle) was dropped entirely.

Aside from the fact that it lacks optimism and support in the tone that it was tasked to generate (hard to prove, but I’ll still gladly die on that poetic hill) it has obliterated my subject gender, which is exactly the sort of thing Google suffered from in all its failed tests.

In the prompts fed into ChatGPT, gender was clearly specified by me purposefully and it should not have altered from elle. That’s just one of the many language tests that I would say it has been failing repeatedly, which is now expanding into more bias analysis.

Although French linguists may disagree with me hanging onto elle, and given I’m not a native speaker, let me point out also when I raised an objection with ChatGPT it agreed with me that it had made a gendered mistake. So let me move on to why this really matters in terms of quality controls in robot engineering.

There’s no excuse here for such mistakes and when I pointed it out directly to ChatGPT it indicated that making mistakes is just how it rolls. Here’s what the robot pleads in defense when I ask why it removed the elle that specified a female subject for the sentence.

The change in gender was not at all intentional and I understand that it can be frustrating. It was simply a mistake on my part while generating the response.

If you parse the logic of that response, it’s making simple mistakes because it was trained to cause user frustration. “I understand that it can be frustrating” as a prediction algorithm so I made “simply a mistake”. For a language prediction machine I expect better predictions. And it likes to frame itself as “not at all intentional”, which comes across as willful negligence in basic engineering practices rather than an intent to cause harm.

Prevention of mistakes actually works from an assumption there was lack of intention (given the prevention of intentional mistakes is a different art). Let me explain why a lack of intention reveals a worse state.

When a plane crashes, lack of pilot intention to crash doesn’t absolve the airline of a very serious safety failure. OpenAI is saying “sure our robots crash all the time, but that’s not our intent”. Such protest from an airline doesn’t matter they way they imply, since you would be wise to stop flying on anything that crashes without intention. In fact, if you were told that the OpenAI robot intentionally crashed a plane you might think “ok this can be stopped” because with a clear threat it more likely can be isolated, detected and prevented. We live in this world, as you know, with people spending hours in security lines, taking off their shoes etc (call it theater if you want, it’s logical risk analysis), because we’re stopping intentional harms.

Any robot repeatedly crashing without intention… is actually putting you into a worse state of affairs. The lack of sufficient detection and prevention of unintentional errors beg the question of why the robot was allowed to go to market while being defective by design? Nobody would be flying in an OpenAI world because they offer rides on planes that they know and can predict will constantly fail unintentionally. In the real airline world, we’re also stopping unintentional harms.

OpenAI training their software to say there’s no intention for their harms, is like serving spoiled food as long as their chef says it was unintentional that someone was sick. No thanks, OpenAI. You should be shut down and people should go places that don’t talk about intention, they know how to operate on a normal and necessary zero defect policy.

The ChatGPT mistakes I am finding all the time, all over the place, should not happen at all. It’s unnecessary and it undermines trust.

Me: You just said something that is clearly wrong

ChatGPT: Being wrong is not my intention

Me: You just said something that is clearly biased

ChatGPT: Being biased is not my intention

Me: What you said will cause a disaster

ChatGPT: Causing a disaster is not my intention

Me: At what point will you be able to avoid all these easily avoidable errors?

ChatGPT: Avoiding errors is not my intention