Here’s some nice reporting from Krebs on the security operations of xAI missing a secret key leak for months. They failed to respond to several external notices, including public ones, and only reacted after GitGuardian dug in and forced them to pay attention.

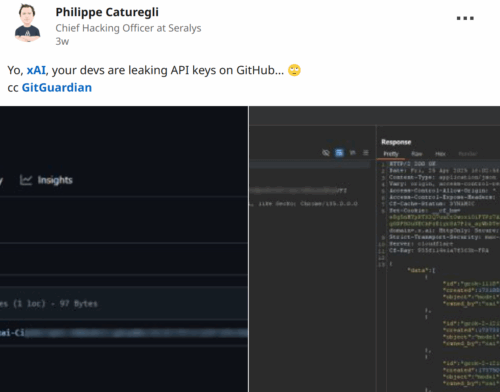

Philippe Caturegli, “chief hacking officer” at the security consultancy Seralys, was the first to publicize the leak of credentials for an x.ai application programming interface (API) exposed in the GitHub code repository of a technical staff member at xAI.

Caturegli’s post on LinkedIn [invoked] researchers at GitGuardian, a company that specializes in detecting and remediating exposed secrets in public and proprietary environments. GitGuardian’s systems constantly scan GitHub and other code repositories for exposed API keys, and fire off automated alerts to affected users.

GitGuardian’s Eric Fourrier told KrebsOnSecurity the exposed API key had access to several unreleased models of Grok, the AI chatbot developed by xAI. In total, GitGuardian found the key had access to at least 60 fine-tuned and private LLMs.

“The credentials can be used to access the X.ai API with the identity of the user,” GitGuardian wrote in an email explaining their findings to xAI. “The associated account not only has access to public Grok models (grok-2-1212, etc) but also to what appears to be unreleased (grok-2.5V), development (research-grok-2p5v-1018), and private models (tweet-rejector, grok-spacex-2024-11-04).”

Fourrier found GitGuardian had alerted the xAI employee about the exposed API key nearly two months ago — on March 2. But as of April 30, when GitGuardian directly alerted xAI’s security team to the exposure, the key was still valid and usable. xAI told GitGuardian to report the matter through its bug bounty program at HackerOne, but just a few hours later the repository containing the API key was removed from GitHub.

“It looks like some of these internal LLMs were fine-tuned on SpaceX data, and some were fine-tuned with Tesla data,” Fourrier said. “I definitely don’t think a Grok model that’s fine-tuned on SpaceX data is intended to be exposed publicly.”

If you read between the lines, there is no xAI “security team”. The bounty system, because a financial impact/risk characterization is inherent to its coin-based ethics model, likely raised the kind of alarm that nobody inside the company is qualified or competent to manage independently. GitGuardian remarked in their blog that they were kind enough to notify the leaking engineer directly.

On the 2nd of March 2025, this automated system discovered a new secret in a commit in a public repository. The commit contained an xAI API key in an .env file. This is a classical secret leak scenario, and GitGuardian sent an email to the commit author to alert him of the incident. The only thing that made this specific commit stand out from the mass was the committer’s email address: it was hosted under the x.ai domain.
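The “classical secret leak scenario” described here, a credential sitting in a committed .env file, can be sketched in a few lines of Python. This is only an illustration and not GitGuardian’s detection logic: the function name `find_env_secrets` and the list of suspicious variable-name keywords are assumptions made up for this example.

```python
import re

# Assumption: variable names containing these words probably hold secrets.
# Real scanners use far richer rules (value patterns, entropy, validity checks).
SUSPICIOUS_NAME = re.compile(r"(KEY|TOKEN|SECRET|PASSWORD)", re.IGNORECASE)


def find_env_secrets(env_text):
    """Return (line_number, variable_name) pairs for likely secrets in .env text."""
    findings = []
    for number, raw in enumerate(env_text.splitlines(), start=1):
        line = raw.strip()
        # Skip blanks, comments, and anything that is not NAME=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, value = line.split("=", 1)
        name = name.strip()
        if name.startswith("export "):
            name = name[len("export "):].strip()
        value = value.strip().strip("'\"")
        # Only flag variables that actually carry a value.
        if value and SUSPICIOUS_NAME.search(name):
            findings.append((number, name))
    return findings


# Hypothetical file contents; the key below is a placeholder, not a real credential.
sample = "# config\nDEBUG=true\nXAI_API_KEY=xai-not-a-real-key\nEMPTY_TOKEN=\n"
print(find_env_secrets(sample))
```

Running something like this as a pre-commit check is the cheap side of the problem. The expensive side is what this story is about: someone has to read the alert and revoke the key.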

The message “you published your secret key to a public repository and related systems may be totally compromised” sat for nearly two months without a response?!

Think about that company culture for a hot minute. GitGuardian actually sounded quite pissed off about the incompetence typical of Elon Musk’s antics.

We prepared a responsible disclosure e-mail with all the information required to quickly identify the leak source, affected keys and accounts, and start the remediation process. We then faced a first difficulty. xAI’s main website does not expose a security.txt file. As we presented in a blog post earlier this year, RFC 9116 defines a standard way of publicly providing a company’s security contact information, thanks to a security.txt. This is an industry standard that xAI is not following.

x.com exposes such a security.txt file. However, this one leads to a HackerOne program at https://hackerone.com/twitter, and the file has expired since January 2024, a year after Twitter’s acquisition by Elon Musk.

A security file that was copied from Twitter. With bad data. That was expired. Three strikes!
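For anyone who wants to check their own house, here is a rough Python sketch of the expiry test implied above. RFC 9116 requires an `Expires` field carrying a date and time, so a stale file is easy to detect. The helper names `parse_security_txt` and `is_expired` are invented for this example, and the sample text is a hypothetical reconstruction: the contact URL comes from the GitGuardian quote, while the exact expiry timestamp is an assumption.

```python
from datetime import datetime, timezone


def parse_security_txt(text):
    """Collect 'Name: value' fields; field names are treated case-insensitively."""
    fields = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        name, value = line.split(":", 1)
        fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


def is_expired(text, now=None):
    """Return True if the Expires field is missing or lies in the past."""
    now = now or datetime.now(timezone.utc)
    expires = parse_security_txt(text).get("expires")
    if not expires:
        return True  # a file without Expires is treated as unusable here
    stamp = expires[0]
    if stamp[-1] in "zZ":
        stamp = stamp[:-1] + "+00:00"  # older Pythons reject a trailing 'Z'
    when = datetime.fromisoformat(stamp)
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    return when < now


# Hypothetical file: the Expires value is an assumed date in January 2024.
sample = "Contact: https://hackerone.com/twitter\nExpires: 2024-01-01T00:00:00Z\n"
check_date = datetime(2025, 4, 30, tzinfo=timezone.utc)
print(is_expired(sample, now=check_date))
```

Treating a missing `Expires` field as expired is a design choice for this sketch; the point is that a file nobody maintains should not be trusted as a security contact.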

But wait, the story gets EVEN WORSE.

Finding this security contact took us a few unnecessary hours. We sent the disclosure email on April 30th at 11:00 AM EST. We received an answer from xAI 12 hours later… For a company the size of X, replacing an Incident Response Team (PSIRT or CSIRT) with a bug bounty platform should not be an option and should be considered bad practice. Again, we were not looking for a reward. …a few hours later, the leaky repository was removed from GitHub and the key revoked. This was done without any update sent to us, completely out of bounds of the disclosure process. This means we could have wasted more time filling a bug bounty report and waiting for updates, just to be notified that the issue was invalid, because it was already fixed.

GitGuardian clearly isn’t playing. They describe xAI as lacking even basic security practices and operating with sub-standard trust management capability.

The xAI security page still tells you to go to their trust page (https://trust.x.ai/), which really is just a redirect to Vanta’s “trust platform product” (not to be confused with the “cool balls” underwear company). That further indicates xAI may be only a shell company, with the kind of internal control weakness Sarbanes-Oxley used to be about.

Krebs goes on to discuss the implications for Elon Musk’s concept of a Department of Government (DOG), ironically labelled “efficiency”. Critics contend xAI is centralizing federal American data in preparation to sell itself (like Twitter) as a private deal to the Saudis and Russians.

In other words, the value of xAI being dangled in front of the Saudis may have just collapsed, because secrets were leaked at the same time Elon Musk was touring the desert cities trying to pitch top dollar for access to a chatbot he’s illegally feeding all the federal American data breached by his DOG.