The U.S. government included a notable detail in its new sanctions press release.

The Department of State is concurrently delivering to Congress a determination pursuant to the Chemical and Biological Weapons Control and Warfare Elimination Act of 1991 (CBW Act) regarding Russia’s use of the chemical weapon chloropicrin against Ukrainian troops.

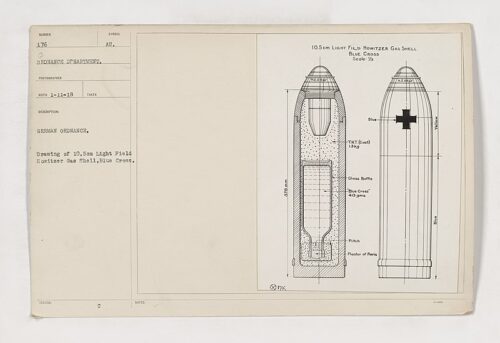

Germany is said to have been the first to use the “pesticide” chloropicrin (a tear gas) on the battlefield in WWI, even though such gas weapons had been outlawed by the 1899 Hague Convention. The gas was denoted by a blue cross on artillery warheads.

[It is the] particular horror of gas that is captured in Wilfred Owen’s poem Dulce et Decorum Est, arguably the most widely read description of the horrors of war in the English language.

In all my dreams, before my helpless sight,

He plunges at me, guttering, choking, drowning.

The Soviet Union was then known to have used chloropicrin in 1989 to control crowds in Georgia, so that soldiers could rush in and hack people to death with shovels.

Working with Georgian scientists, the delegation has identified that agent to be chloropicrin… Twenty persons died and 4,000 sought hospital treatment when Soviet troops, using gases and wielding shovels, broke up an all-night demonstration by 8,000 to 10,000 Georgians… the majority of the deaths were due to the use of “sharpened shovels” by the troops who charged into the demonstration “hacking people to death”…

Shovels? Did someone say Soviet anti-democratic shock troops swung bladed shovels as a psychological and physical weapon in the past? Fast forward to Russian leadership today:

Russian Soldiers Are Attacking Ukrainians With Shovels… “The lethality of the standard-issue MPL-50 entrenching tool is particularly mythologised in Russia,” the U.K. MoD said. Indeed, the MPL-50 has become an iconic weapon of the Spetsnaz, Russia’s special operations forces. In his 1987 book about the origins of Spetsnaz, former Soviet intelligence agent Viktor Suvorov begins by explaining how the soldiers made the shovel into a deadly weapon.

It raises the question of how toxic the tear gas itself is, when used illegally to immobilize military targets, relative to the bladed-shovel attack that follows like a WWI trench charge.

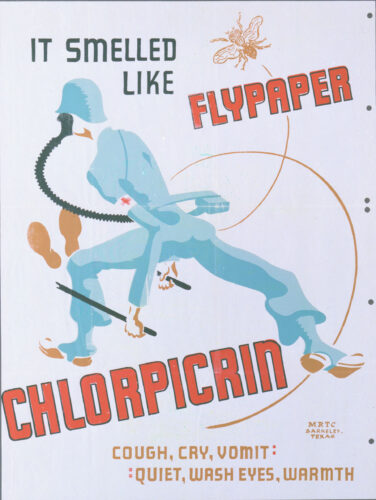

In humans, a concentration of 2.4 g/m³ can cause death from acute pulmonary oedema in one minute (Hanslian, 1921). A concentration as low as 1 ppm of Chloropicrin in air produces an intense smarting pain in the eyes, and the immediate reaction of any person is to leave the vicinity in haste. If exposure is continued, it may cause serious lung injury. […] As stated above, because of the tear gas effect, a person would be unable to remain in a dangerous concentration of chloropicrin for more than a few seconds. Great care should be taken to prevent unauthorized persons from approaching a fumigation site because the tear gas effect is so powerful that they may become temporarily blinded and panic-stricken, which, in turn, may lead to accidents.
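The two figures quoted above use different units (g/m³ for the lethal level, ppm for the irritant level), which makes them hard to compare at a glance. The rough sketch below puts them on the same scale. It rests on assumptions that are not in the quoted source: a molar mass for chloropicrin (CCl3NO2) of about 164.38 g/mol, and the ideal-gas molar volume of about 24.45 L/mol at 25 °C and 1 atm. The function names are made up for illustration, and the result is a back-of-the-envelope comparison, not a toxicological assessment.

```python
# Assumed constants (not taken from the quoted source).
MOLAR_MASS_G_PER_MOL = 164.38    # chloropicrin, CCl3NO2 (assumed value)
MOLAR_VOLUME_L_PER_MOL = 24.45   # ideal gas at 25 °C and 1 atm (assumed conditions)


def ppm_to_mg_per_m3(ppm: float) -> float:
    """Convert a gas concentration from ppm (by volume) to mg/m³."""
    return ppm * MOLAR_MASS_G_PER_MOL / MOLAR_VOLUME_L_PER_MOL


def mg_per_m3_to_ppm(mg_per_m3: float) -> float:
    """Convert a gas concentration from mg/m³ to ppm (by volume)."""
    return mg_per_m3 * MOLAR_VOLUME_L_PER_MOL / MOLAR_MASS_G_PER_MOL


# Figures from the quoted passage.
irritant_ppm = 1.0            # "as low as 1 ppm" causes intense eye pain
lethal_mg_per_m3 = 2.4 * 1000  # 2.4 g/m³ expressed in mg/m³

lethal_ppm = mg_per_m3_to_ppm(lethal_mg_per_m3)
irritant_mg_per_m3 = ppm_to_mg_per_m3(irritant_ppm)
ratio = lethal_ppm / irritant_ppm

print(f"Irritant level: {irritant_ppm:.0f} ppm is about {irritant_mg_per_m3:.1f} mg/m³")
print(f"Lethal level:   {lethal_mg_per_m3:.0f} mg/m³ is about {lethal_ppm:.0f} ppm")
print(f"Lethal / irritant ratio: about {ratio:.0f}x")
```

Under those assumptions, the one-minute lethal figure works out to a few hundred ppm, which is a few hundred times the concentration that already forces people to flee.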

Related:

- 1993 Russian-backed Chemical Weapons Convention outlawed use of tear gas “as a method of warfare”

- 2022 September 23, Russian K-51 grenades with chlorine dropped on Ukraine

- 2022 October 12, Russian K-51 grenade with CS tear gas dropped on Ukraine

- 2023 December 22, Russia drops “K-51 aerosol grenades filled with irritant CS gas (2-Chlorobenzalmalononitrile)”. (Also observed in November and “new type of special RG-VO gas grenades containing an unknown chemical substance was used by the Russians on Dec. 14”)

- 2024 March 11, Russia attacks 50 times a week with tear gas grenades dropped by UAVs