American history is full of censorship and denial, as perhaps best evidenced by the KKK controlling the Oklahoma narrative for so long.

The massacre took place over two days in 1921, a long-suppressed episode of racial violence that destroyed a community known as Black Wall Street and ended with as many as 300 Black people killed, thousands of Black residents forced into internment camps overseen by the National Guard and more than 1,200 homes, businesses, schools and churches destroyed.

It’s 2024, and American families are only now learning what happened more than 100 years ago to their relatives under extremist violent racism directed and spread by President Woodrow Wilson.

> The three [gunshot victims] are among 11 sets of remains exhumed during the latest excavation in Oaklawn Cemetery, state archaeologist Kary Stackelbeck said Friday.
>
> “Two of those gunshot victims display evidence of munitions from two different weapons,” Stackelbeck said. “The third individual who is a gunshot victim also displays evidence of burning.”

Evidence of burning. The U.S. government is clearly implicated in racist executions, concentration camps, mass graves, and even burning the bodies… a decade before Nazi Germany. Why do you think Hitler openly boasted that Henry Ford was his great inspiration, or named his personal train the “Amerika”? Nazi Germany looked to the violent, systemic American suppression of non-whites as a rough blueprint for genocide.

The Tulsa victims were decorated veterans and successful businesspeople, targeted in just one area of a large, coordinated, nationwide terror campaign that had lasted for years (e.g. “Red Summer” in nearly 30 cities) to strip American Blacks of any prosperity and block them from ever attaining it. That campaign was easy to hide under President Wilson and his toxic nativist “America First” slogan. For example, he had ordered all Blacks segregated and removed from government positions. He even sent federal troops to round up and murder Black farmers in Arkansas after they organized meetings, so that the very act of assembling to represent Black voices was systematically interrupted and criminalized.

> President Wilson replied… that he had made “no promises in particular to Negroes [sic], except to do them justice.” […] Many African American employees were downgraded and even fired. Employees who were downgraded were transferred [out of sight].

Perhaps most notable, for those wondering what happened after this KKK President finished his second term in 1921: Warren Harding, elected in a landslide, gave a 10-minute speech on June 6 at Lincoln University calling on Americans to educate themselves better and never forget the Tulsa massacre.

> Much is said about the problem of the races, but let me tell you that there is nothing that government can do which is akin to educational work. One of the great difficulties with popular government is that citizenship expects at the hands of government that which it should do for itself. No Government can wave a magical wand and take a race from bondage to citizenship in half a century. All that the Government can do is to afford an opportunity for good citizenship.
>
> The colored race, in order to come into its own, must do the great work itself, in preparing for that participation. Nothing will accomplish so much as educational preparation. I commend the valuable work which this institution is doing in that direction. It is a fine contrast to the unhappy and distressing spectacle that we saw the other day out in one of the Western States. God grant that, in the soberness, the fairness and the justice of this country, we shall never have another spectacle like it.

Spectacle, what spectacle? His point about a magical wand, and about such change taking time, is notable. Harding was calling out the Tulsa massacre in a Presidential address, as if it were front of mind for all Americans. Somehow this brief moment, the magic of that message, was soon lost within the momentum of Wilson’s KKK empire.

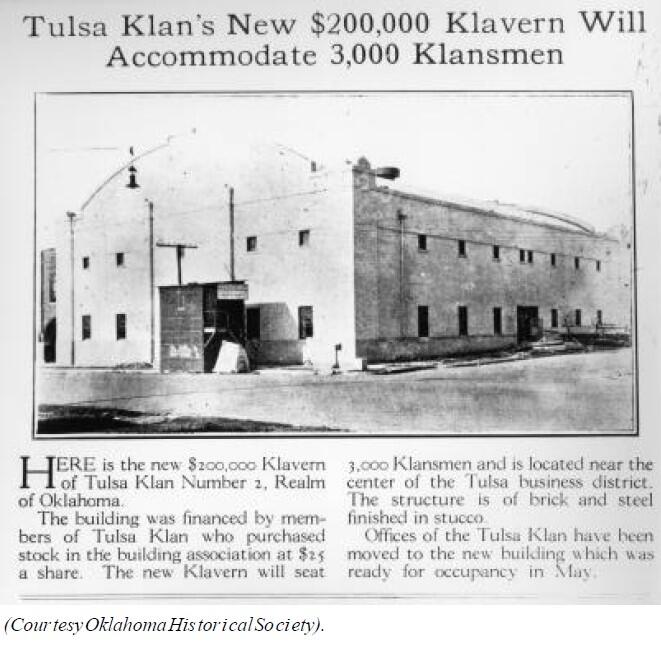

Tulsa officials immediately moved to completely erase the massacre from records, going so far as to build a new white supremacist meeting center (“Klavern”) directly on top of the firebombed Black businesses and homes.

It was a pattern of long-term and coordinated oppression that was being repeated throughout the country.

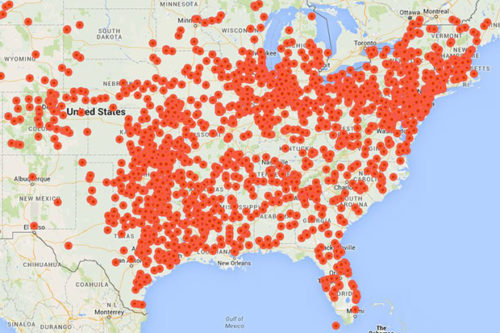

A horrible “contagion” spread across America after 1915, as thousands of KKK domestic terrorism and disinformation cells sprouted under President Wilson.

Around the same time that the national KKK “poison” network was busy rewriting American history to forget Tulsa, Harding gave a speech in Alabama in October that clarified his thoughts on ending the long problem of American institutionalized racism.

> I would say let the black man vote when he is fit to vote; prohibit the white man voting when he is unfit to vote. Especially would I appeal to the self-respect of the colored race. I would inculcate in it the wish to improve itself as a distinct race, with a heredity, a set of traditions, an array of aspirations all its own. […] I would accent that a black man can not be a white man, and that he does not need and should not aspire to be as much like a white man as possible in order to accomplish the best that is possible for him. He should seek to be, and he should be encouraged to be, the best possible black man, and not the best possible imitation of a white man.

Prohibit the white man voting? By August 1923 he was dead, with no real explanation.

> Mrs. Harding refused all entreaties to allow the doctors to conduct an autopsy and instead ordered that her husband be embalmed shortly after his death. Dr. Wilbur was especially frustrated by this refusal because the press and a bereaved public blamed the president’s doctors for incompetence, malpractice and even plots of poisoning the president.

Remember? Never forget? I dare you to find even one American who remembers President Harding’s first name or the year he took office, let alone his speeches on racism and the Tulsa massacre.