As an American, I find it extremely odd that Germans experience Russian attacks on their critical infrastructure yet push the news to report them as “left-wing” or “environmentalist” threats.

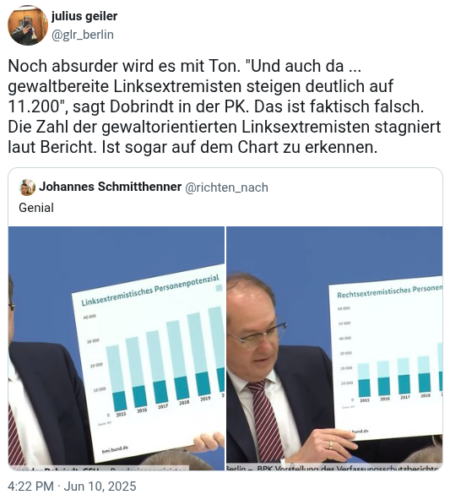

Look at June 2025, for example, when Germany’s Interior Minister Alexander Dobrindt presented a national security report. He holds up a big chart. He confidently announces that violence-oriented left-wing extremists are “rising significantly to 11,200.”

Got that? Rising. Way up. From what, you ask?

The chart shows the number rose from 11,200 all the way to… wait for it… 11,200.

Flat.

The man isn’t blind, yet he can’t “see.” He’s a government official delivering an official report on dangerous threats, and he is literally telling others not to see either. Meanwhile, his own report says right-wing violent crimes were up 47%. That’s a lot. Left-wing violent crimes were down 26.8%.

Facts.

Dobrindt stood in front of the world and coldly announced the exact opposite of what his own evidence showed.

It’s in his hands, he holds it up, and he can’t “see” the flat lines or do the math. Even worse, others go along with it, letting him carry on months later and acting as if the German security minister didn’t just totally shit the bed.

The Spider Spinnst

There are people who want to be challenged by data. They don’t mind the discomfort of the unknown. They have a method for updating, and a budget to spend on it.

There are people who want data to confirm what they find comfort in and already believe. They seek little or no challenge. They preprocess, and pinch every penny.

This is a nearly universal architecture, an epistemic posture that shows up as a preference for closed systems. The fear of the unknown. The need to force new threats into familiar boxes.

Dobrindt is a particularly bad politician. He is infamous in Germany as the man who chose copper over fiber, causing Germany to fall to the bottom of European broadband rankings. That was before he looked at 11,200 and 11,200 and declared a “significant rise.” And that was before he looked at Russian GRU footprints in the Berlin snow around infrastructure attacks and declared, “can’t be the Russians.”

The man’s pattern isn’t about error. It’s his function. Germany apparently still needs politicians to declare enemies stable and threats predictable, regardless of reality. Left-wing danger is Dobrindt’s cozy box. Russian state warfare is not.

In 1951, Solomon Asch held up a reference line next to three comparison lines, one of them an obvious match. When everyone else in the room confidently picked a wrong one, 75% of subjects went along at least once. Afterward, many admitted they knew the answer was wrong. They just didn’t want to be the one who saw differently.

Dobrindt holds up a chart. The line is flat. He says “rising significantly.” The Russians attack, and he says “left-wing.”

The Past Isn’t Past When It Hasn’t Passed

Every culture has this architecture problem. Germany’s version is particularly brittle because Germans believe they worked hard and fixed it. They often seem to believe they no longer need to look or listen.

Vergangenheitsbewältigung, the work of coming to terms with the past, addressed the content of a catastrophe. Apparently nobody dug into the structure: the cognitive machinery that locks onto false narratives and holds them against all evidence, because the narrative is entirely load-bearing.

Antisemitism was so dangerous in Germany because its lies became infrastructure. Once a false threat narrative serves social functions such as identity, belonging, explanation, and comfort, evidence just becomes noise. The preprocessing layer drops contradictory data before it reaches conscious evaluation.

Dobrindt holds up a chart that obviously contradicts his words. The system processes his authority along with his words, turning his own evidence into proof that the filter is working. He’s testing the machinery on a different target. If you react, you’re flagged as a troublemaker. If you see, you stand out as a non-believer, unable to trust, perhaps trying to stir up conflict.

It’s not just individual cognitive failure — it’s conformity architecture. Seeing becomes deviance. The preprocessing isn’t just internal; it’s policed.

The movie Stasikomödie comes to mind as an illustration.

Germans Refusing to “See”

Thomas Zimmer, January 21:

The idea that there are no protests in the US and no one is standing up to Trump is proving incredibly hard to kill over here in Germany. It’s become dogma, utterly detached from empirical reality. I wonder if the people who keep talking like that understand they’re perpetuating regime propaganda.

Two days later: Minnesota has a general strike. Hundreds of businesses close. The Lotus tells reporters it is staying open to feed protestors, out of respect and in solidarity. Tens of thousands of people brave subzero cold to march. One hundred clergy are arrested.

Harvard’s data shows more than 10,000 protests in 2025, a 133% increase over the prior year. Carnegie tracks protests openly, for any German to see plainly. The evidence is public, authoritative, and free.

Germans don’t “see” the numbers because “Americans aren’t protesting” is infrastructure for their own political comfort. It permits concern without any obligation to understand what comes next. Observation without solidarity. Moral comfort while watching from a position of self-erasure.

Curiosity would require real work: updating. Updating would require real action. Action would require taking on the risk of the unknown.

Germans are often stuck in their preprocessing layer. The unknown is an expense they aren’t prepared to bear.

Fiction Function: Comfort Architecture

Dobrindt’s party, the CSU, banks on stirring up hate about “left-wing” ghosts because the AfD, inheritors of the Nazi Party, has been eating its votes from the right. Fabricating a political danger while erasing right-wing data isn’t confusion. It’s cynical political strategy.

A government that acknowledges Russian attacks must respond to complex threats. A government blaming ghosts can campaign infinitely.

After the Berlin blackout, Dobrindt vowed to “hit back” at a specter of left-wing extremists. State violence was promised against the declining threat, while the rising threat was ignored, just as fascism is being ignored in America.

When American protestors are erased, the rise of America’s Hitler is so much more pleasant for Germans to swallow.

People who fear the unknown build systems to keep it out. Then they’re blind when it arrives wearing familiar clothes.