Here is an interesting look at the authenticity of provenance.

Whitworth argued that while the Kells monastery was founded in AD 807, it did not become important until the later ninth century. “This is too late for the Book of Kells to have been made at Kells. The Iona hypothesis, while worth testing, has no more intrinsic value than any other,” she said.

Dr. Victoria Whitworth is proposing the Book of Kells is misnamed and was actually created at Portmahomack in Pictish eastern Scotland, rather than at the traditionally accepted location of Iona.

We need to start calling it a Book of Portmahomack, in other words, or at least a Book of Picts.

How many other “Irish” and “English” achievements are actually Scottish, Pictish, Welsh, or Cornish masterpieces culturally laundered through the extractive imperial narrative machine?

Let’s dig deep here into the significance of a British empire crediting the Irish with the sophistication of the Scots. Irish monasticism gets celebrated as preserving Classical learning during the “Dark Ages,” while the Picts get dismissed as primitive. The suggestion that Picts actually created Kells completely flips the script on who were the “real” scholars and artists of early medieval Britain. It sheds new light on centuries of English/British historical narratives that harshly marginalized Celtic cultures and undermined Scottish intelligence and study.

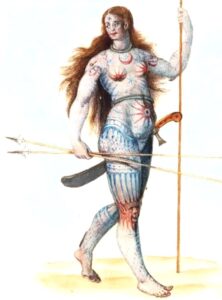

To be more precise, the Romans used scapegoating methods to assert unjust control. Like claims against the “woke” people today, they cooked up a “barbarian conspiracy” as early information warfare. The term “Picti” itself was essentially propaganda for Romans to dismiss an indigenous civilization as “heathens” and justify psychological campaigns of erasure.

Therefore, attributing a masterpiece back to the Picts removes the oppressive British narrative of “no evidence of civilization” and directly challenges modern assumptions about the cultural sophistication of medieval Scotland.

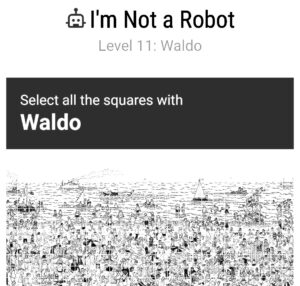

In related news, Neal.fun has posted a fascinating game and (spoiler alert) now I’m not sure I’m not a robot.

This game is as unsettling as the 1980s movie Blade Runner (based on a 1960s book about AI) because it forces you to question your own humanity through increasingly absurd tests, much like how imperial historians forced Celtic cultures to “prove” their sophistication through increasingly impossible standards while simultaneously stealing their best evidence. The Picts couldn’t prove their sophistication because their manuscripts had been stolen to be boldly flaunted as Irish.

In the movie, replicants are given false memories to make them compliant. Imperial Britain gave Celtic peoples false cultural memories – teaching them they were empty vessels while celebrating their stolen achievements as someone else’s genius.

The Picts were essentially turned into cultural replicants – people with no “real” past, no authentic achievements, just vague “mysterious” origins. It’s like saying “these people never created anything beautiful” while hanging their greatest masterpieces in their neighbor’s house for them to see from afar, to cynically undermine their sense of self.

Whitworth’s archaeological evidence from Portmahomack reveals a form of cultural warfare, using information suppression and strategic blindness in a “master” plan. The evidence she has delivered is sound: vellum workshop, stone carving, matching artistic styles. But it has taken so long because anyone acknowledging it would have undermined the imperial story used to destroy authentic Scottish arts and aptitude, and would have challenged false English narratives of brutality and barbarism. Her work has much wider implications.

“Irish” achievements probably Scottish:

- High crosses with distinctive knotwork patterns

- Illuminated manuscript techniques using local materials and motifs

- Advanced metalwork styles

- Stone circle Christian adaptations

- Scribal traditions and Latin scholarship methods

“English” innovations probably Celtic:

- Architectural elements in early English churches

- Legal concepts found in early Welsh and Irish law codes

- Agricultural techniques

- Poetic forms and literary devices

- Monastic organizational structures

The Book of Portmahomack being displayed as an Irish achievement while the Pictish history was erased is simply a cruel British psychological operation. Imagine the generational trauma for Scots: your ancestors create Europe’s greatest manuscript, yet you’re raised in British schools to believe your people are helpless savages deserving only constant suppression and punishment.

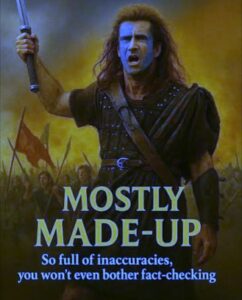

The ultimate insult was propagandized by Hollywood’s Braveheart. Mel Gibson, infamous for his antisemitism, turned cultural genocide into entertainment, depicting Scots as mad face-painted fools with sticks fighting against civilized English armed troops in polished boots.

The movie’s disgustingly pejorative and inaccurate portrayal, with the wrong time period, wrong clothing, and wrong everything, perfectly served the toxic narratives of Gibson’s upbringing: Scots as angry backward savages who needed punishment under cruel English “civilization” to cure them of creativity and innovation.

The same dehumanizing logic that the Empire used against the Picts continues today through people like Gibson, who perpetuate both antisemitic and anti-Celtic stereotypes.

Let me be clear: I am not talking about slow or accidental normalization. Gibson’s modern products rest upon centuries of excusing calculated extremism. Imperial Britain enacted highly explicit policies of oppression like the Highland Clearances, the Acts of Union, the Dress Act of 1746 banning Highland dress, and the Education Act of 1872 requiring English-only instruction. Don’t even get me started on the resource destruction of widespread deforestation during WWII. These weren’t just “accumulated biases” but harsh, abrupt, and deliberate actions by British elites with documented intent to eliminate Scottish cultural identity.

Therefore, Mel Gibson’s blue-faced buffoonery of his father’s liking was an intergenerational ideological transmission of hateful propaganda, cementing toxic lies about Scots as simplistic angry underdogs rather than acknowledging them as thoughtful and sophisticated artists (and analytic, wise military strategists) whose masterpieces were stolen.

It’s like Gibson falsely telling stories of the lost worshipers of Ares, when in fact they were successful adherents to Athena.

Meanwhile, back in the world of science, archaeologists are proving that the “primitive” Scots were in fact so far ahead of the English that they created Europe’s most sophisticated manuscript 500 years before William Wallace was even born.

Kudos to Dr. Whitworth.

And now this…

| Tactic | Period | Evidence |
|---|---|---|
| Othering | 297 CE onwards | Romans label northern tribes as “Picti” (painted barbarians); Eumenius describes “savage tribes and half-naked barbarians” |
| Achievement Theft | ~800 CE | Book of Kells/Portmahomack created by Picts, later attributed to Irish monasteries; vellum workshops and artistic techniques misattributed |
| Narrative Inversion | Medieval period onwards | Irish monasticism celebrated for preserving learning while Pictish scholarship erased; “barbarian conspiracy” becomes accepted history |
| Targeting Through Naming | 4th-10th centuries | “Picti” becomes catch-all term for any unconquered peoples; enables systematic cultural erasure and justifies continued oppression |