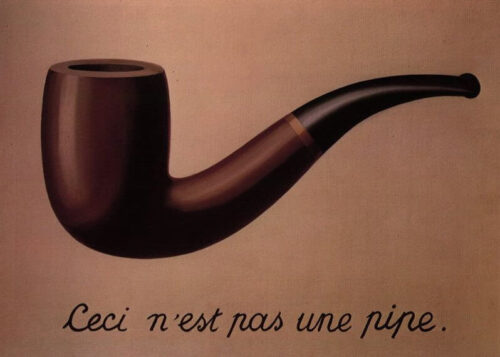

See what I did there? It worries me that too many people forget that almost nobody has really been able to tell what is true since… forever. I gave a great example of this in 2020: Abraham Lincoln.

A print of abolitionist U.S. President Abraham Lincoln was in fact a composite, a fake. Thomas Hicks had placed Lincoln’s unmistakable head on the distinguishable body of Andrew Jackson’s rabidly pro-slavery Vice President John Calhoun. A very intentionally political act.

The fakery went quietly along until Stefan Lorant, art director for London Picture Post magazine, noticed a very obvious key to unlock Hicks’s puzzle: Lincoln’s mole was on the wrong side of his face.

Here’s a story about Gary Marcus, a renowned AI expert, basically ignoring all the context in the world:

His immediate concern is that the internet will be flooded with false photos, videos and text, and the average person will “not be able to know what is true anymore.”

Will be flooded?

That is literally what the Internet has done since its origin. The printing press flooded the average person. The radio flooded the average person. It’s not like the Internet, in true reinvention of the wheel fashion, grew in popularity because it came with an inherent truth filter.

On the contrary, bad packets were always there for bad actors and, despite a huge amount of money invested for decades into “defenses”, many bad packets continue to flow.

Markets always are dangerous, deceptive places if left without systems of trust formed with morality (as philosopher David Hume explained rather clearly in the 1700s, perhaps too clearly given the church then chastised him for being a thinker/non-believer).

So where does our confidence and ability to move forward stem from? Starting a garden (pun not intended) requires a plan to assess and address, curate if you will, risky growth. We put speed limiters into use to ensure pivots, such as making a turn or changing lanes, won’t be terminal.

Historians, let alone philosophers and anthropologists, might even argue that having troubles with the truth has been the human condition across all communication methods for centuries if not longer. Does my “professor of the year” blog post from 2008 or the social construction of reality ring any bells?

Americans really should know exactly what to do, given their country has such a long history of regulating speech with lots of censorship: from the awful gag rule to preserve slavery, or banning Black Americans from viewing Birth of a Nation, all the way to the cleverly named WWII Office of Censorship.

What’s that? You’ve never heard of the U.S. Office of Censorship, or read its important report from 1945 saying Americans are far better off because of its work?

This is me unsurprised. Let me put it another way. Study history when you want to curate a better future, especially if you want to regulate AI.

Not only study history to understand the true source of troubles brewing now, growing worse by the day… but also to learn where and how to find truth in an ocean of lies generated by flagrant charlatans (e.g. Tesla wouldn’t exist without fraud, as I presented in 2016).

If more people studied history for more time we could worry less about the general public having skills in finding truth. Elon Musk probably would be in jail. Sadly the number of people getting history degrees has been in decline, while the number of people killed by a Tesla skyrockets. Already 19 dead from Elon Musk spreading flagrant untruths about AI. See the problem?

The average person doesn’t know what is true, but they know who they trust; a resulting power distribution is known by them almost instinctively. They follow some path of ascertaining truism through family, groups, associations, “celebrity” etc. that provide them a sense of safety even when truth is absent. And few (in America especially) are encouraged to steep themselves in the kinds of thinking that break away from easy, routine and minimal judgment contexts.

Just one example of historians at work is a new book about finding truth in the average person’s sea of lies, called Myth America. It was sent to me by very prominent historians talking about how little everyone really knows right now, exposing truths against some very popular American falsehoods.

This book is great.

Yet who will have the time and mindset to read it calmly and ponder the future deeply when they’re just trying to earn enough to feed their kids and cover rising medical bills to stay out of debtor prison?

Also books are old technology so they are read with heaps of skepticism. People start by wondering whether to trust the authors, the editors and so forth. AI, as with any disruptive technology in history, throws that askew and strains power dynamics (why do you think printing presses were burned by 1830s American cancel culture?).

People carry bias into their uncertainty, which predictably disarms certain forms of caution/resistance during a disruption caused by new technology. They want to believe in something, swimming towards a newly fabricated reality and grasping onto things that appear to float.

It is similar to Advance Fee Fraud working so well with email these days instead of paper letters. An attacker falsely promises great rewards later, a pitch about safety, if the target reader is willing (greedy) to believe in some immediate lies coming through their computer screen.

Thus the danger is not just in falsehoods, which surround us all the time our whole lives, but how old falsehoods get replaced with new falsehoods through a disruptive new medium of delivery: fear during rapid changes to the falsehoods believed.

What do you mean boys can’t wear pink, given it was a military tradition for decades? Who says girls aren’t good at computers when they literally invented programming and led the hardware and software teams where quality mattered most (e.g. Bletchley Park was over 60% women)?

This is best understood as a power shift process that invites radical, even abrupt, breaks depending on who tries to gain control over society, who can amass power and how!

Knowledge is powerful stuff; creation and curation of what people “know” is thus often political. How dare you prove the world is not flat, undermining the authority of those who told people untruths?

AI can very rapidly rotate on falsehoods like the world being flat, replacing known and stable ones with some new and very unstable, dangerous untruths. Much of this is like the stuff we should all study from way back in the late 1700s.

It’s exactly the kind of automated information explosion the world experienced during industrialization, leading people eventually into world wars. Here’s a falsehood that a lot of people believed as an example: fascism.

Old falsehoods during industrialization fell away (e.g. a strong man is a worker who doesn’t need breaks and healthcare) and were replaced with new falsehoods (e.g. a strong machine is a worker that doesn’t need strict quality control, careful human oversight and very narrow purpose).

The uncertainty of sudden changes in who or what to believe next (power) very clearly scares people, especially in environments unprepared to handle surges of discontent when falsehoods or even truths rotate.

Inability to address political discontent (whether based in things false or true) is why France experienced a violent, disruptive revolution yet Germany and England did not.

That’s why the more fundamental problem is how Americans can immediately develop methods for reaching a middle ground as a favored political position on technology, instead of only a left and right (divisive terms from the explosive French Revolution).

New falsehoods need new leadership through a deliberative and thoughtful process of change, handling the ever-present falsehoods people depend upon for a feeling of safety.

Without the U.S. political climate forming a strong alliance, something that can hold a middle ground, AI can easily accelerate polarization that historically presages a slide into hot war to handle transitions — political contests won by other means.

Right, Shakespeare?

The poet describes a relationship built on mutual deception that deceives neither party: the mistress claims constancy and the poet claims youth.

When my love swears that she is made of truth

I do believe her though I know she lies,

That she might think me some untutored youth,

Unlearnèd in the world’s false subtleties.

Thus vainly thinking that she thinks me young,

Although she knows my days are past the best,

Simply I credit her false-speaking tongue;

On both sides thus is simple truth suppressed.

But wherefore says she not she is unjust?

And wherefore say not I that I am old?

O, love’s best habit is in seeming trust,

And age in love loves not to have years told.

Therefore I lie with her and she with me,

And in our faults by lies we flattered be.