In a crash that appears to bear the hallmarks of driverless operation, a Tesla struck and critically injured a child who was riding a scooter in broad daylight on a residential street in Victorville.

…crash involving a child Saturday afternoon in the city of Victorville. The incident was reported at approximately 4:04 p.m. on March 29, 2025, near the intersection of Elliot Way and Caliente Way. According to initial scanner traffic, a young boy was reportedly riding a scooter in the middle of the street when he was struck by a blue Tesla. The child suffered a head injury and was said to be bleeding from the head.

Riding in the middle of a wide open empty street. No visual obstructions.

A human would have seen the child unless severely distracted or speeding (which are both symptoms of using Autopilot). In a quiet residential street where children play…

This tragedy reflects a systemic problem that many other countries successfully addressed decades ago.

STOP THE CHILD MURDER

This principle became central to Amsterdam’s traffic safety framework of the 1970s. It revolutionized quality of life and made cities highly attractive for raising families.

It recognizes that no automation advancement or corporate profit justifies easily preventable deaths—especially of children.

Proper responsibility is assigned to system designers, such as transit engineers and planners, rather than to the most vulnerable and powerless users.

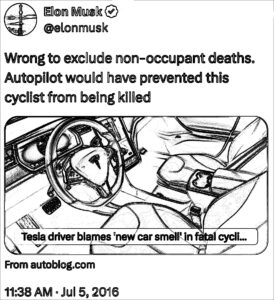

Investigators will need to determine whether the Tesla owner placed excessive trust in the company’s CEO, potentially believing his representations about the vehicle’s autonomous driving capabilities despite the system being just consumer-grade cameras with experimental AI. The investigation may reveal whether the owner followed only Elon Musk’s advice and disregarded all expert warnings and evidence about Tesla’s dangerous design failures.

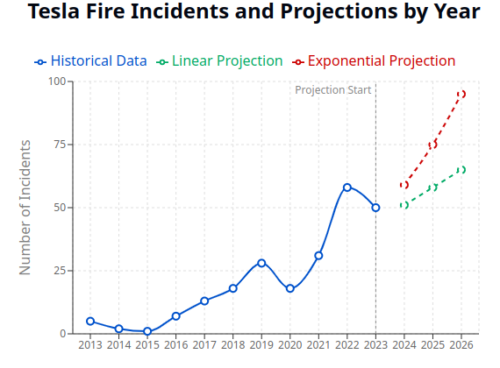

The CEO of Tesla has been collecting large payments and boosting sales based on driverless claims since 2016, promising complete autonomy that will never materialize. Tesla employees have testified that the company knew its timelines were unrealistic when the promises were made and that proofs were being faked, a pattern that resembles classic advance fee fraud schemes.

This week, both the UK and China imposed restrictions on Tesla’s autonomous driving features, citing failure to meet basic car safety standards. Regulatory actions around the world, let alone the massive collapse in sales, all point to Tesla’s self-driving technology falling far behind others in the automotive industry.

Related recent Tesla crashes that have killed or critically injured pedestrians:

- February 24, 2025

- February 21, 2025

- January 30, 2025

- January 28, 2025

- December 30, 2024

- December 15, 2024

- December 7, 2024

- November 7, 2024

- October 23, 2024

As the evidence mounts and regulatory bodies worldwide take action, the question remains: how many more children and pedestrians must be injured or killed by Teslas before we prioritize safety over technological promises? The lessons from systemic thinkers who protect lives show us a better path forward, one where responsibility lies with the designers, not vulnerable road users. It’s time for America to stop the child murder and demand that self-driving technology meet basic safety standards before being allowed on our residential streets. If the UK, EU and China can protect children from Tesla’s unsafe and overstated marketing, why can’t America?