Zoom engineering management practices have been exposed as far below industry standards of safety and product security. They have been doing a terrible job, and it is easy now to explain how and why. Just look at their encryption.

The Citizen Lab April 3rd, 2020 report broke the news on Zoom practicing deception with weak encryption and gave this top-level finding:

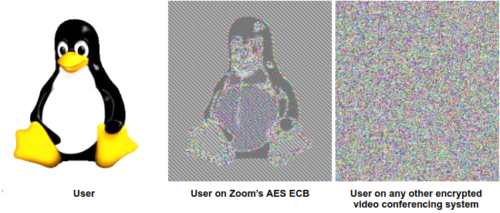

Zoom documentation claims that the app uses “AES-256” encryption for meetings where possible. However, we find that in each Zoom meeting, a single AES-128 key is used in ECB mode by all participants to encrypt and decrypt audio and video. The use of ECB mode is not recommended because patterns present in the plaintext are preserved during encryption.

It’s a long report with excellent details, definitely worth reading if you have the time. It even includes the famous electronic codebook (ECB) mode penguin, which illustrates why ECB is considered so broken for confidentiality that nobody should be using it.

I say famous here because anyone thinking about writing software to use AES surely knows of or has seen this image. It’s from an early 2000s education campaign meant to prevent ECB mode selection.

There’s even an ECB Penguin bot on Twitter that encrypts images with AES-128-ECB that you send it so you can quickly visualize how it fails.

The problem is simply that ECB encrypts every block independently, so identical plaintext blocks generate identical ciphertext blocks, which preserves recognizable patterns. It also means that once you decipher one block you know the contents of every identical block. So it is manifestly the wrong choice for large streams of data intended to be confidential.
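Here is a minimal sketch of that property, using only the Python standard library. Python ships no AES, so `toy_encrypt_block` is a stand-in I made up for illustration (a small Feistel network keyed with HMAC-SHA256); it is not AES and not safe for real use. The key, the all-zero IV, and the CBC-style chaining used for comparison are likewise my assumptions, not anything from the Citizen Lab report.

```python
import hashlib
import hmac

BLOCK = 16  # bytes; the same block size AES uses


def _round(key: bytes, i: int, half: bytes) -> bytes:
    # Keyed round function: HMAC-SHA256 truncated to half a block.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[: BLOCK // 2]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    # Four-round Feistel network: a deterministic keyed permutation on 16 bytes.
    # Hypothetical stand-in for AES, for illustration only.
    left, right = block[:8], block[8:]
    for i in range(4):
        mixed = bytes(a ^ b for a, b in zip(left, _round(key, i, right)))
        left, right = right, mixed
    return left + right


def ecb(key: bytes, plaintext: bytes) -> list:
    # ECB: every block is encrypted on its own, with no context.
    return [toy_encrypt_block(key, plaintext[i:i + BLOCK])
            for i in range(0, len(plaintext), BLOCK)]


def cbc(key: bytes, iv: bytes, plaintext: bytes) -> list:
    # CBC-style chaining: each block is XORed with the previous ciphertext first.
    out, prev = [], iv
    for i in range(0, len(plaintext), BLOCK):
        mixed = bytes(a ^ b for a, b in zip(plaintext[i:i + BLOCK], prev))
        prev = toy_encrypt_block(key, mixed)
        out.append(prev)
    return out


key = b"demo key, not a real secret"
plaintext = b"A" * BLOCK * 3        # three identical plaintext blocks
ecb_blocks = ecb(key, plaintext)
cbc_blocks = cbc(key, bytes(BLOCK), plaintext)  # fixed IV only to keep the demo repeatable

print(len(set(ecb_blocks)))  # 1: all three ECB blocks are identical
print(len(set(cbc_blocks)))  # 3: chaining hides the repetition
```

The point does not depend on the cipher: any deterministic block function run in ECB will repeat itself whenever the plaintext does.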

However, while Citizen Lab included the core image to illustrate this failure, they also left out a crucial third frame on the right that can drive home what industry norms are compared to Zoom’s unfortunate decision.

The main reason this Linux penguin image became famous in encryption circles is that it shows the huge weakness faster than any attempt to explain ECB cracking. It makes it obvious why Zoom really screwed up.

Now, just for fun, I’ll still try to explain here the old-fashioned way.

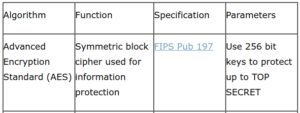

Advanced Encryption Standard (AES) is a U.S. National Institute of Standards and Technology (NIST) algorithm for encryption.

Here’s our confidential message that nobody should see:

zoom

Here’s our secret (passphrase/password) we will use to generate a key:

whywouldyouuseECB

Converting the first 16 bytes of the passphrase from ASCII to hex could simply give us a 128-bit block (16 bytes of ASCII become 32 hex characters; the trailing “B” does not fit):

77 68 79 77 6f 75 6c 64 79 6f 75 75 73 65 45 43
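For anyone who wants to check the conversion, here is a small sketch in Python. The variable names are mine; the passphrase is the one above, which is 17 characters long, so only its first 16 bytes fit in one 128-bit block.

```python
passphrase = "whywouldyouuseECB"
raw = passphrase.encode("ascii")

# A 128-bit block holds 16 bytes, so the 17th byte ("B") is left out.
first_block = raw[:16]

print(len(raw))              # 17
print(first_block.hex(" "))  # 77 68 79 77 6f 75 6c 64 79 6f 75 75 73 65 45 43
```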

Yet we want to generate a SHA256 hash from our passphrase to get ourselves a “strong” key (used here just as another example of poor decision risks, since PBKDF2 is a far safer choice to generate an AES key):

cbc406369f3d59ca1cc1115e726cac59d646f7fada1805db44dfc0a684b235c4
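To make the SHA256 versus PBKDF2 point concrete, here is a rough sketch using Python's `hashlib`. The salt size and iteration count are illustrative assumptions on my part, not recommendations from any source cited here, and the sketch makes no claim to reproduce the exact digest printed above, since that depends on how the passphrase was encoded.

```python
import hashlib
import os

passphrase = b"whywouldyouuseECB"

# A single fast hash: an attacker can repeat this cheaply for every guess.
fast_key = hashlib.sha256(passphrase).digest()

# PBKDF2: salted and iterated, so every guess costs the attacker far more work.
salt = os.urandom(16)   # assumed salt size for this sketch
iterations = 200_000    # assumed work factor for this sketch
slow_key = hashlib.pbkdf2_hmac("sha256", passphrase, salt, iterations)

print(len(fast_key) * 8, len(slow_key) * 8)  # 256 256
```

Both calls return 256 bits, so they look equally “strong” on paper. The difference is the cost of each guess against a weak passphrase.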

We then take our plaintext “zoom”, pad each character out to its own block, and use our key to generate the following ciphertext blocks (the AES block size is always 128 bits, or 32 hex characters, even when the keys are longer, as with AES-256 and its 256-bit keys):

a53d9e8a03c9627d2f0e1c88922b7f3f

ad850495b0fc5e2f0c7b0bf06fdf5aad

ad850495b0fc5e2f0c7b0bf06fdf5aad

b3a9589b68698d4718236c4bf3658412

I’ve kept the 128-bit blocks separate above. Look at the middle two: the repeated “o” in “zoom” shows up as two identical ciphertext blocks.

It’s not as obvious as the penguin, but you still kind of see the point, right?

If we string these blocks together, as if sending over a network, to the human eye it is deceptively random-looking, like this:

a53d9e8a03c9627d2f0e1c88922b7f3fad850495b0fc5e2f0c7b0bf06fdf5aadad850495b0fc5e2f0c7b0bf06fdf5aadb3a9589b68698d4718236c4bf3658412
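The human eye may miss it, but a few lines of code will not. This sketch needs no key at all: it cuts the stream above into 128-bit blocks and counts the repeats. The variable names are mine.

```python
from collections import Counter

# The ciphertext stream from above, written as its four 128-bit pieces.
stream = (
    "a53d9e8a03c9627d2f0e1c88922b7f3f"
    "ad850495b0fc5e2f0c7b0bf06fdf5aad"
    "ad850495b0fc5e2f0c7b0bf06fdf5aad"
    "b3a9589b68698d4718236c4bf3658412"
)

BLOCK_HEX = 32  # 16 bytes = 32 hex characters

# Slice the stream on block boundaries, as any eavesdropper could.
blocks = [stream[i:i + BLOCK_HEX] for i in range(0, len(stream), BLOCK_HEX)]

# Any block seen more than once reveals repeated plaintext.
repeats = {block: n for block, n in Counter(blocks).items() if n > 1}

print(len(blocks))  # 4
print(repeats)      # {'ad850495b0fc5e2f0c7b0bf06fdf5aad': 2}
```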

And back to the key, if we run decryption on our stream, we see our confidential content padded out in blocks uniformly sized:

z***************o***************o***************m

You also probably noticed at this point that if anyone grabs our string they can replay it. So using ECB also brings an obvious simple copy-and-paste risk.
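The copy-and-paste risk can be sketched the same way. Python's standard library has no AES, so the block cipher below is a made-up stand-in (a small Feistel network keyed with HMAC-SHA256), not AES and not safe for real use; the key is also just an assumption for the demo. What matters is the ECB behavior: blocks rearranged by someone without the key still decrypt cleanly.

```python
import hashlib
import hmac

BLOCK = 16   # bytes, matching the AES block size
ROUNDS = 4


def _f(key: bytes, i: int, half: bytes) -> bytes:
    # Keyed round function: HMAC-SHA256 truncated to half a block.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:8]


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt_block(key: bytes, block: bytes) -> bytes:
    # Hypothetical toy cipher standing in for AES.
    left, right = block[:8], block[8:]
    for i in range(ROUNDS):
        left, right = right, _xor(left, _f(key, i, right))
    return left + right


def decrypt_block(key: bytes, block: bytes) -> bytes:
    # Undo the Feistel rounds in reverse order.
    left, right = block[:8], block[8:]
    for i in reversed(range(ROUNDS)):
        left, right = _xor(right, _f(key, i, left)), left
    return left + right


key = b"demo key, not a real secret"

# One character per block, padded with '*', mirroring the layout shown above.
plain_blocks = [ch.encode("ascii") + b"*" * 15 for ch in "zoom"]
cipher_blocks = [encrypt_block(key, b) for b in plain_blocks]

# Someone without the key captures the blocks and reverses their order.
tampered = cipher_blocks[::-1]

# The receiver decrypts without any error; the message has simply changed.
recovered = b"".join(decrypt_block(key, b) for b in tampered)
print(recovered.decode("ascii").replace("*", ""))  # mooz
```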

A key takeaway, pun intended of course, is that Zoom used known weak and undesirable protection by choosing AES-128 ECB. That’s bad.

It is made worse because they told customers it was AES-256; they’re not disclosing their actual protection level and calling it something it’s not. That’s misleading customers who may run away when they hear AES-128 ECB (as they probably should).

Maybe run away is too strong, but I can tell you all the cloud providers treat AES-256 as a minimum target (I’ve spent decades eliminating weak cryptography from platforms, and nobody today wants to hear AES-128). At least two “academic” attacks have been published for AES-128: “key leak and retrieval in cache” and “finding the key four times faster”.

And the NSA published a revealing doc in 2015 saying AES-256 was their minimum guidance all the way up to top secret information.

On top of all that, the keys for Zoom were being generated in China even for users in America not communicating with anyone in China.

Insert conspiracy theory here: AES-128 was deemed unsafe by NSA in 2015 and ECB has been deemed unsafe for streams by everyone since forever… and then Zoom just oops “accidentally” generates AES-128 ECB keys on Chinese servers for American meetings? Uhhhh.

It’s all a huge mess and part of a larger mismanagement pattern, pun intended of course. Weak confidentiality protections are pervasive in Zoom engineering.

Here are some more examples to round out why I consider it pervasive mismanagement.

Zoom used no authentication for their “record to cloud” feature, so customers were unwittingly posting private videos onto a publicly accessible service with no password. Recordings were saved under a default naming scheme, and users stored them in insecure open Amazon S3 “buckets” where they could be easily discovered.

Do you know what encrypted video that needs no password is called? Decrypted.

If someone chose to add authentication to protect their recorded video, the Zoom cloud only allowed a 10 character password (protip: NIST recommends long passwords. 10 is short) and Zoom had no brute force protections for these short passwords.

They also used no randomness in their meeting ID, kept it a short number and left it exposed permanently on the user interface.
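A quick back-of-the-envelope sketch shows how small a short numeric ID space is compared with a random token. The 9-digit length is a hypothetical figure I picked for the arithmetic, and the token is just one way an unguessable identifier could be generated; neither is taken from Zoom's documentation.

```python
import math
import secrets

# Hypothetical: a short, purely numeric meeting ID (the digit count is an assumption).
digits = 9
numeric_bits = digits * math.log2(10)

# Sketch of an alternative: 16 bytes (128 bits) from the OS random source, URL-safe.
random_id = secrets.token_urlsafe(16)
random_bits = 16 * 8

print(f"{numeric_bits:.1f} bits vs {random_bits} bits")  # 29.9 bits vs 128 bits
print(len(random_id))                                    # 22 characters
```

Roughly 30 bits is about a billion possibilities, which is well within reach of a script that simply counts upward.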

Again all of this means that Zoom fundamentally didn’t put the basic work in to keep secrets safe; didn’t apply well-known industry-standard methods that are decades old. Or to put it another way, it doesn’t even matter that Zoom chose broken unsafe encryption routed through China and lied about it when they also basically defaulted to public access for the encrypted content!

It would be very nice, preferred really, if there were some way to say these engineering decisions were naive or even accidental.

However, there are now two major factors prohibiting that comfortable conclusion.

- The first is set in stone: the Zoom CEO was VP of engineering at WebEx after Cisco acquired it, and he later tried to publicly shame Cisco for using his “buggy code”. He was well aware of both safe coding practices and the damage bugs do to reputation, since he tried to use that as a weapon in direct competition with his former employer.

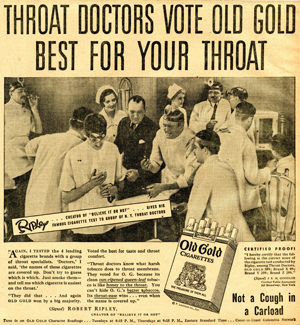

- The second is an entirely new development that validates why and how Zoom ended up where they are today: the CEO announced he will bring on board the ex-CSO of Facebook (now working at Stanford, arguably still for Facebook) to lead a group of CSOs. The last thing Zoom needs (or anyone for that matter) is twelve CSOs doing steak dinners and golf trips while chatting at the 30,000 foot level about being safe (basically a government lobby group). The CEO needs expert product security managers with their ear to the ground, digging through tickets and seeing detailed customer complaints, integrated deep into the engineering organization. Instead he has announced an appeal-to-authority fallacy (list of names and associations) with a very political agenda, just like when tobacco companies hired Stanford doctors to tell everyone smoking is now safe.

Here’s the garbage post that Zoom made about their future of security, which is little more than boasting about political circles, authority and accolades.

…Chief Security Officer of Facebook, where he led a team charged with understanding and mitigating information security risks for the company’s 2.5 billion users… a contributor to Harvard’s Defending Digital Democracy Project and an advisor to Stanford’s Cybersecurity Policy Program and UC Berkeley’s Center for Long-Term Cybersecurity. He is also a member of the Aspen Institute’s Cyber Security Task Force, the Bay Area CSO Council, and the Council on Foreign Relations. And, he serves on the advisory board to NATO’s Collective Cybersecurity Center of Excellence.

We are thrilled to have Alex on board. He is a fan of our platform…

None of that, not one sentence is a positive sign for customers. It’s no different, as I said above in point two, from tobacco companies laying out a PR campaign that they’ve brought on board a Stanford or Harvard doctor to be on a payroll to tell kids to smoke.

Even worse is that the CEO admits he can’t be advised on privacy or security by anyone below a C-level:

…we are establishing an Advisory Board that will include a subset of CISOs who will act as advisors to me personally. This group will enable me to be a more effective and thoughtful leader…

If that doesn’t say he doesn’t know how to manage security at all, I’m not sure what does. He’s neither announcing promotion of anyone inside the organization, nor is he announcing a hire of someone to lead engineering who he will entrust with day-to-day transformation… the PR is all about him improving his own skills and reputation and armoring against critics by buying a herd to hide inside.

This is not about patching or a quick fix. It really is about organizational culture and management theory. Who would choose ECB mode for encryption, would so poorly manage the weak secrets making bad encryption worse, and after all that… be thrilled to bring on board the least successful CSO in history? Their new security advisor infamously pre-announced big projects (e.g. encryption at Yahoo in 2014) that went absolutely nowhere (never even launched a prototype), is accused of facilitating atrocities and is facing government prosecution for crimes, and demonstrably failed to protect customers from massive harms.

Zoom just hired the ECB of CSOs, so I’m just wondering how and when everyone will see that fact as clearly as with the penguin image. Perhaps it might look something like this.

Update April 12: Jitsi has posted a nice blog entry called “This is what end-to-end encryption should look like!” These guys really get it, so if you ask me for better solutions, they’re giving a great example. Superb transparency, low key modest approach. Don’t be surprised instead when Zoom rolls out some basic config change like AES-256-GCM by default and wants to throw itself a ticker-tape parade for mission accomplished. Again, the issue isn’t a single flaw or a config, it’s the culture.

Update April 13: a third-party (cyber-itl.org) security assessment of the Zoom Linux client finds many serious and fundamental flaws, once again showing how terrible general Zoom engineering management practices have been, willfully violating industry standards of safety and product security.

It lacks so many base security mitigations it would not be allowed as a target in many Capture The Flag contests. Linux Zoom would be considered too easy to exploit! Perhaps Zoom using a 5 year out of date development environment helps (2015). It’s not hard to find vulnerable coding in the product either. There are plenty of secure-coding-101 flaws here.

These are really rube, 101-level flaws that any reasonable engineering management organization would have done something about years ago. It is easy to predict how this form of negligence turns out, and the CEO attacked his former employer for using his buggy code, so ask: why did Zoom believe they could get away with it?

Update November 11: The FTC calls out Zoom for being a fraud, yet neither penalizes them nor compensates their victims.

…’increased users risk of remote video surveillance by strangers and remained on users’ computers even after they deleted the Zoom app, and would automatically reinstall the Zoom app—without any user action—in certain circumstances,’ the FTC said. The FTC alleged that Zoom’s deployment of the software without adequate notice or user consent violated US law banning unfair and deceptive business practices.

And they basically lied for years and years about security.

…Zoom claimed it offers end-to-end encryption in its June 2016 and July 2017 HIPAA compliance guides… also claimed it offered end-to-end encryption in a January 2019 white paper, in an April 2017 blog post, and in direct responses to inquiries from customers and potential customers… In fact, Zoom did not provide end-to-end encryption for any Zoom Meeting…

Since June 2016 Zoom lied repeatedly about its encryption practices in order to juice sales in American healthcare while diverting traffic through China. Think hard about that.

Zoom implemented the old Skype open-source, freeware SILK codec. It tends to push a lot of noise in calls that creates a form of randomness in the ECB blocks. I’m not excusing their stupid decision, just saying maybe they thought they could use a broken encryption design because it would take a nation-state *cough* *cough* to really take advantage of it.

- 2007 research (https://web.archive.org/web/20130919042214/http://cs.unc.edu/~fabian/papers/voip-vbr.pdf): identified which language was being spoken; subsequent work identified speakers and known phrases

- 2011 research (https://web.archive.org/web/20110626053354/https://www.cs.unc.edu/~amw/resources/hooktonfoniks.pdf): produced partial transcripts “without a priori knowledge of target phrases”