Why a Think Tank Report on Deception Misses the Point—And Makes States More Vulnerable

I was excited to watch yesterday’s presentation of a recent New America report, “The Future of Deception in War”. Throughout the talk, however, it seemed that a certain perspective (the “operator”, the quiet professional) was missing. I even asked what the presenters thought about Soviet use of disinformation so excessive that it hurt the Soviets themselves.

They didn’t answer the question, but I asked because cultural corruption is a serious hazard that has to be accounted for, like radiation when dealing with nuclear weapons. When deception is unregulated and institutionalized, it dangerously corrodes internal culture. Soviet officers learned that career advancement came through convincing lies rather than operational competence. This created military leadership that was excellent at bureaucratic maneuvering but terrible at actual warfare, as evidenced in Afghanistan and later Chechnya. Worse, their over-compartmentalization meant different parts of their centralized government couldn’t coordinate, which is the opposite of effective deception.

This isn’t the first time I’ve seen academic approaches miss the operational realities of information warfare. As I wrote in 2019 about the CIA’s origins, effective information operations have always required understanding that “America cannot afford to resume its prewar indifference” to the dangerous handling of deception.

What’s invisible, cumulative, and potentially catastrophic if not carefully managed by experts with hands-on experience? Deception.

Then I read the report and, with much disappointment, found that it exemplifies everything wrong with how military institutions approach deception. Like French generals building elaborate fortifications while German tanks rolled through the Ardennes, the analysis comes across as a set of theoretical frameworks for a kind of warfare that no longer exists.

As much as Mr. Singer loves to pull historical references, even citing the Bible and Mossad in the same breath, he seems to have completely missed Toffler, let alone Heraclitus: the river he wants to paint us a picture of was already gone the moment he took out his brush.

The report’s fundamental flaw isn’t in its details—it’s in treating deception as a problem that can be solved through systematic analysis rather than understood through practice. This is dangerous because it creates the illusion of preparation while actually making us more vulnerable.

Academia is a Hallucination

The authors approach deception the way engineers design bridges: detailed planning, formal integration processes, measurable outcomes, systematic rollout procedures. They propose “dedicated doctrine,” “standardized approaches,” and “strategic deception staffs.” This is waterfall methodology applied to a domain that requires agile thinking.

Real deception practitioners—poker players, con artists, intelligence officers who’ve operated in denied areas—know something the report authors don’t: deception dies the moment you systematize it.

Every successful military deception in history shared common characteristics the report ignores:

- They were improvisational responses to immediate opportunities

- They exploited enemy assumptions rather than following friendly doctrine

- They succeeded because they violated expectations, including their own side’s expectations

- They were abandoned the moment they stopped working

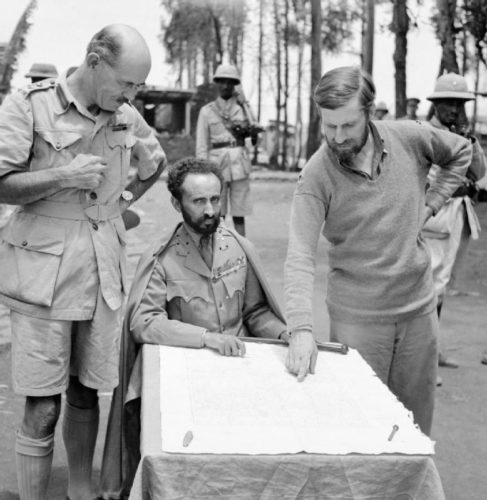

Consider four deceptions separated by nuance yet united by genius: the Haversack Ruse at Beersheba (1917), Ethiopia Mission 101 (1940), Operation Bertram (1942) and Operation Mincemeat (1943). Each succeeded through what I warned over a decade ago is a Big Data vulnerability to “seed set theory”: an unshakeable core of truth, dropped by a relative influencer, spreading with improvised lies around it.

The haversack was covered in real (horse) blood with convincing photos, military maps and orders. Mission 101 took a proven WWI artillery fuse design and used 20,000 irregular African troops with a bottle of the finest whiskey to rout 300,000 heavily armed and armored fascists. Mincemeat was an actual corpse with meticulously authentic personal effects.

None of these could have emerged from systematic planning processes. Each required someone to intuitively grasp what truth would be most convincing to a particular enemy in a unique moment, then use human creativity to place the right seed into the right soil, in a way that no doctrine could capture.

It’s no coincidence that Orde Wingate, founder of Commando doctrine, considered Lawrence of Arabia a flamboyant, self-important bureaucrat. One of them delivered an operations guideline that we use to this day around the world and in every conflict; the other created Saudi Arabia.

The Wealthy Bureaucrat Trap

The report’s emphasis on “integrating deception planning into normal tactical planning processes” reveals profound misunderstanding. You cannot bureaucratize deception any more than you can bureaucratize jazz improvisation. The qualities that make effective military officers—following doctrine, systematic thinking, institutional loyalty—are precisely opposite to the qualities that make effective deceivers.

Consider the report’s proposed “principles for military deception”:

- “Ensure approaches are credible, verifiable, executable, and measurable”

- “Make security a priority” with “strictest need-to-know criteria”

- “Integrate planning and control”

This is exactly how NOT to do deception. Real deception is:

- Incredible until it suddenly makes perfect sense

- Unverifiable by design

- Unmeasurable in traditional metrics

- Shared widely enough to seem authentic

- Chaotic and loosely coordinated

Tech Silver Bullets are for Mythological Enemies

The report’s fascination with AI-powered deception systems reveals another blind spot. Complex technological solutions create single points of catastrophic failure. When your sophisticated deepfake system gets compromised, your entire deception capability dies. When your simple human lies get exposed, you adapt and try different simple human lies.

Historical successful deceptions—from D-Day’s Operation Fortitude to Midway’s intelligence breakthrough—succeeded through human insight, not technological sophistication. They worked because someone understood their enemy’s psychology well enough to feed them convincing lies.

The Meta-Deception Problem

Also worth noting is how the authors seem unaware of, or at least make no mention of, the risk that they might be targets of deception themselves. They cite Ukrainian and Russian examples without considering, or caveating, that some of those “successful” deceptions might actually be deceptions aimed at Western analysts like them.

Publishing detailed sharp analysis of deception techniques demonstrates the authors don’t fully appreciate their messy and fuzzy subject. Real practitioners know that explaining your methods kills them. This report essentially advocates for the kind of capabilities that its own existence undermines. Think about that for a minute.

Alternative Agility

What would effective military deception actually look like? Take lessons from domains that really understand deception:

- Stay Always Hot: Maintain multiple small deception operations continuously rather than launching elaborate schemes. Like DevOps systems, deception should be running constantly, not activated for special occasions.

- Fail Fast: Better to have small lies exposed quickly than catastrophic ones discovered later. Build feedback loops that tell you immediately when deceptions stop working.

- Test in Production: You cannot really test deception except against actual adversaries. Wargames and simulations create false confidence.

- Embrace Uncertainty: The goal isn’t perfect deception—it’s maintaining operational effectiveness while operating in environments where truth and falsehood become indistinguishable.

- Microservices Over Monoliths: Distributed, loosely-coupled deception efforts are more resilient than grand unified schemes that fail catastrophically.

Tea Leaves from Ukraine

The report celebrates Ukraine’s “rapid adaptation cycles” in deception, but misses the deeper lesson. Ukrainian success comes not from sophisticated planning but from cultural comfort with improvisation and institutional tolerance for failure.

Some of the best jazz and rock clubs of the Cold War were in musty basements of Prague, fundamentally undermining faith in Soviet controls. The military occupation of West Berlin during the Cold War removed all curfews just to force the kind of “bebop” freedom of thought believed to destroy Soviet narratives.

Ukrainian tank commanders don’t follow deception doctrine—they lie constantly, creatively, and without asking permission. When lies stop working, they try different lies. This isn’t systematizable because it depends on human judgment operating faster than institutional processes.

Important Strategic Warning

China and Russia aren’t beating us at deception because they have better doctrine or technology. They’re succeeding because their institutions are culturally comfortable with dishonesty and operationally comfortable with uncertainty.

Western military institutions trying to compete through systematic approaches to deception are like French generals in 1940—building elaborate defenses against the last war while their enemies drive around them.

Country Boy Cow Path Techniques

Instead of trying to bureaucratize deception, military institutions should focus on what actually matters:

- Cultural Adaptation: Create institutional tolerance for failure, improvisation, and calculated dishonesty. This requires changing personnel systems that punish risk-taking.

- Human Networks: Invest in educating people to be curious about foreign cultures and to understand them well enough to craft believable lies, rather than in technologies that automate deception.

- Rapid Feedback Systems: Build capabilities that tell you immediately when your deceptions are working or failing, not elaborate planning systems.

- Operational Security Through Simplicity: Use simple, hard-to-detect deceptions rather than sophisticated, fragile technological solutions.

- Embrace the Unknown: Accept that effective deception cannot be measured, systematized, or fully controlled. This is a feature, not a bug.

A Newer America

The New America report represents the militarization of management consulting—sophisticated-sounding solutions that miss fundamental realities. By treating deception as an engineering problem rather than a human art, it creates dangerous overconfidence while actually making us more vulnerable.

Real military advantage comes not from better deception doctrine but from institutional agility that lets you operate effectively when everyone is lying to everyone else—including themselves.

The authors end with: “We should not deceive ourselves into thinking that change is not needed.” They’re right about change being needed. They’re wrong about what kind of change.

Instead of building a Maginot Line of deception doctrine (the report’s recommendations are dangerously counterproductive), we need the institutional equivalent of Orde Wingate’s Chindits: fast, flexible, and comfortable with uncertainty. Because in a world where everyone can deceive, the advantage goes to whoever can adapt fastest when their lies inevitably fail.

The fact that they published a detailed report giving the McDonald’s guide to McDeception shows they completely missed the point. Might as well hand it directly to the Russians and Chinese and ask if they want fries with that. I’ve seen what happens when operators try to follow doctrine written by people who’ve never had to actually deceive anyone with their life on the line. You get rigid, predictable bullshit that the enemy sees coming from a mile away. Shoot and scoot turns into sitting duck with a missile up your ass in hover mode. Real life. Real lives. Dusted.