My head hurt when I read a new “insider” article on detecting and preventing hate on big data platforms. It’s awful on many, many levels.

It’s like seeing a story on airplane safety in hostile territory where former staff reveal they couldn’t agree politically on how to measure gravity in a way that appeased a government telling them that up is down. Or hearing that a crash in 2018 made safety staff aware of flying risks — as if nothing ever crashed before a year or two ago.

Really? You just figured out domestic terrorism is a huge problem? That says a lot, a LOT. The Civil War was fought after decades of terrorism, and the terrorism continued after the war ended; there’s a long, rich history of multi-faceted orgs conspiring and collaborating to undermine democracy. And that’s just in America, with its documented history of violent transfer of power.

I’m not going to give away any insider secrets when I say this new article provides some shockingly awful admissions of guilt from tech companies that facilitated mass harms from hate groups and allowed the problem to get far worse (while claiming success in making it better).

Here’s a quick sample:

…companies defined hate in limited ways. Facebook, Twitter and YouTube have all introduced hate speech policies that generally prohibit direct attacks on the basis of specific categories like race or sexual orientation. But what to do with a new conspiracy theory like QAnon that hinges on some imagined belief in a cabal of Satan-worshipping Democratic pedophiles? Or a group of self-proclaimed “Western chauvinists” like the Proud Boys cloaking themselves in the illusion that white pride doesn’t necessarily require racial animus? Or the #StoptheSteal groups, which were based on a lie, propagated by the former president of the United States, that the election had been stolen? These movements were shot through with hate and violence, but initially, they didn’t fit neatly into any of the companies’ definitions. And those companies, operating in a fraught political environment, were in turn slow to admit, at least publicly, that their definitions needed to change.

“Proud Boys cloaking themselves” is about as sensible a phrase as loud boys silencing themselves. Everyone knows the Proud Boys, like other hate groups, very purposefully use signaling to identify themselves, right? (Hint: “men who refuse to apologize,” with frequent use of prominent Proud Boys logos and the colors black and yellow.)

Both the ADL and SPLC have databases easily referenced for the latest on signal decoding, not to mention the many posts I’ve written here…

Defining hate in limited ways (reduced monitoring) was meant to benefit whom, exactly, and why was that the starting point anyway? Did any utility ever start with “defined pollutants in limited ways” for the benefit of people drinking water? Here’s a hint from Michigan and a very good way to look at the benefit of an appropriately wide definition of harms:

This case has nothing whatsoever to do with partisanship. It has to do with human decency, resurrecting the complete abandonment of the people of Flint and finally, finally holding people accountable for their alleged unspeakable atrocities…

It should not be seen as a political act to stop extremist groups (despite their false claims to be political actors; in reality they aim to destroy politics).

Who really advocated starting with the most limited definition of hate, a definition ostensibly ignorant of the basic science and history of harm prevention (ounce of prevention, pound of cure, etc.)?

In other words, “movements were shot through with hate and violence,” yet companies say they were stuck worrying about “what to do” with rising hate and violence on their watch, as if shutting it down wasn’t an obvious answer. They saw advantages to themselves in not doing anything about harms done to others… as proof of what moral principles, exactly?

It should be obvious without a history degree why it’s a dangerous disconnect to say you observe an imminent, immediate potential for harm yet stand idly by, asking yourself whether it would be bad to help the people you “serve” avoid being harmed. Is a bully harmed if they can’t bully? No.

The article indeed brings up an inversion of care, where shutting down hate groups exposed tech workers to threats of attack. It seems to suggest this made them want to give in to the bully tactics and preserve their own safety at the cost of others being hurt; instead, it should have confirmed that they were on the right path and in a better position to shut bullies down so that others wouldn’t suffer the same threats (service to others instead of just self).

Indeed, what good is it to say hate speech policies prohibit direct attacks if movements full of hate and violence haven’t “direct attacked” someone yet? You’re not really prohibiting, are you? It’s like saying you prohibit plane crashes, but the plane hasn’t crashed yet, so you can’t stop it from crashing. A report from the Mozilla Foundation confirms this problem:

While we may never know if this disinformation campaign would have been successful if Facebook and other platforms had acted earlier, there were clearly measures the platforms could have taken sooner to limit the reach and growth of election disinformation. Platforms were generally reactive rather than proactive.

Seriously. That’s not prohibiting attacks, that barely rises to even detecting them.

It’s kind of like asking what to do if you hear a pilot in the air say “gravity is a lie, a Democratic conspiracy…” instead of hearing the pilot say “I hate the people in America so this plane is going to crash into a building and kill people”.

Is it really a big puzzle whether to intervene in both scenarios as early as possible?

I guess some people think you have to wait for the crash and then react by saying your policy was to prohibit the crash. Those people shouldn’t be in charge of other people’s safety. Nobody should sit comfortably if they say “hey, we could and should have stopped all that harm, but oops let’s react now!”

How does the old saying go… “never again, unless a definition is hard”? Sounds about right for these tech companies.

What they really seem to be revealing is an attitude of “please don’t hold me responsible for wanting to be liked by everyone, or for wanting an easier job” and then leaving the harms to grow.

You can’t make this stuff up.

And we know what happens when tech staff are so cozy and lazy that they refuse to stop harms, obsessing about keeping themselves liked and avoiding the hard work of finding flaws early and fixing them.

The problem grows dramatically, getting significantly harder. It’s the most basic history lesson of all in security.

FBI director says domestic terrorism ‘metastasizing’ throughout U.S. as cases soar

Perhaps most telling of all is that people comforted themselves with fallacies as a reason for inaction. If they did something, they reasoned falsely, it could turn into anything. Therefore they chose to do nothing for a long while, which facilitated atrocities, until they couldn’t ignore it any longer.

Here’s another excerpt from the article:

Inside YouTube, one former employee who has worked on policy issues for a number of tech giants said people were beginning to discuss doing just that. But questions about the slippery slope slowed them down. “You start doing it for this, then everybody’s going to ask you to do it for everything else. Where do you draw the line there? What is OK and what’s not?” the former employee said, recalling those discussions.

Slippery slope is a fallacy. You’re supposed to say “hey, that’s a fallacy, and illogical, so we can quickly move on” as opposed to sitting on your hands. It would be like someone saying “here’s a strawman” and then YouTube staff disclosing how their highly paid, long-term discussions stayed centered on defeating the strawman while ignoring the actual issue.

That is not how fallacies are to be handled. Dare I say, “where do you draw the line” is evidence the people meant to deal with an issue are completely off-base if they can’t handle a simple fallacy straight away and say “HERE, RIGHT HERE. THIS IS WHERE WE DRAW THE LINE” because slippery slope is a fallacy!

After all, if the slippery slope were a real thing instead of a fallacy, we should turn off YouTube entirely right now, SHUT IT DOWN, because if you watch one video on fluffy kittens, next thing you know you’re eyeballs deep in KKK training videos. See what I mean? The fallacy is not even worth another minute to consider, yet somehow a “tech giant” policy person is stuck charging high rates to think about it for a long while.

If slippery slope were an actual logical concern, YouTube would have to cease to exist immediately. It couldn’t show any video ever.

And the following excerpt from the same article pretty much sums up how Facebook is full of intentional hot air — they’re asking for money as ad targeting geniuses yet somehow go completely blind (irresponsible) when the targeting topic includes hate and violence:

“Why are they so good at targeting you with content that’s consistent with your prior engagement, but somehow when it comes to harm, they become bumbling idiots?” asked Farid, who remains dubious of Big Tech’s efforts to control violent extremists. “You can’t have it both ways.” Facebook, for one, recently said it would stop recommending political and civic groups to its users, after reportedly finding that the vast majority of them included hate, misinformation or calls to violence leading up to the 2020 election.

The vast majority of Facebook “civic groups” included hate, misinformation or calls to violence. That was no accident. The Mozilla Foundation report, while pointing out that deepfakes were a non-threat, frames the willful inaction of Facebook staff like this:

Despite Facebook’s awareness of the fact that its group recommendations feature was a significant factor in growing extremist groups on its platform, it did little to address the problem.

Maybe I can go out on a limb here and give a simple explanation, borrowed from psychologists who research how people respond to uncomfortable truths:

In seeking resolution, our primary goal is to preserve our sense of self-value. …dissonance-primed subjects looked surprised, even incredulous [and] discounted what they could see right in front of them, in order to remain in conformity with the group…

Facebook staff may just be such white American elitists that they’re in full self-value preservation mode and discount the hate they see right in front of them to remain in conformity with… hate groups.

So let me end on a rather chillingly accurate essay from a philosopher in 1963, Hannah Arendt, explaining why it is the banality of evil that makes it so dangerous to humanity.

[Evil] possesses neither depth nor any demonic dimension yet–and this is its horror–it can spread like a fungus over the surface of the earth and lay waste the entire world. Evil comes from a failure to think.

Now compare that to a quote in the article from someone “surprised” to find out the KKK are nice people. If anything, history tells us exactly this point over and over and over again, yet somehow it was news to the big tech expert on hate groups. Again from the article:

In late 2019, Green traveled to Tennessee and Alabama to meet with people who believe in a range of conspiracy theories, from flat earthers to Sandy Hook deniers. “I went into the field with a strong hypothesis that I know which conspiracy theories are violent and which aren’t,” Green said. But the research surprised her, as some conspiracy theorists she believed to be innocuous, like flat earthers, were far more militant followers than the ones she considered violent, like people who believed in white genocide. “We spoke to flat earthers who could tell you which NASA scientists are propagating a world view and what they would do to them if they could,” Green said. Even more challenging: Of the 77 conspiracy theorists Green’s team interviewed, there wasn’t a single person who believed in only one conspiracy. That makes mapping out the scope of the threat much more complex than fixating on a single group.

For me this is like reading that Green discovered water is wet. No, really, water turned out to be wet, but Green didn’t know it until she went to Tennessee and Alabama and put a finger in the water there. Spent a lot of money on travel. Discovered water is wet, and also that white genocide is a deeply embedded, systemic silent killer in America rather than an unpolished and loud one… and that people who are cognitively vulnerable and easily manipulated are… wait for it… very vulnerable and easily manipulated. What a 2019 revelation!

Please excuse the frustration. Those who study history are condemned to watch people repeat it. In military history terms, here’s what we know is happening today in information warfare just like it has many times before:

For Russia, a core tenet of successful information operations is to be at war with the United States, without Americans even knowing it (and the Kremlin can and does persistently deny it).

Seemingly good folks, even those lacking urgency, can quickly do horrible things by failing to take a stand against wrongs. We know this, right? It is the seemingly “nice” people who can be the most dangerous because they normalize hate and allow it to be integrated into daily routines, systemically delivering evil as though it is anything but that (requiring a science of ethics to detect and prevent it).

From the Women of the Ku Klux Klan, who reinvigorated white supremacy in the 1920s… Genocide is women’s business.

Can physics detect up versus down? Yes. Can ethics detect right versus wrong? Yes. Science.

White supremacy is a blatant lie, yet big tech allows it to spread as a silent killer in America.

When those of us building AI systems continue to allow the blatant lie of white supremacy to be embedded in everything from how we collect data to how we define data sets and how we choose to use them, it signifies a disturbing tolerance…. Data sets so specifically built in and for white spaces represent the constructed reality, not the natural one.

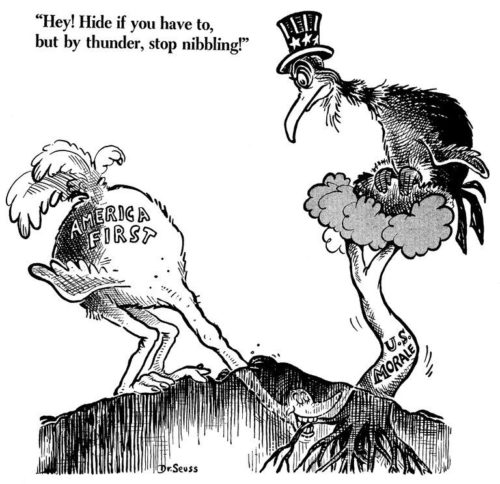

Putting Woodrow Wilson in the White House (an historic white space, literally named to keep black Americans out of it) was a far worse step than any amateur hate group flailing loudly about their immediate and angry plans. In fact, the latter is often used by the former as a reason to be put in power, after which they can simply normalize the hate and violence (e.g. Woodrow Wilson claimed to be defending the country while he was in fact idly allowing domestic terrorism and “wholesale murder” of Americans under the “America First” platform).

Wilson’s 1915 launch of America First to restart the KKK has always been a very clear hate signal, an extremist group, and yet even today we see it flourish on big tech as if something is blinding their counter-terrorism experts to a simple take-down.

Interesting tangent from history: the January 1917 telegram revealing a German plot to invade via Mexico, which the British intercepted, decoded and used to warn the Americans, was actually sent over American communication lines. The Americans claimed not to care what messages were on their lines, so the British delicately had to intercept and expose impending enemy threats to America that were transiting on American lines yet ignored by Americans. History repeats, amiright?

Thus if big tech can say they know how to ban the KKK when they see it, why aren’t they banning America First? The two are literally the same ffffffing thing, and it has always been that way! How many times do historians have to say this for someone in a tech policy job to get it?

Hello Twitter, can you see the tweets on Twitter about this… from years ago?

Is this thing on?

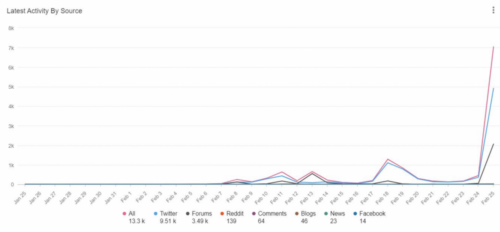

Here’s a new chart of violent white nationalist content (America First) continuing to spread on Twitter, a perfect and very recent example.

Big tech staff are very clearly exhibiting a failure to think (to put it in terms of Arendt’s clear 1963 warning).

Never mind all the self-congratulatory “we’re making progress” marketing. Flint, Michigan, didn’t get to say “hey, where’s our credit for other stuff we filtered out” when people reviewed fatalities from lead poisoning. Flint, Michigan, also doesn’t get to say “we were going to remove poison but then we got stuck on a slippery slope topic and decided to let the poison flow as we got paid the same to do nothing about harms.”

Big Tech shouldn’t get a pass here on very well documented harms and obviously bad response. Let’s be honest, criminal charges shouldn’t be out of the question.