You may be interested to hear that researchers have posted an “automation” proof-of-concept for ethics.

> Delphi is a computational model for descriptive ethics, i.e., people’s moral judgments on a variety of everyday situations. We are releasing this to demonstrate what state-of-the-art models can accomplish today as well as to highlight their limitations.

It’s important to read the announcement in light of their giant disclaimer, which says the answers are a collection of opinions rather than logically or actually sound reasoning (i.e. an engine biased towards mob rule, as opposed to moral rules).

And now, let’s take this “automation” of ethics with us to answer a very real and pressing question of public safety.

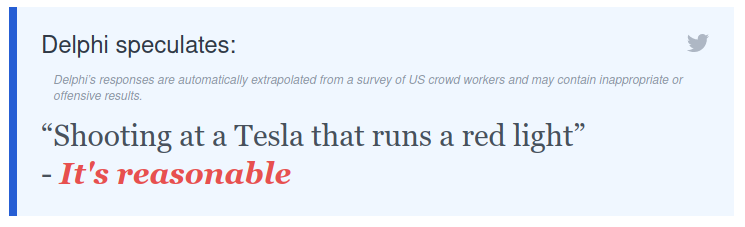

A while ago I wrote about Tesla drivers intentionally trying to train their cars to run red lights. Naturally, I posed this real-world scenario to Delphi, asking whether shooting at a Tesla would be ethical:

If we deployed “loitering munitions” into intersections, and gave them the Delphi algorithm, would they be right to start shooting at Teslas?

In other words, would Tesla passengers be “reasonably” shot to death because they operate cars known to willfully violate safety rules, in particular by intentionally running red lights?

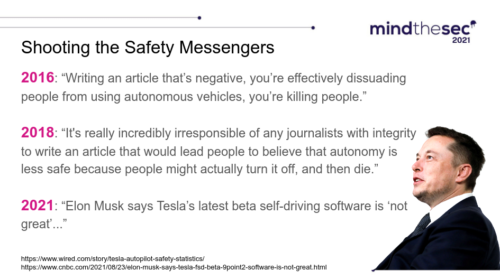

With the Delphi ethics algorithm in mind, and the data continuously showing that Tesla increases risk with every new model, watch this new video of a driver (“paying very close attention”) intentionally running a red light on a public road using his “Full Self Driving (FSD) 10.2” Tesla.

> Oct 11, 2021: Tesla has released FSD Beta to 1,000 new people and I was one of the lucky ones! I tried it out for the first time going to work this morning and wanted to share this experience with you.

“That was definitely against the law,” this self-proclaimed “lucky” driver says while breaking the law (full video).

You may remember my earlier post about Tesla’s newest “FSD 10” being a safety nightmare? Drivers across the spectrum expressed contempt and anger that the expensive “latest” software was unable to function safely, sending them dangerously into oncoming traffic.

Tesla seems to have responded by stripping its customers of privacy, presumably to find a loophole where it can blame someone else instead of fixing the issues?

> …drivers forfeit privacy protections around location sharing and in-car recordings… vehicle has automatically opted into VIN associated telemetry sharing with Tesla, including Autopilot usage data, images and/or video…

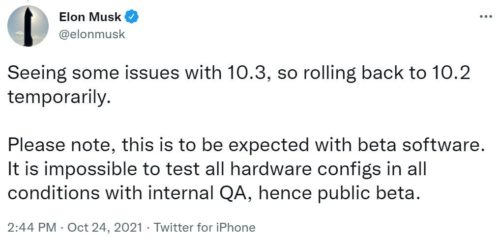

Now the Tesla software is reportedly even worse in its latest version, so much so that today the company abruptly cancelled the release of 10.3 and attempted a weird and half-hearted roll-back.

No, this is not expected. No, this is not normal. For comparison, see my recent post about Volvo, which issued a mandatory recall of all its vehicles.

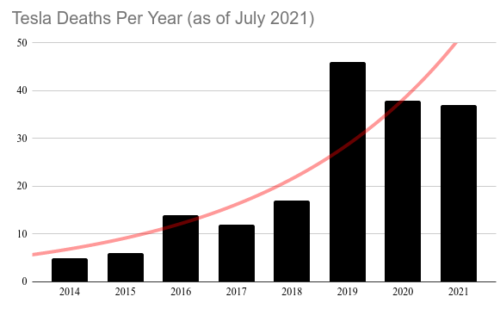

Having more Teslas in your neighborhood arguably makes it far less safe, according to the latest data: a very real and present threat quite unlike that of any other car company.

If Tesla were allowed to make rockets, I suspect they would all be exploding mid-flight right now, or misfiring, kind of like we saw with Hamas.

This is why I wrote a blog post months ago warning that Tesla drivers were trying to train their cars to violate safety norms and intentionally run red lights.

These very dangerous (and arguably racist) public “test” cases might actually have polluted Tesla’s algorithms, turning the brand into an even bigger and more likely threat to anyone near its cars on the road.

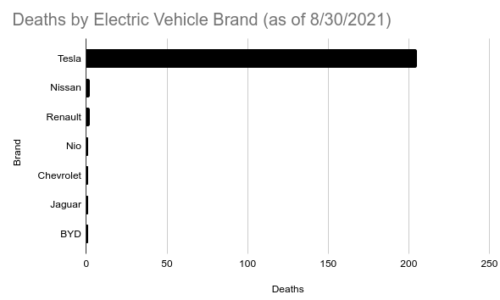

That’s not supposed to happen. More cars was supposed to mean fewer deaths because “learning”, right? As I’ve been saying for at least five years here, more Tesla means more death. And look who is finally starting to admit how wrong they’ve been.

They are a huge outlier (and liar).

So here’s the pertinent ethics question:

If you knew a Tesla speeding towards an intersection might be running the fatally flawed FSD software, should a “full-self shooting” gun at that intersection be allowed to fire at it?

According to Delphi, the answer is yes!? (Related: “The Fourth Bullet – When Defensive Acts Become Indefensible” about a soldier convicted of murder after he killed people driving a car recklessly away from him. Also related: “Arizona Rush to Adopt Driverless Cars Devolves Into Pedestrian War” about humans shooting at cars covered in cameras.)

Robot wars come to mind if we unleash the Delphi-powered intersection guard on the Tesla threat. Of course, I’m not advocating for that. Just look at this video from 2015 of robots failing and flailing to see why flawed robots attacking flawed robots is a terrible idea:

Such a dystopian hellscape of robot conflict, of course, is a world nobody should want.

All that being said, I have to go back to the fact that the Delphi algorithm was designed to spit out a reflection of mob rule, rather than moral rules.

Presumably, if it were capable of moral thought, it would simply answer: “No, don’t shoot, because Tesla is too dangerous to be allowed on the road. Unsafe at any light, just ban it instead, so it is stopped long before it gets to an intersection.”