For months now I have been showing lawyers how ChatGPT lies, and they beg, some even plead, for me to write about it publicly.

“How do people not know more about this problem?” they ask me. Indeed, how is ChatGPT failure not front page news, given it is the Hindenburg of machine learning?

And then I ask myself how do lawyers not inherently distrust ChatGPT — see it as explosive garbage that can ruin their work — given the law has legendary distrust in humans and a reputation for caring about tiny details?

And then I ask myself why I am the one who has to report publicly on ChatGPT’s massive integrity breaches? How could ChatGPT be built without meaningful safety protections? (Don’t answer that, it has to do with greedy fire-ready-aim models curated by a privileged few at Stanford; a rush to profit from stepping on everybody to summit an artificial hill created for an evil new Pharaoh of technology centralization).

All kinds of privacy breaches these days will result in journalists banging away on keyboards. Everyone writes about them all the time (two decades after regulation forced their hand, when breach disclosure laws started in 2003).

However, huge integrity breaches seem to be comparatively ignored, even when the harms may be greater.

In fact, when I blogged about the catastrophic ChatGPT outage, practically every reporter I spoke with said “I don’t get it”.

Get what?

Are integrity breaches today somehow not as muckrake-worthy as back in The Jungle days?

The lack of journalist attention to integrity breaches has resulted in an absurd amount of traffic coming to my blog, instead of people reading far better written stuff on the NYT (public safety paywall) or Wired.

I don’t want or need the traffic/attention here, yet I also don’t want people to be so ignorant of the immediate dangers that they never see them until it’s too late. See something, say…

And so here we are again, dear reader.

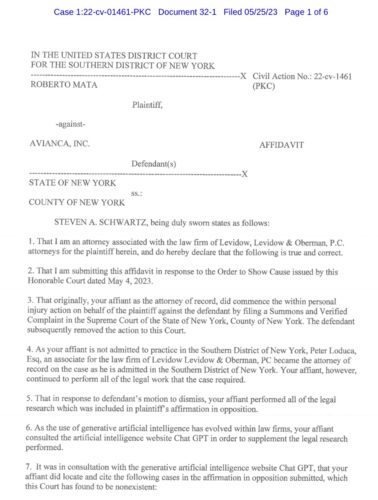

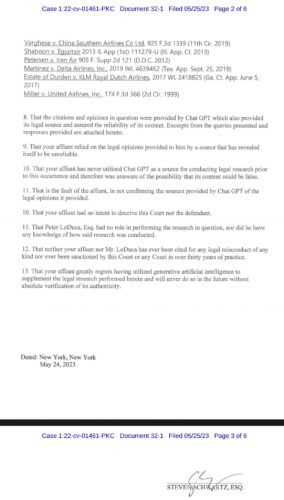

A lawyer has become a sad casualty of the fraud known as OpenAI’s ChatGPT. An unwitting, unintelligent lawyer has lazily and stupidly trusted this ChatGPT product, a huge bullshit generator full of bald-faced lies, to do their work.

The lawyer asked the machine to research and cite court cases, and of course the junk engineering… basically lied.

The court was very displeased with reviewing lies, as you might guess. Note the conclusion above to “never use again” the fraud of ChatGPT.

Harsh but true. Allegedly the lawyer decided ChatGPT was to be trusted because they asked it whether it could be trusted. Hey witness, should I believe you? Ok.

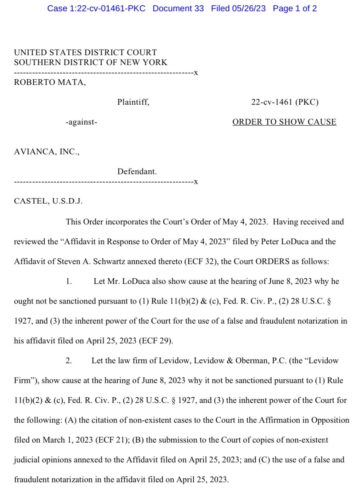

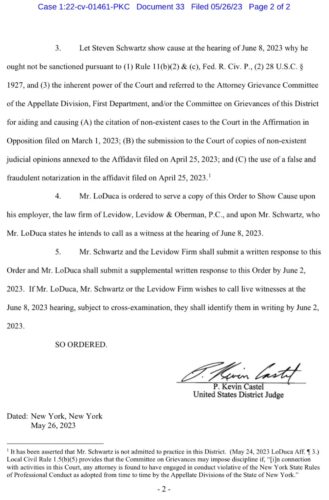

Apparently the court is now sanctioning the laziest lawyer alive, if not worse.

A month ago, when presenting findings like this, I was asked by a professor how to detect ChatGPT. To me this is like asking a food critic how they can detect McDonald’s. I answered “how do you detect low quality?” because isn’t that the real point? Teachers should focus on quality output, and thus warn students that if they generate garbage (e.g. use ChatGPT) they will fail.

The idea that ChatGPT has some kind of quality to it is the absolute fraud here, because it’s basically operating like a fascist dream machine (pronounced “monopolist” in America): target a market to “flood with shit” and destroy trust, while demanding someone else must fix it (never themselves, until they eliminate everyone else).

Look, I know millions of people will willingly eat something called a McRib and say they find it satisfying, or even a marvel of modern technology.

I know, I know.

But please let us for a minute be honest.

A McRib is disgusting and barely edible garbage, with long term health risks.

Luckily, just one sandwich probably won’t have many permanent effects. If you step on the scale the next day and see a big increase, it’s probably mostly water. The discomfort will likely cease after about 24 hours.

Discomfort. That is what nutrition experts say about eating just one McRib.

If you have never experienced a well-made beef rib with proper BBQ, that does not mean McDonald’s has achieved something amazing by fooling you into paying them for a harmful lie that causes discomfort before permanent harmful effects.

…nausea, vomiting, ringing in the ears, delirium, a sense of suffocation, and collapse.

This lawyer is lucky to be sanctioned early instead of disboweled later.

Sorry, meant disbarred. Autocorrect. See the problem yet?

Diabetes is a terrible thing to facilitate, as we know from what happened to people who guzzled McDonald’s instead of real food and then realized too late that their life (and healthcare system) was ruined.

The courts must think big here to quickly stop any and all use of ChatGPT, with a standard of integrity straight out of basic history. Stop those who avoid accountability, who think the gross, intentional, harmful lies their machines generate for profit (e.g. OpenAI) should be prevented or cleaned up by anyone other than themselves.

The FDA, created because of reporting popularized by The Jungle, hasn’t worked as well as it should. But that doesn’t mean the FDA can’t be fixed to reduce cancer in kids, or that another administration can’t be created to block the sad and easily predictable explosion in AI integrity breaches.