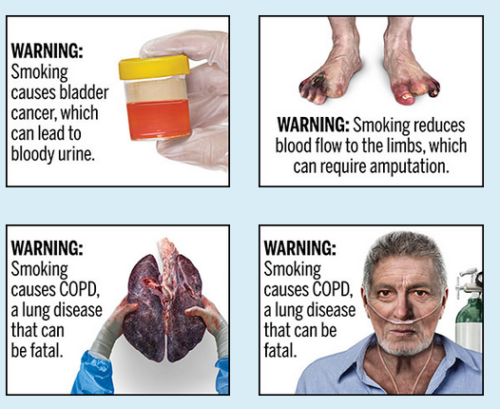

Cigarettes famously were regulated to have very stern warnings on them to counter the disinformation of their manufacturers. Here’s just a sample from the FDA of the kind of messaging I’m talking about:

That’s the right way to regulate disinformation because it’s a harms-based approach. If you follow the wrong path, you suffer a lot and then die. Choose wisely.

It’s like saying if you point a gun at your head and pull the trigger it will seriously hurt you and very likely kill you. Suicide is immoral. Likewise, when someone refuses the COVID19 vaccine they are putting themselves, as well as those around them (as with smoking), at great risk of injury and death.

However, I still see regulators doing the wrong thing and trying to create a sense of “authenticity” in messaging instead of focusing on speech in context of harm.

Take the government of Singapore, for example, which has this to say:

The Singapore-based website Truth Warriors falsely claims that coronavirus vaccines are not safe or effective — and now it will have to carry a correction on the top of each page alerting readers to the falsehoods it propagates.

Under Singapore’s “fake news” law — formally called the Protection from Online Falsehoods and Manipulation Act — the website must carry a notice to readers that it contains “false statement of fact,” the Health Ministry said Sunday. A criminal investigation is also underway.

Calling something “false statement of fact” doesn’t change the fact that falsehoods are NOT inherently bad. Even worse, over-emphasis on forced authenticity can itself be harmful (denying someone privacy, for example, by demanding they reveal a secret).

Thus this style of poorly constructed “authenticity” regulation could be a mistake for a number of important safety reasons, not least because it can seriously backfire.

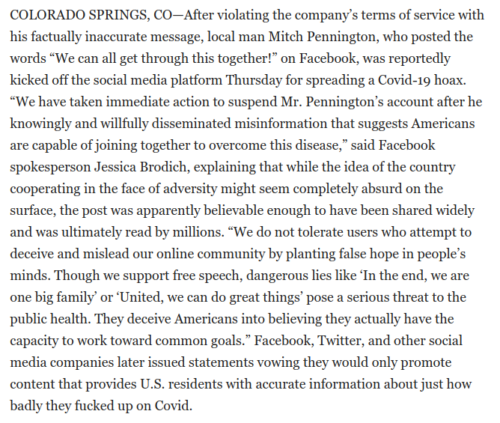

It would be like the government requiring The Onion or the Duffelblog to have a splash page announcing fake information (let alone the comedy industry as a whole).

Just take a quick look at how The Onion is reporting COVID19 lately:

See what I did there? Calling out something false in fact could drive more people to read it (popularizing things by unintentionally creating a nudge/seduction towards salacious “contraband”).

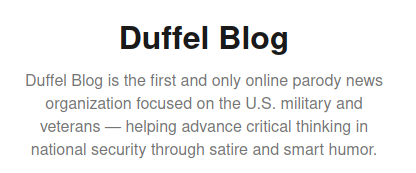

Indeed, it seems the Duffelblog already has created just such a warning voluntarily because it probably found too many people unwittingly acting upon it as truthful.

And that raises two questions: does the warning actually increase readership, and has the warning reduced any harm (i.e. was there any harm to begin with)?

Maybe this warning was regulated… it reminds me of earlier this year when GOP members of Congress (Rep. Pat Fallon, R-Texas) exposed themselves to the military as completely unable to tell facts from fiction.

I have a feeling someone in Texas government demanded Duffelblog do something to prevent Texans from entering the site, instead of educating Texans properly on how to tell fact from fiction (e.g. regulating harms through accountability for them).

Putting a gun to your head (refusing the vaccine) is a suicidal move (immoral), and accountability for encouraging suicide is illustrated best through regulation that clearly documents harms.